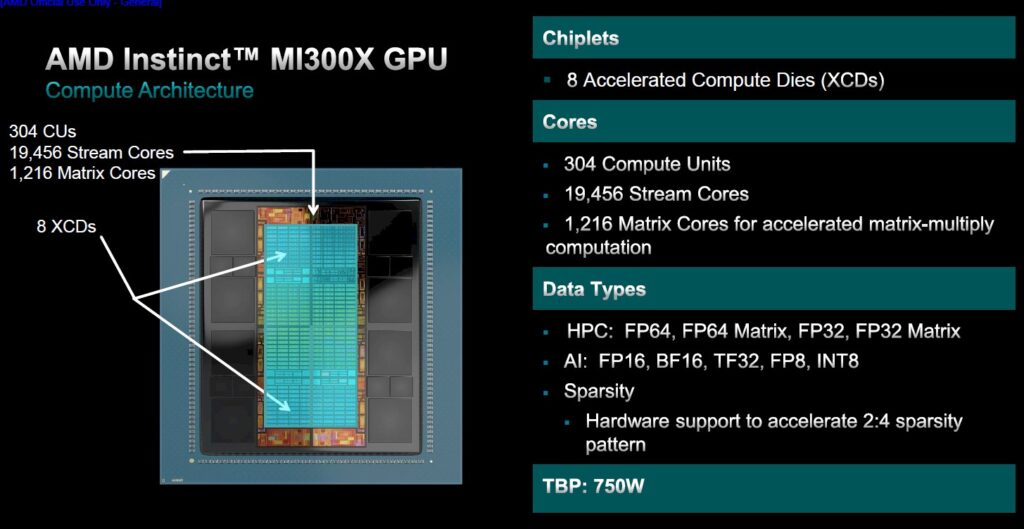

As demand for advanced AI workloads and GPU-accelerated computing surges across industries, and as NVIDIA-based accelerators remain in short supply, AMD’s MI300 accelerator is rapidly gaining traction in the cloud computing ecosystem.

The MI300’s advanced architecture, featuring high memory capacity, low power consumption, and solid performance, is finding a home among cloud providers. While Microsoft Azure previously announced MI300-based instances, specialty GPU cloud provider Vultr and mainstream CSP Oracle Cloud Infrastructure have now announced their own integrations of AMD’s MI300 accelerator.

Vultr’s Composable Cloud Infrastructure Leverages AMD MI300X

Vultr, known for its high-performance, cost-effective GPU cloud infrastructure, has embraced the AMD Instinct MI300X as an alternative AI accelerator in its composable cloud environment.

In the press release announcing its MI300 adoption, Vultr CEO J.J. Kardwell highlighted that the open ecosystem created by AMD’s architecture allows customers to access thousands of pre-trained AI models and frameworks seamlessly. He also pointed out that the MI300X’s balance of high memory capacity and low power consumption furthers Vultr’s commitment to sustainability, giving its customers an eco-friendly solution for running large-scale AI operations.

Oracle Cloud Infrastructure Supercharges AI with AMD MI300X

Oracle Cloud Infrastructure (OCI) also recently joined the ranks of cloud providers leveraging AMD MI300X accelerators to meet the growing demand for AI-intensive workloads. OCI’s new Compute Supercluster instance, BM.GPU.MI300X.8, supports up to 16,384 GPUs in a single cluster. This allows customers to train and deploy AI models that comprise hundreds of billions of parameters.

Accelerating into the Future

The adoption of AMD MI300 accelerators by Microsoft Azure, Vultr, and Oracle Cloud, along with announced offerings from Lenovo, Dell, and Supermicro, highlights the growing momentum behind AMD’s next-generation silicon technology and provides a solid basis for AMD’s 2024 revenue projections for the part.

The demand for efficient, scalable, and high-performance computing infrastructure will only rise as AI workloads move into the enterprise. This is where NVIDIA is most exposed, as it doesn’t have the software lock-in for inference that it enjoys in the AI training world.

The market likes choice, and the AMD MI300 products are well-positioned to meet the need, offering cloud providers and enterprises the tools to innovate, scale, and drive their AI strategies forward. It’s also notable that while AMD continues to gain cloud and OEM customers, Intel, with its recently released Gaudi 3 accelerator, struggles to find traction. The market likes choice, but it can be finicky about what that choice is.

With leading cloud providers on board and nearly every OEM shipping product, the future of AMD’s MI300 series looks bright.