At the launch of VAST Data’s new VAST Data Platform, principal analyst Steve McDowell had a chance to talk to VAST’s CMO and co-founder Jeff Denworth about what was announced.

It’s a great conversation that will help you understand the importance of the VAST Data Platform, as well as the philosophy and vision behind VAST’s offerings.

This is a good one. Watch it above, or read through the transcript below (lightly edited for clarity).

Steve McDowell: Welcome Jeff Denworth, CMO and Co-founder of VAST Data, and Jeff, you’ve been teasing us for a while. I talked to you back in, I think, April at HPE’s event where they announced that VAST was powering their new storage offering. You teased a little bit about some big announcements coming midyear. Well, here we are. This week you made a big one. Before you tell me what you announced though, I want to ask you what’s changed in the market that’s kind of pushed you in the direction you’re going, right?

Give us some insight into your thinking about why VAST is evolving beyond storage, and then you can talk about what you announced with the new VAST Data Platform.

Jeff Denworth: We’re at a point of stasis, but the big thing is when we started the company seven years ago, we had an idea for a product that had data at the center, and we’ve been progressively working to that idea. And today we’re announcing a concept that we call the VAST Data Platform that’s something that we’ve been working on and planning towards for almost a decade now. If we had announced everything when we started, people would’ve thought we were kind of crazy people, and we’re also big believers in incrementalism where you have a long range view of what you’re doing, but you kind of build towards there as opposed to trying to build Rome in a day as it were. So we now have so much stuff accumulated in terms of new IP and new ideas that we wanted to get out into the market. We felt it didn’t make sense to announce things in a series of different incremental announcements, but rather show people the entirety of our vision.

Steve McDowell: Great. Wait, you’ve been laying down kind of the stepping stones, right? You started with the data … Well, let’s start with the name. You’re VAST Data, not VAST Storage, and I think some people don’t always get that distinction.

But you started with VAST Data Store and then earlier this year you talked about VAST Database and now you’re bringing in, is it VAST Data Spaces to kind of round out where we are now? Right? So what’s being announced?

Jeff Denworth: Okay, so it’s a lot of stuff. So when we launched the company in 2019, we introduced a concept that we thought was a significant simplification of how people stored and accessed their data. At the time we called that the Universal Storage Platform.

Steve McDowell: Right.

Jeff Denworth: And essentially if you can consolidate all the tiers that you have in your data center to one single scalable and affordable tier of flash, the thesis was you can go process on that to your heart’s content, right? And that to us was always integral to building this idea of a thinking machine that we’re working towards. Now in February of this year, we quietly announced that this unstructured data store that we were shipping now had support for data catalogs. All your files and all your objects could be cataloged within the system. And we were really happy that nobody asked us what database we were using for this, because we weren’t ready to make the full announcement. But I was also a little bit unhappy that people didn’t ask us, because they didn’t think that there was space for invention there.

Steve McDowell: Right.

Jeff Denworth: But today we’re announcing a new concept that we call the VAST Database. And this isn’t an independent system, it’s all just an evolution of our data structures, but it’s a system that brings those same levels of simplification that we started with in the early days all the way forward to database management systems. And so imagine a system which is transactional like a database, analytical like a data warehouse, and as scalable and as affordable as a hard-drive-based data lake. So that’s our next big order of simplification, but when I bring that together with the data store, which is your unstructured data system, now you have a third order of simplification where you kind of bring together all of the structured and unstructured data assets that you have into a single system that can be self-cataloging.

And so by building something and scaling to exabytes, of course you need transactional infrastructure to keep pace with all the modern applications that may be sending data down to a data store. But if you actually want to go use that data, well then you have to be able to have something that’s analytical. And so up until now, you would need to pair our systems with either a database or an event queuing system like Kafka, along with a data lake, so that you could actually go and process all that metadata. Now it’s all just one unified system that’s completely synthesized. So that’s thing one, right? And that in and of itself puts the entire database industry on notice, but that’s just part of it, right?

So now once you’ve got this system that marries both context and content, both of which we think you have when you’re dealing with modern AI infrastructure, right? We work a lot with Nvidia on technologies like their SuperPOD systems, and I can tell you that there’s not a lot of business reporting tools that get brought into those environments. It’s more for new types of data that come from the natural world, like files that may be imagery or text or video or things like that. And so these machines are ultimately designed to go and refine that unstructured data into structured data using inference and training applications. But what we realized is that that doesn’t only happen in one data center; this data that we’re dealing with is so much larger than a classical data platform. And so for this reason, we built a stretch namespace that can expand across the world. And that’s the second thing that we’re announcing today, called the VAST Data Space, that you just referred to.

Steve McDowell: So let me ask this question, Jeff, pause right there. So when you talk about the data being stretched across the world, when I think about VAST, I think about high performance, kind of on-prem data wrangling. How are you delivering the solution? Is this cloud plus on-prem? Is it on-prem only? How are you thinking about that? Because my data [inaudible 00:06:20].

Jeff Denworth: Today it is everywhere, and as the data gets richer, which typically follows the unstructured data market, it has more gravity. And so we wanted to build a system that could first address that topic by providing a global and consistent namespace that went all the way around the world, including into different public cloud platforms. So now you can deploy our systems in the public cloud, like AWS, Google, and Azure. You can deploy it in your on-premises data center on infrastructure that comes from a bunch of different hardware platform providers that we work with, including HPE, which is where I saw you last. And we even have rugged systems that can deploy our infrastructure out to the edges. So we work with a company called Mercury Computer that makes ruggedized equipment for mobile infrastructure.

And the interesting thing here is that what we realized is that if you can rethink consistency management and lock management on a global scale, you can actually build a mechanism that can move data to where it needs to go as opposed to moving processing to the data, which is kind of the legacy convention, which is like oh, data has so much gravity. But if you can manage data with fine granularity and pipeline it and write to different parts of the world, and send lock management out to the edges of the network, you can do really interesting things. You can build a global data space that has high performance all the way to the edge. And so it’s like a transactional CDN, if you will, I think is a way to think about that.

Steve McDowell: I like that you’re inventing a new category. So one of the things that struck me, what I like about what you’re doing is you’re taking a lot of … You’re simplifying the experience to some degree, right? When you briefed the analysts, you put a slide up that I really liked and it had, I don’t know how many dozens of little blocks that kind of said this is your analytics stack, and you’re taking a lot of that, munging it together, so you’re simplifying it, you’re taking a lot of complexity out. I assume that’s going to remove some latency as well. Go ahead.

Jeff Denworth: This is true. We always think about how do you consolidate the problems, right? So that data space basically consolidates all the silos of infrastructure that you’ve got and the APIs that you have to deal with across the cloud into one abstraction, so that you can just get to your data from anywhere. But the last thing that we announced brings us very, very core to how we think about analytics, and more importantly, deep learning. As people go to build these different services in their environment, what we realized is that the thing that needs to refine data from unstructured data into structured data is a compute engine. And so now you can deploy … Well, in 2024 is when it’ll be available. You’ll be able to deploy containers that basically are part of our system that allow you to store logic and functions in the system.

And as we think about the fullness of this architecture, what you have is a system that embodies … The definition of a data platform, as companies like Snowflake or Databricks have kind of popularized the term, is where you have the data layer, you have the storage layer, and you have the compute layer all integrated into one. But this is for a new style of processing that you would find in these kind of modern foundational model training environments or domain specific model training environments that are popular with deep learning right now. And we kind of looked at it, to your point about all these different cloud components that you have to put together for a pipeline, and we said well, the reference architecture for a self-driving car is 50 different products that you have to stitch together within a public cloud platform, and that feels really complicated. A lot of those technologies that are being prescribed weren’t even born in the era of AI. VAST was founded ten days after OpenAI actually.

Steve McDowell: Really? Okay.

Jeff Denworth: Yeah. And so we kind of thought okay, well, how can you build infrastructure that can power machines of discovery in the future? And so we’ve been slowly putting everything together, but not as a collection of different components, all one unified system that just makes it easier to compute.

Steve McDowell: Okay. So who is this for? Who are you targeting this product at?

Jeff Denworth: Well, that’s an interesting one. If you throw a tennis ball down a hall of a modern organization, you’ll hit at least one person that considers themselves a deep learning expert. My favorite job description is hiring a deep learning expert, needs 10 years of experience or something like that. Now the way that our business works is we’re very much of the mind that we want to build enterprise infrastructure as opposed to building exotic AI infrastructure. And the thinking is it’s much better to bring AI to your data than your data to these new AI systems. So if you can just build something that solves the problems of a modern organization, whether that’s just their data storage, their database storage, or their archive and backup systems, and then start to bring functions and things like that to the system, well, then it’s just a nice way for customers to kind of graduate into this new style of computing.

And so in truth, we’re selling very much to the core audience that we sell to today, which is your classic storage buyer. We’re graduating to also sell to different data science teams and chief data officers thanks to the database that we’ve built. And then as the engine kicks in next year, we’ll start selling more and more to deep learning developers and pipeline engineers that want to work on our system and bring life to their data. But it’s very progressive, and the thinking is if you can solve the problems for some of the largest AI training environments in the world, well, you can also scale that down to meet the needs of most modern organizations, whether they’re enterprises or service providers.

Steve McDowell: Okay. So with VAST Data Platform, you’re taking on a big segment of the stack, but not the full stack. Does that change what your partnership landscape looks like, who you’re going to market with, who you’re integrating with?

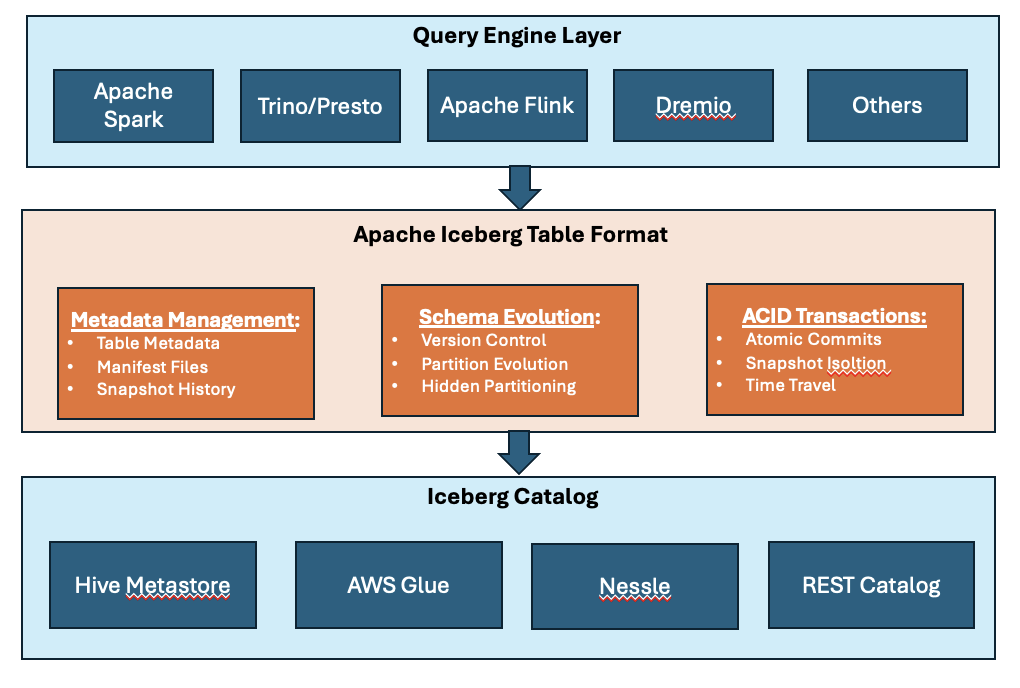

Jeff Denworth: I think very much so. For example, with respect to just the database, now we have connectors for environments that run with query engines that would typically look for something like an S3 style data lake. So we’ve got connectors for Spark environments, we’ve got connectors for Trino environments, and other modern query engines. And basically think of the system that we built as an accelerator for customers that don’t want to write to our version of SQL.

In addition to that, as time goes on, actually, one of the key partners that we’re working with is Nvidia, so they actually attended the event that we just hosted. And the way that we think about Nvidia is they’re building the infrastructure of this modern form of computing, and we’re putting the glue on top of that, which kind of stitches data onto it and makes it easy to process across all the different sites where a customer may have their AI machines, whether that’s on premises or in the cloud.

Steve McDowell: So …

Jeff Denworth: Yeah, so … Oh, go ahead.

Steve McDowell: It’s the latency, right? So I’m a VAST customer and I want to take advantage of all of these new capabilities. You going to charge me an arm and a leg? I mean, what’s …?

Jeff Denworth: Two arms, two legs, the whole shebang. No, no, no. So actually there’s no additional cost for the database or the data space in terms of what customers buy today. So they buy just a software license that covers the capacity that they want to manage, and we’re very much advocates of this idea that you don’t load up quotes with a bunch of SKUs that customers then need to go over with a fine-tooth comb. And so as we add compute, we’ll add another SKU, a second SKU, which gives me a fair bit of heartburn, but I don’t know how to solve the problem otherwise, where you’ll also … You’ll subscribe to compute units and software, basically core hours, and between the two, that’s all you need to buy.

Steve McDowell: That’s excellent. That’s excellent. So all batteries included whether I want to use the features or not. That’s good.

Jeff Denworth: Yes.

Steve McDowell: So I’ll give you the last one.

Jeff Denworth: Batteries are included.

Steve McDowell: What did I not ask you that you wished I would’ve, if anything?

Jeff Denworth: Well, you didn’t ask us what we’re going to do today, and it’s the same thing we do every day, which is try to take over the world. No…

Steve McDowell: Of course!

Jeff Denworth: Yeah, so it’s an extremely ambitious product that we’re building, one that can’t be built in a day, so we’ve been working at it for seven years. We’ve got one of the largest data infrastructure engineering teams in play now, and I think the question is why. Why are we doing this? And as we kind of look at what’s happening in the marketplace, all the buzz is, of course, around things like large language models, everybody’s talking. You can’t throw a tennis ball across Sand Hill Road without hitting an investor that wants to talk about ChatGPT and what it’s going to do to every business.

But there’s a guy named Yann LeCun who works at Facebook, he’s their Chief AI Scientist. And when all these LLMs really started to become popular, you found him commenting more about how these algorithms are relatively primitive versus humans’ ability to create understanding. It takes about 20 hours to teach a human to drive, for example, 20 hours is all it takes, and yet, we still don’t have a self-driving car. There’s no understanding of math within ChatGPT. There’s no understanding of physics and the reasons behind why the world lives like it does. But we see something that is on the horizon, and we don’t know when it’s going to hit, but we see an opportunity to build the infrastructure for machines that can create their own discoveries after they’ve been exposed to and understand enough of the natural world.

And so we want to lay the foundation for the next 10 to 20 years of computing aiming towards this vision, and the hope is that really amazing things happen like these machines discover new paths to clean energy or new approaches to curing cancer and things like this. And if you have a system that is global, can stitch together trillions of cores, and can see a very, very large corpus of data, and you put data at the center of the system by adding functions and triggers to it such that the system is learning in real time as we learn as humans, then interesting things can happen.

Steve McDowell: Excellent. And so I think what I heard you say in there somewhere was when you started VAST Data five plus years ago, this is where you wanted to head. You’d known the entire time this was your trajectory?

Jeff Denworth: Yes. The last slide of our pitch deck was around can we build a thinking machine? And at the time, there were only a very small number of organizations that were actually pioneering this kind of modern AI that we’re all experiencing today. So you had Facebook, you had Google, you had Uber, Baidu, and maybe just a few others, and then a bunch of stuff happening in the university space, and it was clear that something new was happening and machines were just starting to think.

Steve McDowell: Yeah.

Jeff Denworth: And now it’s kind of a foregone conclusion in 2023, but at the time it was really nascent and AI could only do things like find cats in photos, but we’ve come a long way.

Steve McDowell: We sure have. I think that’s a good place to stop. It’s an exciting week. Congratulations on the product launch.

Jeff Denworth: Thank you.

Steve McDowell: And we’ll talk again.