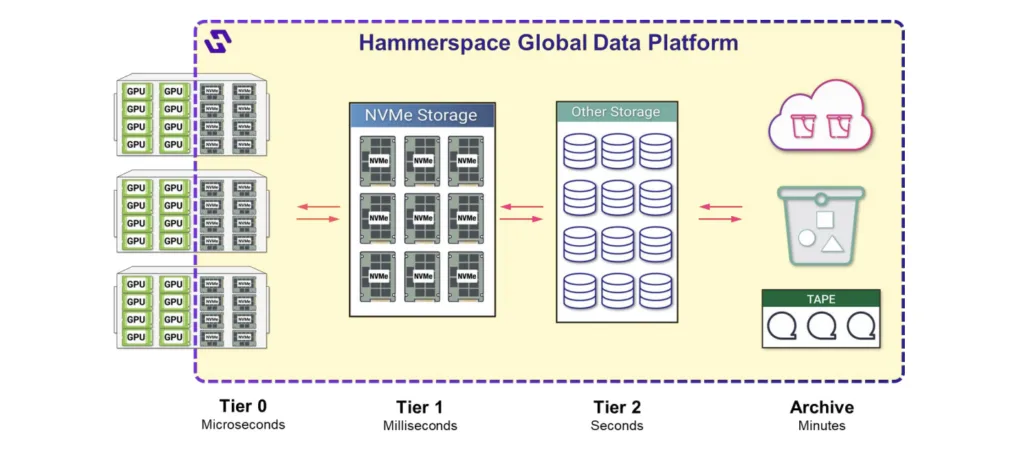

Hammerspace recently announced version 5.1 of its Hammerspace Global Data Platform. The flagship feature of the release is its new Tier 0 storage capability, which takes unused local NVMe storage on a GPU server and makes it part of the global shared filesystem. This gives the GPU server higher-performance storage than remote storage nodes can deliver, making it well suited to AI and other GPU-centric workloads.

In addition to Tier 0, updates in Hammerspace v5.1 include client-side S3 protocol support, improved performance and resiliency, an enhanced GUI, and expanded deployment options, including high availability in Google Cloud.

Global Data Platform v5.1 Features

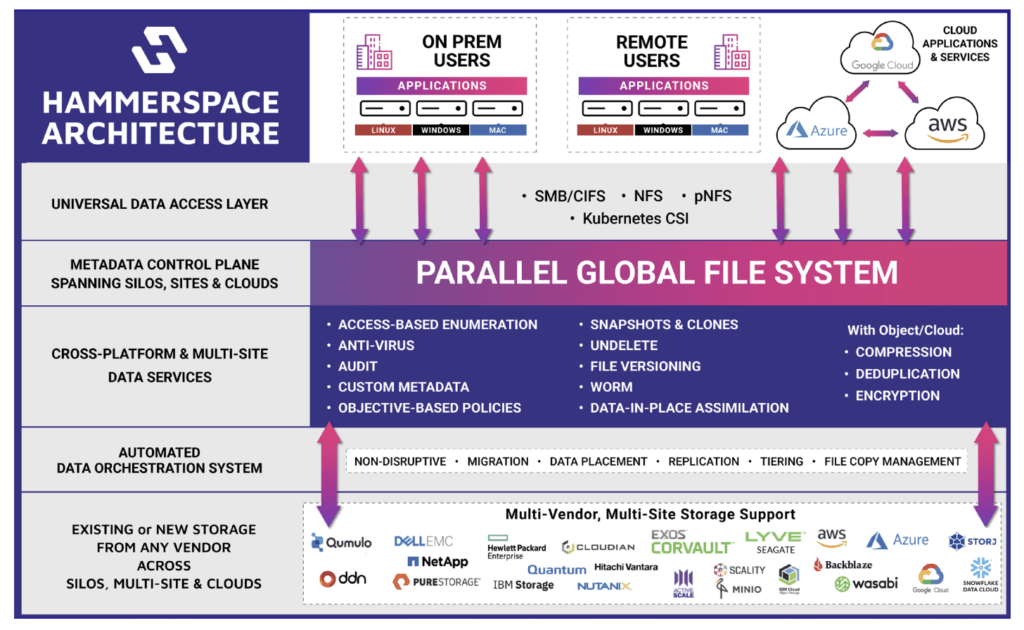

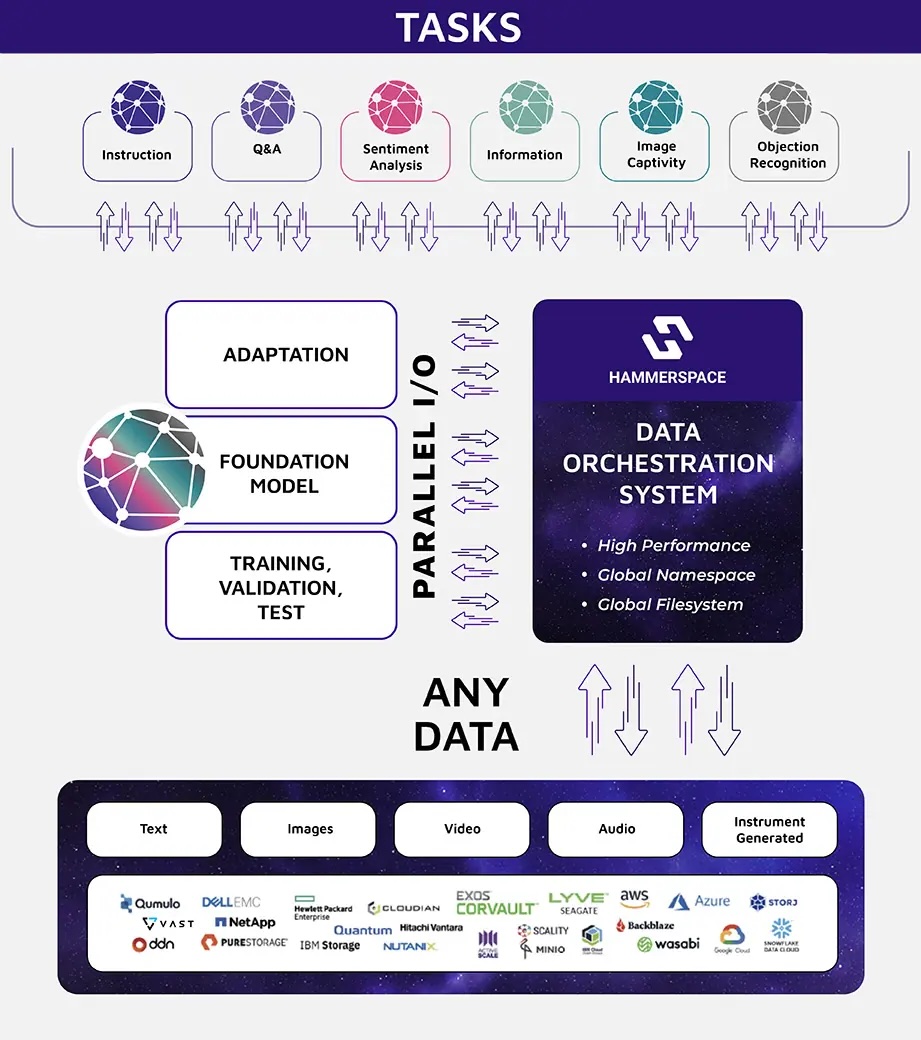

Hammerspace’s Global Data Platform version 5.1 introduces several enhancements aimed at improving performance, connectivity, and user experience:

- Native Client-Side S3 Protocol Support: This feature unifies permissions and namespaces across the NFS, SMB, and S3 protocols, so the same data can be accessed regardless of which protocol a client uses. Any Hammerspace share or directory can be exposed as an S3 bucket, giving direct access to the same files or objects through standard protocols without copies or proxies.

- Platform Performance Improvements: The update delivers what Hammerspace describes as a twofold increase in metadata performance and a fivefold enhancement in data mobility, data-in-place assimilation, and cloud-bursting capabilities. These improvements support high-volume, high-performance workloads across data centers and cloud environments.

- Enhanced Resiliency Features: Version 5.1 introduces three-way high availability (HA) for Hammerspace metadata servers to improve system resilience. Automated failover has also been improved across multiple clusters and sites, helping ensure continuous operation and data availability.

- User Interface Enhancements: The GUI has been updated with customizable, tile-based dashboards, allowing users to tailor their views and access richer controls for better observability and management of data activities within shares.

- Additional Enhancements:

  - Client-Side SMB Protocol Enhancements: Improvements to the SMB protocol enhance connectivity and performance for client-side operations.

  - High Availability in Google Cloud Platform: The platform now supports high availability deployments within the Google Cloud environment, ensuring robust cloud operations.

Tier 0 for AI & Other GPU-Centric Workloads

The flagship feature of the new release is its Tier 0 capability, which leverages the Hammerspace Global Data Platform to incorporate local NVMe storage into a unified, parallel global file system.

Tier 0 allows seamless integration across multiple storage tiers (Tier 0, Tier 1, Tier 2), automating data placement and orchestration based on workload requirements.

- Data Delivery: Data is fed directly to GPUs at local NVMe speeds, avoiding the latency of traversing the network to external storage.

- Redundancy and Reliability: Local NVMe is augmented with shared access and reliability features, matching the redundancy of external storage.
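The announcement does not describe how Tier 0 decides what data lands where, so the sketch below is purely illustrative: a hypothetical, age-based placement policy showing what "automating data placement across Tier 0, Tier 1, and Tier 2" can mean in practice. The thresholds, the `in_active_job` flag, and the tier meanings (we assume Tier 1 is shared external flash and Tier 2 is capacity or archival storage) are our own assumptions, not Hammerspace's policy engine or API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Hypothetical thresholds, chosen only for illustration.
HOT_WINDOW_S = 60 * 60             # touched in the last hour -> Tier 0 (GPU-local NVMe)
WARM_WINDOW_S = 7 * 24 * 60 * 60   # touched in the last week -> Tier 1 (assumed shared flash)


@dataclass
class FileInfo:
    path: str
    last_access: float           # seconds since the epoch
    in_active_job: bool = False  # hypothetical flag: a running GPU job needs this file


def choose_tier(f: FileInfo, now: float) -> int:
    """Pick a target tier: 0 = GPU-local NVMe, 1 = shared flash, 2 = capacity/archive (all assumed)."""
    if f.in_active_job:
        return 0  # keep data for running GPU jobs on local NVMe
    age = now - f.last_access
    if age <= HOT_WINDOW_S:
        return 0
    if age <= WARM_WINDOW_S:
        return 1
    return 2


def plan_moves(
    files: List[FileInfo], current: Dict[str, int], now: float
) -> List[Tuple[str, Optional[int], int]]:
    """Return (path, from_tier, to_tier) for every file whose placement should change."""
    moves = []
    for f in files:
        target = choose_tier(f, now)
        if current.get(f.path) != target:
            moves.append((f.path, current.get(f.path), target))
    return moves
```

A file with no recorded tier (`None`) is treated as needing placement. A real orchestrator would also weigh capacity on each GPU server and the cost of moving data, which this toy policy ignores.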

Performance Enhancements

According to Hammerspace, its new Tier 0 functionality delivers significant performance improvements relative to remote storage nodes:

- Checkpointing Acceleration: Up to 10x faster than traditional external storage, reducing GPU idle time and increasing utilization.

- Data Tiering: Automates data movement between active and archival tiers, ensuring optimal resource use.

When Hammerspace announced the capability, the company released a whitepaper detailing its performance impact on AI training checkpointing. Checkpointing is a critical part of the AI training cycle: it periodically saves a training job's state (such as model weights and optimizer state) to persistent storage so the job can recover from a failure without restarting from scratch.

Hammerspace did not disclose how the performance compares to RDMA or NVIDIA GPUDirect solutions (which Hammerspace also supports).
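The checkpoint-and-resume cycle described above can be sketched in a few lines. This is a generic, minimal illustration, not Hammerspace's or any training framework's implementation: `CHECKPOINT_PATH` is a hypothetical location (on a Tier 0 deployment it might be a directory on the shared filesystem backed by GPU-local NVMe), and the "training step" is a stand-in. Each checkpoint is written to a temporary file, flushed to disk, and atomically renamed into place so a crash never leaves a half-written checkpoint.

```python
import json
import os
import tempfile
from typing import Optional

# Hypothetical location; here it is simply a file in the system temp directory.
CHECKPOINT_PATH = os.path.join(tempfile.gettempdir(), "demo_checkpoint.json")


def save_checkpoint(state: dict, path: str = CHECKPOINT_PATH) -> None:
    """Persist state atomically: write a temp file, fsync it, then rename it into place."""
    directory = os.path.dirname(path) or "."
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # push the bytes to persistent storage
        os.replace(tmp_path, path)  # atomic rename: readers see the old or new checkpoint, never a partial one
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path: str = CHECKPOINT_PATH) -> Optional[dict]:
    """Return the last saved state, or None if no checkpoint exists yet."""
    if not os.path.exists(path):
        return None
    with open(path) as f:
        return json.load(f)


def train(total_steps: int, checkpoint_every: int, path: str = CHECKPOINT_PATH) -> dict:
    """Toy training loop that resumes from the most recent checkpoint, if any."""
    state = load_checkpoint(path) or {"step": 0, "loss": 1.0}
    while state["step"] < total_steps:
        state["step"] += 1
        state["loss"] *= 0.9  # stand-in for a real forward/backward/optimizer step
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(state, path)
    return state
```

Calling `train(7, 5)` simulates a job that dies after step 7; the last checkpoint holds step 5, so a rerun with `train(10, 5)` resumes from step 5 and recomputes steps 6 and 7. That recomputed work, plus the time GPUs wait while each checkpoint is written, is why faster checkpoint storage lets teams checkpoint more often and keep GPUs busier.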

Analysis

The Tier 0 announcement addresses key challenges in high-performance computing, AI workloads, and data orchestration by allowing enterprises to maximize the utility of existing infrastructure. By incorporating stranded NVMe storage into a unified data platform, Hammerspace reduces costs, accelerates time to deployment, and enhances sustainability. This is a solid advance for Hammerspace.

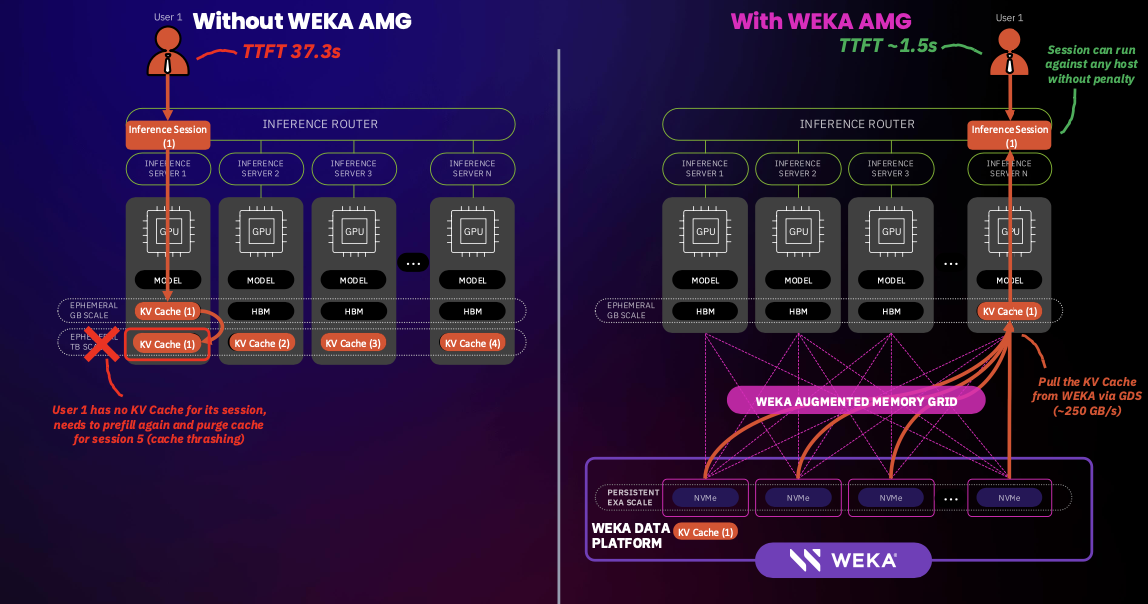

While solutions like NVIDIA GPUDirect Storage (which creates a direct DMA path between storage and GPU memory, using RDMA when the storage is remote, so data bypasses a bounce buffer in CPU memory) emphasize raw data-transfer efficiency, Hammerspace's Tier 0 offers broader benefits: shared storage access, redundancy, and intelligent data placement. It is a compelling solution for enterprises managing multi-node GPU environments, hybrid workloads, and cloud-adjacent computing.

Hammerspace isn’t, however, the first to leverage GPU-local SSDs to improve data path performance for AI workloads. WEKA has long provided this functionality as part of its “converged mode,” which also leverages available local flash storage within GPU servers to improve data throughput to the local GPUs.

Hammerspace is a strong and growing player in the distributed storage market, and its platform is well suited to GPU-intensive environments where performance, cost efficiency, and sustainability are critical. Tier 0 is a nice step forward, and any organization facing the challenge of deploying high-performance GPU infrastructure should evaluate the offering.

Competitive Landscape & Advice to IT Buyers

These sections are only available to NAND Research clients. Please reach out to NAND Research to learn more.