Kioxia recently announced the open-source release of its “All-in-Storage” ANNS with Product Quantization (AiSAQ), an approximate nearest neighbor search (ANNS) technology optimized for SSD-based storage. AiSAQ enables large-scale retrieval-augmented generation (RAG) workloads by offloading vector data from DRAM to SSDs, significantly reducing memory requirements.

Kioxia’s approach supports billion-scale datasets while maintaining high recall accuracy with minimal latency degradation.

What is “ANNS” and Why Should We Care?

Before we delve into Kioxia’s optimized ANN search, we should first understand the technology and where it’s most applicable. We’ll keep it high-level, pointing readers who want to learn more about the technology to a well-maintained Wikipedia page.

Approximate Nearest Neighbor search is a technique for efficiently identifying data points in a dataset that are close to a given query point without necessarily finding the exact nearest neighbor.

In many applications, an approximate result is sufficient and can be obtained much faster than an exact one. For instance, in recommendation systems, image retrieval, and natural language processing, retrieving a data point approximately close to the query can be adequate and more efficient.
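To make the exact-versus-approximate distinction concrete, here is a minimal sketch in plain Python. The exact search scans every vector; the "approximate" search is a deliberately crude stand-in that scans only a random half of the data. It is not how DiskANN or AiSAQ search, and the function names, dataset size, and dimensionality are all illustrative assumptions. Recall@k is the fraction of the true top-k neighbors that the approximate result recovers.

```python
import random

def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def exact_nearest(query, dataset, k=1):
    """Return indices of the k closest vectors by scanning the whole dataset."""
    order = sorted(range(len(dataset)), key=lambda i: squared_l2(query, dataset[i]))
    return order[:k]

def recall_at_k(approx_ids, exact_ids):
    """Fraction of the true top-k neighbors found by the approximate search."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)

rng = random.Random(0)
dataset = [[rng.random() for _ in range(8)] for _ in range(1000)]
query = [rng.random() for _ in range(8)]

truth = exact_nearest(query, dataset, k=10)

# Crude stand-in for an ANN index: look at only half of the vectors.
sample = rng.sample(range(len(dataset)), 500)
approx = sorted(sample, key=lambda i: squared_l2(query, dataset[i]))[:10]

print("recall@10:", recall_at_k(approx, truth))
```

Real ANN indexes aim for much higher recall than random subsampling while still touching only a small part of the dataset.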

In AI, many tasks involve processing and retrieving information from large datasets where data points are represented as high-dimensional vectors. ANN algorithms facilitate rapid identification of data points that are similar to a given query, which is essential in several AI domains:

- Natural Language Processing (NLP): In NLP, words, phrases, or entire documents are often embedded into high-dimensional vector spaces. ANN search enables efficient retrieval of semantically similar texts, enhancing tasks like document clustering, topic modeling, and information retrieval.

- Computer Vision: ANN algorithms are employed to find visually similar images to a query image.

- Recommendation Systems: By representing user preferences and items as vectors, ANN search can quickly identify items similar to those a user has interacted with, thereby providing personalized recommendations in real-time.

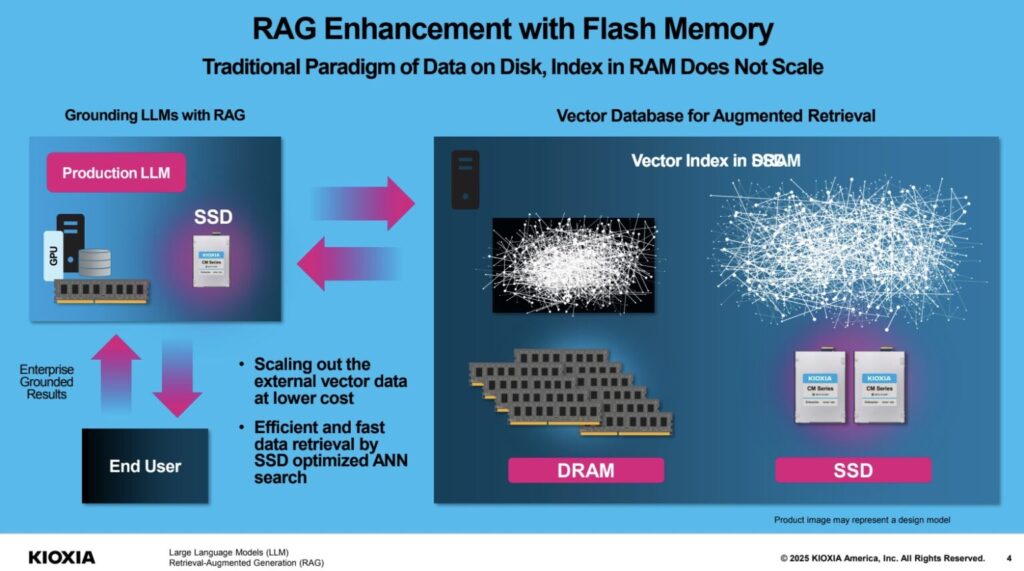

- Retrieval-Augmented Generation (RAG): In AI models that combine retrieval mechanisms with generative capabilities, such as certain large language models, ANN search fetches relevant information from vast datasets to inform and improve the generation process.

Kioxia’s AiSAQ

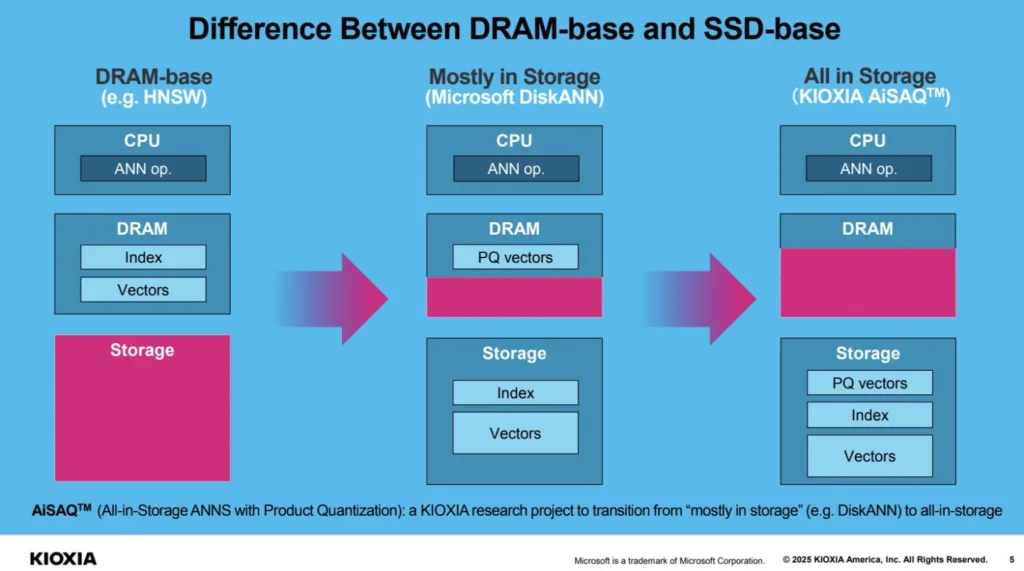

Traditionally, ANNS algorithms are deployed in DRAM to achieve the high-speed performance required for these searches. AiSAQ eliminates the need to load index data into DRAM, enabling the vector database to launch instantly. It does this by utilizing SSDs instead of DRAM for storing and accessing index data.

Kioxia’s approach enables large-scale vector databases to operate without relying on limited DRAM resources. This supports seamless switching between user-specific or application-specific databases on the same server for efficient RAG service delivery.
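The idea of serving queries from storage rather than preloading an index can be illustrated with a small sketch. Everything here is hypothetical: the `OnDiskIndex` class, the fixed-size record format, and the tenant file names are assumptions for illustration and have nothing to do with AiSAQ's actual file layout or API. The point is only that opening a file is nearly free when records are read on demand, so switching between two indexes does not wait on a bulk load.

```python
import os
import struct
import tempfile

# Hypothetical fixed-size record: one 4-dimensional float32 vector.
RECORD = struct.Struct("<4f")

def write_index(path, vectors):
    """Write vectors back to back so record i starts at byte i * RECORD.size."""
    with open(path, "wb") as f:
        for v in vectors:
            f.write(RECORD.pack(*v))

class OnDiskIndex:
    """Opens without reading any data; each lookup reads one record."""

    def __init__(self, path):
        self.file = open(path, "rb")

    def get(self, i):
        self.file.seek(i * RECORD.size)
        return RECORD.unpack(self.file.read(RECORD.size))

    def close(self):
        self.file.close()

# Build two small per-tenant index files in a temporary directory.
tmp = tempfile.mkdtemp()
paths = {}
for name, base in (("tenant_a", 0.0), ("tenant_b", 100.0)):
    paths[name] = os.path.join(tmp, name + ".idx")
    write_index(paths[name], [[base + i] * 4 for i in range(1000)])

# "Switching" is just closing one file handle and opening another.
index = OnDiskIndex(paths["tenant_a"])
print(index.get(42))
index.close()

index = OnDiskIndex(paths["tenant_b"])
print(index.get(42))
index.close()
```

The trade-off is that every lookup now pays a storage read, which is why the latency figures later in the article matter.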

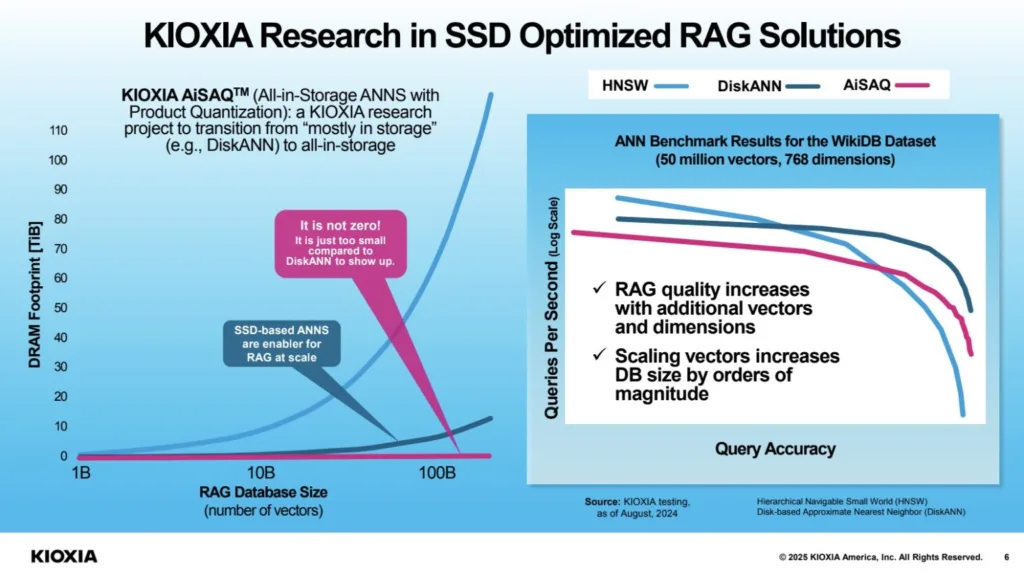

Kioxia’s AiSAQ is a fork of Microsoft’s DiskANN, a widely used ANNS algorithm that loads PQ-compressed vectors into DRAM while keeping full-precision vectors on storage. DiskANN achieves high recall, but its memory usage scales in proportion to dataset size, making billion-scale deployments costly.
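For readers unfamiliar with product quantization (PQ), the following is a textbook-style sketch in plain Python, not code from DiskANN or AiSAQ. A vector is cut into `m` subvectors, each subvector is replaced by the index of its nearest centroid in a per-subspace codebook, and distances are then estimated against those centroids. The function names, the tiny k-means routine, and the parameters (`m=4`, `k=16`, 8 dimensions) are all assumptions chosen to keep the example small.

```python
import random

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def split(vec, m):
    """Cut a vector into m equal-length subvectors."""
    step = len(vec) // m
    return [vec[i * step:(i + 1) * step] for i in range(m)]

def kmeans(points, k, iters, rng):
    """Tiny Lloyd's k-means; returns k centroids."""
    centroids = [list(p) for p in rng.sample(points, k)]
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            buckets[nearest].append(p)
        for c, bucket in enumerate(buckets):
            if bucket:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(col) / len(bucket) for col in zip(*bucket)]
    return centroids

def train_codebooks(dataset, m, k, iters=5, seed=0):
    """Train one codebook of k centroids for each of the m subspaces."""
    rng = random.Random(seed)
    parts = [split(v, m) for v in dataset]
    return [kmeans([p[j] for p in parts], k, iters, rng) for j in range(m)]

def encode(vec, codebooks):
    """Replace each subvector by the index of its nearest centroid."""
    subs = split(vec, len(codebooks))
    return [min(range(len(cb)), key=lambda c: sq_dist(sub, cb[c]))
            for sub, cb in zip(subs, codebooks)]

def approx_distance(query, code, codebooks):
    """Estimate squared distance from an exact query to a PQ-encoded vector."""
    subs = split(query, len(codebooks))
    return sum(sq_dist(sub, cb[c]) for sub, c, cb in zip(subs, code, codebooks))

rng = random.Random(1)
dataset = [[rng.random() for _ in range(8)] for _ in range(200)]
codebooks = train_codebooks(dataset, m=4, k=16)

code = encode(dataset[0], codebooks)  # 4 small integers instead of 8 floats
print(code, approx_distance(dataset[0], code, codebooks))
```

Each vector shrinks to `m` small integers, which is why keeping PQ codes in DRAM is cheaper than keeping full vectors, yet the total still grows with the number of vectors.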

Key Features

Kioxia’s AiSAQ implementation introduces several optimizations for memory efficiency, search latency, and index switching:

- DRAM-Free Query Execution: Unlike DiskANN, which retains PQ-compressed vectors in DRAM, AiSAQ loads both compressed and full-precision vectors from storage, reducing memory usage to approximately 10MB, regardless of dataset size.

- Optimized Data Placement: The AiSAQ index structure ensures that PQ vectors reside within the same storage blocks as node chunks, minimizing additional I/O operations.

- Scalable Performance: The search algorithm remains unchanged from DiskANN, ensuring that recall rates are maintained. Testing on billion-scale datasets shows millisecond-order query times with minor latency increases compared to DiskANN.

- Efficient Index Switching: AiSAQ reduces the time required to switch between large-scale datasets from seconds (or longer) in DiskANN to sub-millisecond levels by eliminating the need to load PQ vectors into memory.

- Compatibility with Existing ANNS Methods: AiSAQ’s storage-based approach applies to all graph-based ANNS algorithms and can integrate with higher-spec search methods in the future.
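The data-placement idea in the list above can be pictured with a rough sketch of what a single node chunk might contain. This layout is an assumption for illustration only: the field order, the 4-byte neighbor IDs, the one-byte-per-subspace PQ codes, and the parameters (128 dimensions, 64 neighbors, 32-byte codes, 4096-byte blocks) are hypothetical and not taken from the AiSAQ source. The sketch simply checks that a node's full-precision vector, its neighbor list, and the PQ codes of those neighbors can fit in one storage block, so a single read supplies everything needed to score the next hop.

```python
import struct

BLOCK_SIZE = 4096  # hypothetical storage block size in bytes

def pack_node_chunk(vector, neighbor_ids, neighbor_pq_codes):
    """Serialize one graph node together with its neighbors' PQ codes."""
    assert len(neighbor_ids) == len(neighbor_pq_codes)
    out = struct.pack(f"<{len(vector)}f", *vector)               # full-precision vector
    out += struct.pack("<I", len(neighbor_ids))                  # neighbor count
    out += struct.pack(f"<{len(neighbor_ids)}I", *neighbor_ids)  # neighbor IDs
    for pq_code in neighbor_pq_codes:                            # one byte per PQ subspace
        out += bytes(pq_code)
    return out

dim, degree, pq_bytes = 128, 64, 32  # hypothetical index parameters
chunk = pack_node_chunk(
    [0.0] * dim,
    list(range(degree)),
    [[0] * pq_bytes for _ in range(degree)],
)

print(len(chunk), "bytes; fits in one block:", len(chunk) <= BLOCK_SIZE)
```

With these assumed parameters the PQ codes account for most of the chunk, which suggests why node degree and code size would have to be balanced against block size in any layout of this kind.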

Performance Evaluation

Kioxia benchmarked AiSAQ against DiskANN under identical search conditions:

- Memory Usage: DiskANN requires 31GB of RAM for a 1-billion vector dataset, while AiSAQ completes the same queries with 10MB of RAM.

- Query Latency: AiSAQ maintains >95% recall@1 while adding minor search latency compared to DiskANN, with performance impact varying based on dataset structure and SSD I/O characteristics.

- Index Load Time: DiskANN index loading scales with dataset size (e.g., 16 seconds for 1B vectors), whereas AiSAQ loads almost instantly (sub-millisecond) because it never has to load PQ vectors from storage into DRAM.
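A quick back-of-the-envelope calculation puts the figures above in proportion. It uses only the numbers quoted in this section, with two assumptions flagged in the comments: that GB and MB are decimal units (the source does not say), and that DiskANN's 16-second load time is spent reading roughly the same 31GB it holds in RAM.

```python
GB, MB = 10**9, 10**6  # assumption: decimal units

num_vectors = 10**9        # 1-billion vector dataset
diskann_ram = 31 * GB      # DiskANN memory usage quoted above
aisaq_ram = 10 * MB        # AiSAQ memory usage quoted above
diskann_load_seconds = 16  # DiskANN index load time quoted above

# DRAM that DiskANN spends per vector.
bytes_per_vector = diskann_ram / num_vectors

# How many times smaller AiSAQ's memory footprint is.
reduction_factor = diskann_ram / aisaq_ram

# Assumption: the 16 s load is dominated by reading ~31GB from storage.
implied_read_rate = diskann_ram / diskann_load_seconds / GB

print(f"DiskANN DRAM per vector: {bytes_per_vector:.0f} bytes")
print(f"Memory reduction: {reduction_factor:.0f}x")
print(f"Implied load throughput: {implied_read_rate:.2f} GB/s")
```

Under those assumptions, DiskANN spends about 31 bytes of DRAM per vector, AiSAQ's footprint is about 3,100 times smaller, and the DiskANN load time corresponds to roughly 1.9 GB/s of sustained reads.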

Analysis

Kioxia’s AiSAQ technology addresses the high memory costs associated with large-scale ANNS workloads in RAG, recommendation systems, and AI-driven search applications. By moving index storage to SSDs, AiSAQ reduces DRAM dependency, which allows vector searches to scale without significant hardware upgrades.

This announcement shows Kioxia innovating in high-performance storage and aligns with the shift towards cost-efficient AI infrastructure. Open-sourcing AiSAQ provides a pathway for broader adoption, particularly in cloud environments where SSD-backed search acceleration can improve AI service economics.

Future adoption will depend on further optimizations in SSD I/O efficiency and broader integration into vector database platforms such as Weaviate and Milvus.

While this isn’t a technology that users can just plug in and run, it is a solid step forward in optimizing infrastructure for AI. Technology companies deploying large-scale AI search will find AiSAQ an efficient alternative to DRAM-heavy architectures, though performance trade-offs in query latency should be considered when evaluating use cases requiring near-instantaneous retrieval.

This is a nice move by Kioxia that helps advance the state of the art in AI infrastructure while also clearly demonstrating that Kioxia understands the advanced technology markets in which it competes and isn’t just building flash memory. That’s refreshing.