The CXL ecosystem is moving quickly from concept to deployment as hyperscalers, OEMs, and chipmakers seek new ways to address the memory bottlenecks that limit AI, cloud, and high-performance computing workloads. In this environment, interoperability is not a checkbox item; it is the foundation that determines how quickly new architectures can reach scale.

Marvell’s Structera CXL product family is among the most complete CXL offerings on the market, and arguably the most complete. With the latest round of interoperability testing, the company now claims a unique position: validation with both leading server CPU platforms (AMD EPYC and 5th Gen Intel Xeon) and all three major memory suppliers (Micron, Samsung, and SK hynix).

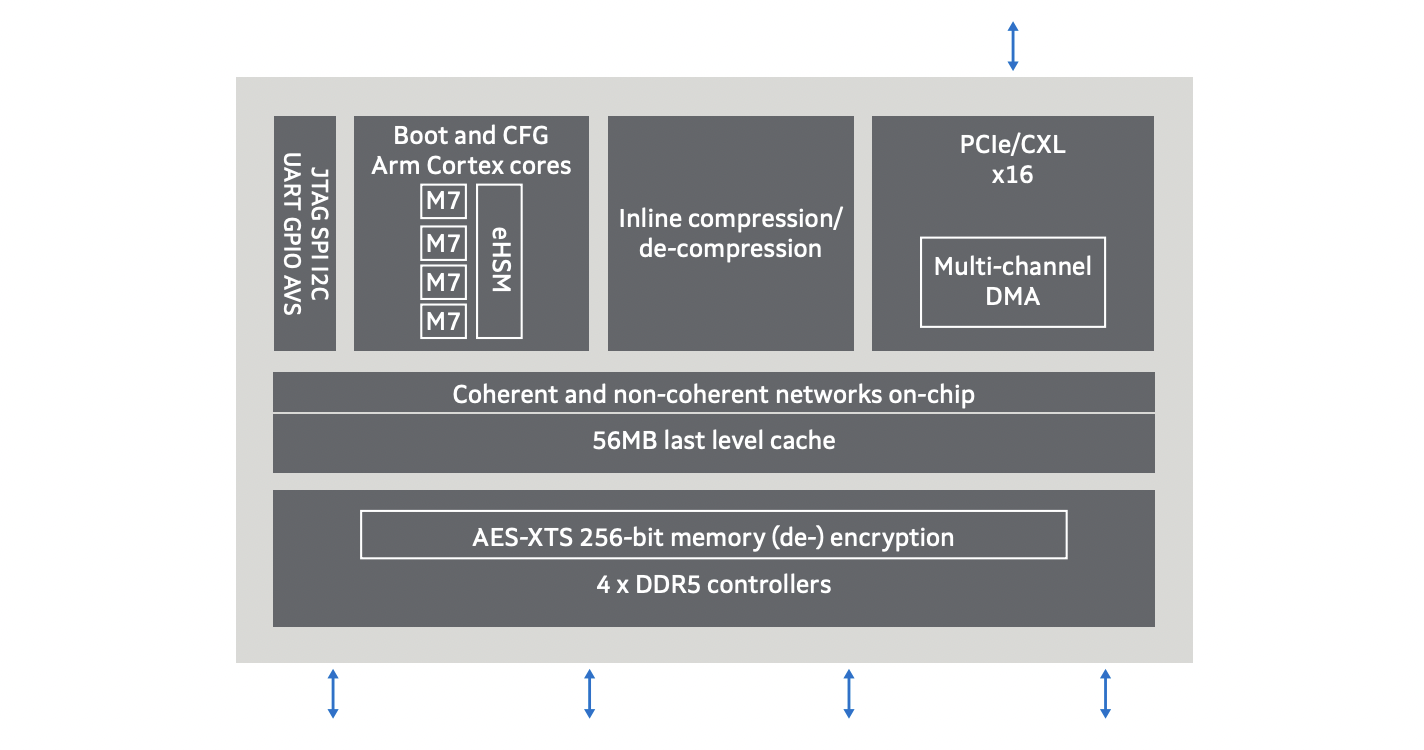

Last month at Hot Chips 2025, Marvell showcased its work in extending CXL beyond memory expansion to include integrated compute capabilities. The company demonstrated its Structera devices, which attach terabytes of DDR4 or DDR5 to servers via CXL while embedding Arm Neoverse cores, compression, encryption, and security features directly in the memory controllers.

By processing tasks such as recommendation models and AI inference near memory, these devices reduce latency, free host CPUs, and streamline data movement across the system.

Marvell’s Structera Portfolio

Marvell organizes its Structera products into two device families: Structera A and Structera X. Each addresses a different set of memory challenges within hyperscale data centers.

- Structera A near-memory accelerators integrate 16 Arm Neoverse V2 cores with multiple memory channels. The accelerators target high-bandwidth workloads such as deep learning recommendation models (DLRM) and machine learning inference, where local compute and compression reduce traffic to the host CPU.

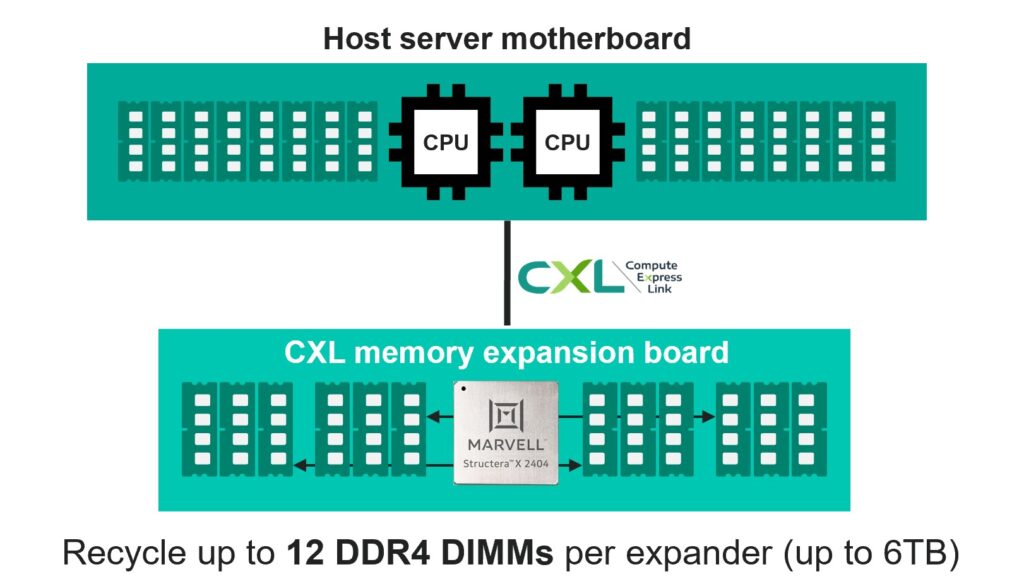

- Structera X memory expansion controllers extend memory capacity by attaching terabytes of DDR4 or DDR5 to general-purpose servers through a CXL interface. Structera X devices support up to four memory channels and integrate inline LZ4 compression to increase effective capacity.

Both device families leverage 5nm process technology, offering higher efficiency and scalability than earlier designs.
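To make the "reduce traffic to the host CPU" point concrete, the sketch below counts the bytes that cross the CXL link for one DLRM-style embedding-bag lookup, where many embedding rows are gathered and summed into a single vector. It is a hypothetical illustration of the general near-memory principle, not Marvell’s implementation; the function name, bag size (80 lookups), embedding width (128), and FP32 element size are all assumptions.

```python
# Hypothetical illustration: bytes sent from the memory device to the host
# for one embedding-bag lookup, pooling on the host vs. near memory.
# All sizes used below are assumptions chosen for illustration.

def link_bytes(num_lookups: int, embedding_dim: int, bytes_per_elem: int,
               pool_near_memory: bool) -> int:
    """Return the bytes transferred device -> host for one embedding bag."""
    vector_bytes = embedding_dim * bytes_per_elem
    if pool_near_memory:
        # The device gathers and sums the rows locally and returns one pooled vector.
        return vector_bytes
    # Otherwise the host fetches every row and performs the sum itself.
    return num_lookups * vector_bytes


if __name__ == "__main__":
    lookups, dim, fp32 = 80, 128, 4  # assumed bag size, width, FP32 elements
    host = link_bytes(lookups, dim, fp32, pool_near_memory=False)
    near = link_bytes(lookups, dim, fp32, pool_near_memory=True)
    print(host, near, host // near)  # 40960 512 80
```

Under these assumptions, pooling near memory cuts link traffic for the bag by a factor equal to the number of lookups; real savings depend on the model’s bag sizes and on what the accelerator actually offloads.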

Custom Silicon IP Offerings

Marvell extends Structera beyond standard devices by offering Structera IP for custom silicon designs. Hyperscalers and semiconductor companies can integrate CXL functionality directly into their chips, tailoring memory expansion and near-memory acceleration to workload-specific requirements.

The IP model covers a range of integration scenarios:

- Fully custom SoCs that embed CXL alongside CPU or accelerator cores.

- Tightly coupled accelerators that combine compute and memory expansion within a single package.

This flexibility allows organizations to optimize for power efficiency, performance, and cost while leveraging the interoperability validation Marvell has already completed.

CXL Solves the Hyperscale DDR4 Dilemma

One of the most immediate challenges for hyperscalers is the migration from DDR4 to DDR5. CPUs that support only DDR5 threaten to strand billions of gigabytes of DDR4 memory, with both financial and environmental costs. Structera X directly addresses this problem.

A single Structera X 2404 device can support up to 12 DDR4 DIMMs, equivalent to 6TB of raw capacity or 12TB with inline compression, within a one- or two-socket server.
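The capacity figures reduce to simple arithmetic: raw capacity is DIMM count times DIMM size, and effective capacity scales that by the compression ratio. The sketch below assumes 512GB per DIMM (the average implied by 6TB across 12 DIMMs, using 1TB = 1024GB) and a 2:1 ratio inferred from the 6TB-to-12TB figures; the function name is hypothetical, and actual LZ4 ratios depend entirely on the data stored.

```python
# Hypothetical sketch: raw vs. effective capacity behind one Structera X 2404.
# The 512 GB/DIMM and 2:1 compression ratio are assumptions inferred from the
# 6TB raw / 12TB compressed figures; real LZ4 ratios vary with the data.

def capacity_tb(dimms: int, gb_per_dimm: int, compression_ratio: float = 1.0) -> float:
    """Return capacity in TB (1 TB = 1024 GB) for a given DIMM population."""
    raw_gb = dimms * gb_per_dimm
    return raw_gb * compression_ratio / 1024


raw = capacity_tb(12, 512)             # 6.0 TB raw
effective = capacity_tb(12, 512, 2.0)  # 12.0 TB at an assumed 2:1 ratio
print(raw, effective)
```

By the same arithmetic, data that compresses at only 1.5:1 would yield `capacity_tb(12, 512, 1.5)`, or 9TB of effective capacity, so the 12TB figure should be read as a best case tied to compressible data.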

Because the controller also supports DDR5, it allows operators to bridge the transition period and maximize the use of existing DDR4 assets while preparing for DDR5 deployments. This dual compatibility lowers overall TCO and provides measurable reductions in embodied carbon.

Sustainability Benefits of CXL

While it may not be immediately obvious, adopting CXL carries clear sustainability benefits for data centers facing both exponential growth in AI workloads and mounting pressure to meet carbon reduction targets. One of the largest challenges in the near term is the memory transition from DDR4 to DDR5.

While DDR5 provides higher bandwidth and efficiency, the shift risks stranding billions of gigabytes of fully functional DDR4 memory. Without an alternative, that stranded capacity would translate into massive e-waste and the carbon emissions embedded in manufacturing replacement DDR5 modules.

By enabling DDR4 modules to be redeployed in modern systems alongside DDR5, CXL devices extend the lifecycle of existing memory assets, reducing unnecessary disposal and conserving the materials, energy, and water required for semiconductor fabrication.

CXL also supports a circular model for IT infrastructure. Instead of discarding older hardware during each server refresh cycle, data centers can pool, expand, and reconfigure existing memory through CXL memory expansion devices. This not only lowers total cost of ownership but also reduces the embodied carbon associated with producing new DIMMs.

According to Marvell, producing 1GB of DRAM generates several kilograms of CO₂. By reusing DDR4 capacity at fleet scale (billions of gigabytes across hyperscale installed bases) through CXL controllers, hyperscalers could avoid tens of millions of tons of emissions.
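The emissions claim is a multiplication of reused capacity by an emission factor per gigabyte. The sketch below uses a hypothetical placeholder of 3 kg CO₂e per GB to stand in for Marvell’s "several kilograms" and an assumed 5 billion GB redeployed fleet-wide; neither is a published figure. It shows that a single fully populated controller accounts for tonnes, while reaching tens of millions of tonnes takes reuse on the order of billions of gigabytes.

```python
# Hypothetical worked example of avoided embodied emissions from reusing DDR4.
# KG_CO2E_PER_GB is an assumed placeholder for Marvell's "several kilograms"
# per GB; it is not a published value.

KG_CO2E_PER_GB = 3.0


def avoided_tonnes(reused_gb: float, kg_per_gb: float = KG_CO2E_PER_GB) -> float:
    """Tonnes of CO2e not emitted by avoiding new DRAM for reused_gb of capacity."""
    return reused_gb * kg_per_gb / 1000.0


per_controller = avoided_tonnes(12 * 512)  # one fully populated controller (6144 GB)
fleet = avoided_tonnes(5e9)                # assumed 5 billion GB redeployed fleet-wide
print(f"{per_controller:.1f} t per controller, {fleet / 1e6:.0f} Mt fleet-wide")
# 18.4 t per controller, 15 Mt fleet-wide
```

Because the result is linear in both inputs, doubling the assumed emission factor or the reused capacity doubles the estimate; the useful takeaway is the order of magnitude, not the specific numbers.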

Beyond the reuse of DDR4, CXL improves the overall energy efficiency of data centers. High-capacity memory expansion devices attach directly to the CPU’s CXL links, so workloads that outgrow local DRAM can stay in memory rather than paging to storage or crossing additional PCIe switch hops. That translates into less wasted compute time and better GPU/CPU utilization.

In AI environments where underutilized accelerators drive up both power draw and infrastructure costs, these efficiency gains directly contribute to lower operational emissions.

CXL’s ability to right-size memory to workloads also means fewer servers need to be deployed to meet a given application’s requirements, further cutting power and cooling demands.
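The right-sizing argument can be expressed as a simple sizing rule: a fleet must satisfy both its core and memory requirements, so the server count is the larger of the two. The sketch below is a hypothetical model with assumed numbers (a 2,000-core, 200TB workload; 128 cores and 1.5TB of local DDR5 per server; 3TB of added CXL memory per server), not a Marvell sizing tool.

```python
import math

# Hypothetical sizing sketch: servers needed when a workload is bound by
# either cores or memory. All numbers below are illustrative assumptions.


def servers_needed(total_cores: int, total_mem_gb: int,
                   cores_per_server: int, mem_gb_per_server: int) -> int:
    """The fleet must satisfy both constraints, so take the larger count."""
    by_cores = math.ceil(total_cores / cores_per_server)
    by_mem = math.ceil(total_mem_gb / mem_gb_per_server)
    return max(by_cores, by_mem)


# Memory-bound workload: 2,000 cores and 200 TB (204,800 GB) of memory.
base = servers_needed(2_000, 200 * 1024, 128, 1_536)         # local DDR5 only
cxl = servers_needed(2_000, 200 * 1024, 128, 1_536 + 3_072)  # plus CXL-attached memory
print(base, cxl)  # 134 45
```

The design point is that CXL helps only while memory is the binding constraint: once added capacity lifts the memory requirement below the core requirement (16 servers in this example), further expansion no longer reduces the server count.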

Analysis

Marvell’s CXL roadmap intersects with its broader memory technology investments, including dense 2nm SRAM IP, custom HBM base dies, and high-capacity DDR expansion devices. By combining these technologies with Structera, the company addresses both bandwidth constraints and capacity scaling challenges in AI and HPC systems.

For example, Structera A accelerators offload certain ML workloads directly at the memory layer, reducing CPU overhead and cutting latency. Structera X controllers attach directly to the host’s CXL links, expanding system memory without an intervening PCIe switch, which keeps access latency low and improves effective bandwidth utilization.

By Marvell’s account, its expanded interoperability testing positions Structera as the most broadly validated CXL 2.0 product line available today. For OEMs and hyperscalers, that means lower integration risk and faster paths to qualification.

From a competitive perspective:

- Intel and AMD both integrate CXL capabilities into their CPUs, but their solutions rely heavily on third-party memory controllers and accelerators to extend beyond native capacities.

- Astera Labs has focused on CXL memory controllers but has not yet demonstrated ecosystem validation at the breadth Marvell now claims.

- Memory suppliers such as Samsung, Micron, and SK hynix are actively seeking partners to accelerate CXL adoption, making Marvell’s validated position strategically valuable.

The broader impact is twofold. First, data center operators gain a practical pathway to deploy CXL at scale without risking supply chain lock-in. Second, the validation strengthens Marvell’s competitive posture against both CPU vendors and specialized startups.

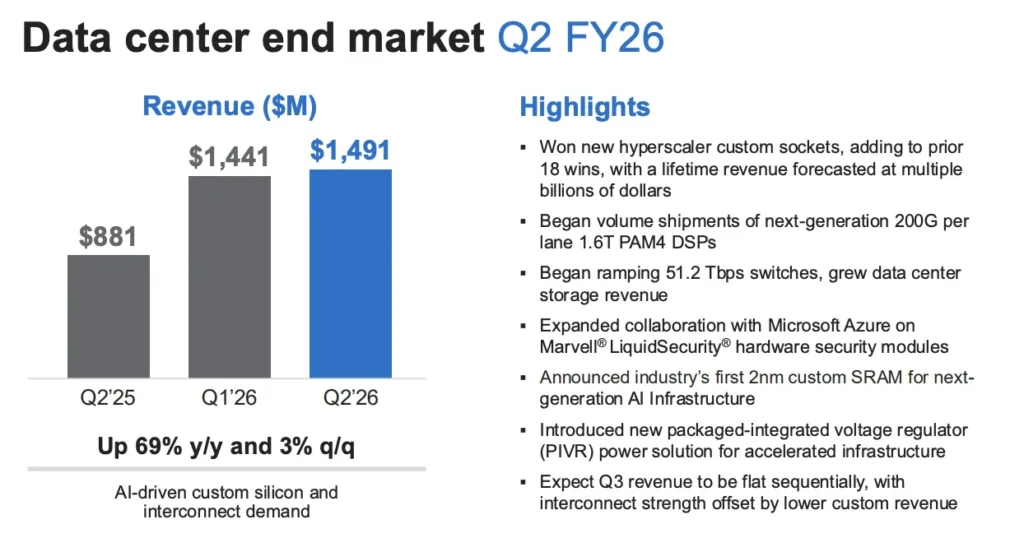

Marvell doesn’t break out its CXL or custom chip revenues, but hyperscaler customers are clearly responding to its offerings. In its most recent earnings release, the company reported that its data center business grew 69% year-over-year to $1.5 billion in Q2 FY26.

For enterprises and hyperscalers, Marvell’s expanded interoperability validation shows that CXL has moved beyond theory and into production-ready infrastructure. There’s no question that Marvell is firmly embedded in the ecosystem.

Competitive Outlook & Advice to IT Buyers

These sections are only available to NAND Research clients and IT Advisory Members. Please contact NAND Research to learn more.