At the recent Supercomputing 2024 (SC24) conference in Atlanta, NVIDIA announced new hardware and software to strengthen its AI and HPC offerings. The announcements include the new GB200 NVL4 Superchip, the general availability of its H200 NVL PCIe GPU, and several new software capabilities.

Hardware Updates

NVIDIA made hardware announcements that reinforce its leadership in AI and HPC, including its new GB200 NVL4 Superchip and the general availability of its Hopper-based H200 NVL PCIe GPU.

GB200 NVL4 Superchip:

The new GB200 NVL4 Superchip integrates two Grace CPUs and four Blackwell B200 GPUs on a single board, interconnected via NVIDIA’s NVLink technology. The architecture provides 1.3TB of coherent memory shared across the board.

NVIDIA says that the GB200 NVL4 offers substantial improvements over its predecessor, the GH200 NVL4, delivering 2.2 times faster simulation performance and 1.8 times faster training and inference capabilities.

The new part is expected to be available to customers in the second half of 2025.

H200 NVL PCIe Availability:

NVIDIA also announced the general availability of the Hopper generation H200 NVL PCIe GPU. This GPU is built for data centers with lower power requirements and air-cooled enterprise rack designs.

Compared to its predecessor, the H100 NVL, the H200 NVL provides a 1.5x increase in memory and a 1.2x boost in bandwidth. These improvements enable up to 1.7x faster inference performance for large language models and a 1.3x performance increase for HPC applications.
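The memory and bandwidth multipliers above can be sanity-checked with simple arithmetic. The short sketch below does that, assuming per-GPU figures taken from NVIDIA's public datasheets rather than from this article: 94GB and 3.9TB/s for the H100 NVL, and 141GB and 4.8TB/s for the H200 NVL. Treat the inputs as assumptions and verify them against current spec sheets before relying on them.

```python
# Assumed per-GPU specs from public datasheets (not stated in this article).
h100_nvl = {"memory_gb": 94, "bandwidth_tbs": 3.9}
h200_nvl = {"memory_gb": 141, "bandwidth_tbs": 4.8}

# Generation-over-generation ratios for capacity and bandwidth.
memory_ratio = h200_nvl["memory_gb"] / h100_nvl["memory_gb"]
bandwidth_ratio = h200_nvl["bandwidth_tbs"] / h100_nvl["bandwidth_tbs"]

print(f"memory: {memory_ratio:.2f}x, bandwidth: {bandwidth_ratio:.2f}x")
```

Under those assumed inputs, the ratios round to the 1.5x and 1.2x figures quoted above. The inference and HPC speedups are NVIDIA's own benchmark claims and cannot be derived from these two numbers alone.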

The H200 NVL comes with a five-year subscription to NVIDIA AI Enterprise, a cloud-native software platform for developing and deploying production AI.

Software Updates

At the SC24 event, NVIDIA unveiled several software and platform developments to advance AI and HPC across various industries.

cuPyNumeric Library

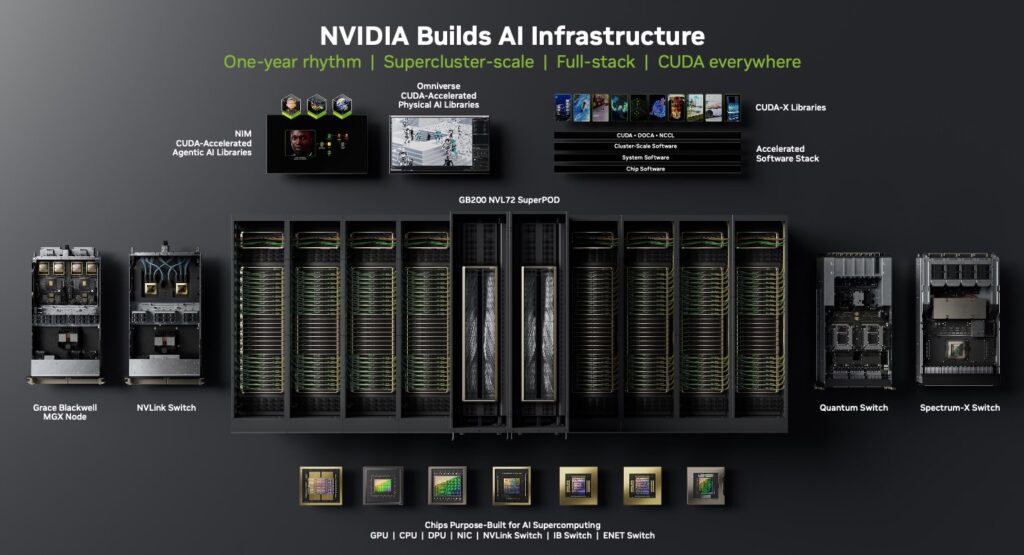

NVIDIA introduced cuPyNumeric, a GPU-accelerated implementation of NumPy, designed to enhance applications in data science, machine learning, and numerical computing. This addition expands NVIDIA’s suite of over 400 CUDA-X libraries.
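Because cuPyNumeric is positioned as a drop-in replacement for NumPy, adopting it is typically a matter of changing the import. The sketch below illustrates that idea under stated assumptions: the package is importable as `cupynumeric`, and the handful of array operations used here are among those it supports. The helper `unit_rows` is a hypothetical example, and the code falls back to plain NumPy when cuPyNumeric is not installed.

```python
# Assumption: cuPyNumeric is importable as `cupynumeric`. If it is not
# installed, fall back to NumPy so the same code still runs on a CPU.
try:
    import cupynumeric as np
except ImportError:
    import numpy as np


def unit_rows(rows, cols):
    """Build a rows x cols matrix and scale each row to unit length."""
    a = np.arange(1, rows * cols + 1, dtype=np.float64).reshape(rows, cols)
    norms = np.sqrt((a * a).sum(axis=1))  # Euclidean norm of each row
    return a / norms.reshape(rows, 1)     # broadcast the division per row


unit = unit_rows(1000, 4)
row_lengths = np.sqrt((unit * unit).sum(axis=1))
print(unit.shape, float(row_lengths.max()))
```

The application code itself never mentions the GPU; only the import line decides which backend executes the array operations.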

Omniverse Blueprint for Real-Time Digital Twins

The company announced the NVIDIA Omniverse Blueprint for real-time digital twins, a reference workflow that enables developers to create interactive digital twins for sectors such as aerospace, automotive, energy, and manufacturing. The blueprint integrates NVIDIA’s acceleration libraries, physics-AI frameworks, and interactive physically based rendering, which NVIDIA says delivers up to 1,200x faster simulations along with real-time visualization.

Earth-2 NIM Microservices

For climate forecasting, NVIDIA introduced two new NVIDIA NIM microservices for its Earth-2 digital twin platform. NVIDIA says the CorrDiff NIM and FourCastNet NIM microservices can accelerate climate change modeling and simulation by up to 500x, enhancing the ability to simulate and visualize weather and climate conditions.

Analysis

NVIDIA has always used software as an enabler to further its hardware solutions. The company’s dual focus on high-performance hardware and comprehensive software continued at SC24, part of its calculated strategy to maintain its competitive edge.

On the hardware side, the H200 NVL PCIe’s availability broadens NVIDIA’s reach into enterprise data centers with a modular, more power-efficient solution. While it doesn’t match the raw performance of the GB200 NVL4, its flexibility and cost efficiency will appeal to a broader range of customers.

NVIDIA’s primary challenge remains power consumption, particularly with the GB200 NVL4 requiring 5,400 watts. This raises valid questions about sustainability and deployment feasibility, especially for enterprises with constrained cooling infrastructure.

NVIDIA continues to be the “go-to” provider of comprehensive AI and HPC solutions by integrating cutting-edge hardware with advanced software platforms. This approach strengthens its ecosystem, fosters customer loyalty, and expands its influence across sectors.
