Call Notes: Quarterly Semiconductor Update (March 2026)

Every quarter I participate in a call for buy-side investment analysts focused on the broader datacenter/hyperscaler semiconductor ecosystem. Here, I’m sharing the raw notes I used to drive that call.

Call Notes: Compute Hardware & Semiconductor Ecosystem

Every quarter I participate in a call for buy-side investment analysts focused on compute hardware and the broader semiconductor ecosystem. Here, I’m sharing the raw notes from that call.

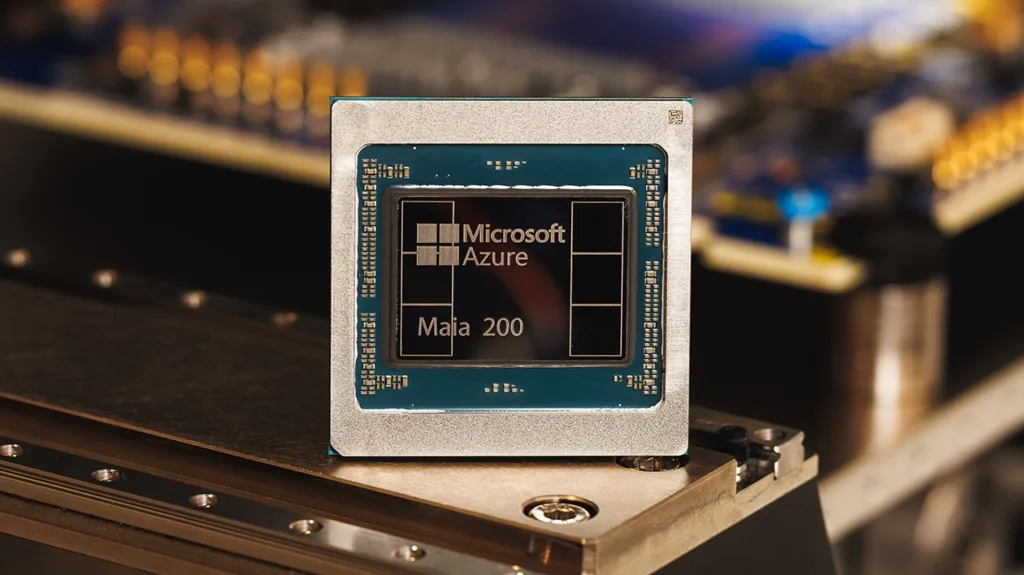

Research Note: Microsoft Azure Maia 200 Inference Accelerator

Microsoft recently announced its second-generation custom AI accelerator, the Maia 200. The new chip is an inference-optimized alternative to third-party GPUs in Microsoft’s Azure infrastructure. The company says the accelerator delivers 30% better performance per dollar than existing Azure hardware while supporting OpenAI’s GPT-5.2 models and Microsoft’s own synthetic data generation workloads.

Research Note: Marvell to Acquire Silicon Photonics Player Celestial AI

Marvell Technology recently announced a definitive agreement to acquire Celestial AI for $3.25 billion in upfront consideration ($1 billion cash plus $2.25 billion in stock), with potential earnout payments of up to an additional $2.25 billion based on revenue milestones through fiscal 2029.

Call Notes: Q4 Semiconductor Tracker

We’re in a moment that’s less about individual chip performance and more about processing-at-scale. It’s all about sprawling AI racks, optics, interconnect, and custom silicon hungry for scale, speed, and a story.

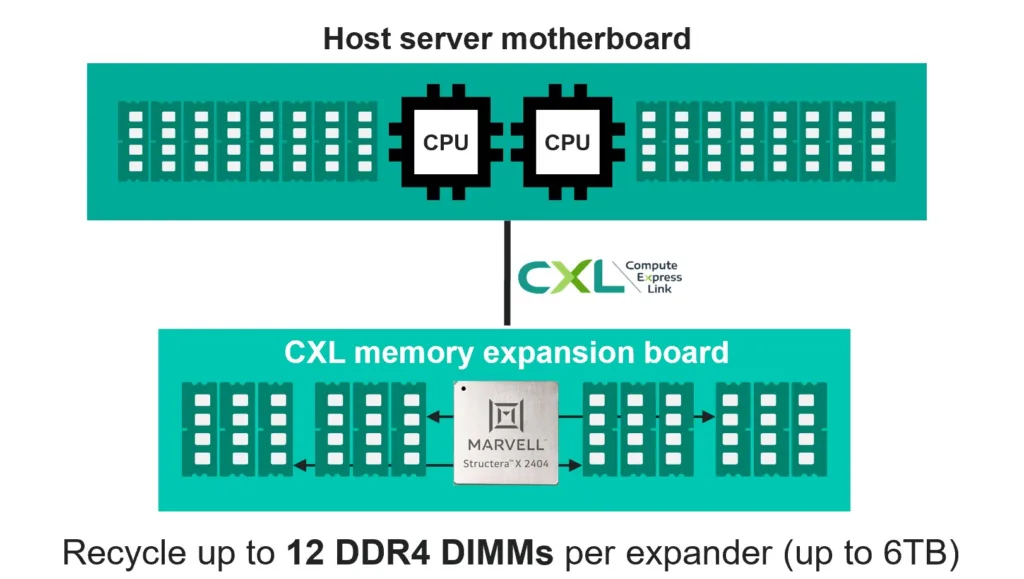

Research Note: Marvell Solidifies CXL Leadership w/ Ecosystem-Wide Interoperability

The CXL ecosystem is moving quickly from concept to deployment as hyperscalers, OEMs, and chipmakers seek new ways to address the memory bottlenecks that limit AI, cloud, and high-performance computing workloads. In this environment, interoperability is not a checkbox—it is the foundation that determines how quickly new architectures can reach scale.

OFC 2025: Optical Interconnects Take Center Stage in the AI-First Data Center

AI is reshaping the data center, bringing networking along for the ride. It’s clear that optical networking is rapidly moving from a back-end concern to a front-line enabler of next-generation infrastructure.

AI workloads, with their massive datasets, distributed training pipelines, and high-performance compute requirements, demand interconnect solutions that combine extreme bandwidth with low power consumption and low latency. At last month’s OFC 2025 event in San Francisco, this shift was unmistakable.

Research Note: Infineon Acquires Marvell’s Auto Ethernet Business

Infineon Technologies announced its intention to acquire Marvell Technology’s Automotive Ethernet business for $2.5 billion in cash, a move that expands Infineon’s microcontroller and automotive systems portfolio.

NAND Insider Newsletter: February 4, 2025

Every week, NAND Research puts out a newsletter for our industry customers. Below is an excerpt from this week’s edition, dated February 4, 2025.

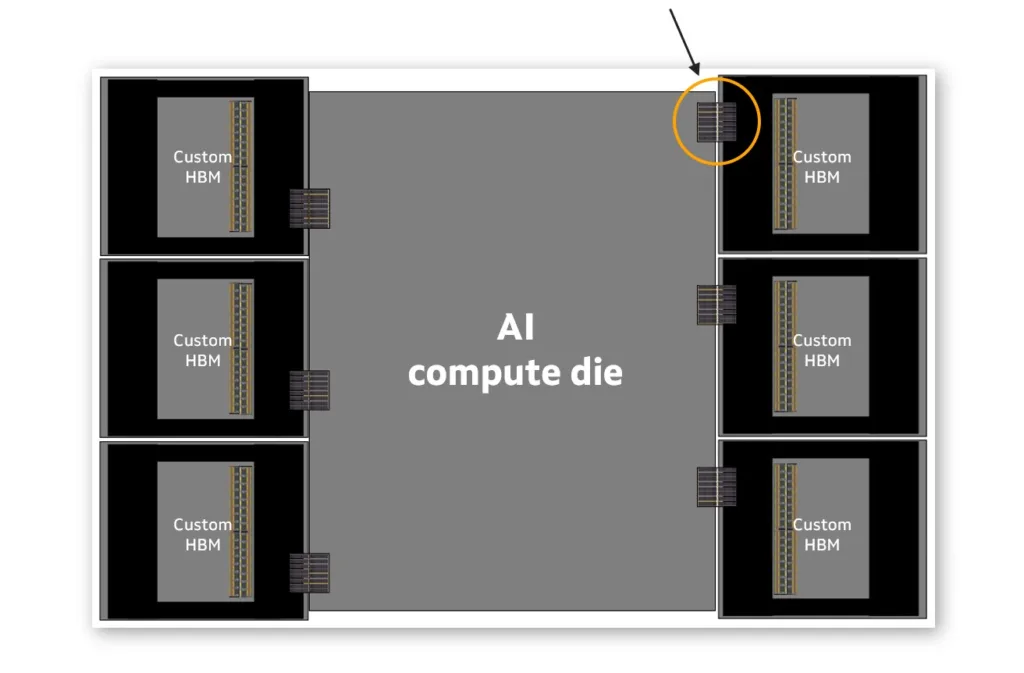

Research Note: Marvell Custom HBM for Cloud AI

Marvell recently announced a new custom high-bandwidth memory (HBM) compute architecture that addresses the scaling challenges of XPUs in AI workloads. The new architecture enables higher compute and memory density, reduced power consumption, and lower TCO for custom XPUs.

Marvell Sees Momentum in Cloud, AI, and Automotive

Last week, Marvell released its earnings for the second quarter of its fiscal 2024, demonstrating robust performance with $1.34 billion in top-line revenue. While that number was down year-over-year, it surpassed the midpoint of the company’s guidance.

Insight: Growth in Cloud & AI

Marvell’s data center business was a bright spot. Revenue from that business […]