Background

Cooling a data center was a challenge even before the current AI-driven boom in accelerated computing heated up. Servers run hot, with processor thermal design power expected to reach 500 watts by 2025. Add GPUs to the mix, some of which approach 700W today, and the problems of power consumption and heat dissipation grow rapidly. Traditional cooling technologies throttle an IT organization’s ability to deploy solutions, and that impacts the business.

That impact goes beyond limiting compute density within a rack; it can fundamentally affect the bottom line. Analysts estimate that data centers, globally, account for 1.5% to 2% of the world’s energy consumption. That’s a significant carbon footprint for enterprises to carry, nearly all of which have sustainability goals. Beyond sustainability, however, there’s cost. Up to 40% of a data center’s energy use is directly related to cooling. That’s a hefty bill to pay.
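
To put that 40% figure in perspective, here is a back-of-the-envelope sketch. The facility size (1 MW average draw) and the electricity price ($0.10 per kWh) are assumptions chosen purely for illustration, not figures from the analysts cited above, and `cooling_cost` is a hypothetical helper.

```python
HOURS_PER_YEAR = 8760


def cooling_cost(total_energy_kwh: float, cooling_share: float, price_per_kwh: float) -> float:
    """Return the yearly cost of the energy that goes to cooling."""
    if not 0.0 <= cooling_share <= 1.0:
        raise ValueError("cooling_share must be between 0 and 1")
    return total_energy_kwh * cooling_share * price_per_kwh


# Assumed facility: 1,000 kW (1 MW) average draw, running all year.
annual_kwh = 1_000 * HOURS_PER_YEAR

# Assumed inputs: 40% of energy goes to cooling, electricity costs $0.10 per kWh.
bill = cooling_cost(annual_kwh, cooling_share=0.40, price_per_kwh=0.10)
print(f"Estimated annual cooling bill: ${bill:,.0f}")  # $350,400
```

Under these assumptions, cooling alone costs roughly $350,000 a year per megawatt of load, and the figure scales linearly with facility size and energy price.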

The United States Department of Energy, through its Advanced Research Projects Agency-Energy (ARPA-E) efforts, announced its COOLERCHIPS program late last year to address the problem of data center cooling. Last month the agency awarded grants totaling $40M to 15 organizations.

Each grant recipient is pursuing a novel approach to solving the data center cooling problem. The grants ranged in size from $1.2M to $5M. NVIDIA won the largest of those grants, $5M, to pursue a unique blend of concepts that promises to address cooling within a computer’s chassis.

NVIDIA, the Data Center Infrastructure Company

It’s no surprise that NVIDIA is interested in data center cooling. Its CEO, Jensen Huang, frequently talks about NVIDIA becoming a data center company, positing that the data center is the new compute unit. This isn’t just visionary language; NVIDIA has been aggressively assembling (and acquiring) nearly every technology element required to deliver that vision.

NVIDIA’s efforts are paying off. Its most recent earnings revealed that its data center business accounts for over 68% of its total revenue, bringing in $4.3B in the first quarter of 2023. The current boom in AI-related infrastructure, coupled with NVIDIA bringing several relevant new products to market, has the company predicting non-linear growth in the short term.

NVIDIA has traditionally delivered accelerated compute through an add-in card model, with NVIDIA GPUs and accelerated networking products sold independently and assembled into a server built by someone else. NVIDIA expanded that model when it introduced its DGX system in 2018. DGX is a turn-key accelerated compute solution for AI. However, DGX was just the beginning, as the company continues to ramp up its system-level efforts.

Over the past quarter, NVIDIA announced other platform-level turn-key solutions, including its new DGX Cloud for hyper-scalers and its upcoming Omniverse Cloud. Cooling these systems will be a continuing challenge, even with direct liquid cooling solutions becoming popular with server vendors. Solving big AI challenges requires a lot of hot processors packed as close together as possible to achieve maximum density. It’s a problem in need of a solution.

News: NVIDIA’s Hybrid Cooling Approach

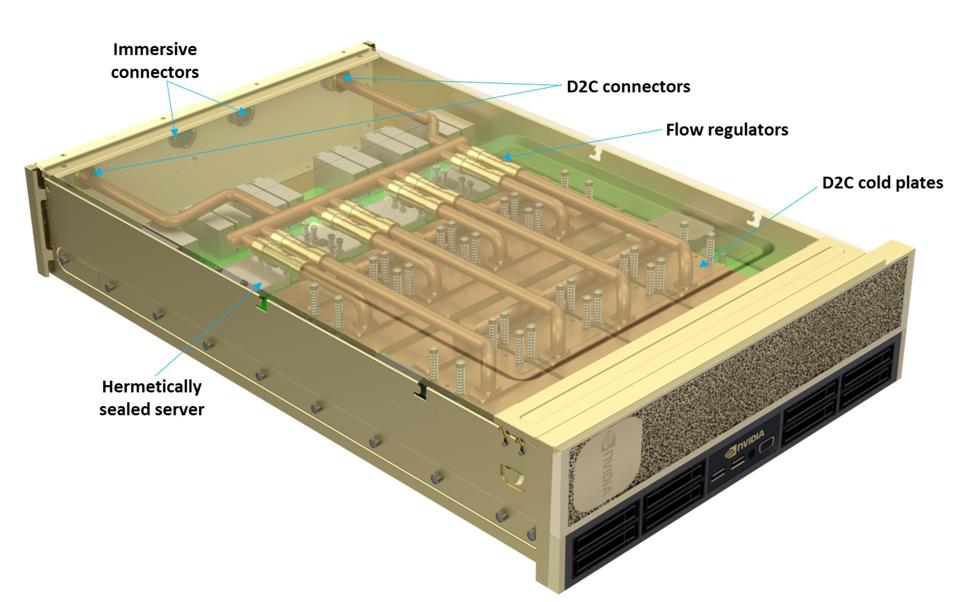

The NVIDIA COOLERCHIPS application describes a system combining two proven approaches: direct liquid cooling (DLC) and immersion cooling. DLC is well-trod ground, with numerous solutions on the market. However, DLC becomes less effective as power density increases.

Immersion cooling, where electronics are submerged in a dielectric or other fluid, can effectively enable high-density compute. However, current immersion cooling approaches typically require submerging servers in large fluid-filled tanks. While this approach works in many scenarios, such as edge installations, it can be cumbersome to implement in a traditional rack-oriented data center.

NVIDIA describes its COOLERCHIPS approach as a blend of these technologies. NVIDIA will use traditional DLC attached to the CPUs and accelerators in the system, while also filling the chassis with fluid that turns the server into an immersion tank. This allows the temperature zones within the system to be managed independently, all within a single solution that lives in a traditional rack.

NVIDIA isn’t pursuing this project alone, instead tapping the expertise of seven technology and research partners. NVIDIA’s internal team of roughly a dozen engineers is working with BOYD Corporation for its cold plate technology, Durbin Group for the pumping system, Honeywell to help select fluids, and Vertiv Corporation for its heat rejection technology. In addition, the company is tapping Binghamton and Villanova universities to help with analysis, testing, and simulation, while also working with Sandia National Laboratories for reliability assessment.

NVIDIA said in a blog post that its COOLERCHIPS project will deliver three annual milestones. The first year will see component tests completed. The following year will see a partial rack evaluated, with a fully system-tested solution ready at the end of the third year.

Analysis

The accelerator-rich, AI-enabled data center needs new approaches to cooling. Current practices lead to genuine operational complexities, adding costs that can dramatically impact an enterprise’s bottom line. Therefore, solving data center cooling challenges is paramount to the future of accelerated computing.

NVIDIA is far from alone in exploring innovative solutions to data center cooling challenges. The Open Compute Project (OCP) has long had a working group focused on cooling technology, with several exciting offshoots emerging. Every tier-one server OEM offers some variant of a rack-level liquid-cooled solution. And there are numerous players focused on single- and two-phase immersion cooling.

However, NVIDIA is nearly alone among its chipmaking peers in looking at novel cooling solutions. While Intel Corporation is exploring several approaches, including liquid immersion cooling, earlier this year the company canceled the $700M liquid cooling research facility it had planned to build in Oregon.

NVIDIA and the other COOLERCHIPS grant recipients understand that the current solutions to data center cooling challenges are limited, a stopgap at best. NVIDIA’s COOLERCHIPS approach combines elements of known-effective cooling approaches, such as direct liquid cooling, with an interesting new approach to immersion cooling.

If NVIDIA can deliver a solution that keeps pace with the increased power densities without forcing IT architects to rethink the infrastructure, the company will win. I’m anxious to see what NVIDIA and its COOLERCHIPS partners deliver. And so are a lot of data center architects.