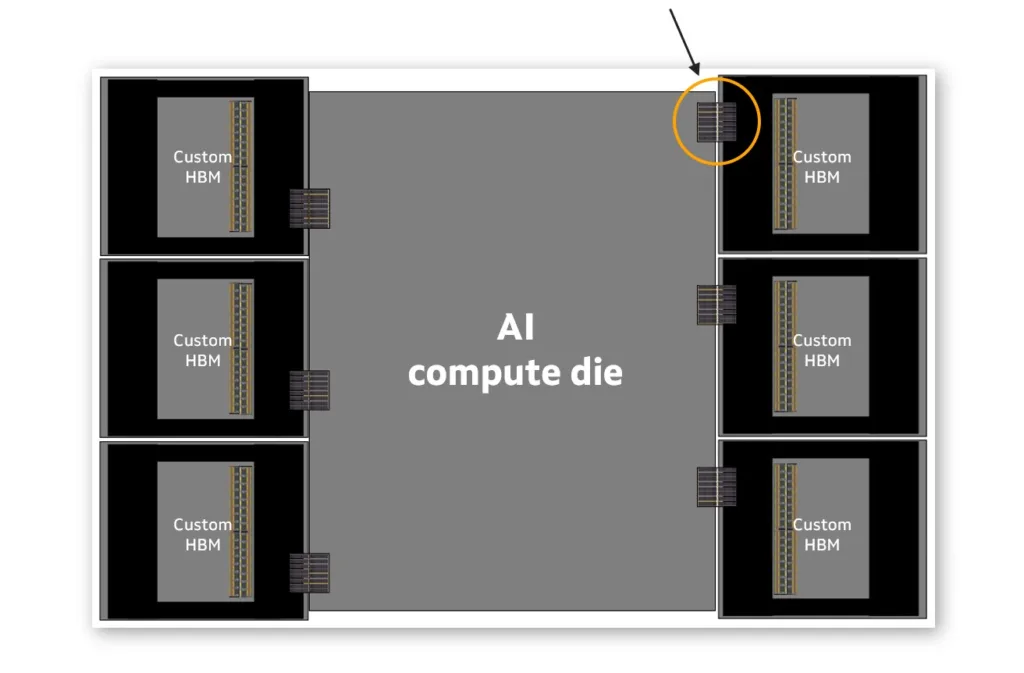

Research Note: Marvell Custom HBM for Cloud AI

Marvell recently announced a new custom high-bandwidth memory (HBM) compute architecture that addresses the scaling challenges of XPUs in AI workloads. The new architecture enables higher compute and memory density, reduced power consumption, and lower TCO for custom XPUs.

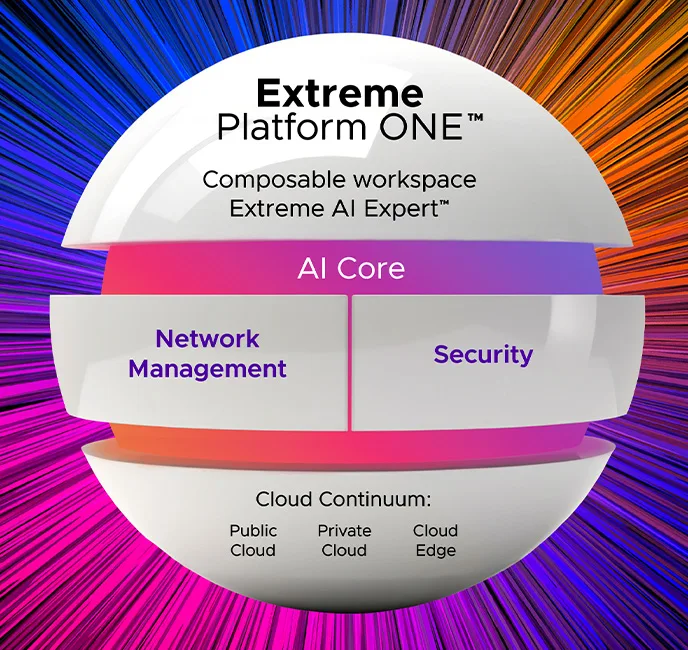

Extreme Networks Platform ONE: A New Approach to AI-Driven Network Management

Extreme Networks recently announced the launch of Extreme Platform ONE, its comprehensive management platform integrating networking, security, and AI to streamline enterprise infrastructure operations.

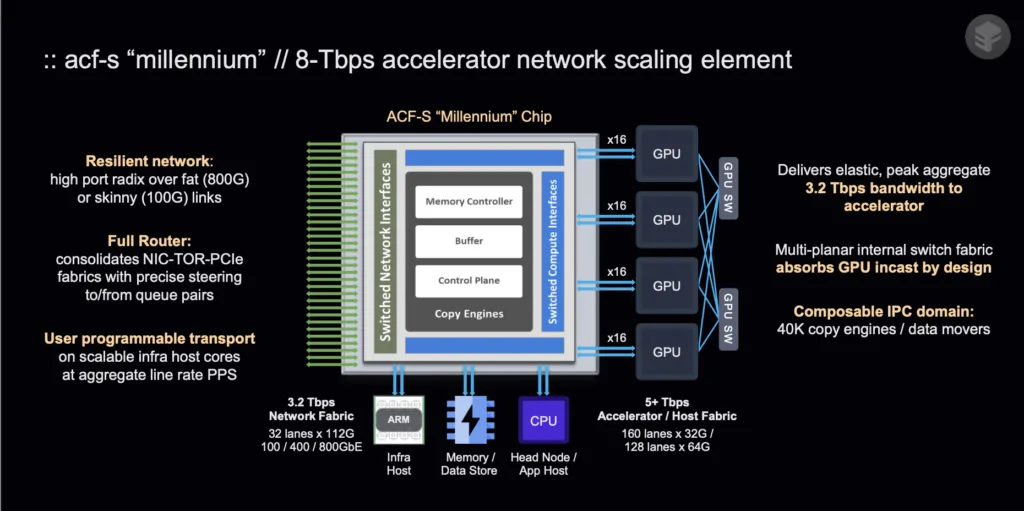

Research Note: Enfabrica ACF-S Millennium

Enfabrica recently announced that its ACF-S “Millennium” chip, first detailed at Hot Chips 2024, will be available to customers in calendar Q1 2025. The chip addresses the limitations of traditional networking hardware for AI and accelerated computing workloads.

Research Note: Cohesity & Veritas Complete Merger

Cohesity completed its long-awaited merger with Veritas’ enterprise data protection business. The combined entity, valued at $7 billion, now serves 12,000 customers globally and generates $1.5 billion in ARR.

Research Note: Dell AI Products & Services Updates

Dell Technologies has made significant additions to its AI portfolio with its recent announcements at SC24 and Microsoft Ignite 2024 in November. The announcements span infrastructure, ecosystem partnerships, and professional services, targeting accelerated AI adoption, operational efficiency, and sustainability in enterprise environments.

Understanding AI Data Types: The Foundation of Efficient AI Models

AI datatypes aren’t just a technical detail; they are a critical factor that affects performance, accuracy, power efficiency, and even the feasibility of deploying AI models.

Understanding the datatypes used in AI isn’t just for hands-on practitioners. Published benchmarks and other performance numbers are often broken out by datatype (just look at an NVIDIA GPU data sheet). What does it all mean?
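As a rough illustration of why datatype matters, here is a minimal Python sketch. It is not taken from the article itself: the 7-billion-parameter model size is a hypothetical example, and the helper names `weight_memory_gib` and `round_trip_fp16` are made up for illustration. It estimates how much memory a model's weights need at a few common bit widths, and shows the rounding that happens when a value is stored in 16-bit floating point.

```python
import struct

# Bytes per parameter for a few common numeric formats (standard bit widths).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Approximate memory needed just to hold the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


def round_trip_fp16(x: float) -> float:
    """Store x as IEEE 754 half precision, then read it back as a Python float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical model size, for illustration only
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gib(params, dtype):.1f} GiB")

    # 0.1 cannot be represented exactly in half precision, so it comes back changed.
    print(round_trip_fp16(0.1))
```

Halving the bit width halves the weight footprint, at the cost of coarser values. This sketch counts weights only; activations, optimizer state, and caches add more on top.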

Research Note: AWS Trainium2

Trainium is AWS’s machine learning accelerator. This week at its re:Invent event in Las Vegas, AWS announced the second generation, the cleverly named Trainium2, purpose-built to enhance the training of large-scale AI models, including foundation models and large language models.

Quick Take: AWS re:Invent AI Services & Infrastructure Announcement Wrap-Up

AWS made close to 50 announcements in the first two days of re:Invent. This blog takes a look at the most interesting ones related to AI services and infrastructure.

Quick Take: AWS re:Invent Day 1

AWS unveiled a range of new features and services, reflecting its continued focus on innovation across generative AI, compute, and storage. These announcements include enhancements to Amazon Bedrock for improved testing and data integration, new capabilities for the generative AI assistant Amazon Q, high-performance storage-optimized EC2 instances, and advanced storage solutions like intelligent tiering and a dedicated data transfer terminal.

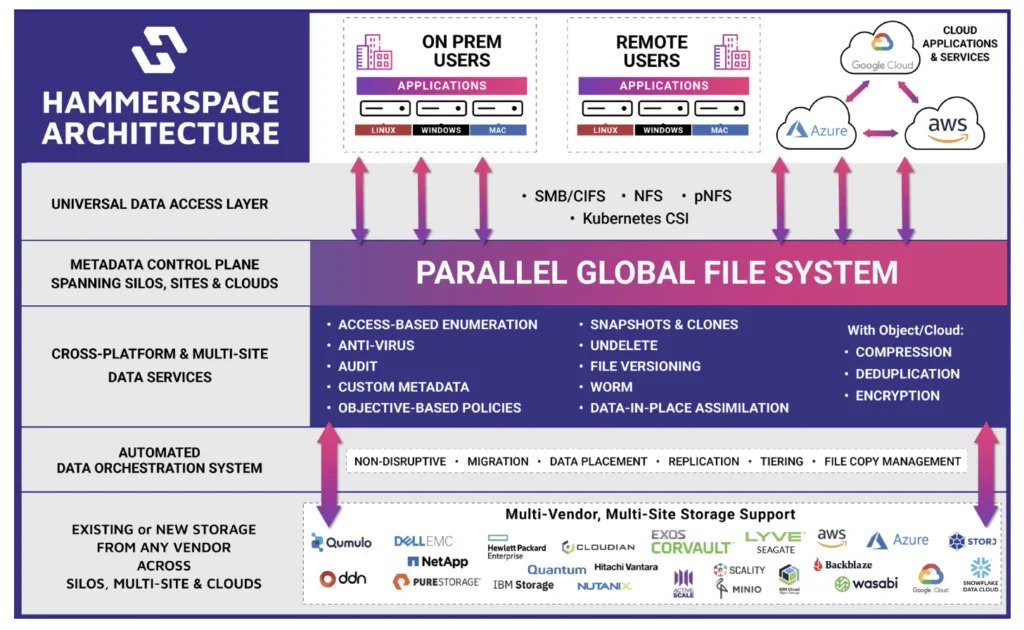

Research Note: Hammerspace Global Data Platform v5.1 with Tier 0

Hammerspace recently announced the version 5.1 release of its Hammerspace Global Data Platform. The flagship feature of the release is its new Tier 0 storage capability, which takes unused local NVMe storage on a GPU server and uses it as part of the global shared filesystem. This provides higher-performance storage for the GPU server than can be delivered from remote storage nodes, which makes it ideal for AI and GPU-centric workloads.

Quick Take: Snowflake Acquires Datavolo

Snowflake recently announced its acquisition of Datavolo, a data pipeline management company, to enhance its capabilities in automating data flows across enterprise environments.

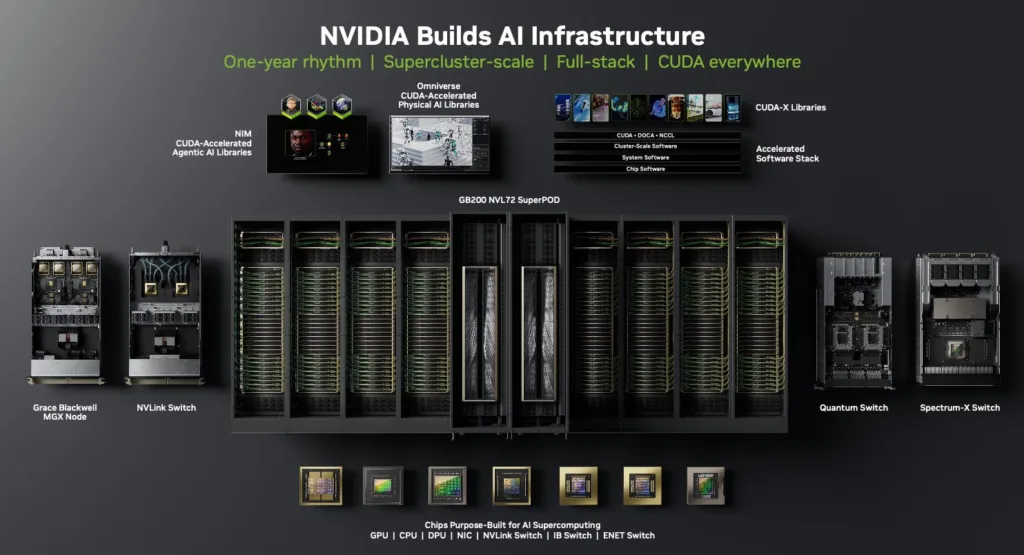

Research Note: NVIDIA SC24 Announcements

At the recent Supercomputing 2024 (SC24) conference in Atlanta, NVIDIA announced new hardware and software to enhance AI and HPC capabilities. The announcements include the new GB200 NVL4 Superchip, the general availability of its H200 NVL PCIe, and several new software capabilities.

Quick Take: NTT Data Acquires Niveus Solutions

NTT Data, a global IT services leader, announced its acquisition of Niveus Solutions, a cloud engineering firm specializing in Google Cloud Platform (GCP) services. The deal brings a strategic addition to NTT Data’s arsenal, enhancing its position in the cloud services ecosystem and advancing its partnership with Google Cloud.

IT Infrastructure Round-Up: Emerging Trends in Infrastructure and Connectivity (Nov 2024)

November was a busy month of announcements in infrastructure and connectivity, revealing a convergence of innovation to meet the stringent demands of AI-driven workloads, scalability, and cost efficiency.