When a big Belgian with a well-earned name like “Smasher” looks you in the eye and speaks, you listen. You listen even more intently when you learn that Stefaan “Smasher” Desmedt is the rock band U2’s technical and video director for its heavily publicized residency at the MSG Sphere in Las Vegas. He’s been with the band for over twenty years and told me much of what you’re about to read. I believed him when he said that Weka.io (WEKA) is critical to the success of U2 at the Sphere.

The Sphere is a miracle of modern engineering, an unparalleled blend of architecture and technology. The largest spherical building in the world, it seats over 18,000 people and stands 366 feet tall. The Sphere stretches 516 feet at its broadest point, and there’s not a bad seat in the house.

Imposing as it is, it’s not the size of the building that we’re here to explore. Instead, we’ll look at how Smasher and his team struggled to find a data solution capable of keeping real-time graphics flowing into the highest-resolution LED screen in the world, all to satisfy the demands of arguably the biggest rock band in the world.

The U2 team chose WEKA, which already has a strong track record in the media and entertainment space, to provide the data infrastructure for its Sphere shows. The challenges are unique, although many of them also arise in HPC and AI training workloads.

This isn’t a case study, but rather an example of how the demands of one market segment sometimes require technology designed for another. Digital transformation affects the data management needs of every enterprise, even if that enterprise happens to be a rock band playing to 18,000 people a night.

Storage in Media & Entertainment

Media and Entertainment (M&E) places some of the harshest demands that a storage array or file system will ever see, rivaling or exceeding those of most AI systems.

Here’s an example: an hour of rendered 4K video or motion graphics can consume up to a terabyte of storage. Playing back that video can require up to 85 Mbps of bandwidth per stream. Editing that video requires latencies more common in video games than in a typical IT department. That’s for a standard production. The Sphere, however, is not standard by any measure.
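A quick unit conversion shows how those two figures relate. The sketch below assumes decimal units (1 TB = 10^12 bytes) and a constant bit rate, and converts storage per hour into a sustained bit rate and back. One terabyte per hour works out to roughly 2.2 Gbps, while an 85 Mbps stream fills only about 38 GB per hour, so the two numbers presumably describe different encodings (for example, a high-quality working format versus a compressed playback stream). That interpretation is an assumption, not something the figures themselves state.

```python
SECONDS_PER_HOUR = 3600


def tb_per_hour_to_mbps(tb_per_hour: float) -> float:
    """Average bit rate (Mbps) implied by `tb_per_hour` decimal terabytes per hour."""
    bits_per_hour = tb_per_hour * 1e12 * 8  # terabytes -> bytes -> bits
    return bits_per_hour / SECONDS_PER_HOUR / 1e6  # bits/hour -> megabits/second


def mbps_to_gb_per_hour(mbps: float) -> float:
    """Decimal gigabytes consumed by one hour of a constant `mbps` stream."""
    bytes_per_hour = mbps * 1e6 * SECONDS_PER_HOUR / 8  # megabits/s -> bytes/hour
    return bytes_per_hour / 1e9


if __name__ == "__main__":
    print(f"1 TB per hour -> {tb_per_hour_to_mbps(1):,.0f} Mbps")
    print(f"85 Mbps       -> {mbps_to_gb_per_hour(85):.2f} GB per hour")
```

The gap of more than an order of magnitude between the two rates is why storage sized for playback alone can fall short once editing and rendering enter the picture.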

The curved wall inside the Sphere is covered by the world’s largest and highest-resolution LED screen, built by Canadian LED display company SACO Technologies. Spanning 160,000 square feet, the display isn’t 4K but 16K, a higher resolution than 70mm IMAX film.

A 16K video frame contains roughly 132 megapixels, 16 times as many pixels as a 4K frame, and an hour of 16K footage can require up to 70 TB of storage. Every song U2 plays during a Sphere show is backed by an ever-changing mix of 16K motion graphics that can never fail.
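The 16K arithmetic can be checked the same way. This sketch assumes “16K” means a nominal 15,360 × 8,640 frame, four times the width and height of UHD 4K (3,840 × 2,160); the Sphere’s curved display has its own native geometry, so treat these as nominal figures. It also converts the 70 TB-per-hour figure into the average read rate real-time playback would need, which comes to roughly 19.4 GB/s, or about 156 Gbps.

```python
# Assumption: "16K" = 15,360 x 8,640, i.e., 4x the width and 4x the height of UHD 4K.
UHD_4K = (3840, 2160)
NOMINAL_16K = (15360, 8640)


def pixels(resolution: tuple[int, int]) -> int:
    """Total pixel count for a (width, height) frame."""
    width, height = resolution
    return width * height


def sustained_gb_per_s(tb_per_hour: float) -> float:
    """Average read rate (decimal GB/s) to play `tb_per_hour` of footage in real time."""
    return tb_per_hour * 1e12 / 3600 / 1e9


if __name__ == "__main__":
    print(f"16K frame: {pixels(NOMINAL_16K) / 1e6:.1f} MP")
    print(f"ratio to 4K: {pixels(NOMINAL_16K) / pixels(UHD_4K):.0f}x")
    rate = sustained_gb_per_s(70)
    print(f"70 TB/hour: {rate:.1f} GB/s (~{rate * 8:.0f} Gbps)")
```

Doubling both width and height twice is what turns a 4x increase in linear resolution into a 16x increase in pixels, and that is why data volumes grow so much faster than the “4K to 16K” label suggests.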

Before a video can be played back, it must first be created. Some of U2’s content already existed: the team had more than 500 terabytes of existing content and archival footage stored on servers in the United Kingdom.

Other content must be created fresh. The U2 team relies on a globally dispersed cadre of animators, videographers, and motion graphics artists to provide its content. These craftspeople must create their content, render it in 16K, and then deliver it to Las Vegas.

This isn’t a one-time effort either: U2 continually revises the show, making data transfer an ongoing challenge. Or, as Smasher put it, “you can’t just ship hard drives every day.”
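Back-of-the-envelope math shows why data movement is a standing problem rather than a one-time chore. The sketch below estimates how long the 500 TB archive would take to cross a network link; the link speeds and the 80% efficiency factor are illustrative assumptions, not details of U2’s actual connectivity. At a nominal 10 Gbps with no overhead, the archive alone needs about 4.6 days.

```python
def transfer_days(terabytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days needed to move `terabytes` (decimal) over a link of `link_gbps`.

    `efficiency` is the assumed fraction of nominal bandwidth actually achieved;
    real throughput depends on protocol, latency, and tuning.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = terabytes * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 86_400  # seconds per day


if __name__ == "__main__":
    for gbps in (1, 10, 100):  # illustrative link speeds, not U2's
        print(f"{gbps:>3} Gbps: {transfer_days(500, gbps, efficiency=0.8):.1f} days")
```

Even the fastest link in the loop only brings a full copy down to hours, which is why a shared namespace that moves only what changes is more practical than repeatedly copying whole archives.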

This concern isn’t specific to U2 at the Sphere. Remote animators and artists work on video and movie productions every day. It’s a problem shared by nearly every movie studio and video production company, from DreamWorks to Marvel.

The challenges involved also extend beyond just data transfer. Content is often shared in real time, requiring a global namespace that can deliver the right balance of performance and security. This isn’t a problem that can be solved with a traditional NAS or SAN. It requires a distributed file system that can span cloud boundaries.

Every major storage vendor offers high-performance storage, and a handful of distributed file systems could also potentially meet the needs of an environment as demanding as U2 at the Sphere.

Smasher said that he looked at many of these solutions and talked to his peers in the entertainment world about their experiences before landing on WEKA. When asked how much WEKA contributed to the show’s success, Smasher said that “it would have been impossible without WEKA.” I believed him.

WEKA’s Approach

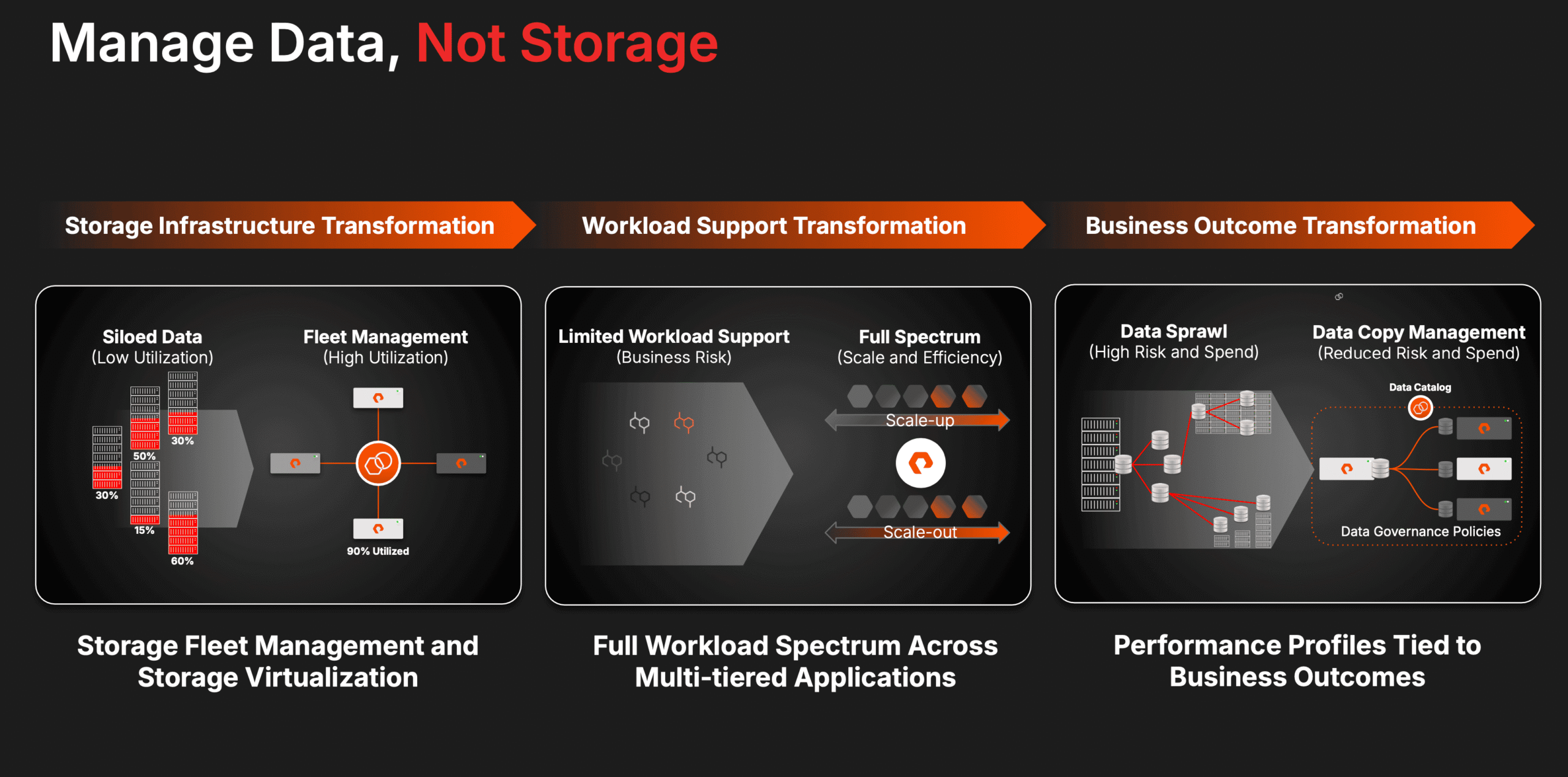

WEKA’s solution is built around a software-defined storage model. Separating the storage software from the hardware lets WEKA run on diverse platforms, including commodity hardware and cloud environments, which gives customers flexibility and cost efficiency.

At its core, WEKA runs a distributed file system engineered for scalability and high throughput. As the system scales horizontally with the addition of more storage nodes, performance remains consistent.

WEKA employs a dynamic tiered storage model, automatically moving data between different storage tiers—from high-speed SSDs to cost-effective HDDs—based on access patterns and performance requirements.
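The idea behind access-based tiering can be illustrated with a toy model. The sketch below is a hypothetical least-recently-used (LRU) policy over two tiers, with the fast tier standing in for SSDs and the capacity tier for HDDs. The class and method names are invented for illustration, and WEKA’s actual placement logic is not described in this article and will differ.

```python
from dataclasses import dataclass, field


@dataclass
class TieredStore:
    """Hypothetical two-tier store: a small fast tier and a large capacity tier.

    Toy LRU policy for illustration only; not WEKA's algorithm.
    """

    fast_capacity: int  # max number of objects kept on the fast tier
    fast_tier: dict[str, int] = field(default_factory=dict)  # name -> last-access tick
    capacity_tier: set[str] = field(default_factory=set)  # names on the capacity tier
    clock: int = 0

    def write(self, name: str) -> None:
        # New or rewritten data lands on the fast tier first.
        self.capacity_tier.discard(name)
        self._touch(name)

    def read(self, name: str) -> str:
        # Report which tier served the read, then promote the object to the fast tier.
        if name in self.fast_tier:
            tier = "fast"
        elif name in self.capacity_tier:
            tier = "capacity"
        else:
            raise KeyError(name)
        self.capacity_tier.discard(name)
        self._touch(name)
        return tier

    def _touch(self, name: str) -> None:
        self.clock += 1
        self.fast_tier[name] = self.clock
        self._demote_if_needed()

    def _demote_if_needed(self) -> None:
        # Move the least recently used objects down until the fast tier fits.
        while len(self.fast_tier) > self.fast_capacity:
            coldest = min(self.fast_tier, key=self.fast_tier.get)
            del self.fast_tier[coldest]
            self.capacity_tier.add(coldest)


if __name__ == "__main__":
    store = TieredStore(fast_capacity=2)
    for clip in ("intro.mov", "verse.mov", "chorus.mov"):
        store.write(clip)
    print(store.read("intro.mov"))  # intro was demoted, so it is served from the capacity tier
```

LRU is only one possible rule; a real tiering engine could also weigh file size, access frequency, or administrator-defined policies when deciding what to move.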

Scalability is a defining feature of WEKA’s architecture: the system can absorb growing data workloads without compromising performance, which is essential when data volumes grow as quickly as they do in productions like U2’s.

WEKA also provides management tools that offer granular insight into system performance, capacity utilization, and overall health, enabling administrators to manage and optimize the system proactively.

WEKA’s ability to meet the production’s performance, scalability, data protection, and security needs made it a natural choice for the U2 team. The deployed solution manages about 1.5 PB of content for the show and serves as a global namespace for the artists generating that content.

Analysis

WEKA’s roots lie in solving hard, large-scale data problems in high-performance computing, many of which mirror the challenges of the M&E space. That heritage also gives WEKA the capabilities it needs to compete in the emerging generative AI market, where performance and scalability are paramount.

This market is filled with competent competitors, including companies with distributed file system technology, such as VAST Data, IBM with its Storage Scale solution (previously Spectrum Scale), and Panasas. Many M&E companies also look to more traditional storage solutions or even native storage solutions from public cloud providers. However, none of those solutions could meet the unique demands of U2 at the Sphere.

WEKA is experiencing a period of rapid growth and expansion, and it’s well funded to support that growth. Last November, it raised $135 million in an oversubscribed Series D funding round, doubling its previous valuation despite global market volatility.

WEKA and Stability AI recently announced that the two are collaborating to develop a “converged cloud” solution that provides the data infrastructure needed to train generative AI models efficiently in the cloud. WEKA calls this its Converged Mode for Cloud solution. WEKA also revealed that it’s partnering with HPC company Applied Digital to provide the data infrastructure for Applied Digital’s GPU Cloud for generative AI.

WEKA stands at the forefront of a transformative era in data management, with an innovative approach to high-performance data storage in M&E, AI/ML, and high-performance computing. Despite an impressive roster of competitors, WEKA is uniquely positioned to address the growing volume and complexity of data in these fields.

As industries rely ever more heavily on processing vast amounts of data quickly, WEKA’s scalable, efficient solutions are set to become even more integral, fueling the next wave of digital innovation.