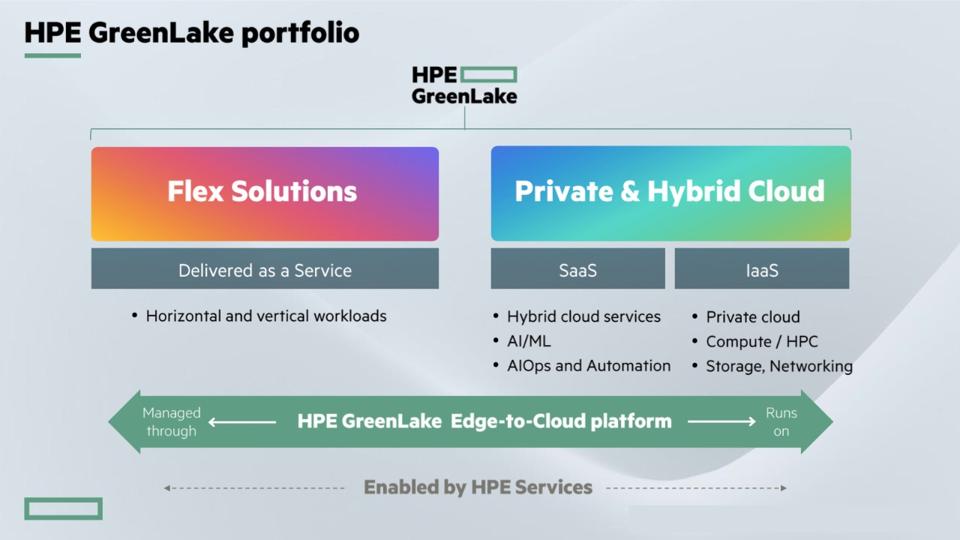

Hewlett Packard Enterprise has always taken a decidedly infrastructure-centric approach to delivering flexible consumption-based compute, storage, and networking to enterprise IT. HPE provides that flexibility through its popular HPE GreenLake offerings.

Last week at its annual HPE Discover event in Las Vegas, HPE introduced the first of what the company says will be many domain-specific AI applications delivered as a service. The move elevates HPE above its OEM peers, which deliver only traditional infrastructure solutions, and places the company directly in the path of enterprise digital transformation.

Generative AI in the Enterprise

We have yet to determine where the flurry of activity around generative AI is ultimately leading us. Still, the technology is already changing how businesses across industries think about customer engagement, decision-making, marketing, workflow automation, and a dozen other tasks. The innovation around Large Language Models (LLMs), such as the GPT models behind ChatGPT, is just getting started, and the list of use cases is growing by the day.

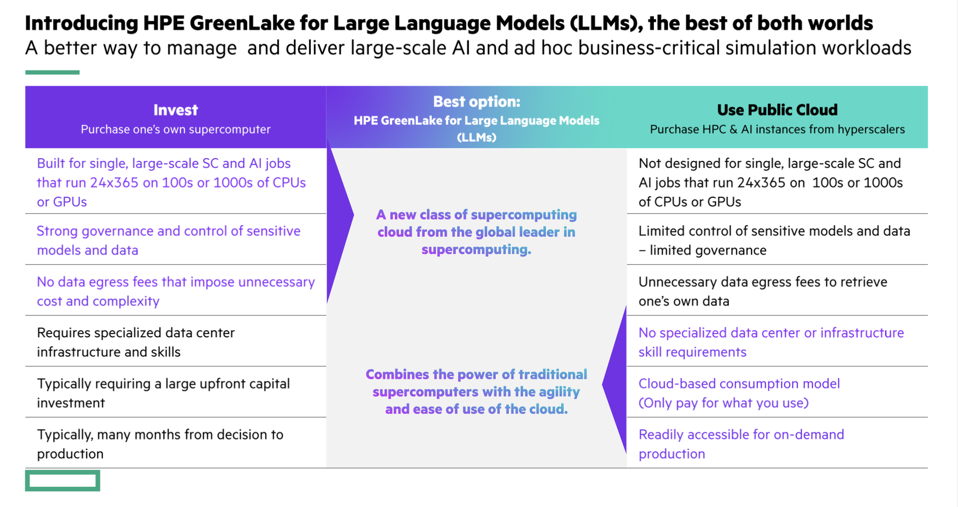

The challenge for IT organizations embracing LLMs is twofold: the IT infrastructure required to support LLM training is complex and expensive, and existing LLMs are trained on a broad corpus of general data, which can make it difficult to customize the technology for a particular application.

Solving the second problem, customizing the model with an organization's own data, often leads straight to the first: building and managing a complex AI infrastructure. Both challenges must be addressed, because an LLM is fully relevant to an organization only when it has been trained or fine-tuned on organization-specific data.

A growing number of cloud services offer ChatGPT-as-a-Service, including Microsoft’s Azure OpenAI Service and ChatGPT’s originator, OpenAI, itself. Plenty of cloud providers are also willing to rent out the infrastructure required to train and operate a large language model; just pick your favorite CSP. What had been missing from the mix was an enterprise-class, consumption-based offering for training and operating LLMs. That changed last week, when HPE introduced HPE GreenLake for Large Language Models.

HPE GreenLake for Large Language Models

HPE GreenLake for LLMs gives users direct access to a pre-configured LLM stack running on a multi-tenant Cray XD supercomputer. Enterprises can train, tune, and deploy large-scale AI privately without worrying about the underlying platform, and the infrastructure scales to thousands of CPUs and GPUs to match each customer’s needs. It’s almost supercomputer-as-a-service.

The Aleph Alpha Luminous Model

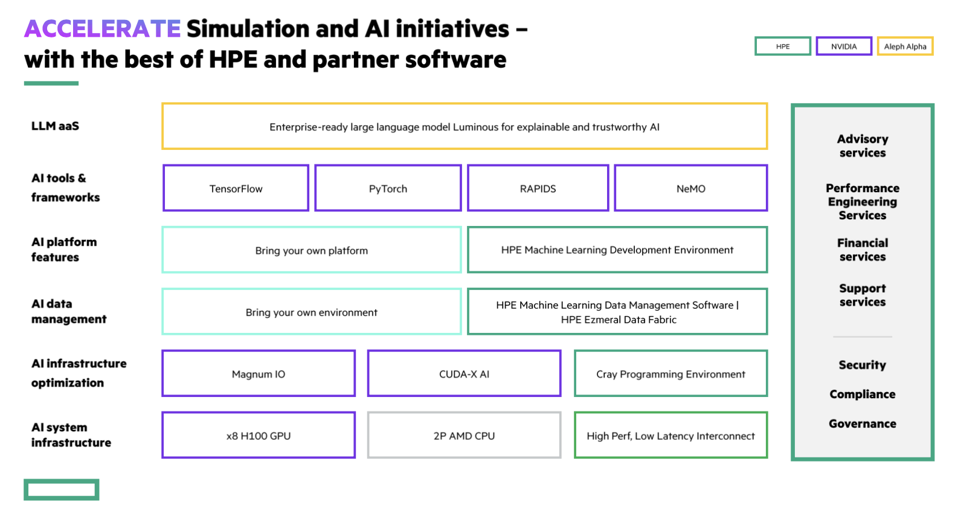

HPE worked with Aleph Alpha, a German AI company, to provide users with a pre-trained LLM. Aleph Alpha’s Luminous model lets organizations train and fine-tune a customized model on their own data, so the resulting LLM benefits from the enterprise’s proprietary information. Luminous is available in multiple languages, including English, French, German, Italian, and Spanish.

Based on a Cray XD Supercomputer

For as long as most IT practitioners can remember, many of the highest-performing computers in the world have come from Cray, which became part of HPE in 2019. The latest-generation Cray XD-series supercomputers offer not only an enormous amount of raw compute capability but also a design built for scalability. The Cray XD I/O subsystem moves data between nodes and storage devices fast enough to keep the GPUs busy during LLM training rather than stalled waiting for data. It’s almost impossible to deliver this level of performance in a traditional enterprise data center.

HPE GreenLake for LLMs is based on an unspecified Cray XD model in which each node pairs two latest-generation AMD EPYC processors with eight NVIDIA H100 Tensor Core GPUs. The nodes are linked by Cray’s high-performance, low-latency interconnect.

The NVIDIA H100 is arguably the best accelerator available today for LLM training. In the MLPerf Training v3.0 results released yesterday, the H100 set a per-accelerator performance record on the new GPT-3-based LLM training benchmark. You don’t have to know the underlying hardware when buying LLMs as a service, but it’s nice to know that HPE GreenLake is building its service on industry-leading technology.

Fully Tuned AI Software Stack

The software story for LLMs is no less complex than the hardware, requiring multiple layers of special-purpose frameworks, tools, and libraries that must be expertly configured. It’s a lot to take on. Fortunately, HPE does the heavy lifting for you. HPE GreenLake for LLMs includes today’s most popular AI tools and frameworks along with the necessary NVIDIA software stack, all tied together with HPE’s own HPE Machine Learning Development Environment, HPE Machine Learning Data Management Software, and HPE Ezmeral Data Fabric.

Analysis

HPE’s story for GreenLake is that it offers traditional private- and hybrid-cloud capabilities, supplemented by what HPE terms “Flex Solutions” that target horizontal and vertical workloads. GreenLake for LLMs extends that approach into targeted AI solutions.

The company promises that GreenLake for LLMs won’t be its last workload-as-a-service play, teasing upcoming AI-as-a-Service offerings for climate modeling, drug discovery, financial services, and whatever else its customer base might ask for. This expands HPE GreenLake from its infrastructure-focused beginnings into a range of services that look increasingly cloud-like.

I like where HPE is taking GreenLake. The company’s strategy is sound, and the need for the solution is real. Leveraging its Cray assets for demanding AI workloads is simply smart. HPE is, however, forcing us to think about the company in a different light. Is it a traditional infrastructure company that has a consumption-based offering? Or is HPE a new kind of cloud provider? The lines are rapidly blurring.

No other technology company is offering anything comparable to what HPE is delivering with its expanded GreenLake solutions. HPE is providing the flexible, consumption-based infrastructure model promised by the cloud, delivered on-prem or in a co-lo. If you have AI or HPC workloads that require infrastructure more complex than you want to buy and maintain, HPE plans to offer it as GreenLake AI-as-a-Service. GreenLake for LLMs is just the first such offering. I’m excited to see what’s next.