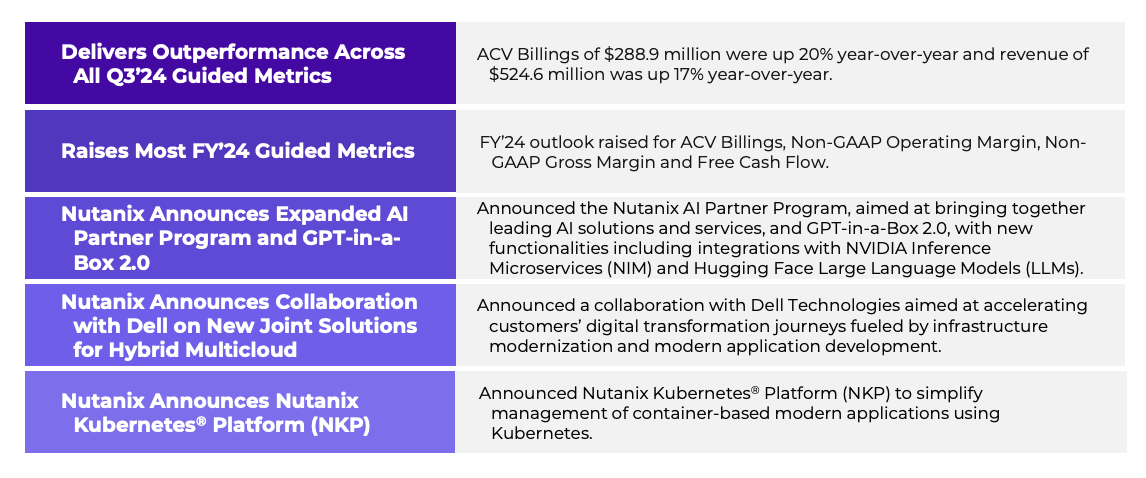

Earlier this month, Nutanix announced its new GPT-in-a-Box™ solution, which promises to simplify the implementation of Generative Pre-trained Transformer (GPT) capabilities. Nutanix’s solution aims to help organizations harness the power of AI while maintaining robust control over their data and applications.

New: Nutanix GPT-in-a-Box

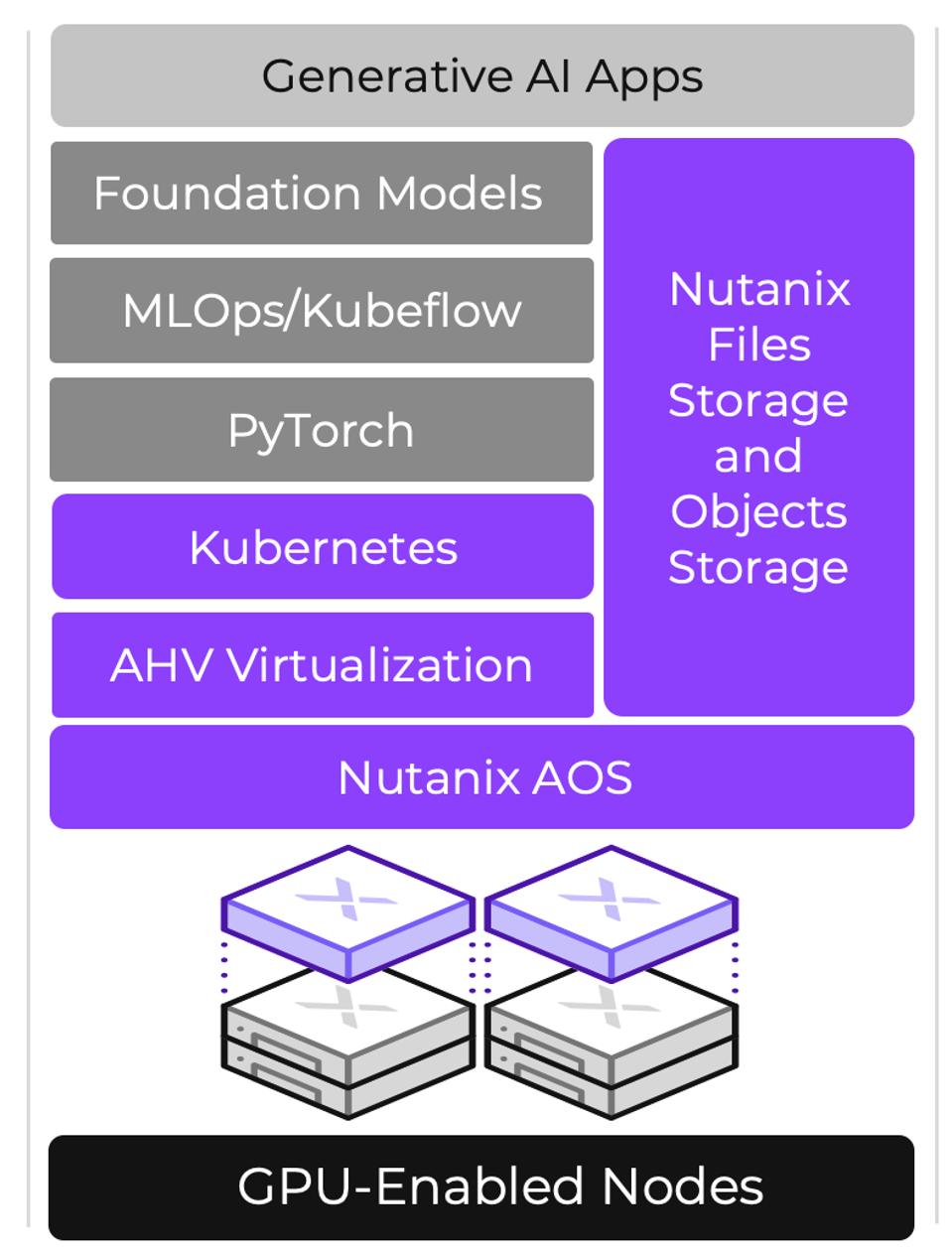

Nutanix’s new GPT-in-a-Box brings together the elements necessary to deploy a large language model (LLM) into production. GPT-in-a-Box includes:

- Nutanix Cloud Platform Infrastructure on GPU-enabled server nodes.

- Nutanix Files and Objects storage for running and fine-tuning LLMs.

- An open-source software stack for deploying and running generative AI workloads.

- Support for a curated set of LLMs, including Llama 2, Falcon, and MPT.

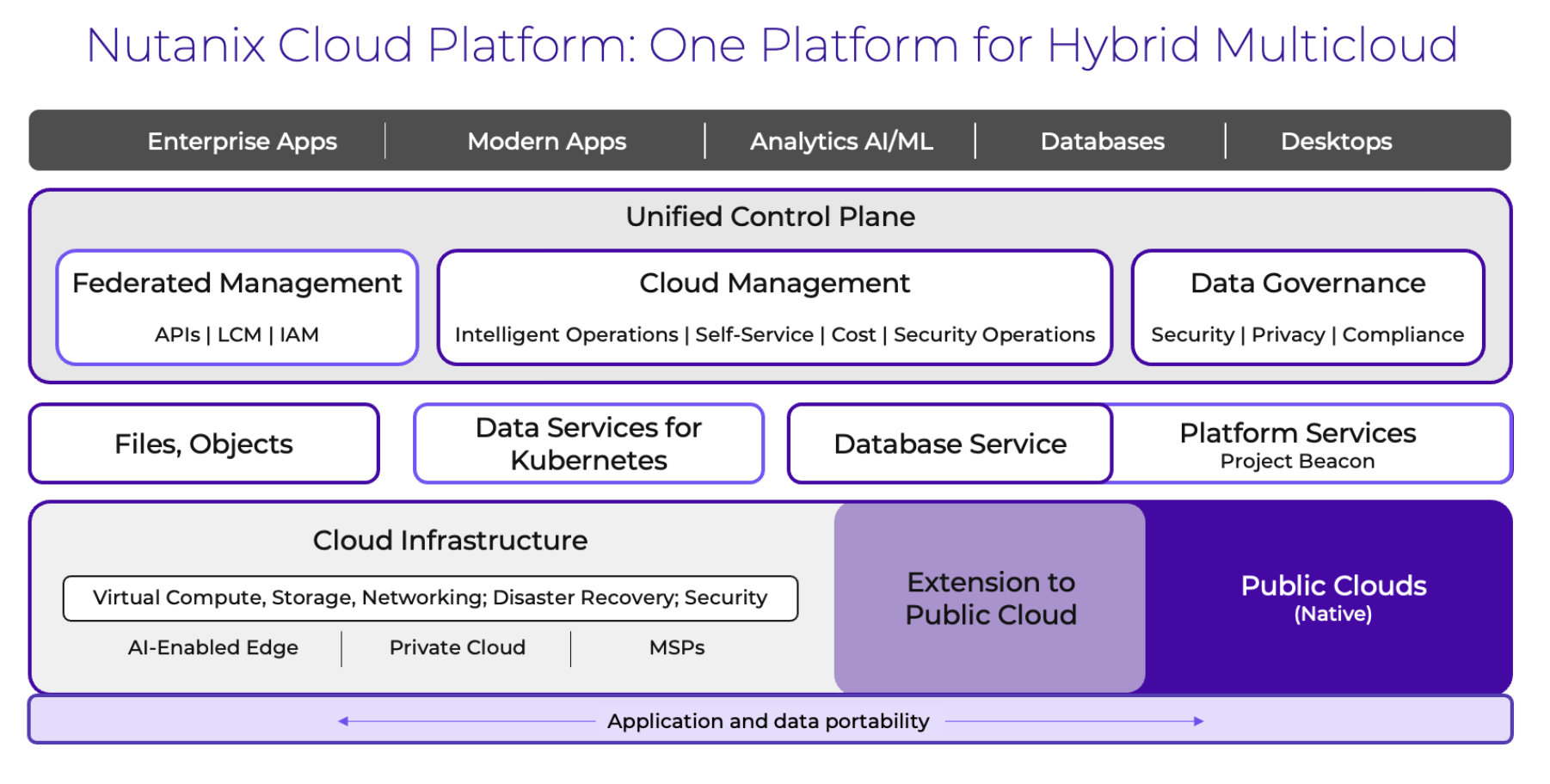

The new solution is built on Nutanix’s software-defined Nutanix Cloud Platform infrastructure, which supports GPU-enabled server nodes so that virtualized compute, storage, and networking can scale together. Notably, Nutanix Cloud Platform runs both conventional virtual machines and Kubernetes-managed containers.

With its support for file and object storage, GPT-in-a-Box offers the means to fine-tune and run a variety of GPT models. It incorporates open-source software, including the PyTorch framework and the Kubeflow MLOps platform, for deploying and managing AI workloads. The management interface is available as an enhanced terminal UI or a standard CLI, and compatibility with a curated selection of LLMs rounds out the solution.

Nutanix GPT-in-a-Box takes a multifaceted approach to the complexity, scalability, and security challenges of deploying generative AI. One of its key strengths is that it lets enterprises retain exclusive ownership of AI content, in line with stringent security, privacy, and compliance mandates.

The solution’s focus on simplification shows in how it supports building, fine-tuning, and running models, including GPTs and LLMs, without extensive retraining of existing teams or the acquisition of entirely new skill sets. That speeds AI integration, while the cloud operating model helps keep IT spending in check as deployments scale.

Analysis

Nutanix’s GPT-in-a-Box promises to revolutionize the implementation of Generative Pre-trained Transformer (GPT) capabilities for organizations seeking to harness the power of AI while maintaining robust control over their data and applications. In a landscape where AI adoption is accelerating at an unprecedented pace, addressing the challenges of data privacy, sovereignty, and governance becomes paramount.

The biggest competition for Nutanix’s new offering comes from AI-as-a-Service offerings, such as HPE’s recently announced LLM-as-a-Service, Azure’s ChatGPT-as-a-Service, and related services. VMware also announced its Private AI Foundation with NVIDIA this past week, which aims to solve problems similar to those Nutanix is targeting.

Nutanix GPT-in-a-Box comes as a response to the mounting complexity, scalability, and security concerns that often accompany the integration of generative AI and AI/ML applications. With it, the company aims to provide a comprehensive, software-defined, AI-ready platform with end-to-end services, streamlining and expediting AI initiatives from edge to core.

By extending its enterprise-class data services to AI/ML applications, Nutanix gives data scientists and IT practitioners a cloud operating model delivered on-prem, reducing operational complexity. The solution also improves cost efficiency for business stakeholders and streamlines the deployment of resilient infrastructure. With GPT-in-a-Box, Nutanix delivers a compelling solution that should help simplify the rollout of generative AI.