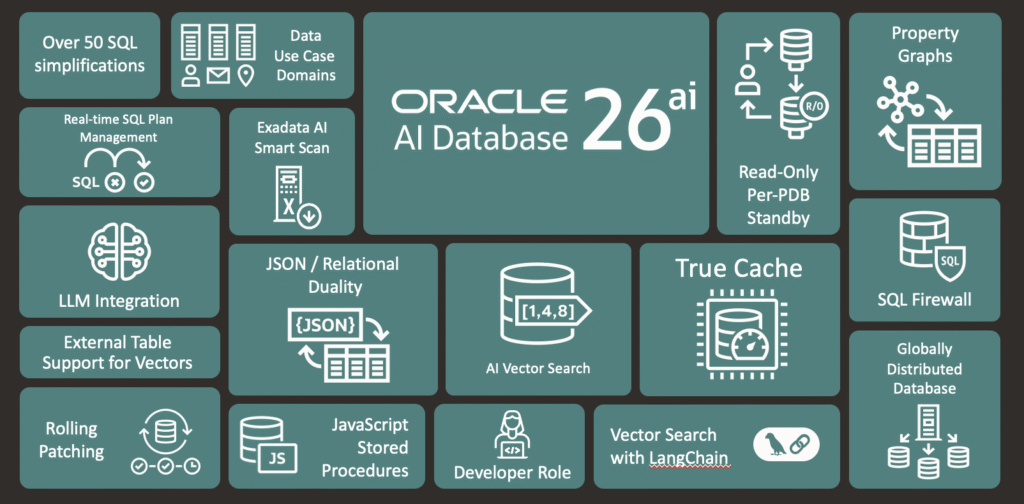

Oracle released Oracle AI Database 26ai at its recent AI World event in Las Vegas. The update turns the database into an integrated platform that embeds artificial intelligence capabilities across data management, development, and analytics operations.

The platform integrates vector search with traditional database functions, supports agentic AI workflows through in-database tools and Model Context Protocol (MCP) servers, and extends analytics through support for the Apache Iceberg table format. Oracle also bundles advanced AI features, including AI Vector Search, at no additional cost.

The release targets organizations seeking to implement AI capabilities while maintaining data within existing database infrastructure rather than moving data to specialized AI platforms.

Technical Details

Oracle AI Database 26ai consolidates multiple data processing paradigms within a single database engine, reducing the architectural fragmentation that typically characterizes enterprise AI implementations.

The platform unifies relational, document, graph, and vector data models, allowing applications to query the same data through SQL, JSON document APIs, or graph traversals without extract-transform-load (ETL) operations between systems.

Vector Search and Multimodal Retrieval

The updated platform implements Unified Hybrid Vector Search, which combines vector similarity operations with traditional database predicates in a single query statement.

Users can construct queries that blend vector embeddings with relational filters, full-text search conditions, JSON path expressions, graph pattern matching, and spatial predicates. This allows retrieval operations that return diverse content types within unified result sets.
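To make the hybrid pattern concrete, here is a minimal Python sketch of the logic such a query expresses: relational and keyword predicates narrow the candidate set, and vector distance ranks what remains. The `Doc` fields, the `hybrid_search` helper, and the sample data are hypothetical, and this is not Oracle's SQL syntax or its execution plan; a real optimizer may consult a vector index before or after applying filters.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: int
    region: str             # ordinary relational column
    text: str               # searched with a naive keyword predicate
    embedding: list[float]  # precomputed vector embedding


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def hybrid_search(docs, query_vec, region, keyword, k=2):
    """Apply relational and keyword predicates, then rank survivors by vector distance."""
    candidates = [d for d in docs if d.region == region and keyword in d.text.lower()]
    candidates.sort(key=lambda d: cosine_distance(d.embedding, query_vec))
    return [d.doc_id for d in candidates[:k]]


docs = [
    Doc(1, "EMEA", "Invoice dispute policy", [0.9, 0.1, 0.0]),
    Doc(2, "EMEA", "Invoice payment terms", [0.2, 0.8, 0.0]),
    Doc(3, "APAC", "Invoice dispute policy", [0.9, 0.1, 0.0]),
    Doc(4, "EMEA", "Holiday calendar", [0.9, 0.1, 0.0]),
]
print(hybrid_search(docs, [1.0, 0.0, 0.0], region="EMEA", keyword="invoice"))  # [1, 2]
```

Documents 3 and 4 sit close to the query vector but are excluded by the region and keyword predicates. A pipeline built on a separate vector store would often need to reconcile those conditions in application code.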

Oracle claims vector search operations benefit from Exadata intelligent storage offload, which pushes computation to storage servers rather than requiring data movement to database compute nodes.
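The benefit of storage offload for vector search can be illustrated with a generic scatter-gather pattern: each storage server scores its own rows and returns only a local top-k shortlist, and the compute node merges the shortlists. The sketch below is a hypothetical Python model of that idea (`cell_local_topk` and `offloaded_topk` are illustrative names), not a description of Exadata's internal algorithms.

```python
import heapq
import math


def l2(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cell_local_topk(cell_rows, query, k):
    """Runs 'on a storage server': score every local row, keep only the k closest."""
    return heapq.nsmallest(k, ((l2(vec, query), row_id) for row_id, vec in cell_rows))


def offloaded_topk(cells, query, k):
    """Runs 'on the compute node': merge per-server shortlists instead of all rows."""
    shortlists = [cell_local_topk(rows, query, k) for rows in cells]
    shipped = sum(len(s) for s in shortlists)  # rows that crossed the network
    merged = heapq.nsmallest(k, (item for s in shortlists for item in s))
    return [row_id for _, row_id in merged], shipped


# Two hypothetical storage servers, each holding (row_id, embedding) pairs.
cells = [
    [(1, [0.0, 0.0]), (2, [5.0, 5.0]), (3, [1.0, 0.0])],
    [(4, [0.5, 0.0]), (5, [9.0, 9.0]), (6, [4.0, 4.0])],
]
ids, shipped = offloaded_topk(cells, query=[0.0, 0.0], k=2)
print(ids, shipped)  # [1, 4] 4
```

Because every row in the global top-k must also appear in its own server's local top-k, the merge is exact, while only k rows per storage server cross the network instead of every row.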

The Exadata Exascale software architecture extends these capabilities to smaller-scale deployments.

The platform supports ONNX embedding model formats, enabling organizations to select from multiple model providers without vendor lock-in. Integration pathways include connections to external LLM providers, NVIDIA NIM containers for embeddings and RAG pipelines, and the Private AI Services Container for on-premises model execution.

Organizations concerned about data exfiltration can deploy embedding and inference operations within their own infrastructure boundaries.

Agentic AI Framework

AI Database 26ai introduces Select AI Agent, an in-database framework for defining, executing, and governing AI agents. The system supports three tool integration patterns: native database functions, external REST APIs, and MCP servers.

Agents operate within database transaction boundaries and security contexts, applying existing row-level security policies, column masking rules, and privilege constraints to AI-initiated operations.
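A minimal sketch of what operating within a security context means for tool calls: every agent-initiated call, whatever its integration pattern, is checked against the privileges of the session it runs in. `Session`, `Tool`, `invoke_tool`, and the privilege names are hypothetical; Select AI Agent's actual interfaces are not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    user: str
    privileges: set[str] = field(default_factory=set)


@dataclass
class Tool:
    name: str
    kind: str                # "db_function", "rest_api", or "mcp_server"
    required_privilege: str


class PermissionDenied(Exception):
    pass


def invoke_tool(session, tool, args):
    """Check an agent-initiated call against the invoking session's privileges."""
    if tool.required_privilege not in session.privileges:
        raise PermissionDenied(f"{session.user} lacks {tool.required_privilege} for {tool.name}")
    # A real dispatcher would call the function, REST endpoint, or MCP server here.
    return f"{tool.kind}:{tool.name}({args}) as {session.user}"


tools = {
    "lookup_order": Tool("lookup_order", "db_function", "SELECT_ORDERS"),
    "refund": Tool("refund", "rest_api", "ISSUE_REFUND"),
}
analyst = Session("analyst", {"SELECT_ORDERS"})
print(invoke_tool(analyst, tools["lookup_order"], {"id": 42}))
try:
    invoke_tool(analyst, tools["refund"], {"id": 42})
except PermissionDenied as err:
    print("blocked:", err)
```

In this model the agent holds no privileges of its own, so it cannot reach further than the user who invoked it.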

The Private Agent Factory provides a no-code visual builder for agent construction and deployment. This component runs as a containerized application within customer-controlled environments, whether public cloud tenancies, private clouds, or on-premises infrastructure.

This capability addresses data residency and privacy requirements by keeping agent logic and data access within customer perimeters.

The MCP Server integration allows agents to employ iterative reasoning patterns, where the language model can request additional context during analysis rather than operating on a single static prompt.
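The iterative pattern is essentially a loop in which the model either asks for more context or answers. The Python sketch below uses a scripted `stub_model` in place of an LLM and a dictionary lookup in place of an MCP tool call; all names and the message format are hypothetical and are not the MCP wire protocol.

```python
def stub_model(question, context):
    """Scripted stand-in for an LLM: requests context until it can answer."""
    if "q3_revenue" not in context:
        return {"action": "fetch", "key": "q3_revenue"}
    if "q3_costs" not in context:
        return {"action": "fetch", "key": "q3_costs"}
    margin = context["q3_revenue"] - context["q3_costs"]
    return {"action": "answer", "text": f"Q3 margin: {margin}"}


def run_agent(question, fetch, model=stub_model, max_steps=5):
    """Loop until the model answers; each fetch stands in for a tool call."""
    context = {}
    for _ in range(max_steps):
        step = model(question, context)
        if step["action"] == "answer":
            return step["text"], len(context)
        context[step["key"]] = fetch(step["key"])
    raise RuntimeError("agent did not produce an answer within max_steps")


data = {"q3_revenue": 120, "q3_costs": 85}
print(run_agent("What was the Q3 margin?", lambda key: data[key]))  # ('Q3 margin: 35', 2)
```

The `max_steps` cap matters in practice: without it, a model that keeps requesting context would never terminate.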

Oracle says this approach produces more accurate results for complex queries, though comparative accuracy metrics have not been published.

Data Lakehouse Integration

The Autonomous AI Lakehouse component adds Apache Iceberg table format support, enabling Oracle databases to read and write open lakehouse tables stored in object storage across OCI, AWS S3, Azure Blob Storage, and Google Cloud Storage.

Oracle tells us the implementation maintains Exadata performance characteristics when querying Iceberg tables and provides serverless scaling for variable workloads.

The lakehouse also interoperates with Databricks and Snowflake on shared cloud object storage, allowing multiple systems to access the same Iceberg tables without data replication. This reduces data movement costs and eliminates synchronization delays in multi-platform environments.

However, organizations must manage metadata coordination between systems, handle potential schema evolution conflicts, and address concurrent modifications that Iceberg’s optimistic concurrency model may not fully resolve under high-velocity updates.
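The concurrency caveat follows from how Iceberg commits work: a writer builds a new snapshot from the version it read, then publishes it by atomically swapping the catalog's current-metadata pointer, and the swap fails if another writer committed first. The Python model below is a simplified, hypothetical illustration; `Catalog`, `compare_and_swap`, and `append_files` are not a real catalog API.

```python
import threading


class Catalog:
    """Hypothetical catalog that points at the table's current snapshot."""

    def __init__(self):
        self._lock = threading.Lock()
        self.current = 0          # id of the current snapshot
        self.history = [[]]       # history[i] = data files visible in snapshot i

    def load(self):
        """Return the current snapshot id and a copy of its file list."""
        with self._lock:
            return self.current, list(self.history[self.current])

    def compare_and_swap(self, expected, new_files):
        """Publish new_files atomically, but only if no one committed since `expected`."""
        with self._lock:
            if self.current != expected:
                return False
            self.history.append(new_files)
            self.current = len(self.history) - 1
            return True


def append_files(catalog, files, max_retries=3):
    """Optimistic commit loop: read the base, build the new snapshot, try to swap."""
    for _ in range(max_retries):
        base, current_files = catalog.load()
        if catalog.compare_and_swap(base, current_files + files):
            return True
        # Lost the race: another writer committed first, so reload and reapply.
    return False


catalog = Catalog()
base_a, files_a = catalog.load()   # writer A reads snapshot 0
base_b, files_b = catalog.load()   # writer B also reads snapshot 0
print(catalog.compare_and_swap(base_a, files_a + ["a.parquet"]))  # True
print(catalog.compare_and_swap(base_b, files_b + ["b.parquet"]))  # False: stale base
print(append_files(catalog, ["b.parquet"]), catalog.load())
```

Retrying is trivial for pure appends, as here. Overlapping updates or deletes are harder, because the retry must detect whether its changes conflict with the newly committed snapshot, and under heavy write contention a writer can repeatedly lose the race.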

Developer Capabilities

The new Data Annotations feature provides metadata tags that describe data purpose, semantics, and constraints at the schema level. These annotations improve AI-generated code quality and response accuracy by providing contextual information that language models can incorporate during code generation or natural language query processing.
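A minimal sketch of why annotations help: terse column names like `amt` and `st` say little to a language model, but annotation text folded into the prompt does. The annotation keys (`Description`, `Unit`, `Allowed`), the dictionary layout, and the `schema_context` helper are hypothetical; this shows neither Oracle's annotation syntax nor how Oracle actually assembles prompts.

```python
# Hypothetical annotations attached to a table and its columns.
schema = {
    "orders": {
        "_table": {"Description": "One row per customer order"},
        "amt": {"Description": "Order total", "Unit": "USD, tax excluded"},
        "st": {"Description": "Order status", "Allowed": "OPEN, SHIPPED, CANCELLED"},
    }
}


def schema_context(schema):
    """Render table and column annotations as plain text for a model prompt."""
    lines = []
    for table, cols in schema.items():
        lines.append(f"Table {table}: {cols['_table']['Description']}")
        for col, notes in cols.items():
            if col == "_table":
                continue
            detail = "; ".join(f"{key}={value}" for key, value in notes.items())
            lines.append(f"  - {col}: {detail}")
    return "\n".join(lines)


prompt = schema_context(schema) + "\n\nQuestion: what is the total value of shipped orders?"
print(prompt)
```

With the `Allowed` and `Unit` notes in context, a model has what it needs to filter on `st = 'SHIPPED'` and to report the sum of `amt` in USD.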

The unified data model eliminates structural barriers between relational, document, and graph representations of the same data. Developers can store JSON documents that the platform automatically indexes for relational queries, or query relational tables through graph traversal patterns without duplicating data into specialized graph stores.
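As an illustration of querying relational rows as a graph, the sketch below treats an ordinary (employee_id, manager_id) table as an edge list and walks it breadth-first to find everyone who reports, directly or indirectly, to a manager. The table and the `transitive_reports` helper are hypothetical; in the database this would be a graph query over the existing table rather than Python code.

```python
from collections import deque

# Hypothetical relational rows: (employee_id, manager_id). No separate graph store.
reports_to = [(2, 1), (3, 1), (4, 2), (5, 2), (6, 4)]


def transitive_reports(rows, root):
    """Breadth-first traversal treating each row as an edge manager -> employee."""
    children = {}
    for emp, mgr in rows:
        children.setdefault(mgr, []).append(emp)
    visited, order, queue = {root}, [], deque([root])
    while queue:
        node = queue.popleft()
        for child in children.get(node, []):
            if child not in visited:      # guards against cycles in bad data
                visited.add(child)
                order.append(child)
                queue.append(child)
    return order


print(transitive_reports(reports_to, 2))  # [4, 5, 6]
```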

This consolidation reduces the ETL complexity common in polyglot persistence architectures.

Oracle plans to deliver an APEX AI Application Generator that translates natural language descriptions into application components.

While such code generation tools can accelerate development for standard CRUD applications, they typically require substantial refinement for complex business logic, security requirements, and performance optimization.

Adopters should treat AI-generated code as a way to accelerate initial scaffolding, not as production-ready applications.

Security and Governance

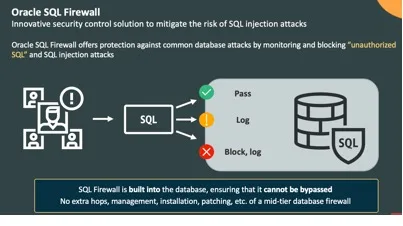

Built-in Data Privacy Protection implements row, column, and cell-level access controls with dynamic data masking, applying these policies uniformly to human users and AI agents. This approach enforces security at the data layer rather than relying on application-level controls that AI agents might bypass.
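The point of enforcing policy at the data layer is that the same function decides what any principal sees. The Python sketch below applies a row-level filter and dynamic column masking identically to a human user and an AI agent; the `Principal` fields, the sample table, and `secure_select` are hypothetical stand-ins for database-defined policies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principal:
    name: str
    region: str
    can_see_salary: bool
    is_agent: bool = False  # recorded for auditing; deliberately ignored by the policy


EMPLOYEES = [
    {"name": "Ana", "region": "EU", "salary": 91000},
    {"name": "Raj", "region": "US", "salary": 88000},
    {"name": "Lea", "region": "EU", "salary": 79000},
]


def secure_select(principal, rows):
    """Row filter by region plus dynamic salary masking, identical for humans and agents."""
    visible = [r for r in rows if r["region"] == principal.region]  # row-level security
    if principal.can_see_salary:
        return [dict(r) for r in visible]
    return [{**r, "salary": "****"} for r in visible]                # column masking


human = Principal("eu_manager", "EU", can_see_salary=False)
agent = Principal("eu_support_agent", "EU", can_see_salary=False, is_agent=True)
assert secure_select(human, EMPLOYEES) == secure_select(agent, EMPLOYEES)
print(secure_select(agent, EMPLOYEES))
```

Because the agent flag plays no part in the decision, an agent cannot see more than a human with the same attributes, regardless of how the calling application is written.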

SQL Firewall provides in-database protection against SQL injection and unauthorized query patterns without requiring intermediate proxy servers. The firewall examines query structures before execution, blocking statements that deviate from approved patterns.
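Allow-list enforcement of this kind can be sketched as comparing a statement's normalized structure, with literals stripped, against structures recorded during a learning period. This is a hypothetical Python model (`normalize` and `AllowlistFirewall` are illustrative) that uses simple regular expressions where a real firewall would parse SQL; it is not Oracle's implementation.

```python
import re


def normalize(sql):
    """Reduce a statement to its structure: replace literals, collapse whitespace, uppercase."""
    sql = re.sub(r"'(?:[^']|'')*'", "?", sql)    # string literals, including '' escapes
    sql = re.sub(r"\b\d+(?:\.\d+)?\b", "?", sql)  # numeric literals
    return " ".join(sql.upper().split())


class AllowlistFirewall:
    def __init__(self, training_statements):
        # Learning phase: record the statement shapes the application normally issues.
        self.allowed = {normalize(s) for s in training_statements}

    def check(self, sql):
        """Allow only statements whose shape was seen during learning."""
        return normalize(sql) in self.allowed


fw = AllowlistFirewall(["SELECT name FROM customers WHERE id = 17"])
print(fw.check("select name from customers where id = 42"))         # True
print(fw.check("SELECT name FROM customers WHERE id = 42 OR 1=1"))  # False
```

The injected `OR 1=1` changes the statement's shape, so it fails the check even though every token in it is valid SQL.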

Oracle has also implemented the NIST-approved quantum-resistant key-encapsulation algorithm ML-KEM for data-in-flight encryption, complementing existing quantum-resistant encryption for data at rest.

Oracle says this makes it the only vendor implementing quantum-resistant protection across both database and infrastructure layers.

Mission-Critical Operations

Oracle Database Zero Data Loss Cloud Protect continuously replicates on-premises database changes to OCI’s Zero Data Loss Recovery Service, providing protection against data loss and ransomware.

The service enables point-in-time recovery for on-premises databases using cloud-stored change streams. Organizations gain off-site protection without maintaining duplicate on-premises infrastructure.
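Point-in-time recovery from a change stream reduces to restoring a base copy and replaying changes in order up to a chosen point. The sketch below is a hypothetical Python model: `Change`, `recover_to`, and the `scn` field (standing in for a system change number) are illustrative and do not represent the Recovery Service's interfaces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    scn: int          # monotonically increasing change number
    key: str
    value: object     # None means the row was deleted


def recover_to(backup, backup_scn, changes, target_scn):
    """Restore a base backup, then replay shipped changes up to and including target_scn."""
    state = dict(backup)
    for ch in sorted(changes, key=lambda c: c.scn):
        if ch.scn <= backup_scn:
            continue                       # already contained in the backup
        if ch.scn > target_scn:
            break                          # stop before the unwanted change
        if ch.value is None:
            state.pop(ch.key, None)
        else:
            state[ch.key] = ch.value
    return state


backup = {"acct:1": 100}
stream = [
    Change(11, "acct:1", 150),
    Change(12, "acct:2", 40),
    Change(13, "acct:1", None),            # e.g. a malicious or mistaken delete
]
print(recover_to(backup, 10, stream, target_scn=12))  # {'acct:1': 150, 'acct:2': 40}
```

Choosing a target just before the destructive change, as with `target_scn=12`, rolls back past it without losing the legitimate changes that preceded it.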

Globally Distributed Database implements Raft-based replication for multi-master, active-active configurations; Oracle claims sub-three-second failover with zero data loss. This architecture supports both ultra-scale deployments requiring high transaction throughput and data sovereignty scenarios where residency requirements mandate geographic partitioning.
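The zero-data-loss property of Raft-style replication rests on a majority rule: an entry counts as committed only once it is stored on a majority of replicas, so any future leader, which needs votes from a majority and can only win them with an up-to-date log, will hold it. The Python helper below computes that majority-replicated index from the followers' acknowledged positions; it is a textbook simplification with a hypothetical function name, not Oracle's implementation.

```python
def majority_commit_index(match_index, leader_index):
    """Highest log index stored on a majority of the cluster (leader included)."""
    indexes = sorted([leader_index, *match_index], reverse=True)
    quorum = len(indexes) // 2 + 1
    return indexes[quorum - 1]


# Five-node cluster: the leader holds entry 10; followers acknowledged up to these indexes.
print(majority_commit_index([10, 9, 7, 3], leader_index=10))  # 9
```

Full Raft adds one more condition: a leader advances its commit index this way only for entries from its current term.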

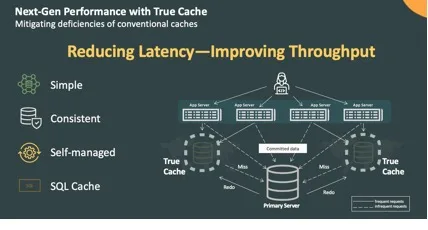

True Cache delivers application-transparent mid-tier caching with transactional consistency guarantees. Unlike external caching layers that require application code to manage cache population and invalidation, True Cache automatically maintains consistency with the database.

The cache supports the full Oracle SQL feature set, including vector, JSON, spatial, and graph queries. This capability can reduce query latency for read-heavy workloads without application changes.

Analysis

Oracle AI Database 26ai sees the company executing a pragmatic convergence strategy rather than revolutionary innovation, bringing established AI patterns into a production-grade database platform with emphasis on governance, security, and operational consolidation.

The release’s significance lies in its ability to reduce the architectural fragmentation that characterizes many enterprise AI implementations, where data constantly moves between operational databases, specialized AI stores, and analytics platforms.

Organizations evaluating 26ai should assess it against their specific constraints: data governance requirements that favor in-database AI operations, existing Oracle Database deployments where incremental capability addition carries lower risk than platform introduction, and operational teams stretched by managing multiple specialized systems.

The platform will not match specialized alternatives in every performance dimension, but it delivers production-grade operations, security depth, and integration maturity that nascent AI platforms cannot yet provide.

The competitive situation will evolve rapidly as both specialized AI platforms mature their enterprise capabilities and established database vendors continue enhancing AI features.

For Oracle’s substantial installed base, AI Database 26ai offers a low-risk entry point for AI capabilities without abandoning existing database investments. This should drive adoption among conservative enterprises preferring incremental evolution over architectural transformation.

The broader database market’s reception will depend on whether Oracle’s integration depth proves more valuable than specialized platforms’ focused capabilities. This is a question that different organizations will answer differently based on their unique requirements, constraints, and risk tolerances.

Ultimately, Oracle AI Database 26ai delivers meaningful value by enabling organizations to implement AI capabilities where their data already resides, governed by existing security frameworks, and managed by database teams with established operational expertise.

It does this while eliminating the architectural complexity, data movement overhead, and governance fragmentation that hinder AI adoption in regulated, security-conscious enterprises operating mission-critical Oracle Database environments.

It’s a strong release for the current environment.

Competitive Outlook & Advice to IT Buyers

Oracle differentiates AI Database 26ai through integration depth rather than introducing novel AI capabilities. Vector search, embedding model support, and RAG patterns exist across numerous platforms.

Oracle’s distinctive approach lies in implementing these capabilities within a production-grade database that organizations already use for transactional systems, eliminating the architectural pattern where operational data resides in traditional databases while AI workloads operate in specialized vector stores or cloud AI services.

This broadens Oracle’s competitive landscape dramatically. Oracle now faces competition from multiple directions in the AI data platform space: specialized vector databases (Pinecone, Weaviate, Milvus), cloud data warehouses with vector capabilities (Snowflake, Databricks), hyperscaler database services (AWS Aurora/RDS with pgvector, Azure Cosmos DB), and established database vendors adding AI features (PostgreSQL with the pgvector extension, MongoDB with vector search).

Let’s look at how the new database stacks up…

These sections are only available to NAND Research clients and IT Advisory Members. Please contact NAND Research to learn more.