AWS recently announced the open-source release of Spark History Server MCP, a specialized implementation of the Model Context Protocol (MCP). This server enables AI agents to directly access and analyze historical execution data from Apache Spark workloads through a standardized, structured interface.

The project was developed jointly by the AWS Open Source and Amazon SageMaker Data Processing teams and is available under the Apache 2.0 license.

The release targets a persistent pain point for Spark operators and developers: performance tuning and root-cause analysis in distributed data processing environments. By exposing the data behind traditionally manual debugging in a machine-interpretable form that agents can query on behalf of natural-language prompts, the Spark History Server MCP bridges the gap between Spark internals and AI-powered developer tooling.

Spark History Server MCP Capabilities

The new AWS MCP server acts as a middleware layer between AI agents and one or more Spark History Server instances. It does not store or alter the underlying Spark data but exposes it through a structured interface compliant with MCP, which standardizes how AI agents access operational data sources.
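To make the middleware role concrete, here is a minimal read-only sketch of the kind of lookup such a layer might perform against the Spark History Server REST API (served under `/api/v1`). The function names, the injectable `fetch_json` parameter, and the base URL are illustrative assumptions, not the project's actual code.

```python
import json
from typing import Any, Callable
from urllib.request import urlopen

# Assumed address; 18080 is the Spark History Server's default port.
BASE_URL = "http://localhost:18080/api/v1"


def http_fetch_json(url: str) -> Any:
    """Issue a read-only GET and decode the JSON response body."""
    with urlopen(url, timeout=10) as resp:
        return json.load(resp)


def failed_jobs(
    app_id: str,
    fetch_json: Callable[[str], Any] = http_fetch_json,
    base_url: str = BASE_URL,
) -> list[dict]:
    """Return a compact summary of the failed jobs in one application.

    The function only reads from the History Server; it stores and
    modifies nothing, mirroring the pass-through role described above.
    """
    jobs = fetch_json(f"{base_url}/applications/{app_id}/jobs?status=failed")
    return [
        {
            "jobId": job["jobId"],
            "name": job.get("name", ""),
            "failedTasks": job.get("numFailedTasks", 0),
        }
        for job in jobs
    ]
```

Passing the fetcher as a parameter keeps the sketch testable without a live server and makes it easy to point the same logic at more than one History Server instance.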

The MCP implementation supports two communication protocols:

- Streamable HTTP: Enables full-function integrations with frameworks like LlamaIndex and LangGraph.

- STDIO: Supports command-line interfaces such as Amazon Q CLI and desktop clients such as Claude Desktop.
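Both transports carry the same JSON-RPC 2.0 messages that MCP defines; only the framing differs. The sketch below builds one `tools/call` request and frames it each way. The tool name `get_application` and its `app_id` argument are hypothetical placeholders, not confirmed names from the project, and a real session would begin with MCP's `initialize` handshake before any tool call.

```python
import json


def tool_call(request_id: int, tool: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }


def frame_for_stdio(message: dict) -> bytes:
    """STDIO framing: one JSON message per line, with no embedded newlines."""
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def frame_for_http(message: dict) -> tuple[dict, bytes]:
    """Streamable HTTP framing: headers and body for a POST to the MCP endpoint."""
    headers = {
        "Content-Type": "application/json",
        "Accept": "application/json, text/event-stream",
    }
    return headers, json.dumps(message).encode("utf-8")


# Hypothetical tool name and argument, for illustration only.
request = tool_call(1, "get_application", {"app_id": "spark-123"})
```

Because the payload is identical in both cases, an agent framework can switch between a local STDIO process and a remote HTTP endpoint without changing how it describes a tool call.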

The MCP server retrieves and structures Spark telemetry across multiple abstraction levels:

- Application-level: Access to execution summaries, resource utilization patterns, and job success/failure statistics.

- Job and stage-level: Analysis of critical path bottlenecks via timeline visualizations, stage dependencies, and skew detection.

- Task-level: Metrics on executor-specific memory usage, garbage collection, shuffle writes, and task retries.

- SQL-level: Insights into query execution plans, join strategies, and shuffle operations for optimizing analytical workloads.
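As one example of the stage-level analysis listed above, skew can be approximated by comparing the slowest task in a stage with the median task. This is a generic heuristic sketched under assumed inputs (a list of task durations in milliseconds and a threshold of 3.0); it is not necessarily the method the MCP server itself uses.

```python
from statistics import median


def skew_ratio(task_durations_ms: list[float]) -> float:
    """Ratio of the slowest task to the median task within one stage."""
    if not task_durations_ms:
        raise ValueError("stage has no tasks")
    slowest = max(task_durations_ms)
    mid = median(task_durations_ms)
    if mid <= 0:
        # Avoid dividing by zero when most tasks finished instantly.
        return float("inf") if slowest > 0 else 1.0
    return slowest / mid


def is_skewed(task_durations_ms: list[float], threshold: float = 3.0) -> bool:
    """Flag a stage whose slowest task is far slower than a typical task."""
    return skew_ratio(task_durations_ms) >= threshold


# Made-up durations: seven similar tasks and one straggler.
sample = [1000, 1100, 950, 1050, 1020, 980, 1010, 9000]
```

Using the median rather than the mean keeps a single straggler from inflating the baseline it is being compared against.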

These capabilities allow AI agents to respond to natural-language prompts such as “Why did job spark-123 fail?” with structured, evidence-based diagnostics and optimization guidance.
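A structured, evidence-based answer of this kind could be assembled roughly as follows. The sketch assumes stage records shaped like the Spark History Server's REST output (`stageId`, `status`, `numFailedTasks`, `failureReason`); the `diagnose` function, the report layout, and the sample records are all made up for illustration.

```python
def diagnose(job_id: str, stages: list[dict]) -> dict:
    """Summarize why a job failed, citing its failed stages as evidence."""
    failed = [s for s in stages if s.get("status") == "FAILED"]
    return {
        "jobId": job_id,
        "failed": bool(failed),
        "evidence": [
            {
                "stageId": s["stageId"],
                "failedTasks": s.get("numFailedTasks", 0),
                "reason": s.get("failureReason", "unknown"),
            }
            for s in failed
        ],
    }


# Made-up stage records for illustration only.
stages = [
    {"stageId": 4, "status": "COMPLETE", "numFailedTasks": 0},
    {
        "stageId": 5,
        "status": "FAILED",
        "numFailedTasks": 4,
        "failureReason": "java.lang.OutOfMemoryError: Java heap space",
    },
]
report = diagnose("spark-123", stages)
```

An agent would then turn a report like this into prose, which is what lets its answer point to specific stages and error messages instead of offering generic advice.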

Deployment and Integration

The Spark History Server MCP supports three deployment scenarios:

- Local deployment: Runs on EC2 or EKS, connecting to Spark History Server instances via direct HTTP interfaces.

- Amazon EMR: Uses the Persistent UI feature to retrieve job telemetry. The server can auto-discover the UI via cluster ARN and configure token-based authentication.

- AWS Glue: Integrates with self-managed or container-based Spark History Servers. Developers can run the MCP server locally or on EC2 to access Glue job telemetry.

Installation includes local testing tools and sample Spark History data for rapid evaluation.

Analysis

The Spark History Server MCP fills a longstanding observability gap in the Spark ecosystem by operationalizing Spark telemetry for consumption by AI agents. This enables a shift from reactive, expert-driven debugging to proactive, AI-assisted observability workflows.

From a strategic perspective, this release supports AWS’s broader push to infuse generative AI across developer workflows, particularly via tools like Amazon Q, SageMaker, and Bedrock. It enhances AWS Glue and Amazon EMR by reducing time-to-resolution for Spark performance issues and lowering the skill barrier for Spark operators.

By abstracting Spark History Server telemetry into a machine-consumable protocol, AWS enables AI agents to perform on-demand, context-aware performance analysis and diagnostics for Spark workloads across managed and self-managed deployments.

This approach reduces reliance on expert debugging, accelerates root-cause identification, and lowers the barrier for operational excellence in Spark environments. It also reinforces AWS’s positioning at the intersection of open-source data infrastructure and AI-native developer tools.

Going forward, adoption will depend on ecosystem integration, ease of deployment, and compatibility with existing Spark telemetry workflows. However, AWS has laid the groundwork for a new model of AI-augmented data platform operations that other cloud providers may need to emulate.

Competitive Impact & Advice to IT Buyers

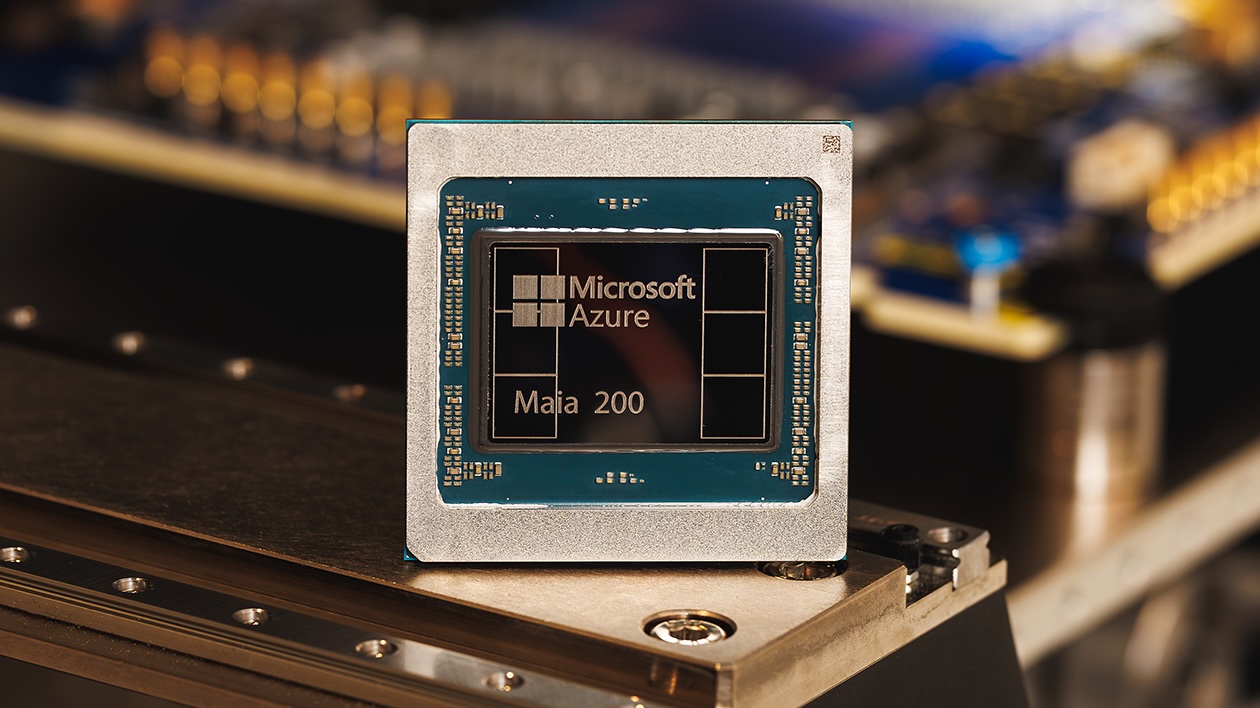

The new solution sees AWS competing against Databricks, Google Cloud’s Dataproc, Microsoft Azure HDInsight, and multiple observability solutions…

These sections are available only to NAND Research clients. Please contact NAND Research to learn more.