Chinese AI startup DeepSeek recently introduced an AI model, DeepSeek-R1, that the company claims matches or surpasses models from industry leaders like OpenAI, Meta, and Anthropic in various tasks. The company also claims that it developed the model with a fraction of the infrastructure that competitors require.

The company has made DeepSeek-R1 freely available for developers to download or access via a cloud-based API, promoting broader adoption and collaboration.

The move created significant buzz in the AI industry. Though the claims remain unverified, the potential to democratize AI training and fundamentally alter industry dynamics is clear.

What DeepSeek Announced

DeepSeek announced a significant breakthrough in AI training methodology, claiming it can achieve performance comparable to leading models like OpenAI’s GPT-4, Meta’s Llama, and Anthropic’s Claude, but with dramatically reduced infrastructure requirements and costs. The key elements of the announcement include:

- Efficient AI Training:

- DeepSeek claims its V3 model was trained using just 2,048 Nvidia H800 GPUs at a total cost of approximately $5.58 million, a fraction of the costs incurred by competitors.

- The approach relies on advanced techniques, such as FP8 precision to reduce memory usage, DualPipe communication optimization to reduce latency, and a modular architecture that activates only the relevant parts of the model for a given task.

- DeepSeek-R1:

- Building on the V3 model, the company introduced DeepSeek-R1, incorporating additional reinforcement learning and supervised fine-tuning stages, enhancing reasoning capabilities and model efficiency.

- The R1 model reportedly matches or exceeds the performance of leading AI models while maintaining cost efficiency.

- Open Access:

- DeepSeek made the source code for its models publicly available, inviting widespread adoption and collaboration.

- Developers can download the models for free or access them through a cloud-based API. This democratizes AI training, making it accessible to organizations beyond hyperscalers.

- Scalable Approach:

- DeepSeek’s modular design enables efficient training by activating only parts of the model for specific queries, significantly reducing hardware requirements without sacrificing performance.
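
The "activate only parts of the model" idea described above is commonly implemented as mixture-of-experts routing, in which a small gating function picks a few expert sub-networks per input. The sketch below is a toy illustration of top-k routing in plain Python, not DeepSeek's implementation. The expert count, the gate scores, and the names `route_top_k` and `moe_forward` are all assumptions made for the example.

```python
import math

def softmax(scores):
    """Convert raw gate scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Eight toy "experts": each just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Made-up router scores for one input (hypothetical values).
gate_scores = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

selected = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
active_fraction = len(selected) / len(experts)

print("experts used:", [i for i, _ in selected])
print("fraction of experts active:", active_fraction)
```

Only two of the eight experts do any work for this input, which is the source of the claimed compute savings. Whether quality holds up depends on how well the router is trained.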

How Valid are DeepSeek’s Claims?

DeepSeek’s announcement is promising, but many critical questions remain unanswered. Verification through independent replication, clarity on training methods and costs, and evaluations of robustness and generalization will be crucial in determining whether DeepSeek’s claims hold up and how disruptive its innovations will ultimately be.

Here’s what we’re watching:

Verifiability and Reproducibility

- Can the results be independently replicated?

- While DeepSeek has published papers and released its source code, it is unclear if other researchers or organizations can achieve similar results using the same hardware and techniques.

- Replication by major AI labs or independent entities will be key to verifying the validity of their methods.

Infrastructure and Cost Claims

- How accurate are the cost estimates?

- DeepSeek claims to have trained its V3 model for $5.58 million on 2,048 Nvidia H800 GPUs, but the assumptions behind this cost calculation, such as GPU pricing, energy consumption, and other operational costs, remain unclear.

- Was the infrastructure as limited as claimed?

- Skeptics question whether additional unseen infrastructure or pre-trained components were used, which could inflate the actual resource requirements.
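
To see why the assumptions behind the cost figure matter, it helps to work backward from the claimed total. The sketch below takes the $5.58 million and 2,048 GPUs quoted above and shows how many GPU-hours and wall-clock days they imply under different hourly GPU prices. The hourly rates are assumptions chosen for illustration, and the calculation ignores energy, staff, failed runs, and prior experiments.

```python
def implied_gpu_hours(total_cost_usd, rate_per_gpu_hour):
    """GPU-hours that a given budget buys at a given hourly rate."""
    return total_cost_usd / rate_per_gpu_hour

def wall_clock_days(gpu_hours, gpu_count):
    """Days of continuous training if all GPUs run in parallel."""
    return gpu_hours / gpu_count / 24

CLAIMED_COST_USD = 5_580_000  # figure quoted in the announcement
GPU_COUNT = 2_048             # figure quoted in the announcement

# Hypothetical rental prices in USD per GPU-hour (assumptions, not reported data).
for rate in (1.0, 2.0, 4.0):
    hours = implied_gpu_hours(CLAIMED_COST_USD, rate)
    days = wall_clock_days(hours, GPU_COUNT)
    print(f"${rate:.2f}/GPU-hour: {hours / 1e6:.2f}M GPU-hours, "
          f"about {days:.0f} days on {GPU_COUNT} GPUs")
```

Doubling or halving the assumed hourly rate halves or doubles the implied training time, so the headline number says little without the pricing assumption attached to it.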

Training Techniques

- Did DeepSeek use distillation or shortcuts?

- DeepSeek may have used distillation (training its models on outputs of other AI systems) or fine-tuned on pre-trained models. If so, this could reduce costs but raise questions about originality and scalability.

- What role did proprietary optimizations play?

- Techniques like DualPipe communication and FP8 precision are mentioned as core innovations. Understanding their impact and generalizability to other environments is critical.
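
For readers unfamiliar with the distillation question raised above, the following sketch shows the classic form of the technique: a smaller "student" model is trained to match a "teacher" model's output distribution. It is a generic textbook illustration, not a description of what DeepSeek did. The logits and the name `distillation_loss` are made up for the example, and training on a teacher's generated text rather than its logits is a common variant not shown here.

```python
import math

def softmax(logits, temperature=1.0):
    """Probabilities from logits, softened by a temperature above 1."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical logits over three candidate tokens.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different token

print("loss (close student):", distillation_loss(close_student, teacher))
print("loss (far student):  ", distillation_loss(far_student, teacher))
```

The loss shrinks as the student's predictions approach the teacher's, which is why distillation can be far cheaper than training from scratch: the teacher has already done the expensive learning.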

Model Generalization

- How robust and generalizable is the model?

- While DeepSeek claims its models match or exceed OpenAI’s GPT-4 or Anthropic’s Claude, broader evaluation across diverse tasks and real-world applications is required to confirm this.

- Are there trade-offs in accuracy or performance?

- Optimizing for cost and efficiency could compromise fine-grained performance, particularly on complex tasks or reasoning capabilities.

Practical Implementation

- How does the model perform at scale?

- While training efficiency is impressive, questions remain about inference efficiency, latency, and operational scalability in real-world applications.

- Can it truly compete with hyperscalers?

- Hyperscalers like OpenAI and Google provide end-to-end ecosystems for AI development. DeepSeek’s ability to offer a comparable ecosystem is uncertain.

Long-Term Sustainability

- Can DeepSeek’s approach scale with growing model sizes?

- As AI models grow in size and complexity, it’s unclear if DeepSeek’s cost and efficiency advantages can hold for larger-scale systems.

- What is the business model?

- Offering free downloads and open-source models raises questions about how DeepSeek plans to sustain its operations and monetize its technology over the long term.

Impact on the AI Infrastructure Ecosystem

DeepSeek’s approach could profoundly impact AI infrastructure by enabling smaller players to participate in AI training without relying on hyperscalers.

This democratization mirrors historical shifts in computing, such as the move from mainframes to mini-computers, signaling a new era of decentralized AI development:

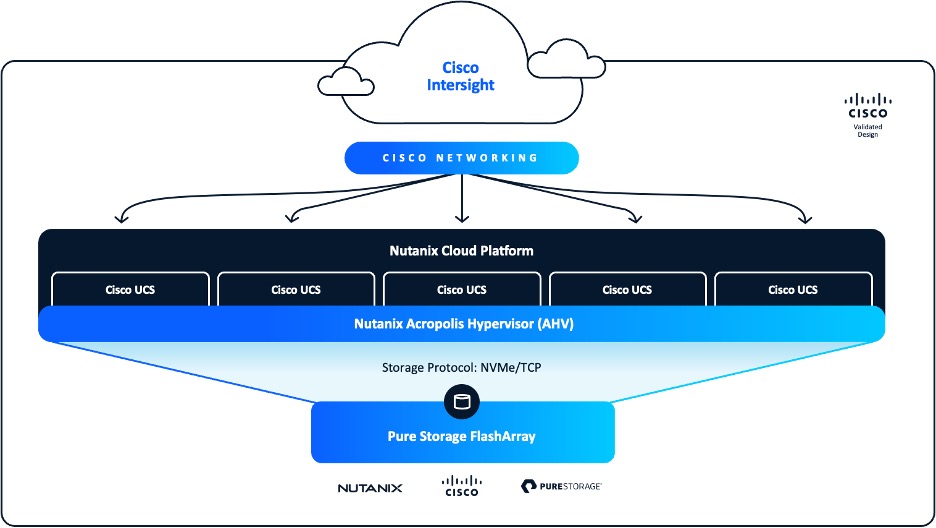

- Core Infrastructure Requirements: While DeepSeek reduces GPU reliance, high-performance storage, low-latency networking, and robust data management remain critical. Issues like governance, compliance, and checkpointing still present challenges, ensuring sustained demand for supporting technologies.

- Implications for Infrastructure Providers: Companies like Pure Storage, server OEMs, and networking firms stand to benefit as the customer base for AI training expands beyond hyperscalers. Enterprises can now train tailored models on-premises, reducing dependence on cloud-first solutions.

- Shifting Paradigms: DeepSeek could redefine the AI server market by enabling enterprises to train models more efficiently. Integrated accelerators and cost-effective architectures will likely gain traction as businesses adopt these new training methods.

Impact on Nvidia

Nvidia’s stock price dropped sharply following DeepSeek’s announcement, reflecting market fears about potential disruptions to its dominance in the AI GPU market. While DeepSeek’s use of mid-tier GPUs like the H800 highlights an alternative path, Nvidia remains well-positioned due to its entrenched ecosystem, which includes its CUDA platform and investments in AI systems like DGX and Mellanox networking solutions.

Nvidia has hedged against its reliance on GPUs with a robust data center business that extends beyond its GPU offerings. While it doesn’t break out specific revenue numbers for networking, software, and services, it does offer hints.

In its most recent earnings, the company reported that networking revenue increased 20% year over year, with significant growth in platforms like Spectrum-X, up 3x year over year.

Software revenue is annualizing at $1.5 billion, about 4% of its total revenue, and Nvidia expects that to exceed $2 billion by year-end, driven by offerings like NVIDIA AI Enterprise, Omniverse, and AI microservices. Software and services contribute higher-margin revenues, enhancing overall profitability, and shouldn’t be impacted by DeepSeek’s announcement.

On the GPU front, DeepSeek’s democratization of AI training could shift Nvidia’s approach to the market in significant ways:

- Broader Market Adoption: The democratization of training may expand the overall GPU market, even if individual customers purchase fewer high-end units. Increased demand for mid-tier GPUs like the H800 could offset reduced reliance on flagship models.

- Resilience Through Ecosystem Control: Nvidia’s software lock-in, including CUDA, and its investments in system-level solutions like DGX and Mellanox position the company to adapt to changing market dynamics.

- Strategic Adjustments: Nvidia’s ability to manage product flow and pricing, together with its control over the GPU supply chain, helps ensure its continued relevance despite emerging competition.

Nvidia’s adaptability will be key. Its leadership in software tools, combined with its ability to manage product flow and adjust pricing, ensures it will remain a significant player in the evolving market.

Political Fall-Out

DeepSeek’s emergence raises several geopolitical concerns, particularly given its Chinese origin. These concerns center around national security, market dynamics, and broader implications for global AI leadership:

1. National Security Risks

- Data Privacy and Surveillance:

- Chinese technology firms, including AI developers, often face scrutiny over potential ties to the Chinese government. There is concern that data processed by DeepSeek models could be accessed or monitored by Chinese authorities under national security laws.

- Similar to the bans and restrictions on TikTok, there is fear that AI tools like DeepSeek could be used as vectors for espionage or influence.

2. Technological Competition

- Threat to U.S. AI Dominance:

- DeepSeek’s ability to rival leading U.S. AI models (e.g., OpenAI’s GPT-4, Meta’s Llama) signals a significant leap in China’s AI capabilities. This could challenge the U.S.’s position as the leader in advanced AI development.

- Policymakers in the U.S. may view this as a wake-up call to invest more heavily in domestic AI innovation and infrastructure.

- Export Controls and Trade Restrictions:

- The U.S. has imposed restrictions on the export of advanced semiconductor technologies such as Nvidia’s A100 and H100 GPUs to China. DeepSeek’s success with limited hardware like the H800 could undermine these efforts, showcasing that Chinese firms can innovate around such constraints.

3. Market Access and Restrictions

- Potential for Regulatory Bans:

- As DeepSeek gains attention, Western governments may consider banning or limiting its use due to national security concerns, similar to actions taken against Huawei and TikTok.

- Restrictions on deploying DeepSeek models in sensitive industries (e.g., defense, finance) could further exacerbate global tech decoupling.

- Uneven Regulatory Environment:

- Unlike in the U.S., where AI tools face scrutiny over ethical guidelines and compliance, DeepSeek may operate with fewer regulatory restrictions in China, allowing it to innovate more aggressively. This disparity raises concerns about fairness in global AI competition.

4. Ethical and Cultural Differences

- Censorship and Model Behavior:

- DeepSeek’s models are reportedly censored to align with Chinese political guidelines. For example, they avoid responding to politically sensitive topics like Tiananmen Square or criticism of Chinese leadership.

- Such limitations could hinder global adoption in regions that prioritize free expression and transparency.

Analysis

It’s important to distinguish between the DeepSeek-R1 LLM and the methods by which that model was trained. The disruptive element is the training technique, which changes how we think about AI infrastructure.

If substantiated, DeepSeek’s claims will drive a shift in the AI training landscape by lowering costs and democratizing access to advanced model training. While this disrupts traditional reliance on hyperscalers, it introduces opportunities for infrastructure providers and enterprises to innovate.

While DeepSeek’s methods may directly threaten companies like OpenAI and force companies like Nvidia to evolve, the overall net impact on the IT industry is positive:

- Storage, Networking, and Server Providers: Storage providers like Pure Storage and NetApp, along with server OEMs like Dell Technologies, HPE, and Lenovo, will all benefit from expanded adoption of AI training across enterprises. AI training is no longer a “cloud first” undertaking.

- Cloud Service Providers: Broader accessibility to AI training drives increased usage of public cloud platforms, benefiting CSPs and their partners.

- OpenAI and SaaS Providers: Reduced training costs allow firms to shift focus to value-added integration services and model safety.

- GPUs: Nvidia’s margins on flagship hardware could face pressure.

For Nvidia, the news reinforces the need to adapt to evolving market dynamics by leveraging its ecosystem and diversifying its product offerings. Though margins on flagship GPUs may be pressured, the overall expansion of the AI market could sustain long-term growth.

Ultimately, the broader implications of DeepSeek’s announcement highlight the ongoing evolution of AI infrastructure, with an emphasis on efficiency, accessibility, and decentralization. Stakeholders across the ecosystem should prepare for an increasingly dynamic and competitive market.