At the recent GTC 2025 event, NetApp announced, in collaboration with NVIDIA, a comprehensive set of product validations, certifications, and architectural enhancements to its intelligent data products.

The announcements include NetApp’s integration with the NVIDIA AI Data Platform, support for NVIDIA’s latest accelerated computing systems, and expanded availability of enterprise-grade AI infrastructure offerings, including NetApp AFF A90 and NetApp AIPod.

NVIDIA DGX SuperPOD AFF A90 Certification

NetApp’s AFF A90 storage systems now support validated deployments with NVIDIA DGX SuperPOD. This combination provides a converged infrastructure for large-scale training and inferencing, enabling:

- Integrated data management via NetApp ONTAP.

- Support for secure, scalable, multi-tenant AI environments.

- Enterprise-grade resilience, data protection, and anti-ransomware capabilities.

- Compatibility with NVIDIA DGX B200, delivering what NetApp claims is 3× training performance and 15× inference performance relative to prior generations.

Certification for NVIDIA Cloud Partner Reference Architectures

NetApp is now certified for NVIDIA Cloud Partner reference architectures built on HGX H200- and B200-based platforms. These environments provide infrastructure for AI-as-a-service providers offering managed AI and private cloud workloads. The certification covers:

- High-performance block and file storage from NetApp AFF A90 systems.

- Scalable throughput across secure multi-tenant environments.

- The full ONTAP feature set, including SnapMirror® replication, storage efficiency, and automation tools.

AIPod Integration with NVIDIA-Certified Systems

NetApp AIPod now supports the NVIDIA-Certified Storage program, providing pre-validated enterprise storage solutions for a wide range of AI workloads. The latest updates to AIPod include:

- Support for NVIDIA L40S and HGX H200 compute platforms.

- Integration with the NVIDIA AI Enterprise software stack, including NIM microservices.

- Infrastructure alignment with RAG and agentic AI use cases.

- An enhanced version built on Lenovo infrastructure, supporting Red Hat OpenShift and Lenovo XClarity.

NVIDIA AI Data Platform

NetApp has aligned its architecture with the NVIDIA AI Data Platform reference design, offering a composable infrastructure for data-intensive AI applications. The integration includes:

- A global metadata namespace enabling centralized data discovery and feature extraction.

- An integrated AI data pipeline capable of tracking incremental data changes, classifying unstructured data, and generating compressed vector embeddings for semantic search and RAG inferencing.

- A disaggregated storage architecture optimizing compute and flash storage utilization across hybrid cloud deployments.

Analysis

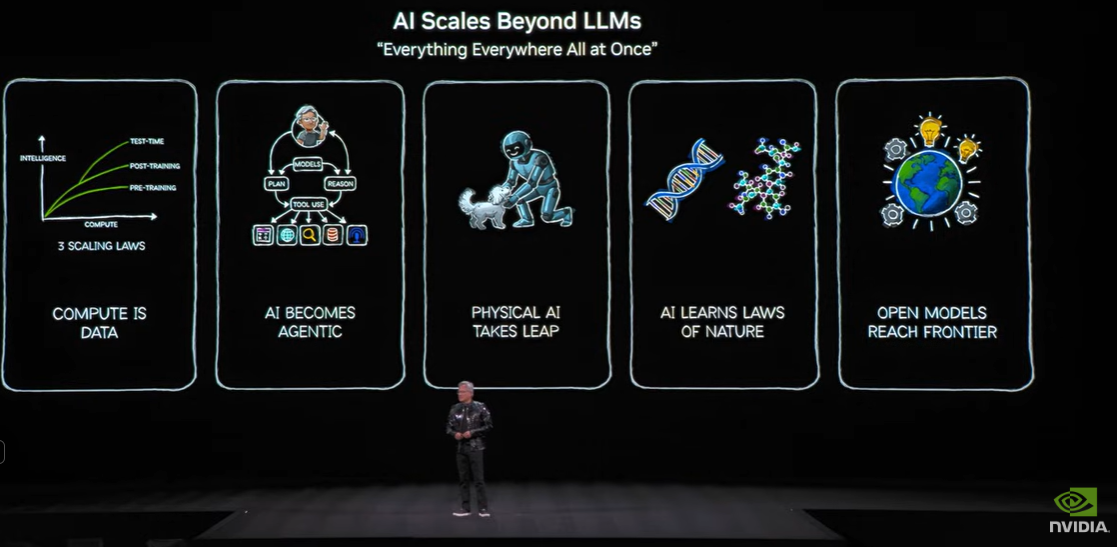

NetApp’s latest announcements show that it can serve as the primary storage and data management layer for enterprise AI workloads. Aligning its product portfolio with NVIDIA’s reference architectures and AI software ecosystem strengthens its relevance across training, inferencing, and RAG-based agentic AI pipelines.

Deep integration with NVIDIA’s AI infrastructure stack, including support for new generative AI agents and microservices, allows NetApp to differentiate through its mature data services, hybrid cloud operability, and enterprise-grade security posture.

As enterprises adopt and deploy AI workloads, NetApp’s ability to unify high-performance storage with mature data management and hybrid cloud capabilities creates a durable advantage. The company’s announcements at GTC show that it is keeping pace and remains a strong contender for AI-focused enterprise needs.
