At its annual GTC event in San Jose, NVIDIA announced an expansion of its NVIDIA-Certified Systems program to include enterprise storage certification, aimed at streamlining AI factory deployments. It also introduced the new NVIDIA AI Data Platform reference design.

The new NVIDIA-Certified Storage program validates enterprise storage systems for AI and HPC workloads, ensuring they meet stringent performance and scalability requirements. The program integrates with NVIDIA’s existing ecosystem, including NVIDIA DGX systems, NVIDIA Cloud Partner (NCP) storage, and NVIDIA Enterprise Reference Architectures (RAs).

AI training and inference require vast amounts of high-speed, reliable, and scalable storage. The increasing complexity of agentic AI models, real-time reasoning, and multimodal data processing means that enterprises need optimized storage architectures that can efficiently handle structured, semi-structured, and unstructured data.

The new certifications and reference design reflect this, underscoring the importance of storage across the AI lifecycle and acknowledging that traditional approaches to storage may not be sufficient.

NVIDIA Storage Certifications

NVIDIA’s storage certification initiative consists of multiple levels that align with different AI deployment architectures:

- DGX BasePOD and DGX SuperPOD Storage Certification: Supports AI factory deployments using NVIDIA DGX systems.

- NCP Storage Certification: Provides validated storage solutions for large-scale cloud-based AI infrastructure using NCP Reference Architectures.

- NVIDIA-Certified Storage Certification: Validates storage for enterprise AI factory deployments using NVIDIA-Certified servers, based on NVIDIA Enterprise RAs.

Each certified storage system undergoes performance testing and validation against best practices for enterprise AI workloads. The certification ensures compatibility with NVIDIA’s AI software stack, networking, and computing infrastructure.

NVIDIA AI Data Platform

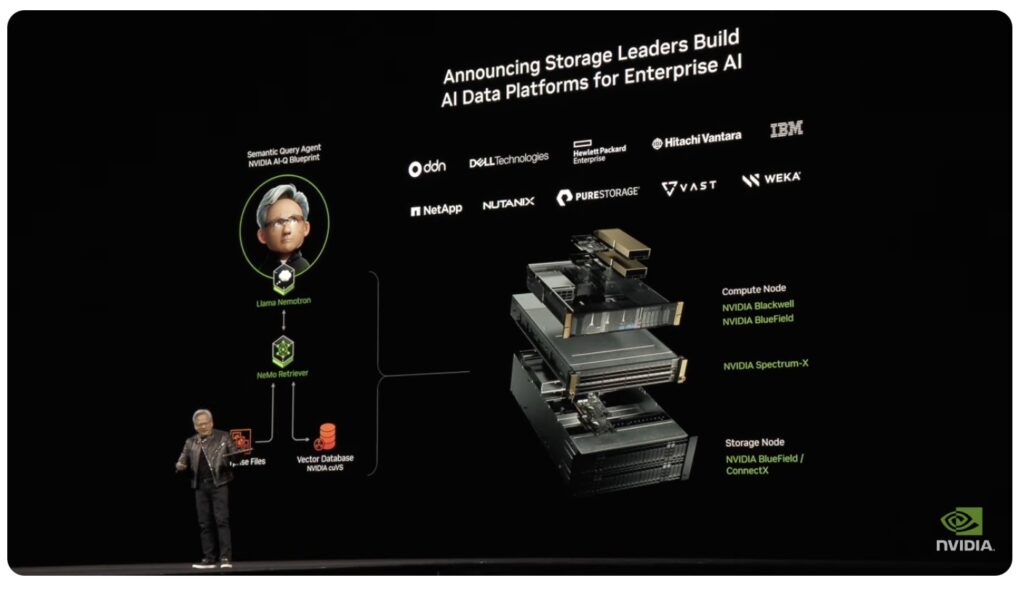

NVIDIA introduced the NVIDIA AI Data Platform, a customizable reference design for enterprise AI infrastructure. Storage providers can integrate this platform to support agentic AI workloads that leverage AI query agents for reasoning over enterprise data. The platform includes:

- NVIDIA AI-Q Blueprint: Optimizes AI query agent inference by enabling direct data connectivity.

- NVIDIA NeMo Retriever Microservices: Accelerate data retrieval by up to 15x when running on NVIDIA GPUs, according to NVIDIA.

- NVIDIA Blackwell GPUs, BlueField DPUs, and Spectrum-X Networking: Enhance AI data processing performance, reducing latency and improving efficiency.

- BlueField DPUs: Deliver up to 1.6x higher performance than CPU-based storage solutions while cutting power consumption by 50%, which NVIDIA says yields roughly 3x better performance per watt.

- Spectrum-X Networking: Optimizes AI storage traffic, accelerating storage performance by up to 48% compared with traditional Ethernet, according to NVIDIA.
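NVIDIA's BlueField figures are internally consistent. Performance per watt is throughput divided by power draw, so a 1.6x throughput gain at half the power works out to about a 3x improvement. In the sketch below, P and W are labels introduced here for throughput and power draw; the CPU-based baseline cancels out, so no absolute measurements are assumed. Because both inputs are "up to" figures, the resulting ratio is a best case as well.

```latex
\frac{(P/W)_{\text{DPU}}}{(P/W)_{\text{CPU}}}
  = \frac{1.6\,P_{\text{CPU}} \,/\, (0.5\,W_{\text{CPU}})}{P_{\text{CPU}} \,/\, W_{\text{CPU}}}
  = \frac{1.6}{0.5}
  = 3.2 \approx 3\times
```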

Enterprise Storage Partners

Nearly every leading enterprise storage vendor has partnered with NVIDIA to integrate certified storage solutions with the AI Data Platform:

- DDN: Integrating AI Data Platform capabilities into DDN Infinia AI.

- Dell Technologies: Developing AI storage solutions for PowerScale and Project Lightning.

- Hewlett Packard Enterprise: Incorporating AI Data Platform into HPE Private Cloud for AI, HPE Alletra, and HPE GreenLake for File Storage.

- Hitachi Vantara: Expanding AI Data Platform capabilities within the Hitachi IQ ecosystem.

- IBM: Integrating AI Data Platform with IBM Fusion and Storage Scale for RAG applications.

- NetApp: Delivering AI-driven storage with NetApp AIPod.

- Nutanix: Enabling AI inference and agentic workflows with Nutanix Unified Storage.

- Pure Storage: Delivering AI storage capabilities with its FlashBlade family, including the new FlashBlade//EXA.

- VAST Data: Enabling real-time AI insights with VAST InsightEngine.

- WEKA: Optimizing agentic AI reasoning with WEKA Data Platform.

Analysis

NVIDIA’s expanded storage certification and AI Data Platform reinforce its position in the AI infrastructure market by enabling tightly integrated, performance-optimized storage solutions.

These initiatives lower the complexity of deploying AI factories and support AI reasoning workloads at scale:

- Enterprise AI Stack Differentiation: NVIDIA’s AI Data Platform enhances its AI stack by ensuring optimized storage performance, reducing AI model training and inference bottlenecks.

- Market Expansion: Integrating certified storage into NVIDIA’s broader AI infrastructure strengthens its ecosystem, reducing reliance on general-purpose storage solutions.

- Cloud and On-Premises AI Deployments: NVIDIA’s certification model supports both on-premises and hybrid cloud AI deployments, competing with cloud hyperscalers’ AI solutions while offering enterprises more control over infrastructure.

By certifying storage solutions alongside compute (GPUs) and networking (Spectrum-X), NVIDIA is moving beyond just AI acceleration into full-stack AI infrastructure. This means enterprises can build AI factories faster and with validated hardware, reducing integration complexity and deployment risks.

The NVIDIA AI Data Platform and Certified Storage will help enterprises deploy AI faster by eliminating guesswork in configuring compute, storage, and networking for AI workloads.

NVIDIA’s expansion into AI-certified storage is a strategic move to lock in its dominance in AI infrastructure. By integrating high-performance storage with GPUs, DPUs, and networking, NVIDIA remains solidly positioned as the go-to provider for enterprise AI workloads.