Red Hat announced a definitive agreement to acquire Neural Magic, an AI company specializing in software that optimizes generative AI inference workloads. The acquisition supports Red Hat’s strategy of advancing open-source AI technologies that can be deployed in any environment across the hybrid cloud.

Neural Magic’s technology focuses on inference acceleration through sparsification and quantization techniques. These techniques enable the efficient deployment of AI models on CPU-only systems, offering potential cost and resource savings.

Who is Neural Magic?

Neural Magic, founded in 2018 and headquartered in Somerville, Massachusetts, develops software that optimizes and accelerates machine learning inference workloads. The company focuses on enabling high-performance execution of deep learning models on commodity CPU hardware, eliminating the need for specialized accelerators such as GPUs or TPUs.

Key Aspects of Neural Magic’s Technology

- Sparsification and Quantization:

- Sparsification involves strategically pruning neural network connections to reduce model size and computational requirements without significantly impacting accuracy.

- Quantization further reduces model size by representing model parameters with lower-precision data types. This cuts memory and compute requirements enough that AI models can run on CPUs without specialized GPU hardware.

- Combined, these techniques allow for optimized inference on cost-effective, widely available hardware, thus lowering entry barriers for deploying AI applications.

- vLLM (Virtual Large Language Model):

- vLLM, an open-source inference and serving engine originally developed at UC Berkeley, delivers efficient, scalable inference for LLMs. Neural Magic is one of its leading commercial contributors.

- vLLM supports diverse hardware, including AMD GPUs, AWS Neuron, Google TPUs, Intel Gaudi, and NVIDIA GPUs, which broadens cross-platform compatibility.

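- vLLM accelerates inference with techniques such as PagedAttention, which manages the attention key-value cache in fixed-size memory blocks, and continuous batching of incoming requests, while exposing a common serving layer across these hardware backends. This makes it well suited to enterprise-grade deployment in hybrid cloud environments.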

- Open-Source Model Portfolio:

- Neural Magic offers a portfolio of pre-optimized open-source models that have already been sparsified and/or quantized for efficient deployment, including on CPUs.

- Red Hat plans to leverage Neural Magic’s work on vLLM and pre-optimized models to offer scalable, adaptable LLMs to organizations needing flexible AI deployment.

- Open-Source Development and Community Contributions:

- Neural Magic’s commitment to open source aligns with Red Hat’s approach, enabling collaborative development of gen AI tools and promoting community-driven innovation.

- The acquisition supports Red Hat’s InstructLab project, which allows enterprises to fine-tune and customize LLMs, enabling proprietary and domain-specific applications in a low-code environment.

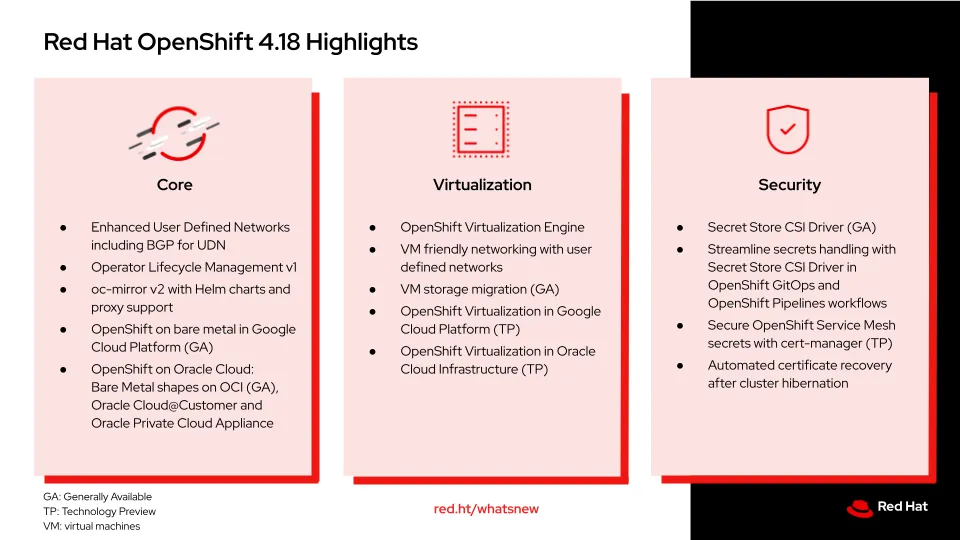

- The acquisition also expands Red Hat’s ecosystem, strengthening projects such as Red Hat OpenShift AI, its Kubernetes-based AI lifecycle management platform designed for deployment across hybrid cloud environments.
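To make the sparsification bullet concrete, here is a minimal Python sketch of one common pruning method, one-shot unstructured magnitude pruning, in which the smallest-magnitude weights are set to zero. It is an illustrative assumption, not Neural Magic’s actual recipe, and the function names (`magnitude_prune`, `sparse_dot`) are hypothetical. Real speedups also depend on an inference runtime whose kernels exploit the zeros.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction
    `sparsity` set to zero (one-shot unstructured magnitude pruning).

    weights: flat list of floats standing in for one layer's weight tensor
    sparsity: fraction of weights to remove, in [0, 1)
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def sparse_dot(weights, x):
    """Dot product that skips zeroed weights; the skipped multiply-adds
    are the compute a sparsity-aware kernel can avoid."""
    return sum(w * xi for w, xi in zip(weights, x) if w != 0.0)


if __name__ == "__main__":
    w = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3, -0.08]
    pruned = magnitude_prune(w, sparsity=0.5)
    print(pruned)  # [0.9, 0.0, 0.4, 0.0, -0.7, 0.0, 0.3, 0.0]
```

At 50% sparsity, `sparse_dot` skips half of the multiply-adds. In practice, pruning is often paired with fine-tuning or a calibration step so that accuracy is largely preserved.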
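The quantization bullet can be illustrated the same way with a minimal sketch of symmetric per-tensor INT8 quantization: each 32-bit float weight w is stored as an 8-bit integer q with one shared scale s, so that w ≈ s·q. This is only one common scheme, chosen here as an assumption; production toolchains often use per-channel scales, calibration data, or other formats such as 4-bit integers. The function names are hypothetical.

```python
from array import array


def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the signed 8-bit range
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights; error per weight is at most scale / 2."""
    return [qi * scale for qi in q]


def storage_bytes(weights, q):
    """Bytes needed for the FP32 weights vs. the INT8 codes (scale excluded)."""
    fp32 = array("f", weights)  # 'f' is a C float, 4 bytes on common platforms
    int8 = array("b", q)        # 'b' is a signed char, 1 byte
    return len(fp32) * fp32.itemsize, len(int8) * int8.itemsize


if __name__ == "__main__":
    w = [0.9, 0.0, 0.4, 0.0, -0.7, 0.0, 0.3, 0.0]
    q, s = quantize_int8(w)
    print(q)                    # [127, 0, 56, 0, -99, 0, 42, 0]
    print(storage_bytes(w, q))  # (32, 8) on platforms with a 4-byte C float
```

Moving from FP32 to INT8 shrinks weight storage roughly fourfold, and integer arithmetic is cheaper on most CPUs, which is why quantization pairs naturally with sparsification for CPU inference.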
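Much of vLLM’s efficiency comes from PagedAttention, which stores each request’s attention key-value (KV) cache in fixed-size blocks that need not be contiguous in memory and are allocated only as tokens are generated. The toy sketch below models only that bookkeeping. The `BlockAllocator` class and its methods are hypothetical and are not vLLM’s API; the sketch omits the attention kernels, block sharing between sequences, and scheduling that vLLM implements.

```python
class BlockAllocator:
    """Toy model of paged KV-cache bookkeeping (hypothetical, not vLLM's API)."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size         # token slots per physical block
        self.free = list(range(num_blocks))  # pool of free physical block ids
        self.tables = {}                     # seq_id -> list of physical block ids
        self.lengths = {}                    # seq_id -> tokens stored so far

    def append_token(self, seq_id):
        """Reserve KV-cache space for one more token of `seq_id` and return
        its physical location as (block id, offset within block)."""
        table = self.tables.setdefault(seq_id, [])
        n = self.lengths.get(seq_id, 0)
        if n % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free:
                raise MemoryError("KV cache exhausted")
            table.append(self.free.pop())  # any free block works: no contiguity needed
        self.lengths[seq_id] = n + 1
        return table[n // self.block_size], n % self.block_size

    def release(self, seq_id):
        """Return all blocks of a finished sequence to the free pool."""
        self.free.extend(self.tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


if __name__ == "__main__":
    alloc = BlockAllocator(num_blocks=4, block_size=4)
    for _ in range(6):
        alloc.append_token("a")
    print(alloc.tables["a"])  # [3, 2]
```

Because each sequence holds at most one partially filled block, the memory wasted per sequence is bounded by `block_size - 1` token slots, which lets a server fit more concurrent requests into the same GPU or CPU memory.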

Analysis

Red Hat’s acquisition of Neural Magic is well-aligned with its AI strategy, which centers on making AI accessible, flexible, and efficient across hybrid cloud environments through open-source innovation.

This alignment is evident in several key aspects:

- Open-Source-Driven AI Innovation: Red Hat has long championed open source as the foundation for scalable technology solutions. Neural Magic’s commitment to open-source projects aligns directly with Red Hat’s strategy to leverage community-driven innovation in AI. The acquisition enhances Red Hat’s ecosystem by adding a high-performance, open-source AI stack that enterprises can adapt and deploy with full transparency and control, a fundamental principle in Red Hat’s open-source philosophy.

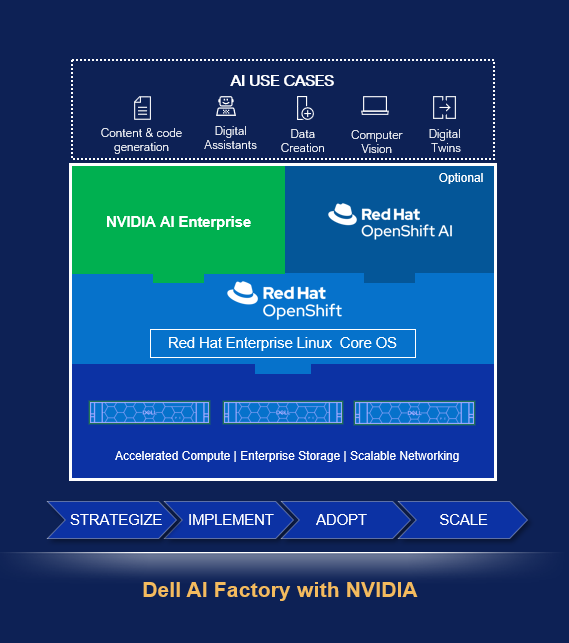

- Cost-Effective AI Deployment Across Hybrid Cloud: A critical part of Red Hat’s AI strategy is enabling cost-effective and infrastructure-flexible AI deployments. Neural Magic’s technology optimizes AI models to run efficiently on CPUs, reducing dependence on GPUs and making AI more affordable and easier to deploy across a range of hardware configurations, from cloud data centers to edge devices.

- Flexibility for Enterprise Customization: Red Hat’s AI strategy emphasizes adaptability, recognizing that enterprises require AI solutions tailored to their unique datasets, business processes, and infrastructure. Neural Magic’s expertise in model optimization and Red Hat’s InstructLab for fine-tuning LLMs create a powerful combination: enterprises can customize models with their own data and then optimize those models for efficient inference on the hardware they already run.

- Empowering AI Model Lifecycle Management: Red Hat’s OpenShift AI platform streamlines the entire AI model lifecycle, from development and deployment to monitoring and maintenance, especially across Kubernetes environments. Neural Magic’s performance engineering expertise complements this by providing optimized inference capabilities that improve resource efficiency and operational scalability.

Overall, the acquisition of Neural Magic reinforces Red Hat’s AI strategy of advancing open-source AI tools that are adaptable, cost-efficient, and deployable across diverse infrastructures. Neural Magic’s optimization technology directly supports Red Hat’s vision of democratizing AI for enterprise use by making AI deployment more accessible and affordable in the hybrid cloud landscape.

Competitive Positioning & Advice to IT Buyers

This content is only for NAND Research clients and subscribers. For access, please contact NAND Research.