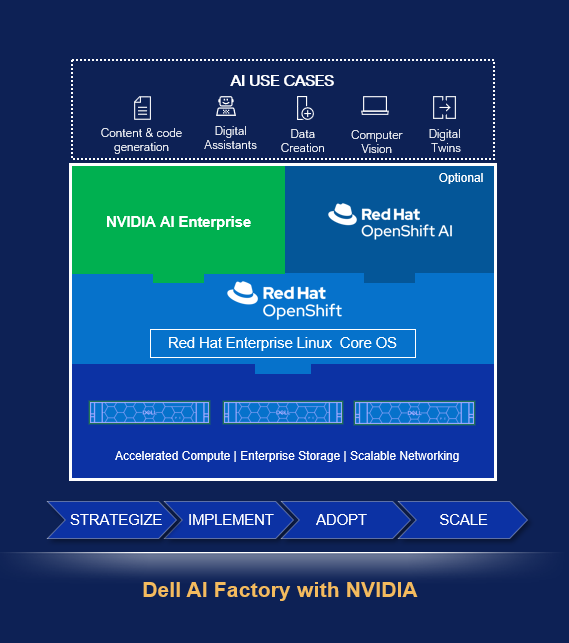

Dell Technologies recently announced that its integration of Red Hat OpenShift with the Dell AI Factory with NVIDIA platform is now generally available to customers. The solution was previewed earlier this year at Dell Technologies World. The updated solution combines Dell PowerEdge infrastructure, NVIDIA GPU acceleration, Red Hat container orchestration, and NVIDIA AI Enterprise software into a validated stack.

Technical Overview

The integrated platform builds on Dell’s existing AI Factory foundation with enhancements for container-based AI workloads. Dell employs a multi-cluster approach separating compute and management functions for operational efficiency and security isolation.

Compute

The solution uses Dell PowerEdge R760xa server nodes as the primary compute platform. Each node can be populated with NVIDIA L40S GPU accelerators (48GB GDDR6 memory) or H100 NVL configurations (188GB HBM3 memory). A minimum of two nodes is required for high availability.

In its announcement, Dell said that it will soon support its upcoming PowerEdge XE9680 server, which will provide significantly expanded capabilities, accommodating up to eight NVIDIA H200 SXM5 GPU accelerators per node.

The NVIDIA H200 units provide 141GB of HBM3e memory per GPU, which works out to roughly 1.1TB of aggregate GPU memory (8 × 141GB) and 15,832 TOPS of INT8 performance per fully configured node. However, Dell has not provided timeline commitments or pricing for these higher-density configurations.
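The per-node totals follow from the per-GPU figures by simple multiplication. The short sketch below reproduces that arithmetic using only the numbers quoted in this section; the function names are illustrative, and the per-GPU INT8 value is derived from the node-level figure rather than taken from an NVIDIA datasheet.

```python
# Per-node totals for an eight-GPU H200 configuration.
# All three constants come from the figures quoted in this article.
GPUS_PER_NODE = 8         # up to eight H200 SXM5 GPUs per node
HBM_PER_GPU_GB = 141      # HBM3e memory per GPU, in GB
NODE_INT8_TOPS = 15_832   # INT8 throughput per fully configured node


def aggregate_memory_gb(gpus: int, gb_per_gpu: int) -> int:
    """Total GPU memory across all GPUs in one node, in GB."""
    return gpus * gb_per_gpu


def per_gpu_tops(node_tops: int, gpus: int) -> float:
    """INT8 TOPS per GPU implied by the node-level figure."""
    return node_tops / gpus


total_gb = aggregate_memory_gb(GPUS_PER_NODE, HBM_PER_GPU_GB)
print(f"Aggregate GPU memory: {total_gb} GB (~{total_gb / 1000:.2f} TB)")
print(f"Implied INT8 per GPU: {per_gpu_tops(NODE_INT8_TOPS, GPUS_PER_NODE):.0f} TOPS")
```

The same two functions can be reused to sanity-check other configurations by swapping in a different GPU count or memory size.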

Network Architecture

The Dell AI Factory relies on NVIDIA Spectrum-X Ethernet switches designed specifically for AI workloads, featuring adaptive routing and congestion control optimizations for distributed training.

NVIDIA BlueField-3 SuperNICs provide hardware-accelerated networking, with integrated Arm cores that offload network processing tasks from the primary CPUs.

Management and Control Plane

Management operates on a dedicated four-node cluster powered by Dell PowerEdge R660 servers, providing operational isolation from AI compute workloads.

This separation enables independent scaling, maintenance, and security policies for control plane functions while preventing management overhead from impacting AI model training and inference performance.

The management cluster hosts Red Hat OpenShift cluster operations, monitoring, logging, and potentially AI-specific management tools. This architecture follows established enterprise Kubernetes patterns, but it adds operational complexity, requiring additional hardware investment and management overhead compared to converged approaches.
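As a rough illustration of the multi-cluster layout described in this section, the sketch below models the management and compute clusters as simple records and checks their node counts. The `Cluster` class and its fields are hypothetical, not part of any Dell or Red Hat tooling, and treating four nodes as the fixed management size is an assumption based on the "four-node cluster" wording.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    """One OpenShift cluster in the multi-cluster layout (illustrative only)."""
    role: str           # "management" or "compute"
    server_model: str   # Dell PowerEdge model named in the article
    nodes: int          # number of nodes deployed
    min_nodes: int = 1  # smallest node count this sketch accepts

    def validate(self) -> None:
        """Raise ValueError if the cluster is smaller than its minimum size."""
        if self.nodes < self.min_nodes:
            raise ValueError(
                f"{self.role} cluster needs at least {self.min_nodes} nodes, "
                f"got {self.nodes}"
            )


# Management: a dedicated four-node R660 cluster (minimum of four is an assumption).
management = Cluster(role="management", server_model="PowerEdge R660", nodes=4, min_nodes=4)
# Compute: R760xa nodes, with a minimum of two for high availability.
compute = Cluster(role="compute", server_model="PowerEdge R760xa", nodes=2, min_nodes=2)

for cluster in (management, compute):
    cluster.validate()
    print(f"{cluster.role}: {cluster.nodes} x {cluster.server_model}")
```

Keeping the two clusters as separate records mirrors the article's point that each can be scaled and maintained independently.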

Software Stack Integration

Red Hat OpenShift provides the Kubernetes orchestration foundation, supporting container lifecycle management, resource scheduling, and service mesh capabilities across the AI infrastructure. The solution supports OpenShift versions 4.12 and later, though Dell has not specified supported upgrade paths or long-term version compatibility commitments.

The NVIDIA AI Enterprise software suite includes curated container images, AI development frameworks, and optimization libraries specifically validated for the hardware configuration. This includes support for popular AI/ML frameworks like TensorFlow, PyTorch, and RAPIDS, along with NVIDIA’s Triton Inference Server for model serving capabilities.
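To make the container-based workflow concrete, here is a minimal sketch of how a GPU-backed pod might be described for a Kubernetes or OpenShift cluster. It assumes the cluster exposes GPUs through the `nvidia.com/gpu` extended resource advertised by the NVIDIA device plugin, which the announcement does not confirm for this stack. The helper name, pod name, and image reference are placeholders, not real NVIDIA AI Enterprise artifacts.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a bare-bones Pod manifest that requests `gpus` NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resource name used by the NVIDIA device plugin (assumed here).
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Placeholder image reference; substitute a real inference or training image.
manifest = gpu_pod_manifest("inference-demo", "registry.example.com/inference:placeholder")
print(json.dumps(manifest, indent=2))
```

Because Kubernetes accepts JSON manifests as well as YAML, the printed output could be saved to a file and applied with `oc apply -f` on a cluster where the assumed resource name is available.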

Impact to IT Organizations

The Dell AI Factory with NVIDIA, supporting Red Hat OpenShift, brings several operational benefits to IT organizations:

- Reduces integration complexity through pre-validated component combinations.

- Extends container-based deployment practices to AI workloads for companies deploying OpenShift, reducing training requirements and operational overhead.

- Simplifies vendor management for enterprise procurement teams with a unified support model through the Dell and Red Hat partnership.

For enterprises deploying on-premises AI infrastructure, several factors strengthen the attractiveness of Dell’s solution:

- Regulated industries with strict data residency requirements can maintain compliance while accessing enterprise-grade AI capabilities typically available only through cloud providers.

- Organizations with substantial existing data investments can avoid costly and time-intensive cloud data migration while still accessing modern AI development toolchains.

The platform also addresses latency-sensitive AI applications where real-time inference requirements make cloud-based serving impractical. Manufacturing predictive maintenance, financial fraud detection, and healthcare diagnostic applications often require response times that favor on-premises deployment over cloud-based alternatives, regardless of cost considerations.

While cloud AI services provide attractive unit economics for experimental and variable workloads, sustained production AI applications with consistent resource utilization can justify capital infrastructure investment, particularly when factoring in data transfer costs and cloud egress charges.
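The trade-off between cloud and capital spending can be framed as a simple break-even calculation. The sketch below is a back-of-the-envelope model only: every input (GPU count, hourly rate, utilization, egress charges, capital cost, and on-premises operating cost) is a hypothetical placeholder, not a figure from Dell or any cloud provider.

```python
from typing import Optional

HOURS_PER_MONTH = 730  # average hours in a month


def cloud_monthly_cost(gpus: int, hourly_rate: float, utilization: float,
                       egress_per_month: float = 0.0) -> float:
    """Monthly cloud spend for GPU time at a given utilization, plus egress."""
    return gpus * hourly_rate * HOURS_PER_MONTH * utilization + egress_per_month


def breakeven_months(capex: float, onprem_monthly_opex: float,
                     cloud_monthly: float) -> Optional[float]:
    """Months until on-prem capex is recovered; None if cloud stays cheaper."""
    monthly_saving = cloud_monthly - onprem_monthly_opex
    if monthly_saving <= 0:
        return None
    return capex / monthly_saving


# Hypothetical inputs: 8 GPUs at $4/hour, $400k capex, $5k/month on-prem opex.
steady = cloud_monthly_cost(gpus=8, hourly_rate=4.0, utilization=0.8, egress_per_month=2000.0)
bursty = cloud_monthly_cost(gpus=8, hourly_rate=4.0, utilization=0.1)

print("Sustained workload break-even (months):", breakeven_months(400_000, 5_000, steady))
print("Bursty workload break-even (months):", breakeven_months(400_000, 5_000, bursty))
```

With these placeholder inputs, the sustained workload reaches a break-even point while the low-utilization workload never does, which matches the article's distinction between consistent production use and experimental or variable use.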

Analysis

Dell’s integration of Red Hat OpenShift with its AI Factory platform addresses legitimate enterprise challenges around AI infrastructure complexity and vendor management. The solution provides clear value for organizations with substantial on-premises AI requirements, existing Dell-Red Hat relationships, and predictable AI workload patterns.

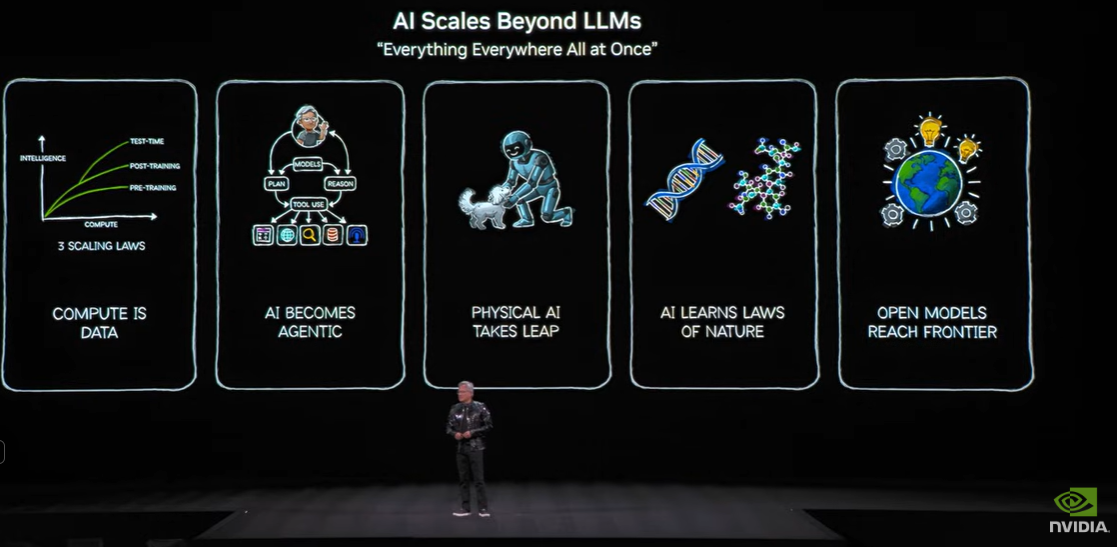

Modern AI development relies on containerized model training, distributed inference serving, and microservices-based AI application architectures. Major AI frameworks including TensorFlow, PyTorch, and emerging MLOps platforms have followed NVIDIA’s lead and standardized around container-based deployment patterns. This makes Kubernetes orchestration capabilities essential rather than optional for enterprise AI initiatives.

This container-native trend amplifies the value proposition for organizations already invested in Red Hat OpenShift environments. With Dell’s updated AI Factory offerings, these organizations can now apply existing container management expertise, security policies, and operational procedures across both traditional applications and AI workloads, reducing the operational overhead that typically accompanies AI infrastructure deployment.

Dell has been the industry’s number one server vendor for as long as most industry followers can remember. Evolving from a server and storage provider to a full-stack AI solutions player isn’t easy, but there’s no OEM better positioned than Dell to do so.

The company continues to capitalize on the accelerating adoption of enterprise AI while also managing to differentiate itself in the market. Dell has always been a critical partner for enterprise IT, and that continues as enterprise customers bring AI capabilities on-prem. These latest updates simply reinforce that.

Competitive Outlook & Advice to IT Buyers

Dell’s primary competition includes established converged infrastructure vendors offering similar NVIDIA-based AI platforms. HPE’s GreenLake edge-to-cloud platform, Cisco’s validated designs with NVIDIA, and NetApp’s AI infrastructure solutions all target similar enterprise use cases with comparable technical capabilities.
