Background

Artificial Intelligence is having a moment. It’s impossible to escape the flurry of activity around generative AI, with nearly every technology company hurrying to incorporate large language models into their offerings. Microsoft, a long-time investor in OpenAI, the firm behind ChatGPT, last week showed us just how significantly large language models are going to change the user experience for its office products. It’s compelling.

But AI is more than just large language models, as we’ll be reminded this week at NVIDIA’s annual GTC conference. AI is changing how we think about everything from retail loss prevention to medical diagnosis. Anywhere that the interpretation of data can lead to enhanced value is ripe for experimentation with AI.

NVIDIA lives at the center of this revolution. Its GPUs are the most common, and most powerful, in the industry. Beyond its hardware, NVIDIA is enabling the adoption of AI with software tools that span the gamut from edge inference to autonomous driving to medical imaging, and the list keeps growing. The wonderful thing is that much of NVIDIA’s software is free for the taking. It just needs an NVIDIA GPU to truly deliver.

The Pull of Generative AI

Generative AI, such as OpenAI’s ChatGPT, drives strong demand for the latest generation of NVIDIA accelerators. NVIDIA CEO Jensen Huang told us that accelerator sales directly tied to inference for large language models have “gone through the roof” to the point where NVIDIA’s view of the technology has “dramatically changed” as a result of the past three months of activity in the space.

It takes a lot of resources to handle even inference tasks for generative AI, which makes it cost-prohibitive for smaller companies and leads to large CapEx spend for enterprises. This is where the cloud makes sense. Jensen agrees, saying that CSPs are driving tremendous demand and that we’re just getting started.

In Jensen’s view, the CSPs enable AI “factories” where the final product is intelligence. These factories will be a blend of on-prem and cloud, but the cloud is leading the charge.

News: NVIDIA in the Cloud

One of the challenges of working with state-of-the-art machine learning is that the hardware is relatively expensive. NVIDIA doesn’t publish pricing for its high-end accelerators, but estimates suggest the price of its latest-generation Hopper-based H100 is near $30,000. That’s a lot of capital to invest, assuming you can even find one of these accelerators. This is where the public cloud really shines.

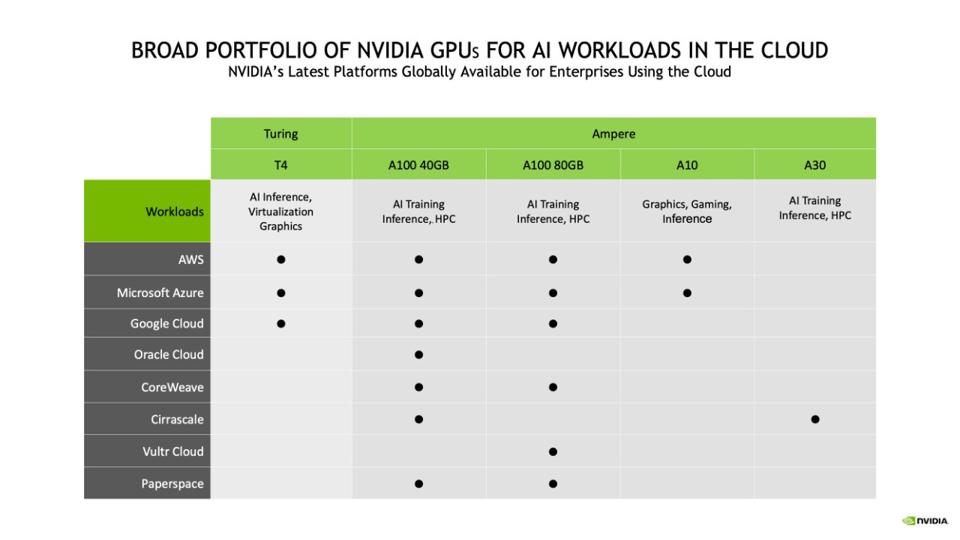

Every cloud service provider (CSP) offers accelerated instances based on NVIDIA products, many of which date back multiple generations. At the high end, Azure, Amazon Web Services, Google Cloud, and Oracle Cloud offer instance types based on the NVIDIA A100 accelerator. Each of these CSPs will also very soon provide the newest-generation H100. Azure is already in preview with the new instance types.

According to Liftr Insights, the leading data service that tracks cloud instances across the top six global CSPs, NVIDIA GPUs account for over 93% of GPU-accelerated instance types in the public cloud. Of those, the vast majority are based on NVIDIA’s Ampere architecture; these include NVIDIA’s A100, A10G, and A10 accelerators. Soon, this will include the new H100 accelerator.

The market is responding to the cloud-driven AI model. During NVIDIA’s latest earnings call, CFO Colette Kress told us that “Revenue growth from CSP customers last year significantly outpaced that of Data Center as a whole, as more enterprise customers moved to a cloud-first approach. On a trailing 4-quarter basis, CSP customers drove about 40% of our Data Center revenue.” That’s significant.

Ms. Kress went on to say that, on a revenue basis, demand for the new H100 accelerator in the fourth quarter of 2022 was already “much higher” than that of the A100.

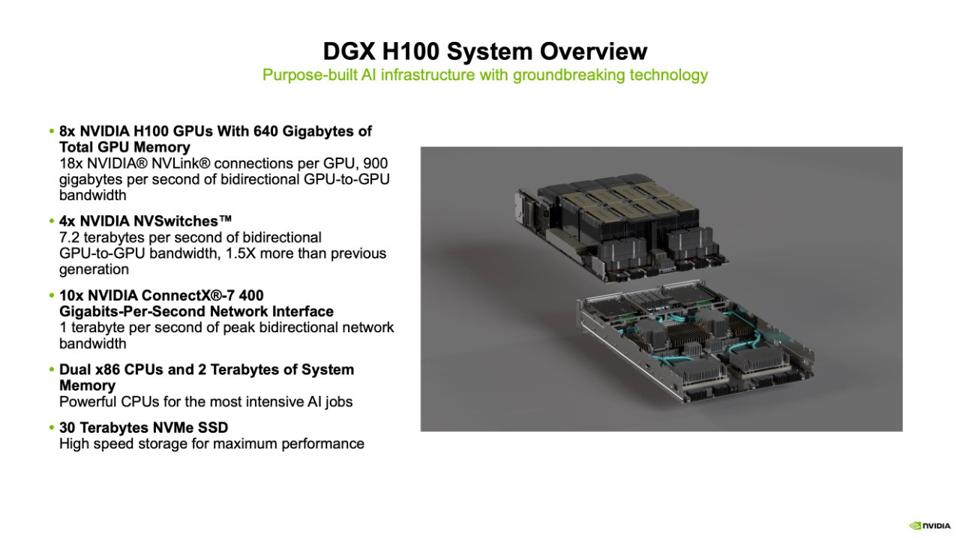

NVIDIA DGX Cloud

NVIDIA introduced its turnkey DGX “deep learning supercomputer” back in 2016, and has continuously updated it as new-generation accelerators are introduced. The latest generation, the NVIDIA DGX H100, is a powerful machine. It incorporates eight NVIDIA H100 GPUs with 640 gigabytes of total GPU memory, along with two 56-core variants of the latest Intel Xeon processor, all tied together with NVIDIA’s NVLink interconnects. The machine can deliver 32 petaFLOPS of performance.
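As a quick sanity check on those headline figures, the per-GPU numbers fall out of simple division. The sketch below uses only the system totals quoted above; the variable names are illustrative, and it assumes memory and compute are split evenly across the eight GPUs.

```python
# Back-of-the-envelope breakdown of the DGX H100 figures quoted in the text.
# Assumption: memory and compute are divided evenly across the GPUs.
GPU_COUNT = 8
TOTAL_GPU_MEMORY_GB = 640
TOTAL_PETAFLOPS = 32
CPU_SOCKETS = 2
CORES_PER_CPU = 56

memory_per_gpu_gb = TOTAL_GPU_MEMORY_GB / GPU_COUNT
petaflops_per_gpu = TOTAL_PETAFLOPS / GPU_COUNT
total_cpu_cores = CPU_SOCKETS * CORES_PER_CPU

print(f"Memory per GPU:  {memory_per_gpu_gb:.0f} GB")
print(f"Compute per GPU: {petaflops_per_gpu:.0f} petaFLOPS")
print(f"Total CPU cores: {total_cpu_cores}")
```

The peak-performance figure is a vendor headline number, so the per-GPU result is best read as an upper bound and not as sustained throughput.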

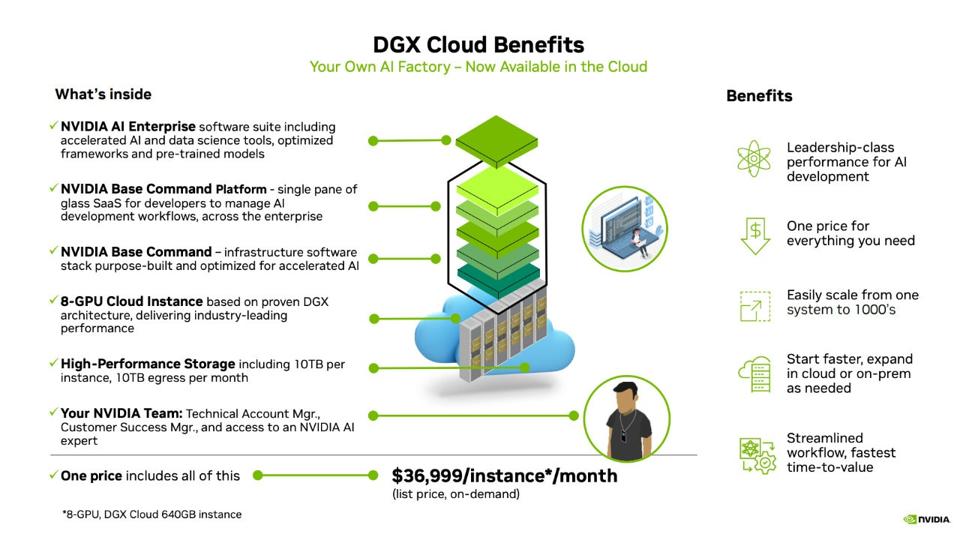

During its most recent earnings call, Jensen announced that the DGX machine will be available directly through the CSP community as NVIDIA’s “DGX Cloud.” He said that “NVIDIA DGX is an AI supercomputer and a blueprint of AI factories being built around the world. AI supercomputers are hard and time consuming to build, and today we’re announcing the Nvidia DGX Cloud, the fastest and easiest way to have your own DGX AI supercomputer. Just open your browser.”

This powerful option lowers the entry barrier for both AI researchers and commercial entities looking to take advantage of leading-edge AI technology. Before DGX Cloud, IT administrators were forced to assemble their AI infrastructure from the public cloud offerings. DGX Cloud removes a level of complexity from that process.

NVIDIA’s DGX comes in a range of options. The offerings include the stand-alone NVIDIA DGX A100 and H100 systems, the NVIDIA DGX BasePOD reference architecture, and the NVIDIA DGX SuperPOD turnkey AI data center solution. Our current understanding of DGX Cloud is that it is the DGX H100 that the CSPs are offering.

NVIDIA and Oracle Cloud

DGX Cloud will be available first through Oracle Cloud, with Azure to follow. Oracle’s OCI Supercluster provides a purpose-built RDMA network, bare-metal compute, and high-performance local and block storage that can scale to superclusters of over 32,000 GPUs. NVIDIA told us that DGX Cloud instances will start at $36,999 per instance per month.
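To put the starting price in more familiar units, the monthly figure can be converted to an annual cost and a rough per-GPU-hour rate. This is only a sketch: it assumes each instance maps to an eight-GPU DGX H100 (our current understanding, not a confirmed specification) and uses an average month of 730 hours, and it ignores any discounts, storage, or networking charges.

```python
# Rough unit conversions for the quoted DGX Cloud starting price.
# Assumptions (not confirmed by NVIDIA): 8 GPUs per instance, 730 hours per month.
PRICE_PER_INSTANCE_MONTH_USD = 36_999
GPUS_PER_INSTANCE = 8        # assumed: one DGX H100 per instance
HOURS_PER_MONTH = 730        # assumed: average month length in hours

annual_cost_usd = PRICE_PER_INSTANCE_MONTH_USD * 12
cost_per_gpu_month_usd = PRICE_PER_INSTANCE_MONTH_USD / GPUS_PER_INSTANCE
cost_per_gpu_hour_usd = cost_per_gpu_month_usd / HOURS_PER_MONTH

print(f"Annual cost per instance: ${annual_cost_usd:,}")
print(f"Cost per GPU per month:   ${cost_per_gpu_month_usd:,.2f}")
print(f"Cost per GPU per hour:    ${cost_per_gpu_hour_usd:,.2f}")
```

Changing `GPUS_PER_INSTANCE` or `HOURS_PER_MONTH` shows how sensitive the hourly rate is to those assumptions.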

Beyond DGX Cloud, Oracle is also embracing NVIDIA’s BlueField-3 DPUs to help accelerate its infrastructure. BlueField-3 is NVIDIA’s third-generation data processing unit that enables enterprises to build software-defined, hardware-accelerated IT infrastructures from cloud to data center to edge.

The DPUs support Ethernet and InfiniBand connectivity at up to 400 gigabits per second and provide 4x more compute power, up to 4x faster crypto acceleration, 2x faster storage processing and 4x more memory bandwidth compared to the previous generation of BlueField.

Oracle is making BlueField-accelerated networking available to its OCI customers. This provides a powerful new option for Oracle’s cloud customers to offload data center tasks from CPUs.

Analysis

NVIDIA’s data center business now eclipses its gaming business. The company spent the past two decades strategically investing in enabling enterprise AI. It’s remarkably simple to leverage the tools provided by NVIDIA to build a system for a range of AI-enabled applications. This is true at the industrial edge. It’s equally valid in the highest-performance deep learning clusters that you can imagine.

NVIDIA’s strength is equal parts hardware engineering and software enablement. The company’s diverse range of cloud-native software is what makes it easy. However, it’s NVIDIA’s hardware that makes it performant.

Delivering DGX directly to users through public cloud partners who are already contributing to NVIDIA’s bottom line makes a lot of sense. This model brings a cloud-like efficiency to high-performance AI. The CSPs absorb the capital expense and amortize it over a broad customer base, while end-customers gain affordable access.
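The amortization argument can be illustrated with a toy model. The function below is a hypothetical sketch, not a description of how any CSP actually prices its services: it spreads an up-front hardware cost evenly over a service lifetime and a number of tenants. The hardware figure reuses the estimated $30,000 per accelerator cited earlier and covers the eight GPUs only; the 48-month lifetime and four tenants are made-up inputs, and power, cooling, networking, staff, and margin are all ignored.

```python
def amortized_monthly_cost(capex_usd: float, lifetime_months: int, tenants: int) -> float:
    """Spread an up-front cost evenly over time and over the tenants sharing it."""
    if lifetime_months <= 0 or tenants <= 0:
        raise ValueError("lifetime_months and tenants must be positive")
    return capex_usd / lifetime_months / tenants


# Illustrative inputs only.
ESTIMATED_GPU_PRICE_USD = 30_000   # the article's estimate, not a published price
GPUS_PER_SYSTEM = 8
gpu_capex_usd = ESTIMATED_GPU_PRICE_USD * GPUS_PER_SYSTEM

# Hypothetical: a 48-month service life shared evenly by 4 tenants.
share = amortized_monthly_cost(gpu_capex_usd, lifetime_months=48, tenants=4)
print(f"GPU capex: ${gpu_capex_usd:,}")
print(f"Amortized GPU cost per tenant per month: ${share:,.2f}")
```

The more tenants that share a system over its life, the smaller each tenant’s share of the capital cost, which is the efficiency the cloud model relies on.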