Quick Take: AWS re:Invent AI Services & Infrastructure Announcement Wrap-Up

AWS made close to 50 announcements in the first two days of re:Invent. This post looks at the most interesting ones related to AI services and infrastructure.

Quick Take: AWS re:Invent Day 1

AWS unveiled a range of new features and services, reflecting its continued focus on innovation across generative AI, compute, and storage. These announcements include enhancements to Amazon Bedrock for improved testing and data integration, new capabilities for the generative AI assistant Amazon Q, high-performance storage-optimized EC2 instances, and advanced storage solutions such as intelligent tiering and a dedicated data transfer terminal.

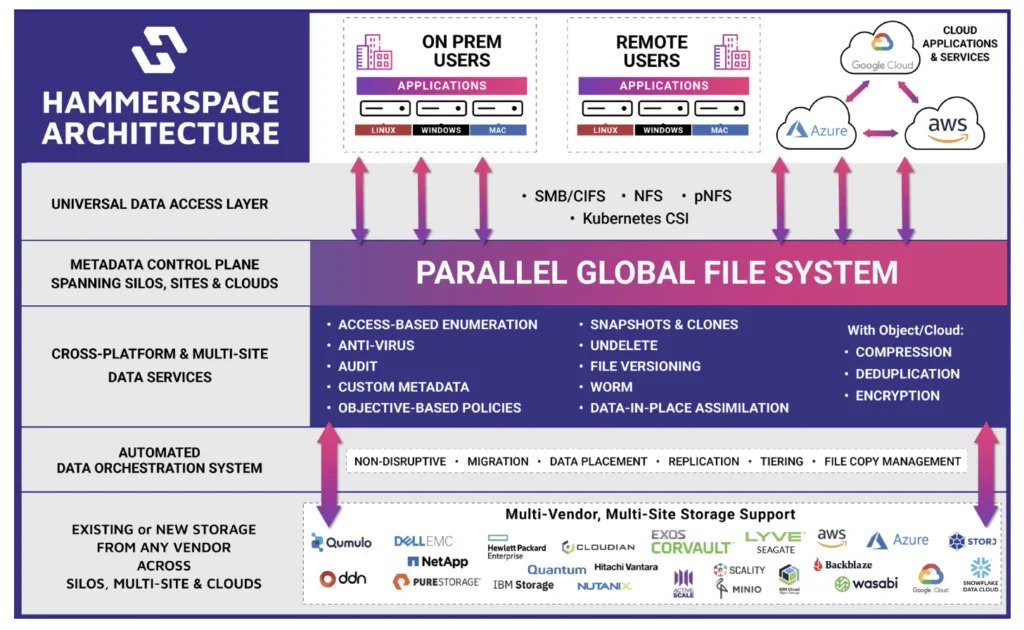

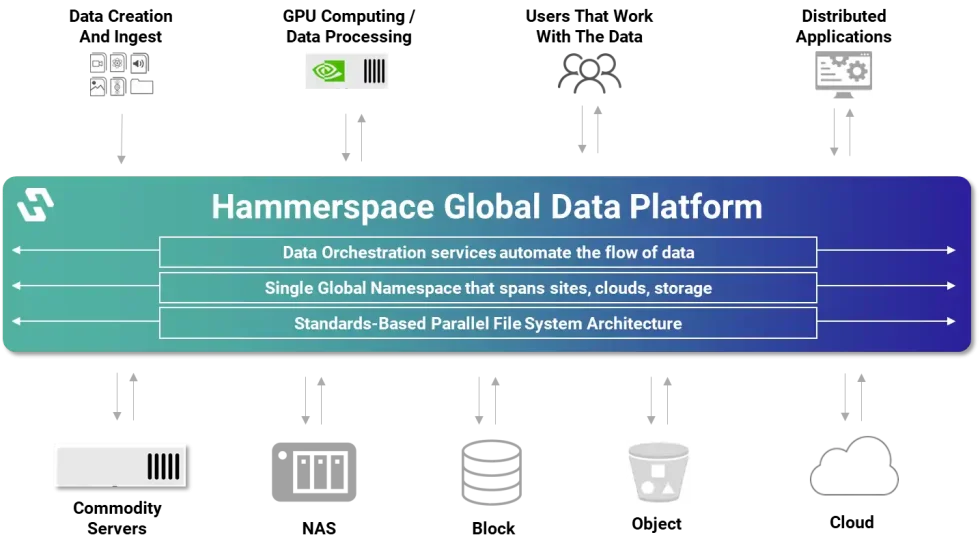

Research Note: Hammerspace Global Data Platform v5.1 with Tier 0

Hammerspace recently announced the version 5.1 release of its Hammerspace Global Data Platform. The flagship feature of the release is its new Tier 0 storage capability, which takes unused local NVMe storage on a GPU server and uses it as part of the global shared filesystem. This gives the GPU server higher-performance storage than remote storage nodes can deliver, making it ideal for AI and GPU-centric workloads.

Quick Take: Snowflake Acquires Datavolo

Snowflake recently announced its acquisition of Datavolo, a data pipeline management company, to enhance its capabilities in automating data flows across enterprise environments.

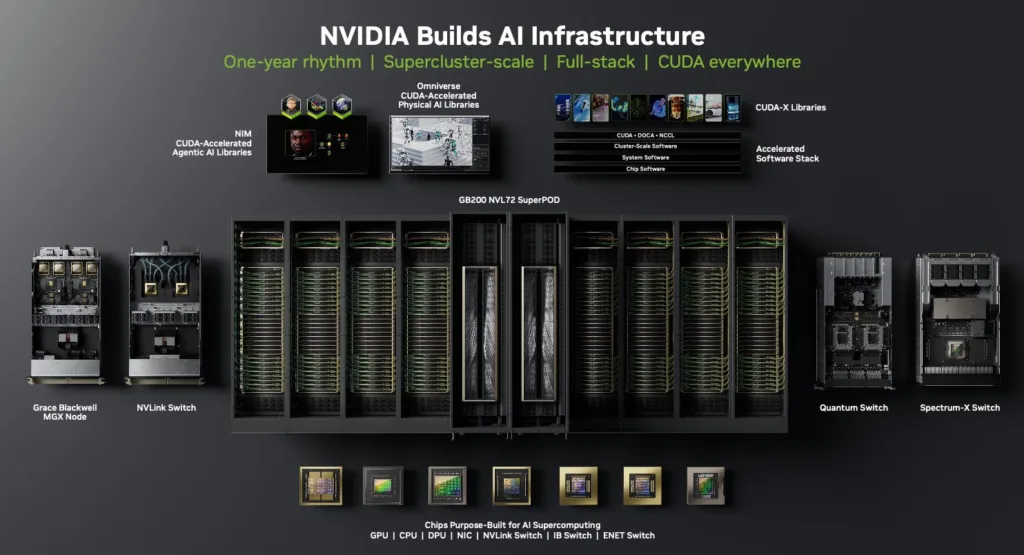

Research Note: NVIDIA SC24 Announcements

At the recent Supercomputing 2024 (SC24) conference in Atlanta, NVIDIA announced new hardware and software to advance AI and HPC workloads. This includes the new GB200 NVL4 Superchip, the general availability of its H200 NVL PCIe GPU, and several new software capabilities.

IT Infrastructure Round-Up: Emerging Trends in Infrastructure and Connectivity (Nov 2024)

November was a busy month of announcements in infrastructure and connectivity, revealing a convergence of innovation to meet the stringent demands of AI-driven workloads, scalability, and cost efficiency.

Are IT Organizations Ready for the GenAI Revolution? Let’s Ask.

Over the past few months, we’ve seen surveys published by tech companies across the spectrum that show us how gen AI is forcing IT organizations to assess their readiness for its adoption and deployment. The surveys offer a comprehensive view of the industry’s current stance, showing enthusiasm balanced by caution around organizational and infrastructure challenges.

Let’s examine recent surveys from the tech industry itself to see what they say about IT’s readiness to tackle the challenges of generative AI.

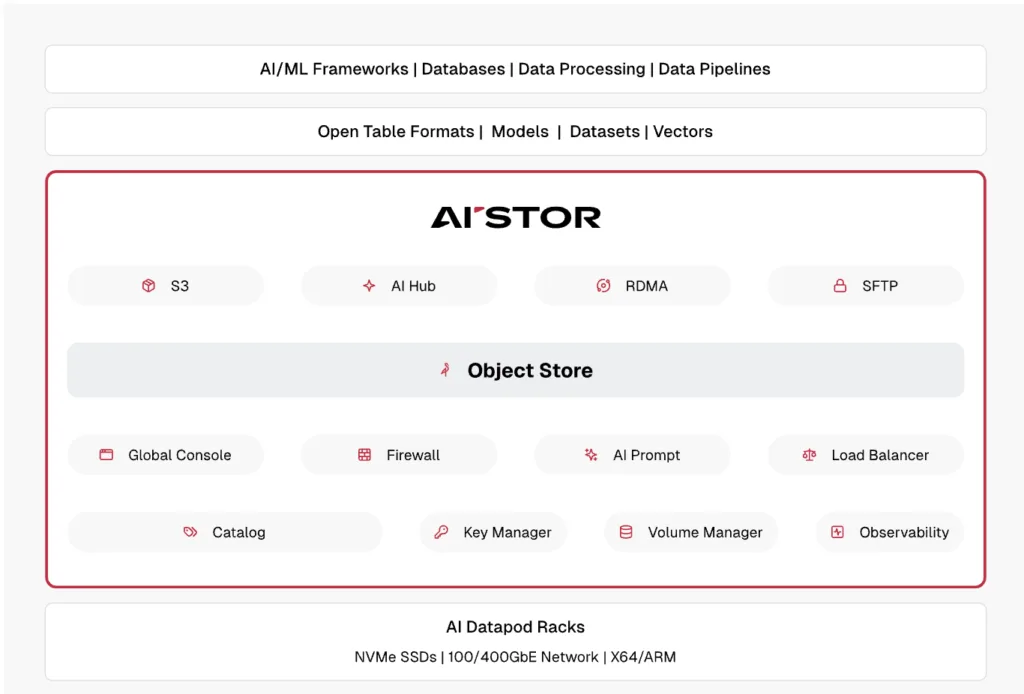

Research Note: MinIO AIStor Object Storage

MinIO recently introduced AIStor, its new object storage solution designed for AI/ML workloads. AIStor leverages insights from large-scale customer environments, some exceeding 1 EiB of data, to address the unique challenges of managing and scaling data infrastructure for AI applications.

SC24: Shaping the Future of IT with High-Performance Computing and AI

Last week’s Supercomputing 2024 (SC24) conference in Atlanta brought together IT leaders, researchers, and industry innovators to unveil advancements in HPC and AI, with even a little quantum computing thrown in.

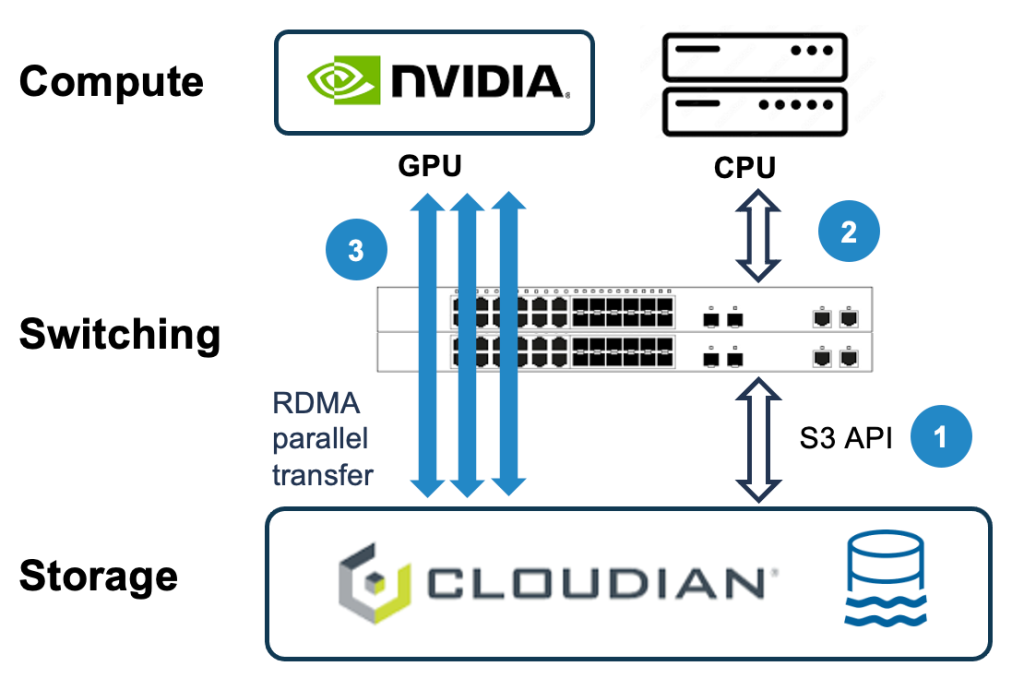

Research Brief: Cloudian Tackles AI Storage Complexity with NVIDIA GPUDirect for Object Storage

Cloudian’s new HyperStore with NVIDIA GPUDirect for Object Storage is a significant step forward in high-performance data management. The seamless integration of GPUDirect with HyperStore allows users to benefit from Cloudian’s scalability and ease of use while enabling a dedicated high-performance data path for GPU workloads.

Research Note: Red Hat Acquires Neural Magic

Red Hat announced a definitive agreement to acquire Neural Magic, an AI company specializing in software solutions to optimize generative AI inference workloads. The acquisition supports Red Hat’s strategy of advancing open-source AI technologies deployed across various environments within hybrid cloud infrastructures.

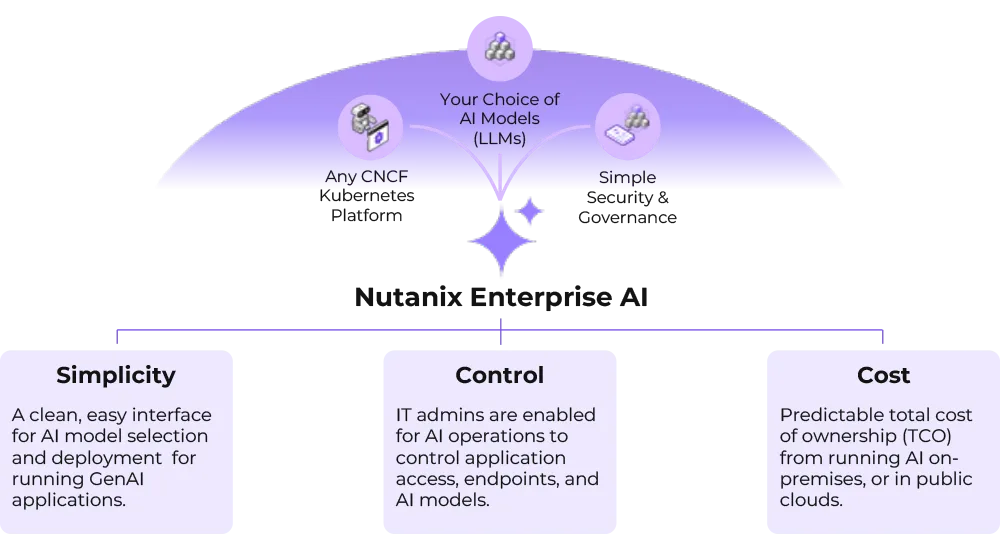

Research Note: Nutanix Enterprise AI

Nutanix recently introduced Nutanix Enterprise AI, its new cloud-native infrastructure platform that streamlines the deployment and operation of AI workloads across various environments, including edge locations, private data centers, and public cloud services like AWS, Azure, and Google Cloud.

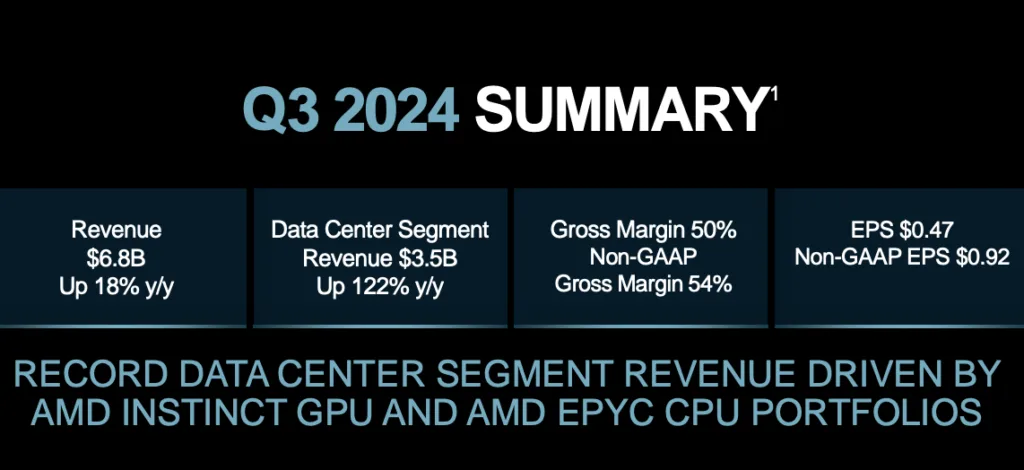

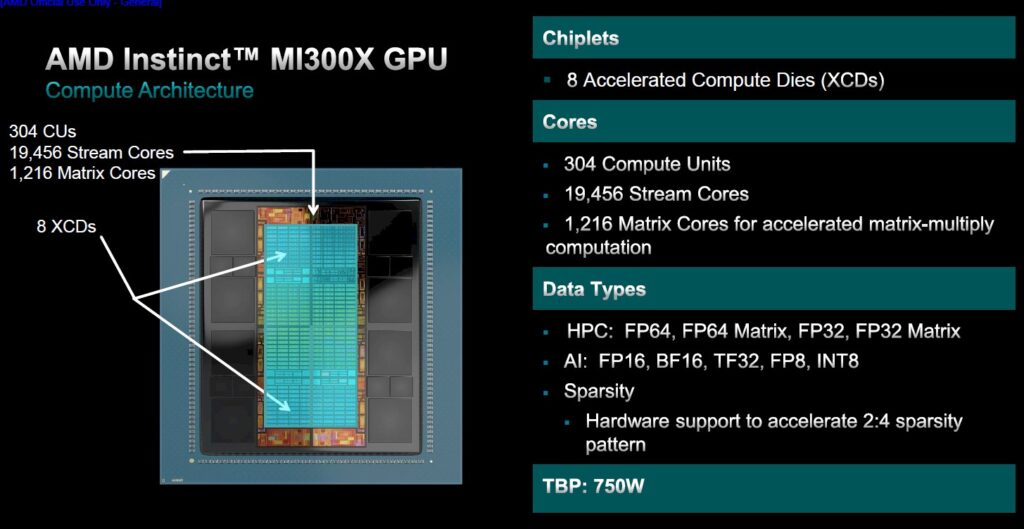

Quick Take: AMD Data Center Group Earnings – Q3 2024

AMD this week announced significant revenue and earnings growth in Q3 2024, driven primarily by exceptional performance in its Data Center segment. AMD’s Data Center revenue increased by 122% year-over-year to a record $3.5 billion, accounting for more than half of AMD’s total revenue for the quarter. On the earnings call, CEO Lisa Su attributed this growth to the success of the EPYC CPUs and MI300X GPUs, which saw strong adoption across cloud, enterprise, and AI applications.

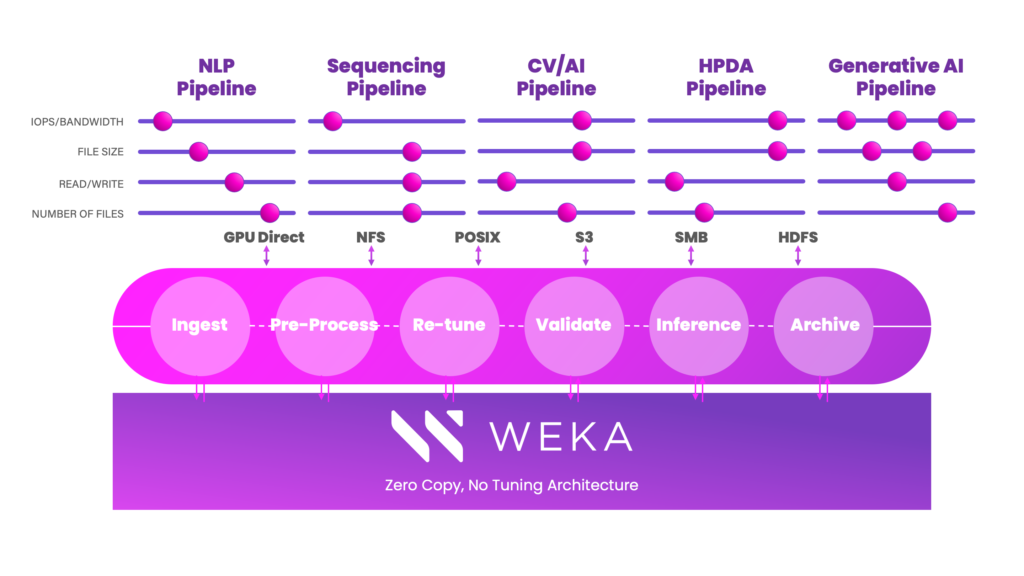

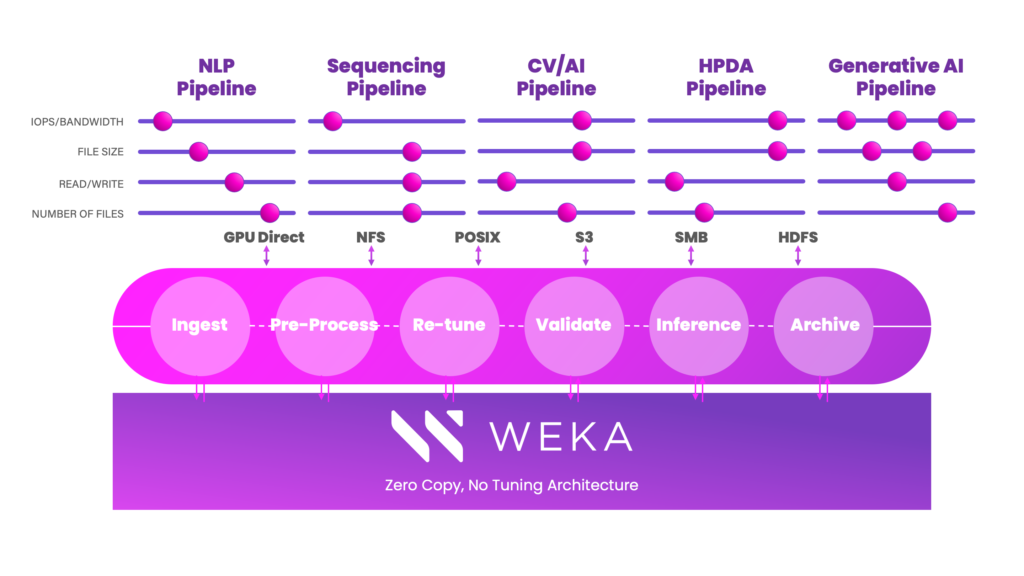

Research Note: WEKA’s New WEKApod Nitro & Prime

WEKA this week expanded its footprint in the AI data infrastructure space with the release of two new data platform appliances designed to meet diverse AI deployment needs.

The new products, WEKApod Nitro and WEKApod Prime, are WEKA’s latest offerings for high-performance data solutions that support accelerated AI model training, high-throughput workloads, and enterprise AI demands. The new solutions address the rapid growth of generative AI, LLMs, and RAG and fine-tuning pipelines across industries.

Research Note: IBM Granite 3.0 Models

IBM recently released Granite 3.0, its third generation of LLMs, designed to balance performance with safety, speed, and cost-efficiency for enterprise use. Its flagship model, Granite 3.0 8B Instruct, is a dense, instruction-tuned LLM optimized for enterprise tasks and trained on 12 trillion tokens spanning multiple natural languages and programming languages.

Research Note: Google Cloud Database & Related GenAI Announcements

Google Cloud recently announced a series of significant upgrades to its database solutions, emphasizing its commitment to supporting enterprise generative AI (gen AI) applications. The new capabilities focus on enhancing developer tools, simplifying database management, and modernizing database infrastructure.

OCP Global Summit 2024: Key Announcements

At the recent OCP Global Summit 2024, the organization unveiled several major initiatives highlighting OCP’s commitment to driving innovation and fostering collaboration in the tech ecosystem. This includes expanding its AI Strategic Initiative with contributions from NVIDIA and Meta, new alliances for sustainability and standardization, and the launch of an open chiplet marketplace.

Research Note: Dell AI Portfolio Updates

Dell Technologies made significant strides in AI infrastructure with its Integrated Rack 7000 (IR7000) launch and associated platforms for AI and HPC. The announcements introduce enhancements in computing density, power efficiency, and data management, catering specifically to AI workloads.

Raspberry Pi Integrates the Sony Semi Solutions Intelligent Aitrios IMX500 Image Sensor

Sony Semiconductor Solutions Corporation (SSS) and Raspberry Pi Ltd recently announced the release of a co-developed AI Camera designed to accelerate the development of AI solutions for edge processing.

Quick Take: Cisco’s Investment in CoreWeave and its AI Infrastructure Strategy

Cisco is reportedly on the verge of investing in CoreWeave, one of the hottest neo-cloud providers specializing in AI infrastructure, in a transaction valuing the GPU cloud provider at $23 billion. CoreWeave has rapidly scaled its AI capabilities by utilizing NVIDIA GPUs for data centers, becoming a key player in the AI infrastructure market.

Research Note: VAST Data’s New AI Updates & Partnerships

VAST made a wide-ranging set of AI-focused announcements that extend its already impressive feature set to directly address the needs of enterprise AI. Beyond the new features, VAST also highlighted new strategic relationships and a new user community that help bring VAST technology into the enterprise.

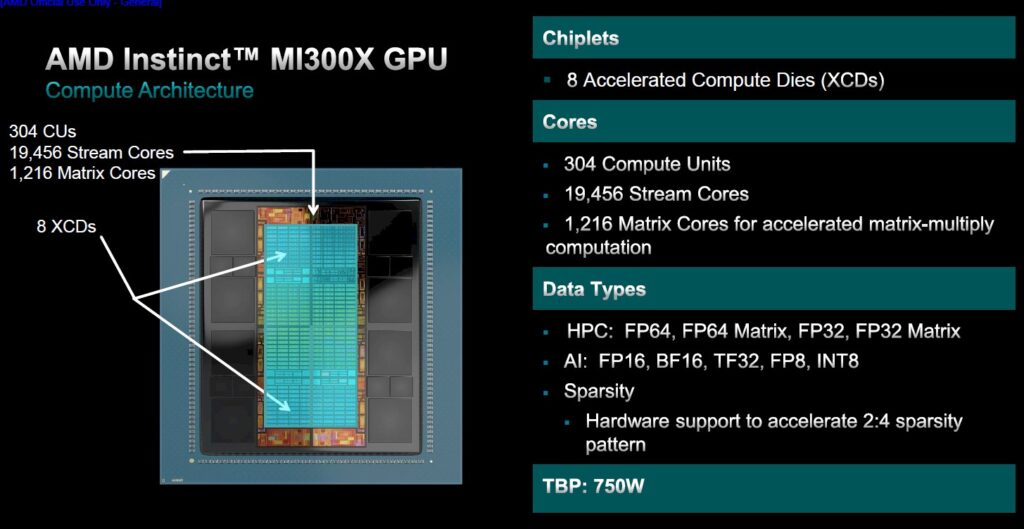

AMD MI300 Gains Momentum with New Vultr & Oracle Cloud Wins

The AMD MI300’s advanced architecture, featuring high memory capacity, low power consumption, and solid performance, is finding a home among cloud providers. Microsoft Azure previously announced MI300-based instances, and now specialty GPU cloud provider Vultr and mainstream CSP Oracle Cloud Infrastructure have announced new integrations with AMD’s MI300 accelerator.

Research Note: NetApp’s New AI Vision

At its recent Insight customer event, NetApp shared its vision for how its solutions will evolve to address the data challenges of enterprise AI. NetApp’s approach combines its existing ONTAP-based products with a new disaggregated architecture and new data manipulation capabilities that promise to deliver the efficiencies enterprise AI demands.

In Conversation: NetApp’s Intelligent Data Infrastructure

Earlier this summer I had the opportunity to talk with NetApp CMO Gabie Boko and NetApp VP of Product Marketing Jeff Baxter in a wide-ranging conversation about the power of data infrastructure, which extends well beyond simple enterprise storage.

As NetApp Insight, its premier customer event, kicks off this week in Las Vegas, I thought we’d revisit the discussion, as it provides useful context for what the company is expected to announce.

Research Note: NetApp & AWS Expand Strategic Collaboration Agreement

NetApp recently announced the expansion of its long-standing partnership with Amazon Web Services. The new Strategic Collaboration Agreement (SCA) strengthens the relationship between the two companies, paving the way for enhanced generative AI capabilities and streamlined CloudOps for joint customers.

Research Note: IBM Boosts Oracle Consulting with Accelalpha Acquisition & Consulting Expansion

IBM recently announced its intent to acquire Accelalpha, a global provider of Oracle Cloud consulting services, as part of its strategy to expand its Oracle consulting expertise and enhance its broader consulting capabilities. Accelalpha’s specialized knowledge in supply chain, logistics, finance, and enterprise performance management bolsters IBM’s ability to help clients accelerate their digital transformations.

Research Note: Lenovo Introduces Range of New AI Services

Lenovo unveiled a new suite of services aimed at accelerating businesses’ AI transformation. These services include GPU resources on demand, AI-driven systems management, and advanced liquid cooling services.

Quick Take: Intel Gaudi 3 on IBM Cloud

IBM and Intel announced a partnership to integrate Intel’s Gaudi 3 AI accelerators into IBM Cloud, which will be available in early 2025. This collaboration aims to enhance the scalability and affordability of enterprise AI, focusing on performance, security, and energy efficiency. IBM Cloud will be the first cloud provider to offer Gaudi 3, which […]

Research Note: The Hammerspace Appliance

Hammerspace recently expanded its offerings by introducing a new line of appliances. Delivering Hammerspace’s data management technology as an appliance simplifies deployment and streamlines the process of purchasing and configuring its solutions.

Research Note: GenAI Enhancements to Oracle Autonomous Database

Oracle recently introduced significant generative AI enhancements to its Autonomous Database to simplify the development of AI-driven applications at enterprise scale. The enhancements leverage Oracle Database 23ai technology to give organizations tools that make it easier to integrate AI, streamline data workflows, and modernize application development.

JFrog Introduces Comprehensive Runtime Security Solution & Nvidia Integration

Announced this week at its annual swampUp event, the new JFrog Runtime is a robust runtime security solution that offers end-to-end protection for applications throughout their lifecycle. Alongside this launch, JFrog also revealed a new product integration with NVIDIA, which will enable users to secure and manage AI models more effectively using NVIDIA’s AI infrastructure.

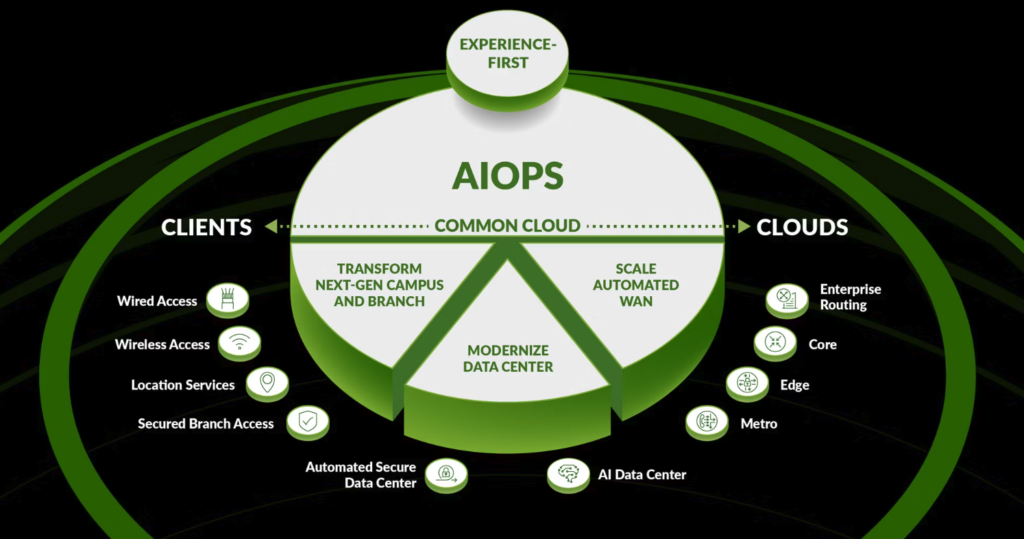

Research Note: Juniper Expands AIOps Portfolio

Juniper Networks expanded its AI-driven networking portfolio by introducing new Ops4AI initiatives. These solutions integrate AI-driven automation and optimization to enhance network performance, particularly focusing on the growing demands of AI workloads within data centers.

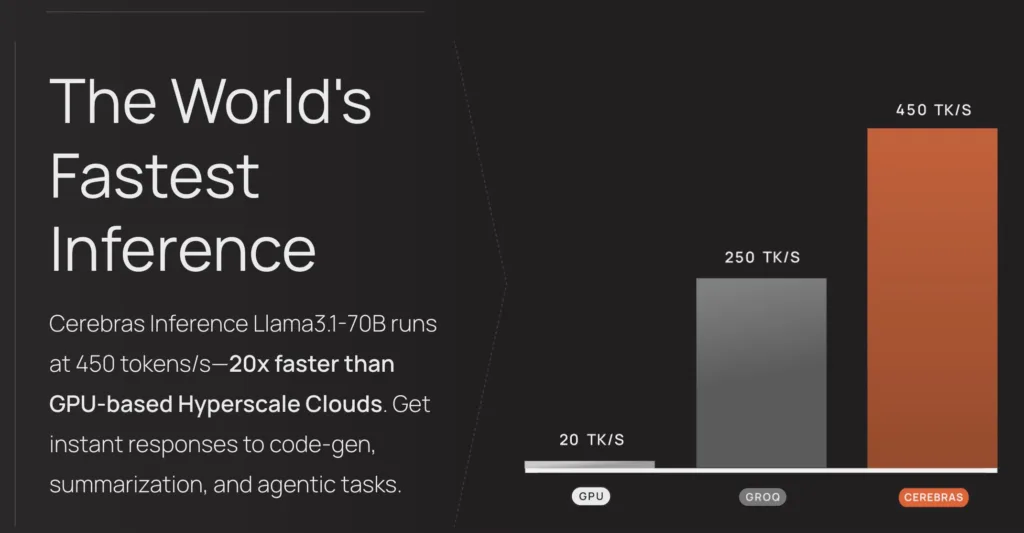

Research Note: Cerebras Inference Service

Cerebras Systems recently introduced Cerebras Inference, a high-performance AI inference service that delivers exceptional speed and affordability. The new service achieves 1,800 tokens per second for Meta’s Llama 3.1 8B model and 450 tokens per second for the 70B model, which Cerebras says makes it 20 times faster than NVIDIA GPU-based alternatives.
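To make the quoted throughput figures more concrete, the sketch below converts tokens per second into the wall-clock time needed to stream a response. It is simple arithmetic under stated assumptions: a constant decode rate, no prompt-prefill time, and no network latency. The 1,000-token response length is a hypothetical example, and the rates are the ones Cerebras quotes above.

```python
# Convert a generation throughput (tokens/second) into the wall-clock time
# needed to emit a response of a given length.
# Assumption: constant decode rate; prefill and network latency are ignored.

def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to generate num_tokens at a steady tokens_per_second."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Throughput figures quoted by Cerebras for its inference service.
CEREBRAS_TPS = {"llama-3.1-8b": 1800.0, "llama-3.1-70b": 450.0}

if __name__ == "__main__":
    response_tokens = 1000  # hypothetical response length
    for model, tps in CEREBRAS_TPS.items():
        print(f"{model}: {generation_seconds(response_tokens, tps):.2f} s")
```

At these rates, a 1,000-token answer takes roughly half a second on the 8B model and a little over two seconds on the 70B model.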

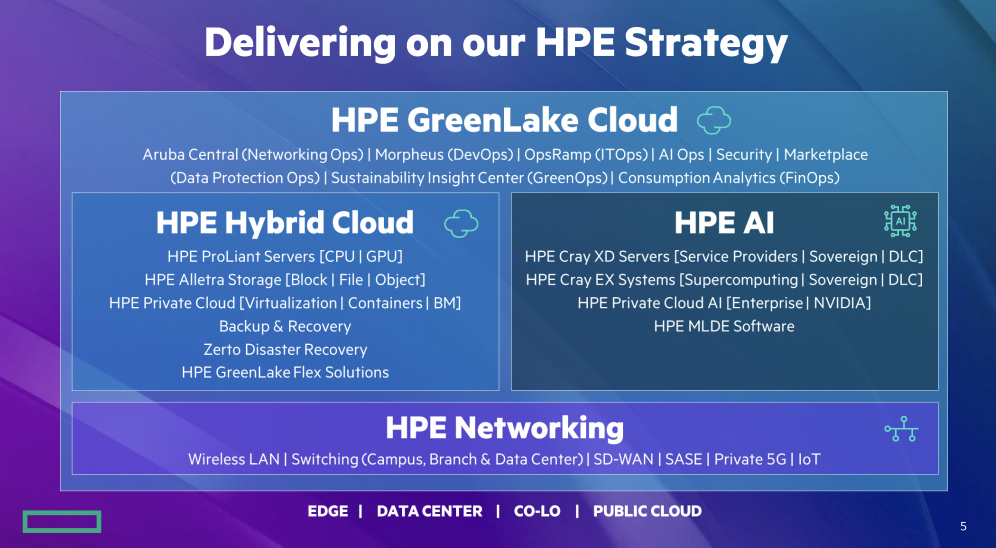

Research Note: HPE Q3 FY2024 Earnings

Hewlett Packard Enterprise (HPE) delivered a strong Q3 FY2024 earnings report, beating Wall Street estimates with its $7.7 billion in net revenue, up 10% year over year and exceeding guidance. The company saw growth across AI systems, hybrid cloud, and networking.

Research Note: NVIDIA NIM Agent Blueprints

NVIDIA launched its new NIM Agent Blueprints, a catalog of pre-trained, customizable AI workflows to help enterprise developers quickly build and deploy generative AI applications for critical use cases, such as customer service, drug discovery, and data extraction from PDFs.

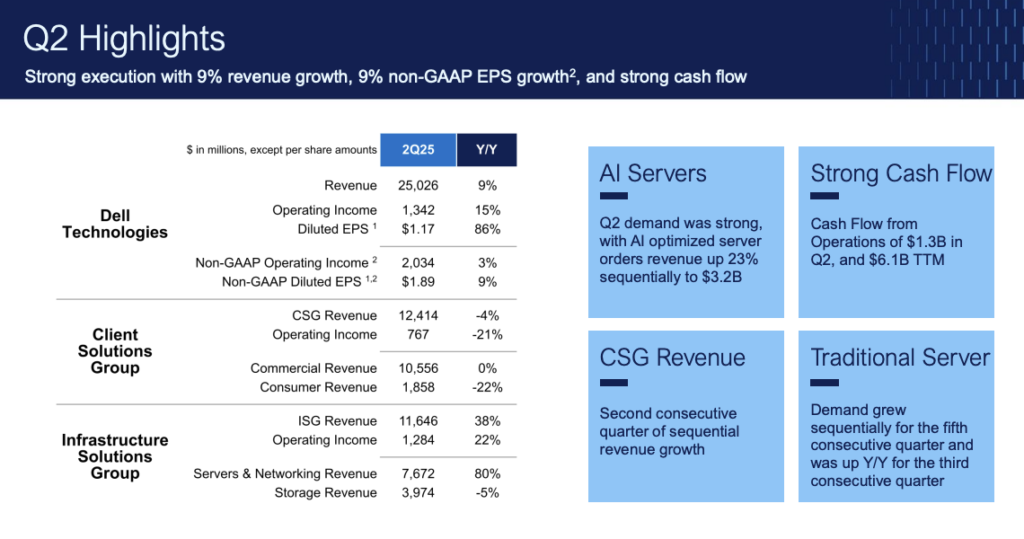

Research Note: Dell Q2 FY2025 Data Center Earnings

Dell Technologies’ latest earnings report paints a picture of a company amid a transformative shift, fueled by increasing demand for AI-driven solutions. In its second quarter of fiscal 2025, Dell delivered strong revenue growth, underscored by the rapid expansion of its Infrastructure Solutions Group (ISG) and a notable surge in AI server sales.

Research Note: IBM Telum II & Spyre AI Accelerators

At the Hot Chips 2024 conference in Palo Alto, California, IBM unveiled the next generation of enterprise AI solutions: the IBM Telum II processor and the IBM Spyre Accelerator. These new technologies are designed to meet the demands of the AI era, providing enhanced performance, scalability, and AI capabilities. Both are expected to be available in 2025.

Research Note: AMD Acquires ZT Systems

AMD announced its strategic acquisition of ZT Systems, a specialty provider of AI and general-purpose compute infrastructure for major hyperscale companies, in a deal valued at $4.9 billion. The acquisition aligns with AMD’s AI strategy to enhance its capabilities in AI training and inferencing solutions for data centers.

Research Note: Palantir Q2 2024 Earnings

Palantir Technologies delivered strong Q2 2024 earnings, underlining its position as a leader in enterprise AI solutions. The company’s strategic focus on moving from AI prototypes to full-scale production has driven significant growth and expanded its customer base.

Research Note: Qualcomm FQ3 2024 Earnings

In its fiscal third quarter, Qualcomm demonstrated strong financial performance, driven by its continued success in diversifying its business beyond mobile handsets into sectors like automotive, IoT, and PCs. With its focus on innovation, particularly in AI and advanced computing, Qualcomm is well-positioned to sustain its leadership across various industries.

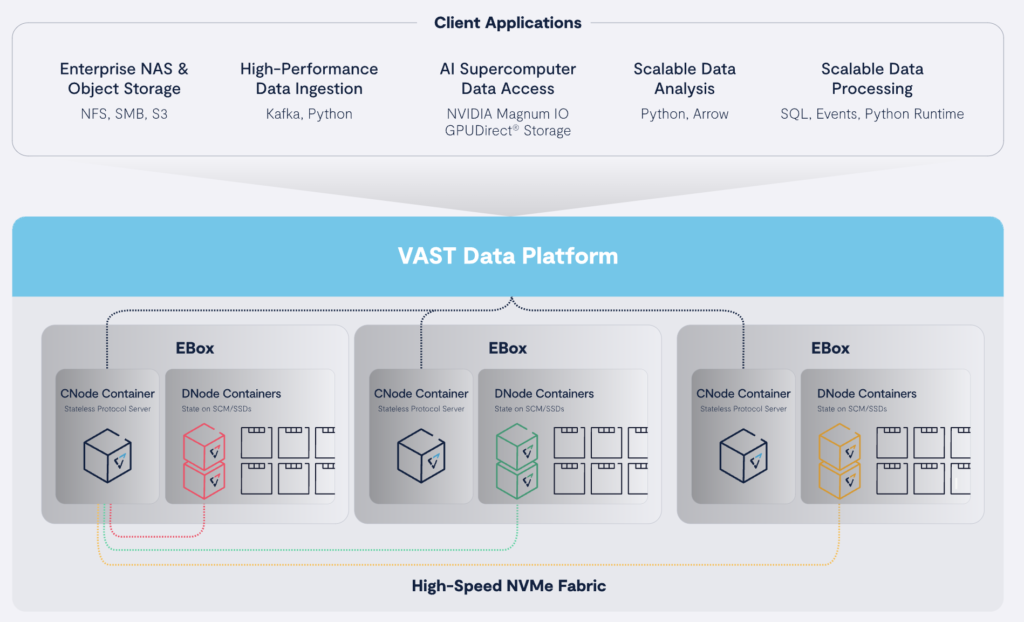

Research Report: Solving AI Data Pipeline Inefficiencies, the VAST Data Way

While AI is foundational to the next wave of digital transformation, traditional data and storage infrastructure (even many parallel file systems) isn’t prepared for today’s AI lifecycle, which places unprecedented demands on storage and data infrastructure.

Developing an effective data infrastructure for AI requires a holistic approach, considering data, database, processing, and storage as a unified entity. This is how VAST Data approaches the challenge.

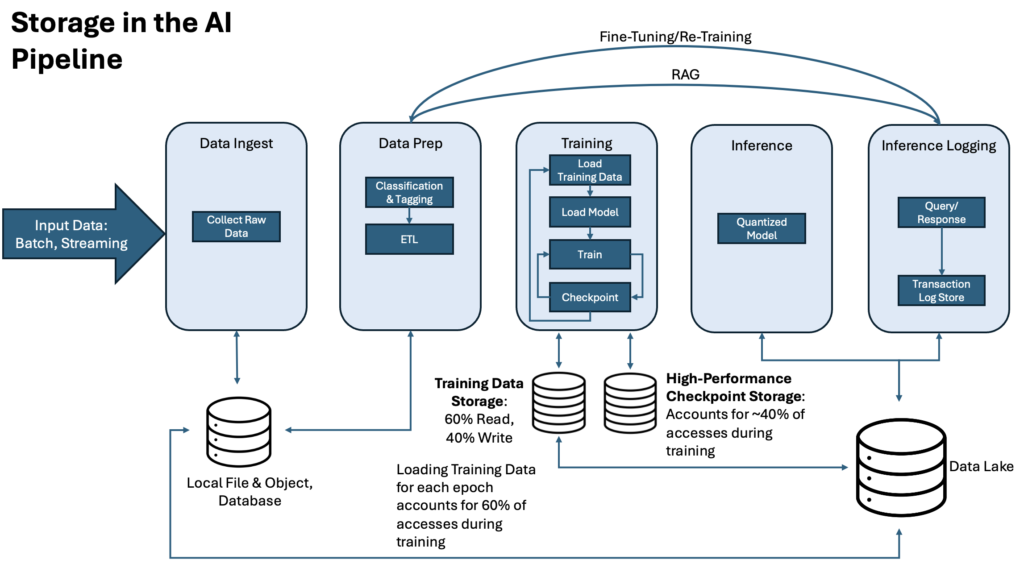

Research Report: Impact of Storage Architecture on the AI Lifecycle

Traditional storage solutions, whether on-premises or in the cloud, often fail to meet the varying needs of each phase of the AI lifecycle. These legacy approaches are particularly ill-suited for the demands of distributed training, where keeping an expensive AI training cluster idle has a real economic impact on the enterprise.

Let OpenAI GPT-4o Mini Introduce Itself

We usually write our own content, using LLMs to help refine our research, but in honor of OpenAI releasing its new GPT-4o Mini language model just a day after Meta released Llama 3.1, and on the same day Mistral released its new Mistral Large 2, we’ll let GPT-4o Mini tell you about itself.

Research Note: Meta Llama 3.1

Meta recently released its new Llama 3.1 large language model, setting a new benchmark for open-source models. This latest iteration of the Llama series enhances AI capabilities while underscoring Meta’s continuing commitment to democratizing advanced technology. Along with the updated model, Meta also released a new suite of ethical AI tools.

Research Note: NVIDIA AI Foundry & NIM Inference Microservices

NVIDIA announced its new NVIDIA AI Foundry, a service designed to supercharge generative AI capabilities for enterprises using Meta’s just-released Llama 3.1 models, along with its new NIM Inference microservices.

The new offerings significantly advance the ability to customize and deploy AI models for domain-specific applications.

Research Note: Mistral NeMo 12B Small Language Model

Mistral AI and NVIDIA launched Mistral NeMo 12B, a state-of-the-art language model for enterprise applications such as chatbots, multilingual tasks, coding, and summarization. The collaboration combines Mistral AI’s training data expertise with NVIDIA’s optimized hardware and software ecosystem, offering high performance across diverse applications.

Research Note: NetApp Updates Cloud Offerings

NetApp announced new capabilities designed for strategic cloud workloads, including GenAI and VMware, to help reduce the resources and risks for managing these workloads in hybrid multi-cloud environments.

Research Note: AWS Updates Bedrock

Amazon Web Services (AWS) announced a series of significant enhancements to its Bedrock platform aimed at bolstering the capabilities and reliability of generative AI applications. These enhancements focus on improved data connectivity, advanced safety features, and robust governance mechanisms.

Research Note: AMD Acquires Silo AI

AMD announced a definitive agreement to acquire Silo AI, Europe’s largest private AI lab, for approximately $665 million in an all-cash transaction. This acquisition aligns with AMD’s strategy to deliver end-to-end AI solutions based on open standards, enhancing its partnership with the global AI ecosystem.

Quick Take: VAST Data Platform Achieves NVIDIA Partner Network Certification

VAST Data announced that its VAST Data Platform has been certified as a high-performance storage solution for NVIDIA Partner Network cloud partners. The certification highlights VAST’s position as a leading data platform provider for AI cloud infrastructure and enhances its collaboration with NVIDIA in building next-generation AI factories.

Research Note: Lenovo AI Updates

Lenovo recently introduced new enterprise AI solutions designed to simplify AI adoption. These include turnkey services, business-ready vertical solutions, and energy-efficient innovations to accelerate practical AI applications.

Oracle Releases APEX 24.1 Low-Code Development Platform

Oracle recently announced that its APEX 24.1 low-code development platform is now available for download. It is being rolled out globally across OCI APEX Application Development and Autonomous Database Cloud Service regions.

In Conversation: Extending HCI to the Edge

I recently sat down with Nutanix’s Greg White, Senior Director of Product Marketing, to understand the challenges of deploying resources, including AI, to the edge. Greg also talked in depth about how hyperconverged infrastructure (HCI) can be leveraged to address many of these challenges.

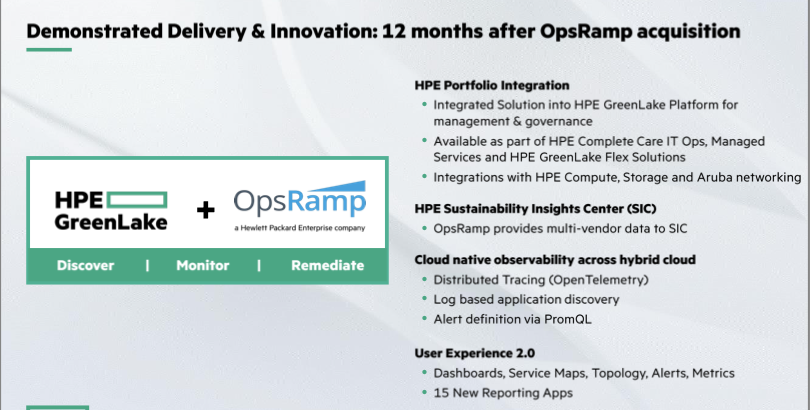

Research Note: HPE OpsRamp Enhancements

Hewlett Packard Enterprise introduced several enhancements to its OpsRamp solution at its recent HPE Discover event in Las Vegas that bolster its autonomous IT operations vision.

HPE Updates Partner Programs for AI

At HPE Discover 2024, HPE announced an ambitious new AI enablement program in collaboration with NVIDIA to boost profitability and deliver new revenue streams for partners. This program includes enhanced competencies and resources across AI, compute, storage, networking, hybrid cloud, sustainability, and HPE GreenLake offerings.

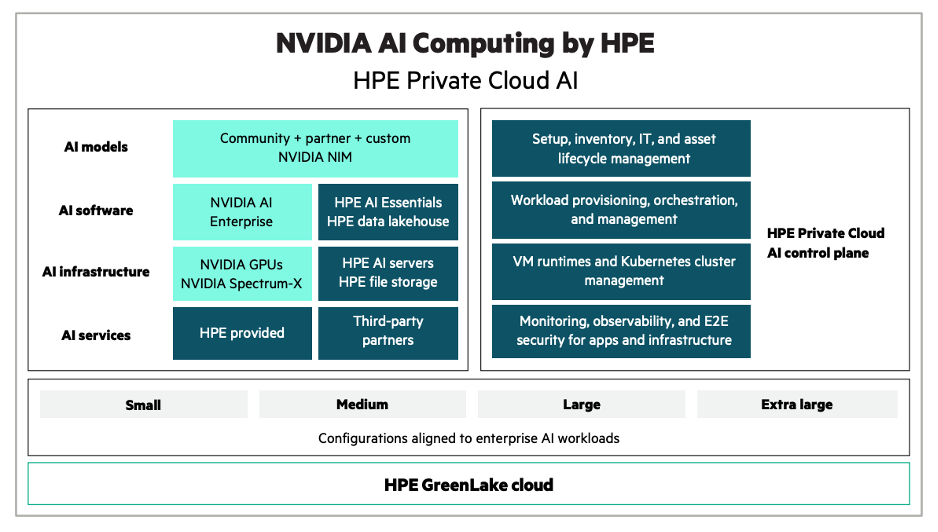

Research Note: NVIDIA AI Computing by HPE

At its HPE Discover event in Las Vegas, Hewlett Packard Enterprise and NVIDIA announced a collaborative effort to accelerate the adoption of generative AI across enterprises.

The collaboration, branded as NVIDIA AI Computing by HPE, introduces a portfolio of co-developed AI solutions and joint go-to-market integrations to address the complexities and barriers of large-scale AI adoption.

Research Note: VAST Data/Cisco AI Collaboration

VAST Data and Cisco announced a new collaboration to deliver a robust, high-performance AI data infrastructure that integrates seamlessly with an Ethernet-based AI fabric designed to handle data at an exabyte scale. Each company brings specialized expertise to create a unified, high-performance AI data platform.

Research Note: AMD Computex MI325X & MI350 Accelerator Announcements

At the 2024 Computex event in Taiwan, AMD CEO Lisa Su revealed details about AMD’s upcoming MI350 and MI325X accelerators, follow-ons to its current MI300X products, highlighting significant advancements in AI performance and memory capacity. The new products are positioned as key components in AMD’s strategy to lead the AI accelerator market.

Quick Take: Lenovo & Cisco Expand Relationship for AI Innovation

Ahead of Cisco Live, Lenovo and Cisco announced a global strategic partnership to deliver fully integrated infrastructure and networking solutions that accelerate digital transformation and AI innovation for businesses of all sizes.

Quick Take: IBM & AWS Collaborate on Responsible AI

At the IBM Think conference, IBM announced a collaboration with Amazon Web Services (AWS) to integrate the full suite of IBM’s watsonx AI and data platform with AWS services. The partnership helps streamline enterprise AI scaling through an open, hybrid approach with comprehensive governance.

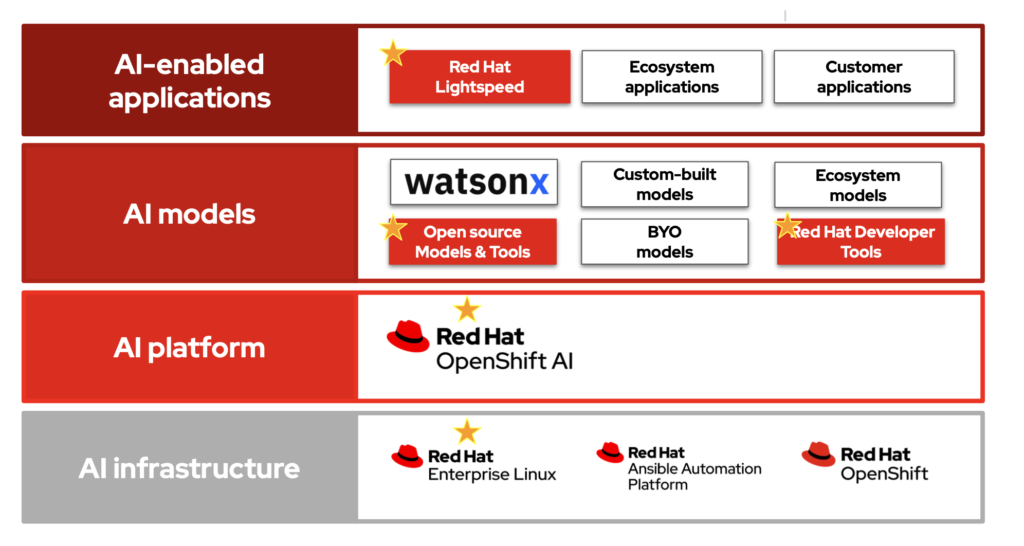

Research Note: Red Hat Summit 2024 AI Announcements

At its recent Red Hat Summit, Red Hat announced several new products and enhancements, many of which simplify or enable the use of AI within the enterprise. These include its new OpenShift AI, OpenShift Lightspeed enhancements, and a new RHEL AI release.

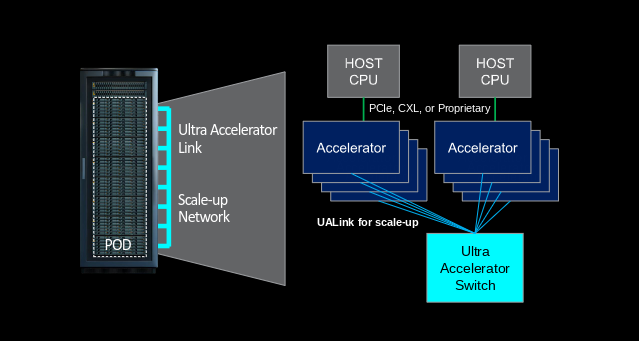

Research Note: UALink Alliance & Accelerator Interconnect Specification

UALink is a new open standard designed to rival NVIDIA’s proprietary NVLink technology. It enables high-speed, direct GPU-to-GPU communication, which is crucial for scaling complex computational tasks across multiple GPUs or other accelerators within servers or computing pods.

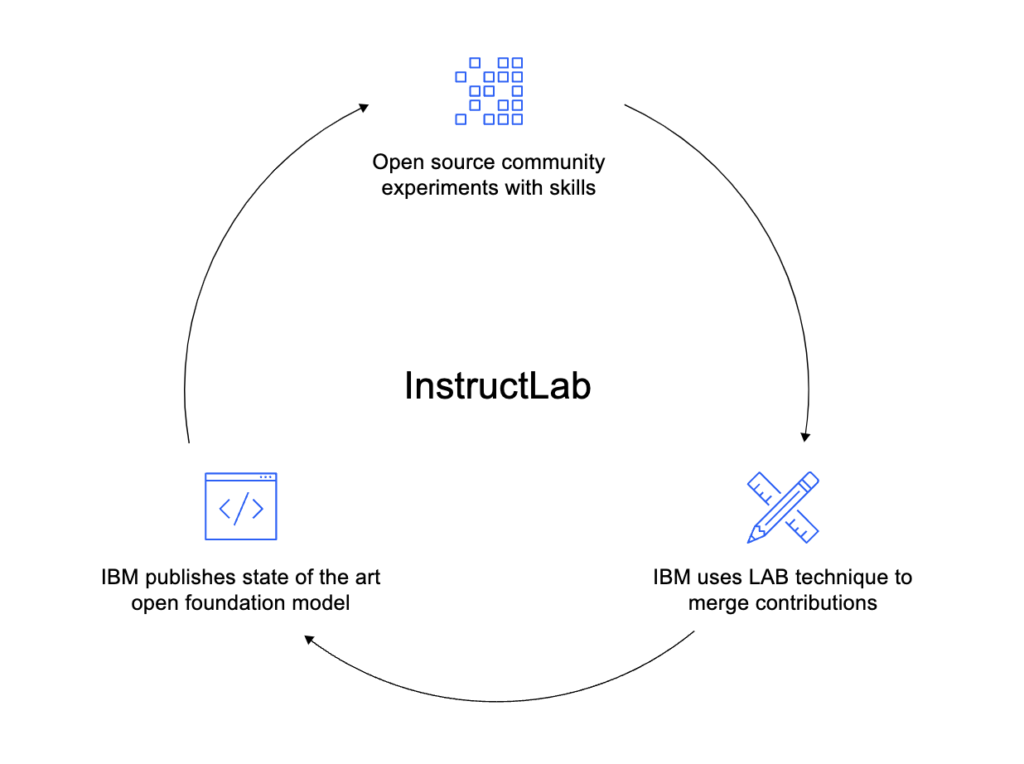

Research Note: IBM InstructLab LLM Tool Kit

At IBM’s 2024 Think conference in Boston, IBM Research unveiled InstructLab, developed in collaboration with Red Hat, to address the inefficiencies of existing training approaches by enabling collaborative, cost-effective model customization.

Quick Take: Microsoft Corp. and G42 Digital Investments in Kenya

Microsoft and G42 announced a substantial digital investment initiative in Kenya, partnering with the Republic of Kenya’s Ministry of Information, Communications, and the Digital Economy.

The initiative, supported by an initial $1 billion investment arranged by G42, aims to enhance Kenya’s digital infrastructure, AI capabilities, and connectivity, fostering the region’s digital transformation and economic growth.

Quick Take: CoreWeave $7.5B Debt Financing to Expand AI Infrastructure

GPU-cloud provider CoreWeave announced a definitive agreement for a $7.5 billion debt financing round. The funds will be used to expand CoreWeave’s high-performance computing infrastructure to meet the demands of existing contracts with enterprise customers and AI innovators.

Microsoft’s New Phi-3 Model Additions

This week at the Microsoft Build 2024 conference, the tech giant announced an exciting set of updates to its Phi-3 family of small, open models. The news includes the introduction of Phi-3-vision, a multimodal model that combines language and vision capabilities, providing developers with powerful tools for generative AI applications.

Quick Take: IBM and Salesforce Strategic Partnership

At IBM Think in Boston this week, IBM and Salesforce announced an expanded strategic partnership integrating IBM’s watsonx AI and Data Platform capabilities with the Salesforce Einstein 1 Platform. This collaboration gives enterprise customers greater choice and flexibility in AI and data deployment.

Research Brief: Dell AI Factory

At NVIDIA GTC earlier this year, Dell announced a collaboration with NVIDIA to deliver the Dell AI Factory with NVIDIA that was heavily based on NVIDIA technology. At this year’s Dell Tech World, Dell went further, introducing its own Dell AI Factory, while also updating the Dell AI Factory with NVIDIA.

Research Note: Google Trillium TPU

The Trillium TPU, Google’s sixth-generation TPU, was announced at Google I/O. It promises unprecedented compute performance, memory capacity, and energy efficiency for generative AI training and inference.

Research Note: Palantir Q1 2024 Earnings

Palantir Technologies reported Q1 2024 earnings that surpassed revenue expectations, with robust 21% year-over-year growth to $634 million. While the company met consensus estimates on EPS, it delivered weaker-than-expected guidance.

Research Note: IBM Open Sources Granite Code Models

IBM is releasing its Granite code models to the open-source community, aiming to simplify coding for a broad range of developers. The Granite models, part of IBM’s wider initiative to harness AI in software development, range from 3 to 34 billion parameters and include base and instruction-following variants.

Quick Take: Panasas Rebrands as VDURA, Shifts to SaaS Model

Legacy parallel file system provider Panasas is transitioning from hardware sales to focusing on the public cloud under its new brand, VDURA. Traditionally, Panasas offered the PanFS software platform, which supports high-capacity drives and diverse connectivity options targeting the HPC market.

Research Report: Oracle Database Vector Search

Oracle introduced full support for vectors, including vector search, in its just-released Oracle Database 23ai. Known as AI Vector Search, this capability represents a significant advancement in how databases can store, index, and search data semantically.
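To illustrate what searching data "semantically" means, here is a minimal, generic sketch of exact (brute-force) nearest-neighbor search using cosine similarity over embedding vectors. It is not Oracle's implementation or its SQL interface; the document IDs and three-dimensional embeddings are hypothetical toy values (real embeddings come from a model and have hundreds or thousands of dimensions), and production systems typically add approximate vector indexes to avoid scanning every row.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as unrelated
    return dot / (norm_a * norm_b)


def top_k(query: Sequence[float],
          corpus: Dict[str, Sequence[float]],
          k: int) -> List[Tuple[str, float]]:
    """Exact k-nearest-neighbor search: score every vector, keep the best k."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical toy embeddings: the first axis loosely means "billing".
    corpus = {
        "invoice": [0.9, 0.1, 0.0],
        "receipt": [0.8, 0.2, 0.1],
        "weather": [0.0, 0.1, 0.9],
    }
    query = [1.0, 0.0, 0.0]  # embedding of a billing-related question
    for doc_id, score in top_k(query, corpus, k=2):
        print(f"{doc_id}: {score:.3f}")
```

The query matches "invoice" and "receipt" even though no keywords are compared; similarity comes entirely from the geometry of the vectors, which is the capability a database-native vector search brings next to ordinary relational data.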

Nvidia And Dell Build An AI Factory Together

At last month’s Nvidia GTC conference, Dell Technologies unveiled the Dell AI Factory with Nvidia, a comprehensive set of enterprise AI solutions aimed at simplifying the adoption and integration of AI for businesses.

Dell also announced enhancements to its flagship PowerEdge XE9680 server, including liquid-cooling options, which allow the server to deliver the full capabilities of Nvidia’s newly announced AI accelerators.

Research Note: NVIDIA H100 Confidential Computing

This week, NVIDIA made the confidential computing capabilities of its flagship Hopper H100 GPU, first previewed in August 2023, generally available. This makes the H100 the first GPU with these capabilities, which are critical for protecting data while it is being processed.

This Research Note looks at confidential computing and how it works on the NVIDIA H100 GPU.

AWS And Nvidia Expand AI Relationship

At the Nvidia GTC event in San Jose, Nvidia and Amazon Web Services made a series of wide-ranging announcements that showed a broad and strategic collaboration to accelerate AI innovation and infrastructure capabilities globally.

The joint announcements included the introduction of Nvidia Grace Blackwell GPU-based Amazon EC2 instances, Nvidia DGX Cloud integration, and, most critically, a pivotal collaboration called Project Ceiba.

Quick Take: Apple’s OpenELM Small Language Model

Apple this week unveiled OpenELM, a set of small AI language models designed to run on local devices like smartphones rather than relying on cloud-based data centers. This reflects a growing trend toward smaller, more efficient AI models that can operate on consumer devices without significant computational resources.

Research Note: Lenovo’s AMD-based AI Portfolio Update

Lenovo announced a comprehensive set of AMD-based updates to its AI infrastructure portfolio, which include GPU-rich and thermal-efficient systems designed for compute-intensive workloads in various industries, including financial services and healthcare.

The new offerings, designed in partnership with AMD, address the growing demand for compute-intensive workloads across industries, providing the flexibility and scalability required for AI deployments.

Research Note: NVIDIA Acquires Run:ai

NVIDIA announced an agreement to acquire Run:ai, a startup specializing in Kubernetes-based GPU workload management and orchestration software. The acquisition is part of CEO Jensen Huang’s strategy to diversify NVIDIA’s revenue streams beyond chips and into software.

Quick Take: Vultr Launches Global Inference Cloud

Vultr recently announced the launch of Vultr Cloud Inference, a new serverless platform aimed at transforming AI scalability and reach. The new solution facilitates AI model deployment and inference capabilities worldwide.

Research Note: Microsoft Phi-3 Small Language Model

Microsoft recently announced its Phi-3 small language models (SLMs), designed to deliver powerful performance at a reduced cost. These SLMs offer a compelling option for developers and businesses looking to harness the potential of generative AI.

This Research Note looks at what Microsoft announced.

Research Note: AWS Bedrock GenAI Enhancements

Amazon announced updates to its Bedrock generative AI platform that expand its capabilities while improving the user experience. These enhancements focus on helping developers create AI applications quickly and securely.

Quick Take: SAP’s New Business AI Capabilities

At the recent NVIDIA GTC event, SAP and NVIDIA announced an expanded partnership to enhance generative AI integration across SAP’s cloud solutions and applications. The collaboration focuses on developing SAP Business AI, which integrates scalable, business-specific generative AI capabilities within various SAP offerings, including SAP Datasphere and SAP BTP.

Quick Take: VAST Data’s Nvidia DPU-Based AI Cloud Architecture

VAST Data recently introduced a new AI cloud architecture based on Nvidia’s BlueField-3 DPU technology. The architecture is designed to improve performance, security, and efficiency for AI data services. The approach seeks to enhance data center operations and introduce a secure, zero-trust environment by integrating storage and database processing into AI servers.

Research Note: Intel Gaudi 3

Intel announced its long-anticipated Intel Gaudi 3 AI accelerator at its Intel Vision event. The new accelerator offers significant improvements over the previous-generation Gaudi 2 processor and promises to challenge Nvidia’s current-generation accelerators in training and inference for LLMs and multimodal models.

Research Note: Arm Ethos-U65 microNPU

Arm introduced its new Ethos-U65 microNPU (Neural Processing Unit). This state-of-the-art AI accelerator facilitates machine learning (ML) inference in many embedded systems and high-performance devices.

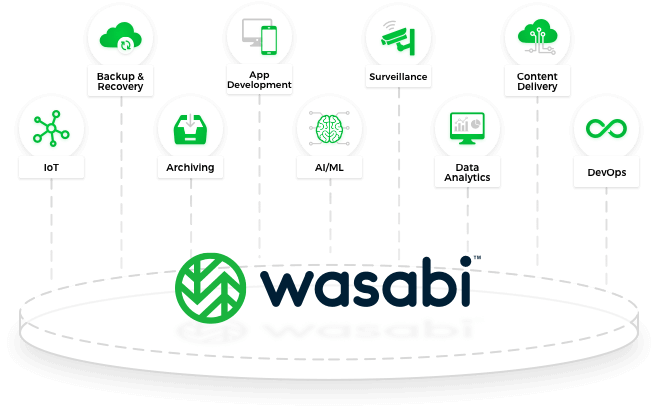

Quick Take: Wasabi AiR Intelligent Media Storage

Wasabi AiR integrates artificial intelligence to transform how video content is stored, accessed, and utilized. The new offering combines the cost-effectiveness and high performance of Wasabi’s object storage with sophisticated AI capabilities, including automatic metadata tagging and multilingual searchable speech-to-text transcription.

Quick Take: Hammerspace Hyperscale NAS For AI & HPC

Hammerspace unveiled its new high-performance NAS architecture, Hyperscale NAS, to meet the growing demands of enterprise AI, machine learning, and deep learning initiatives, as well as the increasing use of GPU computing both on-premises and in the cloud.

Quick Take: DataStax Acquires Langflow

DataStax announced the acquisition of Logspace, the company behind Langflow, a low-code tool for building applications based on Retrieval-Augmented Generation (RAG). The terms of the deal were not disclosed.

Research Note: MLPerf Inference 4.0 Results

MLCommons released the results of its MLPerf Inference v4.0 benchmarks, which introduced two new workloads, Llama 2 and Stable Diffusion XL.

Since its inception in 2018, MLPerf has established itself as a crucial benchmark in the accelerator market. The benchmarks offer detailed comparisons across a variety of system configurations for specific use cases.

Research Note: Databricks DBRX LLM

Databricks launched DBRX, a new open, general-purpose Large Language Model (LLM) that the company says sets a new benchmark for open models in performance and efficiency.

According to Databricks, DBRX surpasses GPT-3.5 and is competitive with closed models such as Gemini 1.0 Pro, making it a strong contender for both general-purpose applications and specialized coding tasks.

Is NVIDIA Lagging in the Lucrative Automotive Segment?

Nvidia's most recent earnings release was a tremendous achievement for the company, with reported revenue of $22.1 billion, up 265% year-on-year. Earnings grew an even more striking 765% year-on-year.

By comparison, its automotive revenue was just $281 million.
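To put those two figures side by side, here is a rough worked calculation using only the numbers quoted above. It assumes the $281 million automotive figure and the $22.1 billion total refer to the same reporting period, and it reads "up 265%" as growth (this year = last year × 3.65). The year-ago revenue is derived from the growth rate, not separately reported here.

$$
\begin{aligned}
\text{Automotive share} &= \frac{\$281\ \text{M}}{\$22{,}100\ \text{M}} \approx 0.0127 \approx 1.3\% \\[4pt]
\text{Implied year-ago revenue} &= \frac{\$22.1\ \text{B}}{1 + 2.65} = \frac{\$22.1\ \text{B}}{3.65} \approx \$6.05\ \text{B}
\end{aligned}
$$

On these assumptions, automotive contributes only about 1% of total revenue.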

Research Note: Dell AI Factory with NVIDIA

At the 2024 NVIDIA GTC conference, Dell Technologies unveiled the Dell AI Factory with Nvidia, a comprehensive set of enterprise AI solutions aimed at simplifying the adoption and integration of AI for businesses.

Dell also announced enhancements to its flagship PowerEdge XE9680 server, including Dell's first liquid-cooled server solution, which allows the server to deliver the full capabilities of NVIDIA's newly announced AI accelerators.

Research Note: NVIDIA & AWS’s Broad AI-Focused Collaboration

At the Nvidia GTC event in San Jose, Nvidia and Amazon Web Services made a series of joint announcements reflecting a broad, strategic collaboration to accelerate global AI innovation and infrastructure capabilities.

The joint announcements included the introduction of NVIDIA Grace Blackwell GPU-based Amazon EC2 instances, NVIDIA DGX Cloud integration, and, most critically, a collaboration called Project Ceiba.

Quick Take: VAST Data and Supermicro Collaborate on Scalable AI Solution

The new joint Supermicro and VAST Data solution combines a parallel architecture with a unified global namespace, which the companies say ensures optimal GPU utilization, scalability, and smooth data access from edge to cloud, eliminating the usual trade-offs between performance and capacity.

Quick Take: IBM & the GSMA’s Collaboration for GenAI Adoption in Telecom

The GSMA and IBM recently announced a significant new collaboration to promote the adoption and development of generative artificial intelligence (AI) skills within the telecom industry.

The partnership is launching with two main initiatives: the GSMA Advance's AI Training program and the GSMA Foundry Generative AI program.

Quick Take: Juniper Networks' AI-Native Networking Platform

Juniper Networks announced its AI-Native Networking Platform, designed to fully integrate AI into network operations to enhance experiences for users and operators. The platform, which Juniper describes as the first of its kind in the industry, is built to use AI to make network connections more reliable, secure, and measurable.

Quick Take: WEKA Brings Data Platform to NexGen Cloud

WekaIO is partnering with NexGen Cloud, a UK-based sustainable infrastructure-as-a-service provider, to establish a high-performance foundation for NexGen Cloud's upcoming AI Supercloud. Both the Supercloud and NexGen Cloud's Hyperstack GPU-as-a-Service platform will use WEKA's technology.

Quick Take: Oracle’s OCI Generative AI Service

Oracle announced the general availability of its OCI Generative AI Service, along with several substantial enhancements to its data science and cloud offerings. Let’s take a look at what Oracle announced.

Research Note: Inside Juniper’s Next-Gen AI Networking

Juniper Networks introduced its AI-Native Networking Platform, designed to fully integrate AI into network operations to enhance experiences for users and operators. The platform, which Juniper describes as the first of its kind in the industry, is built to use AI to make network connections more reliable, secure, and measurable.