Quick Take: Informatica Q1 2024 Earnings

Informatica released its Q1 2024 earnings, showing strong financial performance in the first quarter, reflecting the successful execution of its strategic initiatives.

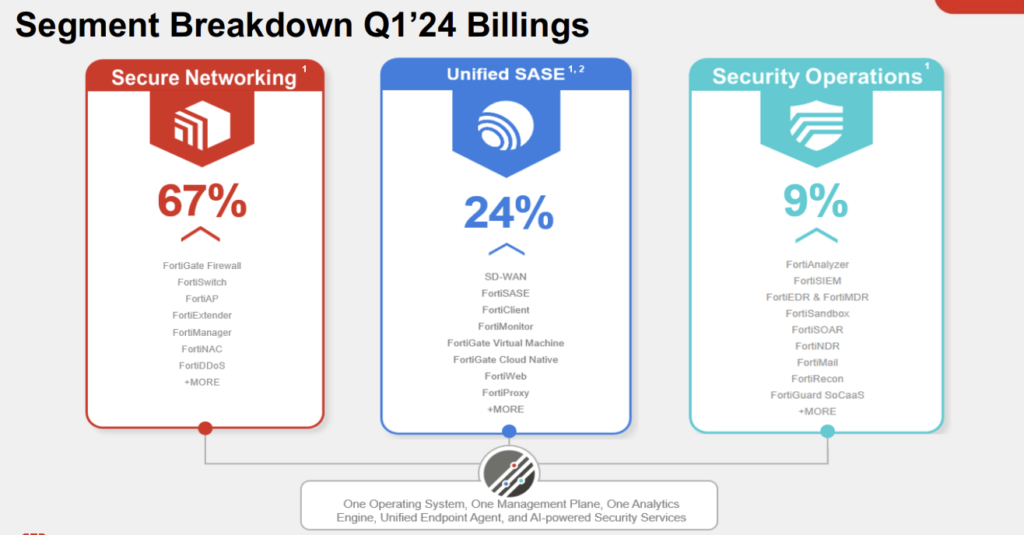

Quick Take: Fortinet Q1 2024 Earnings

Fortinet released its earnings for its fiscal Q1 2024, demonstrating robust financial performance across several key metrics.

Research Brief: Oracle Database 23ai

Oracle announced the release of Oracle Database 23ai. As its name implies, this long-term support version integrates seamlessly with artificial intelligence. Beyond new GenAI-focused features, it includes more than 300 new features that enhance app development, support mission-critical workloads, and simplify the integration of AI with data.

Quick Take: IBM’s Storage Assurance Program

IBM announced its new IBM Storage Assurance program. IBM’s new IT lifecycle management option aims to maximize performance and provide clients with enhanced choice and control within the data center.

Quick Take: Sygnia & NVIDIA’s Edge Security Play

Sygnia and NVIDIA announced a strategic collaboration to enhance cybersecurity in the energy and industrial sectors using AI-powered edge solutions. The aim is to directly improve data collection, threat detection, and response capabilities at the edge of industrial and critical infrastructure networks.

Research Note: Snyk AppRisk Pro

Snyk launched Snyk AppRisk Pro, a new tool designed to enhance application security by integrating AI with application context from various third-party integrations. It gives application security (AppSec) and development teams a comprehensive view of application risk by assessing how an application is built, its code, its business impact, and team responsibilities, helping them prioritize and accelerate the remediation of business-critical risks throughout the full development lifecycle.

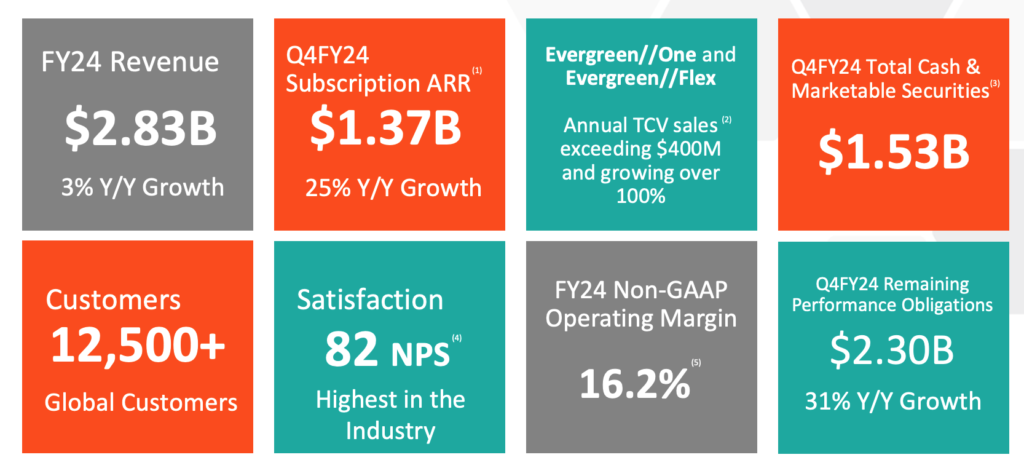

Research Note: Commvault FQ4 2024 Earnings

Commvault released its fiscal Q4 2024 earnings results, closing out its fiscal year ’24 on a high note with substantial growth across key financial metrics.

Sovereign Cloud Explained

As enterprises increasingly migrate to the cloud, ensuring that data remains secure, private, and within legal jurisdictional boundaries is paramount. Sovereign cloud solutions have emerged as a pivotal technological innovation designed to address these complex requirements.

Research Note: MongoDB AI Applications Program

MongoDB announced the launch of the MongoDB AI Applications Program (MAAP). This comprehensive initiative enables organizations to effectively integrate generative AI technologies into their modern applications at an enterprise scale.

Research Note: Atlassian Rovo

Atlassian, a long-time leader in enterprise collaboration and IT workflow management tools, has taken a bold step forward with the launch of Atlassian Rovo. Its new cutting-edge product, powered by Atlassian Intelligence, transforms how organizations manage, search, and utilize data across various platforms and tools.

Amdocs Survey Says: Telecom Slow to Embrace Generative AI

Amdocs, one of the giants in the telecommunications industry, together with analytics company Analysys Mason, released the results of a global survey of communications service providers to understand how generative AI impacts those CSPs. The results were surprising.

While the IT industry rapidly embraces generative AI to understand and manage infrastructure, telecom is moving much more slowly. The Amdocs survey showed that less than a quarter of CSPs use GenAI today. The surprising statistic is that nearly half of the respondents haven’t even begun exploring the technology.

Nvidia And Dell Build An AI Factory Together

At last month’s Nvidia GTC conference, Dell Technologies unveiled the Dell AI Factory with Nvidia, a comprehensive set of enterprise AI solutions aimed at simplifying the adoption and integration of AI for businesses.

Dell also announced enhancements to its flagship PowerEdge XE9680 server, including options for liquid-cooling, which allows the server to deliver the full capabilities of Nvidia’s newly announced AI accelerators.

Research Note: NVIDIA H100 Confidential Computing

This week, NVIDIA made the confidential computing capabilities of its flagship NVIDIA Hopper H100 GPU, previewed in August 2023, generally available. This makes the H100 the first GPU with these capabilities, which are critical for protecting data while it is being processed.

This Research Note looks at confidential computing and how it works on the NVIDIA H100 GPU.

AWS And Nvidia Expand AI Relationship

At the Nvidia GTC event in San Jose, Nvidia and Amazon Web Services made a series of wide-ranging announcements that showed a broad and strategic collaboration to accelerate AI innovation and infrastructure capabilities globally.

The joint announcements included the introduction of Nvidia Grace Blackwell GPU-based Amazon EC2 instances, Nvidia DGX Cloud integration, and, most critically, a pivotal collaboration called Project Ceiba.

Google Cloud Transforming Healthcare With AI

At the recent HIMSS24 event in Orlando, Google Cloud unveiled a set of new solutions designed to enhance interoperability, establish a robust data foundation, and deploy generative AI tools within the healthcare and life sciences sectors, all of which promise to improve patient outcomes.

Research Note: IBM FlashSystem 5300

IBM launched the IBM FlashSystem 5300, a new entry-level storage solution with exceptional price performance, high availability, and enterprise-class data services within a compact 1U rack unit. The new array meets the needs of both small and large organizations facing the challenges of resource-constrained data centers and demanding workloads.

Quick Take: SPDX 3.0 Release

The SPDX community, in collaboration with the Linux Foundation, recently released SPDX 3.0, marking a significant milestone in the Software Bill of Materials (SBOM) communication format.

SPDX 3.0 provides a comprehensive set of updates, including an overhauled model, specification, and license list, and the addition of SPDX profiles designed to handle modern system use cases. This release improves the versatility and adaptability of the SBOM format.

Research Note: IBM FQ1 2024 Earnings

IBM’s recently announced fiscal Q1 2024 earnings show a steady trajectory of growth as IBM successfully executes its strategic shift towards hybrid cloud and AI. The technology giant’s software and consulting segments led the charge, driving revenue increases and demonstrating the ongoing success of IBM’s focus on enterprise solutions.

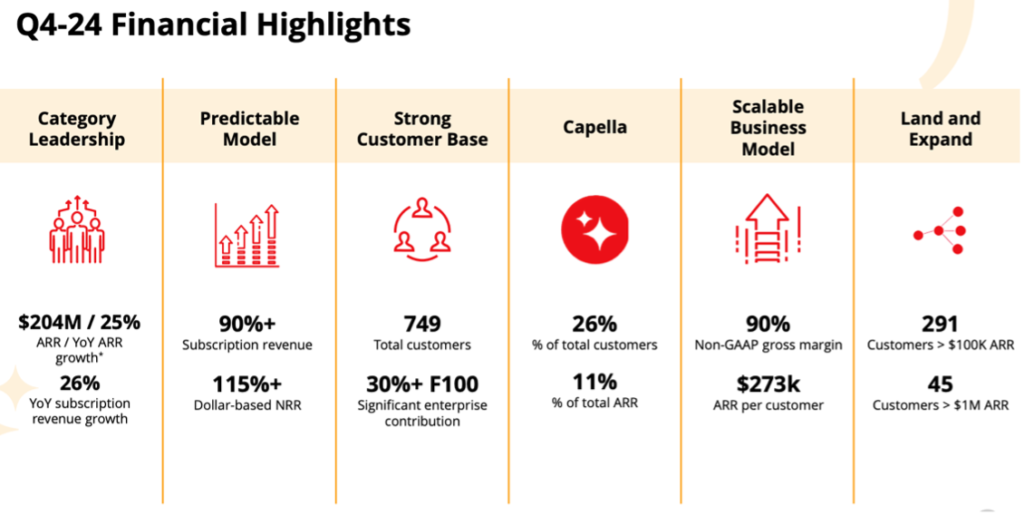

Research Note: CrowdStrike FQ4 2024 Earnings

CrowdStrike released consensus-beating results for its fiscal Q4 2024, showing strong growth and record-breaking performance. The company also delivered better-than-expected guidance.

Research Note: Western Digital FQ3 2024 Earnings

Western Digital released its FQ3 2024 financial results. The company exceeded expectations with revenue of $3.5 billion, a non-GAAP gross margin of 29.3%, and non-GAAP earnings per share of $0.63. The success was attributed to a diversified portfolio of products and structural changes to the business that improved profitability.

What Microsoft’s FQ3 2024 Earnings Reveal About Azure

Microsoft announced its fiscal Q3 2024 results, demonstrating robust growth. The company reported a total revenue of $61.9 billion, a 17% increase year-over-year, primarily driven by the cloud segment.

Microsoft’s Cloud business, which includes Azure and other cloud properties such as LinkedIn, generated over $35 billion in revenue, up 23%. During its earnings call, Microsoft said that Azure’s expansion and the increasing demand for AI infrastructure propelled much of its growth.

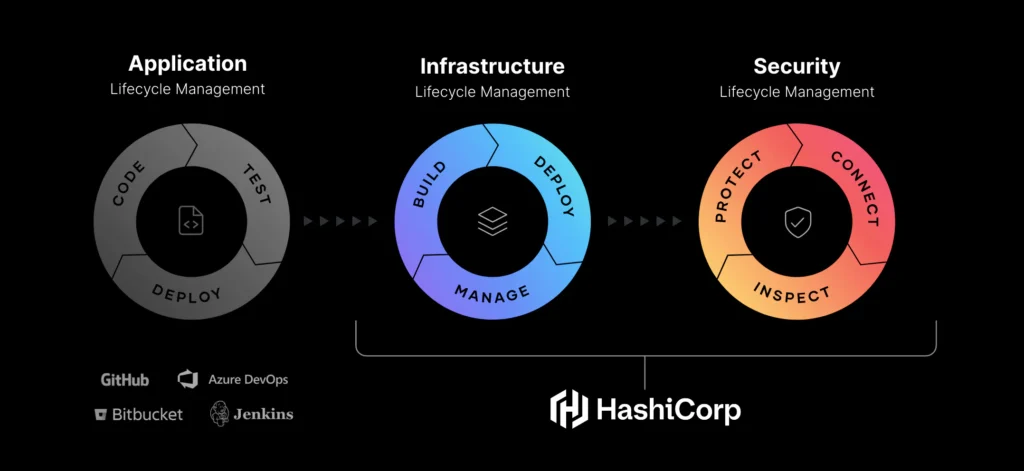

Research Note: IBM Acquires HashiCorp

IBM announced its intention to acquire HashiCorp, a multi-cloud infrastructure automation company, for $35 per share in cash, totaling an enterprise value of $6.4 billion.

HashiCorp specializes in Infrastructure Lifecycle Management and Security Lifecycle Management, crucial for hybrid and multi-cloud environments. This move aligns with IBM’s focus on hybrid cloud and AI, which are vital for today’s businesses.

What Alphabet Earnings Tell Us About Google Cloud

Google Cloud showed significant growth during the quarter, driven by the increasing demand for AI and cloud-based solutions. The business is positioned for continued growth, with a focus on infrastructure leadership, AI-driven innovations, and strong customer adoption.

Quick Take: Western Digital’s FQ3 2024 Earnings

Western Digital reported strong earnings for its third quarter of fiscal 2024, with revenue reaching $3.5 billion, a non-GAAP gross margin of 29.3%, and non-GAAP earnings per share at $0.63. WD exceeded consensus estimates.

Quick Take: Apple’s OpenELM Small Language Model

Apple this week unveiled its OpenELM, a set of small AI language models designed to run on local devices like smartphones rather than rely on cloud-based data centers. This reflects a growing trend toward smaller, more efficient AI models that can operate on consumer devices without significant computational resources.

Quick Take: Intel FQ1 2024 Earnings Results

Intel announced solid Q1 earnings, with revenue meeting expectations and EPS exceeding guidance. The company’s results reflect a disciplined approach to cost reduction and steady progress toward long-term goals.

Research Note: Lenovo’s AMD-based AI Portfolio Update

Lenovo announced a comprehensive set of AMD-based updates to its AI infrastructure portfolio, which include GPU-rich and thermal-efficient systems designed for compute-intensive workloads in various industries, including financial services and healthcare.

The new offerings, designed in partnership with AMD, address the growing demand for compute-intensive workloads across industries, providing the flexibility and scalability required for AI deployments.

Research Note: NVIDIA Acquires Run:ai

NVIDIA announced an agreement to acquire Run:ai, a startup specializing in chip management and orchestration software based on Kubernetes. The acquisition is part of CEO Jensen Huang’s strategy to diversify Nvidia’s revenue streams from chips to software.

Research Report: Oracle Database@Azure

Oracle and Microsoft Azure recently announced Oracle Database@Azure, making Microsoft Azure the only cloud provider besides Oracle itself capable of running Oracle’s database services with the performance, reliability, and security of Oracle Exadata and OCI.

Quick Take: Vultr Launches Global Inference Cloud

Vultr recently announced the launch of Vultr Cloud Inference, a new serverless platform aimed at transforming AI scalability and reach. The new solution facilitates AI model deployment and inference capabilities worldwide.

Research Note: Microsoft Phi-3 Small Language Model

Microsoft recently announced its Phi-3 small language models (SLMs), designed to deliver powerful performance at a reduced cost. These SLMs offer a compelling option for developers and businesses looking to harness the potential of generative AI.

This Research Note looks at what Microsoft announced.

Research Note: AWS Bedrock GenAI Enhancements

Amazon announced updates to its Bedrock generative AI platform that expand its capabilities while improving the user experience. These enhancements focus on helping developers create AI applications quickly and securely.

Research Note: HashiCorp Infrastructure Cloud

HashiCorp introduced “The Infrastructure Cloud” to aid organizations in maximizing their cloud investments, focusing on enabling teams to ship code quickly while minimizing costs and security risks.

Quick Take: SAP’s New Business AI Capabilities

At the recent NVIDIA GTC event, SAP and NVIDIA announced an expanded partnership to enhance generative AI integration across SAP’s cloud solutions and applications. The collaboration focuses on developing SAP Business AI, which integrates scalable, business-specific generative AI capabilities within various SAP offerings, including SAP Datasphere and SAP BTP.

Quick Take: NetApp’s Updates to Google Cloud Offering & GenAI Toolkit Reference

NetApp is expanding its collaboration with Google Cloud to enhance data utilization for generative AI and hybrid cloud workloads, introducing its Flex service level for Google Cloud NetApp Volumes.

Additionally, NetApp previewed its GenAI toolkit reference architecture that facilitates retrieval-augmented generation (RAG) using Google Cloud’s Vertex AI platform.

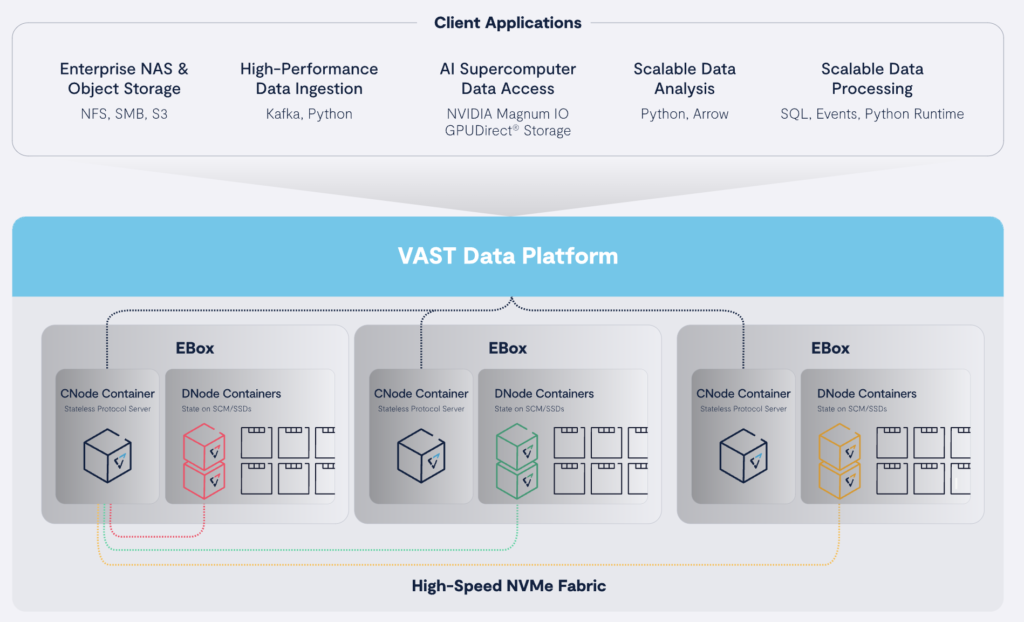

Quick Take: VAST Data’s Nvidia DPU-Based AI Cloud Architecture

VAST Data recently introduced a new AI cloud architecture based on Nvidia’s BlueField-3 DPU technology. The architecture is designed to improve performance, security, and efficiency for AI data services. The approach seeks to enhance data center operations and introduce a secure, zero-trust environment by integrating storage and database processing into AI servers.

Quick Take: Oracle GA’s Globally Distributed Autonomous Database

Oracle recently announced the general availability of its Globally Distributed Autonomous Database, marking a significant enhancement in its database technology.

Research Note: Dell APEX Files for Microsoft Azure

Dell recently introduced its new APEX File Storage for Microsoft Azure, designed to enhance businesses’ multi-cloud capabilities. The new solution integrates Dell PowerScale OneFS, its high-performance storage system, into the Azure cloud, allowing customers to manage and consolidate data efficiently, reduce costs, and improve data security and protection. It also utilizes Azure’s AI tools to provide quicker insights.

Quick Take: NetApp’s Real-Time Ransomware Detection For ONTAP

NetApp announced new cyber-resiliency capabilities to help customers better protect against and recover from ransomware attacks. Integrating AI and ML into its enterprise primary storage solutions, NetApp offers real-time ransomware protection for primary and secondary data, regardless of whether it’s stored on-premises or in the cloud.

CrowdStrike’s Earnings Beat + Flow Acquisition

CrowdStrike released its fiscal Q4 2024 results, beating consensus on revenue and earnings while providing strong guidance for the coming year. The company also announced the acquisition of Flow, which offers unique capabilities for protecting data within cloud environments.

Research Note: SAP HANA Cloud Vector Engine

SAP recently introduced its new SAP HANA Cloud Vector Engine, designed to bridge the gap between LLMs’ advanced capabilities and enterprise-specific business needs.

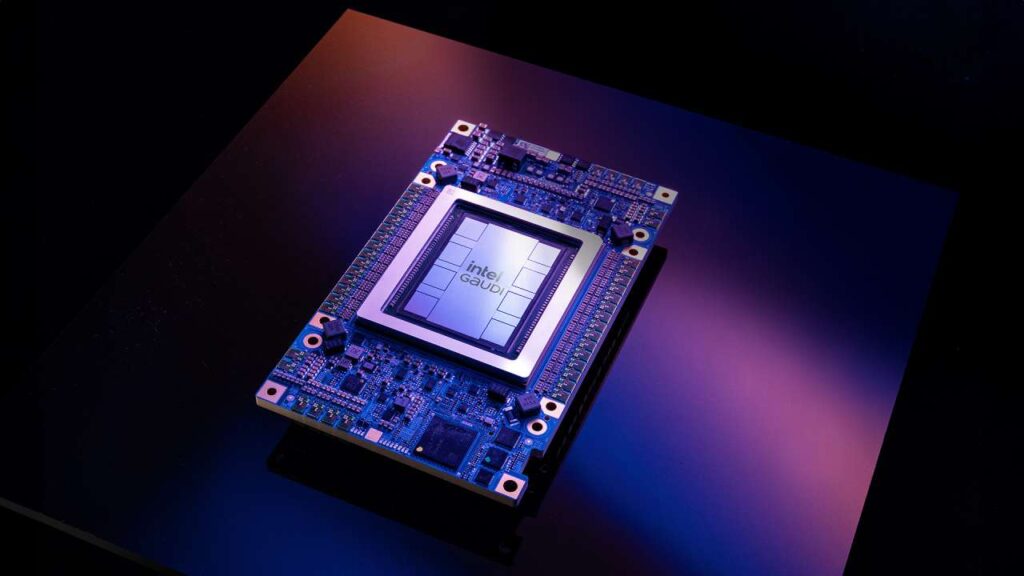

Research Note: Intel Gaudi 3

Intel announced its long-anticipated new Intel Gaudi 3 AI accelerator at its Intel Vision event. The new accelerator offers significant improvements over the previous-generation Gaudi 2 processor and promises to challenge Nvidia’s current-generation accelerators in training and inference for LLMs and multimodal models.

Quick Take: Google Axion Arm-based Processor

At its Google Cloud Next event, Google announced its new in-house designed Axion processor, a series of custom Arm-based CPUs explicitly designed for data center applications. These processors are part of Google’s continued investment in custom silicon to enhance its cloud computing services’ performance and energy efficiency.

Research Note: Arm Ethos-U65 microNPU

Arm introduced its new Ethos-U65 microNPU (Neural Processing Unit). This state-of-the-art AI accelerator facilitates machine learning (ML) inference in many embedded systems and high-performance devices.

Research Note: AMD Versal Edge Series Gen 2

AMD expands its Versal portfolio with the introduction of its Versal AI Edge Series Gen 2 and Versal Prime Series Gen 2 adaptive SoCs. These next-generation solutions cater to the increasing demands for AI-driven and classic embedded systems, providing a balanced mix of performance, power efficiency, functional safety, and security within a single chip.

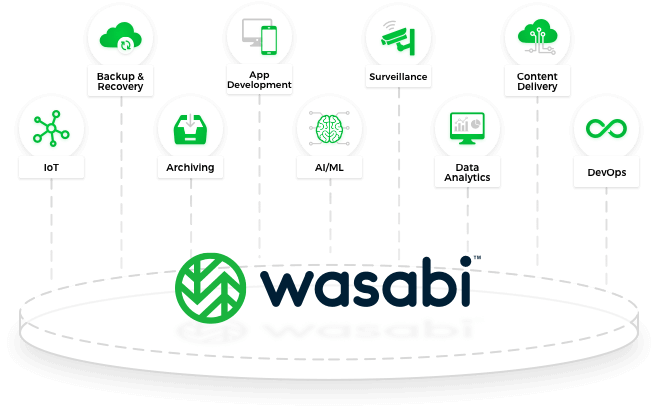

Quick Take: Wasabi AiR Intelligent Media Storage

Wasabi AiR integrates artificial intelligence to transform how video content is stored, accessed, and utilized. The new offering combines the cost-effectiveness and high performance of Wasabi’s object storage with sophisticated AI capabilities, including automatic metadata tagging and multilingual searchable speech-to-text transcription.

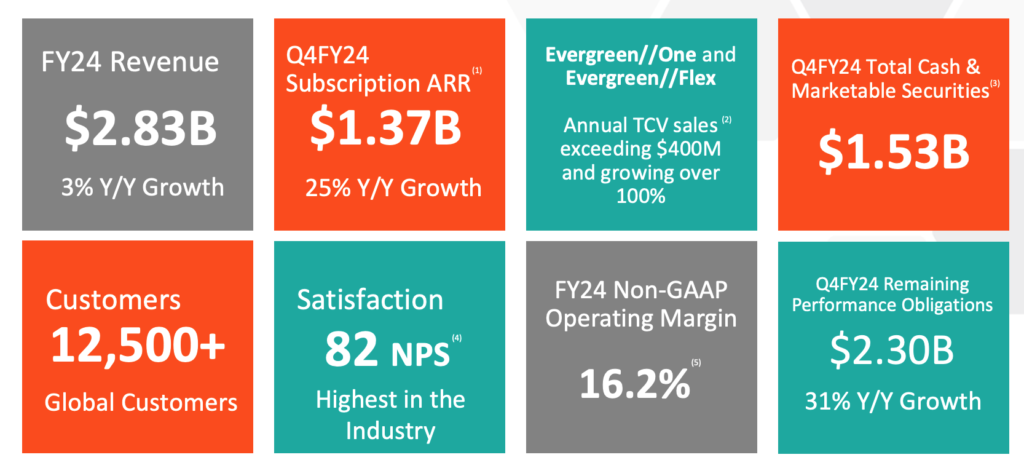

Subscription Growth Fuels Pure Storage Earnings Beat

In its fiscal Q4 2024 earnings release, Pure Storage exceeded revenue and operating profit guidance, demonstrating strong financial performance and market demand for its products and services. The most interesting aspect of Pure’s earnings is the growth of its subscription-based business, now accounting for more than 40% of its total revenue.

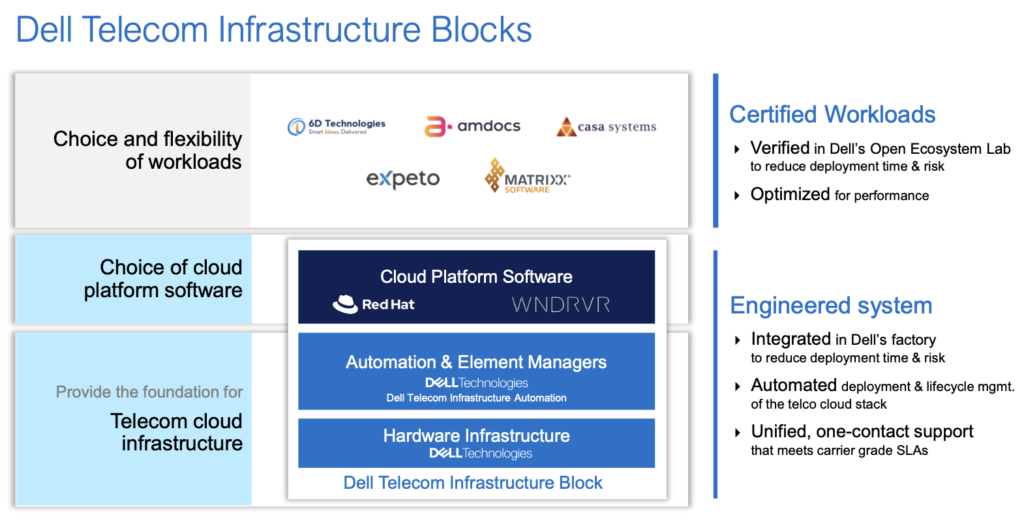

Quick Take: Dell Technologies New 5G Solutions

Dell Technologies introduced several new offerings designed to simplify the deployment of advanced 5G solutions by communication service providers.

The new offerings center around the Dell Telecom Infrastructure Automation Suite, which automates the management and orchestration of a CSP’s network infrastructure. The new suite also sits at the center of Dell’s Telecom Infrastructure Blocks for Red Hat update.

Quick Take: Hammerspace Hyperscale NAS For AI & HPC

Hammerspace unveiled its new high-performance NAS architecture, Hyperscale NAS, to cater to the growing demands of enterprise AI, machine learning, deep learning initiatives, and the increasing use of GPU computing both on-premises and in the cloud.

Quick Take: Lacework Expands Enterprise Capabilities

Cloud security company Lacework announced new platform capabilities to enhance efficiency for security stakeholders.

The enhancements include the introduction of Lacework Explorer, a combination of a security graph and resource explorer for better asset visibility and relationship analysis. New dashboards have been introduced to provide in-depth insights into the performance of security programs against set goals.

Quick Take: DataStax Acquires Langflow

DataStax announced the acquisition of Logspace, the company behind Langflow, a low-code tool for building applications based on Retrieval-Augmented Generation (RAG). The terms of the deal were not disclosed.

Research Note: MLPerf Inference 4.0 Results

MLCommons released the results of its MLPerf Inference v4.0 benchmarks, which introduced two new workloads, Llama 2 and Stable Diffusion XL.

Since its inception in 2018, MLPerf has established itself as a crucial benchmark in the accelerator market. The benchmarks offer detailed comparisons across a variety of system configurations for specific use cases.

Research Note: Databricks DBRX LLM

Databricks launched DBRX, a new open, general-purpose Large Language Model (LLM) that sets a new benchmark for performance and efficiency.

DBRX surpasses the capabilities of existing models like GPT-3.5 while also demonstrating competitive performance with closed models such as Gemini 1.0 Pro, making it a formidable player in general-purpose applications and specialized coding tasks.

Quick Take: Observe’s $115M Series B

Observe, a SaaS observability company focused on transforming the management and analysis of machine-generated data, has secured $115 million in Series B funding. The round closed at a valuation 10x that of its Series A.

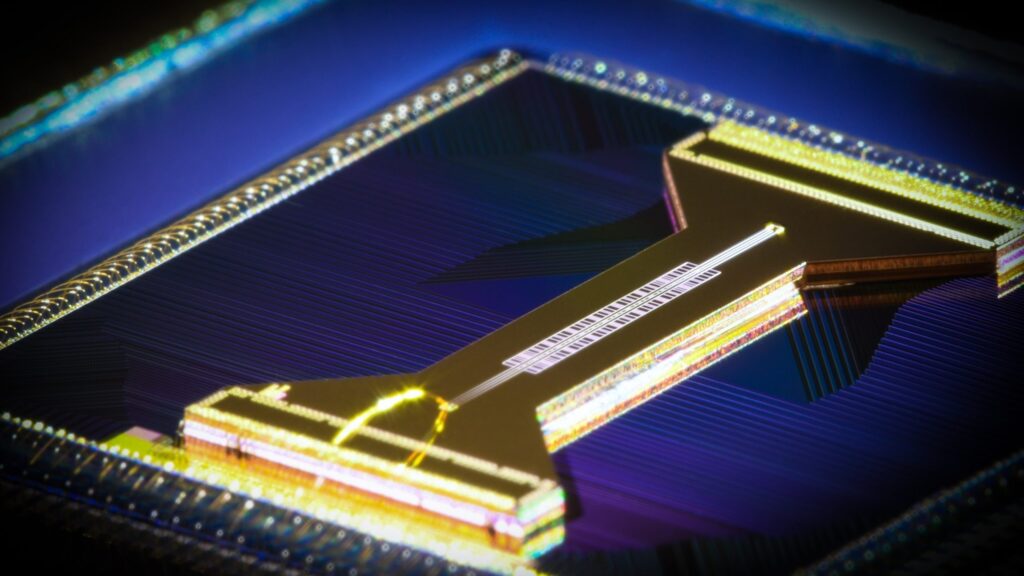

Quick Take: Microsoft & Quantinuum Achieve Quantum Error Correction Breakthrough

Microsoft and Quantinuum announced an advancement in quantum error correction that significantly reduces logical error rates, addressing one of the major hurdles in quantum computing.

Is NVIDIA Lagging in Lucrative Automotive Segment?

Nvidia’s most recent earnings release is a tremendous achievement for the company, with reported revenue of $22.1 billion, up an incredible 265% year-on-year. Earnings grew an equally unbelievable 765% year-on-year.

Its automotive revenue was $281 million.

Research Note: Arm’s New Automotive Cores

Arm recently launched new safety-enabled AE processors incorporating Armv9 technology and server-class performance. These processors are tailored for AI-driven applications to enhance autonomous driving and advanced driver-assistance systems (ADAS).

Quick Take: Microsoft Cloud for Sustainability Gains Data & AI Capabilities

Microsoft introduced new data and AI solutions in its Cloud for Sustainability at its recent AI for Sustainability event. The new offerings will assist organizations transitioning from sustainability pledges to tangible sustainability progress.

Research Note: Dell AI Factory with NVIDIA

At the 2024 NVIDIA GTC conference, Dell Technologies unveiled the Dell AI Factory with Nvidia, a comprehensive set of enterprise AI solutions aimed at simplifying the adoption and integration of AI for businesses.

Dell also announced enhancements to its flagship PowerEdge XE9680 server, including the introduction of Dell’s first liquid-cooled server solution, which allows the server to deliver the full capabilities of NVIDIA’s newly announced AI accelerators.

Research Note: NVIDIA & AWS’s Broad AI-Focused Collaboration

At the Nvidia GTC event in San Jose, Nvidia and Amazon Web Services made a series of wide-ranging announcements that showed a broad and strategic collaboration to accelerate global AI innovation and infrastructure capabilities.

The joint announcements included the introduction of NVIDIA Grace Blackwell GPU-based Amazon EC2 instances, NVIDIA DGX Cloud integration, and, most critically, a pivotal collaboration called Project Ceiba.

Quick Take: VAST Data and Supermicro Collaborate on Scalable AI Solution

Supermicro and VAST Data’s new solution provides an innovative parallel architecture and a unified global namespace that ensure optimal GPU utilization, scalability, and smooth data access from edge to cloud, eliminating the usual trade-offs between performance and capacity.

Quick Take: Cisco Completes Splunk Acquisition

Cisco announced that it’s completed its acquisition of Splunk, positioning Cisco as one of the world’s largest software companies, with the resulting organization promising to bring a new level of visibility and insights across organizations’ entire digital landscapes.

Research Note: Google Cloud AI-Enabled Healthcare Announcements

At the recent HIMSS24 event in Orlando, Google Cloud unveiled a set of new solutions designed to enhance interoperability, establish a robust data foundation, and deploy generative AI tools within the healthcare and life sciences sectors. These solutions promise to improve patient outcomes.

Research Note: Oracle Globally Distributed Autonomous Database

Oracle released its Globally Distributed Autonomous Database. The new offering enhances the management and processing of distributed data, ensuring top-tier scalability, availability, and adherence to data sovereignty laws for users of Oracle Database.

Research Note: NetApp Autonomous Ransomware Protection

NetApp recently announced enhanced cyber-resiliency capabilities to help customers better protect against and recover from ransomware attacks. Integrating AI and ML into its enterprise primary storage solutions, NetApp offers real-time malware protection for both primary and secondary data, irrespective of whether it’s stored on-premises or in the cloud.

Research Note: CrowdStrike FQ4 2024 Earnings

CrowdStrike released its fiscal Q4 2024 results, beating consensus on revenue and earnings while providing strong guidance for the coming year. The company also announced the acquisition of Flow, which offers unique capabilities for protecting data within cloud environments.

Quick Take: CrowdStrike Acquires Flow Security

CrowdStrike announced its pending acquisition of Flow Security, a company specializing in cloud data security with an approach emphasizing runtime analysis for real-time data discovery, classification, risk detection, and policy enforcement.

Research Note: Couchbase FQ4 2024 Earnings

Couchbase announced consensus-beating earnings in its fiscal Q4 2024 release. The company grew revenue by 20% year-over-year to a respectable $50.1M, while its ARR grew 25%.

Research Note: Lenovo’s MWC 2024 Telco Edge Announcements

Lenovo unveiled its latest Edge AI Solutions for the telecom industry at the 2024 MWC in Barcelona, aimed at enhancing AI applications across networks. The new solutions are designed to deliver efficient AI computing at scale, supporting the industry’s shift towards 5G and AI innovations.

Lenovo also announced collaborations with key telecom partners like Deutsche Telekom, Orange, and Telefonica to deploy AI use cases and achieve faster insights through Lenovo’s AI-ready infrastructure.

Research Note: HashiCorp FQ4 2024 Earnings

HashiCorp announced its fiscal Q4 2024 results, beating consensus estimates for both revenue and earnings with solid growth in all the right areas. Let’s take a deeper look at what the company announced.

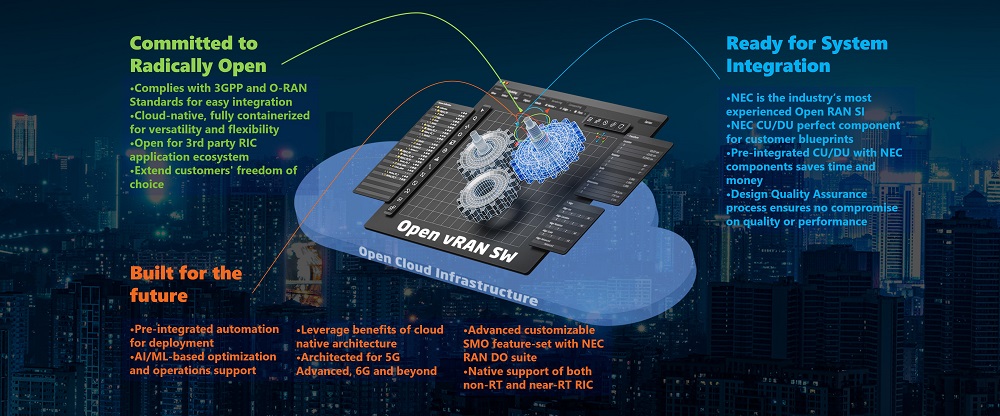

The Breakthrough Verification of vRAN by SoftBank, NEC, and VMware

SoftBank Corp., NEC Corporation, and VMware (recently acquired by Broadcom Inc.) have jointly verified the virtualization of the Radio Access Network (RAN), demonstrating a significant step towards RAN modernization through the convergence of O-RAN architecture and Telco Cloud.

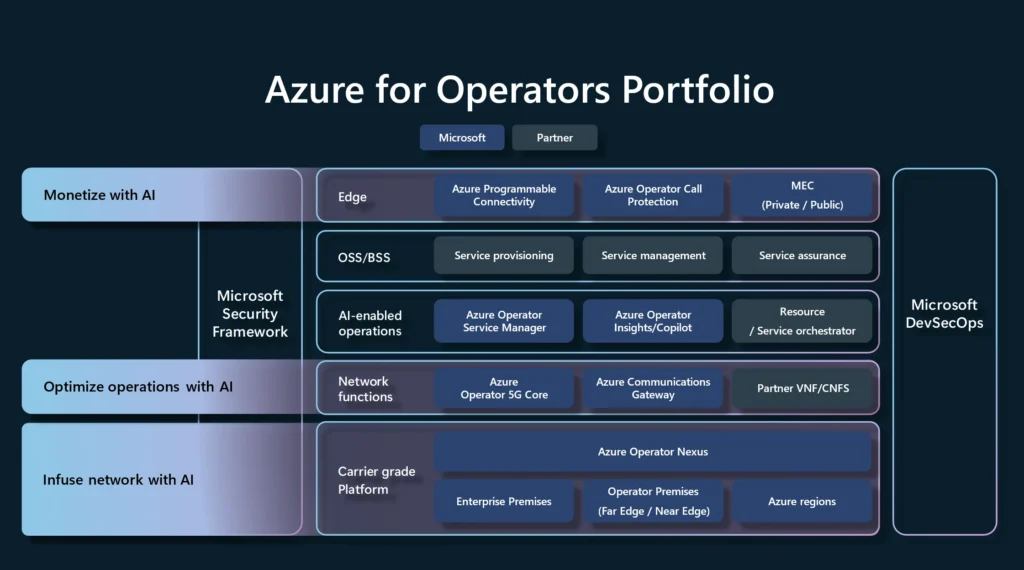

Research Note: Microsoft Updates Azure for Operators

At the recent MWC 2024 in Barcelona, Microsoft shared updates on its Azure for Operators portfolio, demonstrating significant advancements in generative AI and its application in telecommunications.

This Research Note takes an in-depth look at what Microsoft announced.

Quick Take: IBM & the GSMA’s Collaboration for GenAI Adoption in Telecom

The GSMA and IBM recently announced a significant new collaboration to promote the adoption and development of generative artificial intelligence (AI) skills within the telecom industry.

This partnership launches through two main initiatives: the GSMA Advance’s AI Training program and the GSMA Foundry Generative AI program.

Research Note: Intel Edge & AI/RAN MWC Announcements

Intel announced a new edge platform and highlighted its momentum in AI-assisted vRAN/O-RAN acceleration at the recent MWC conference in Barcelona.

Research Note: NEC & Partners Demonstrate Breakthrough in Open vRAN Technology

NEC Corporation, along with Arm, Qualcomm Technologies, Inc., Red Hat, and Hewlett Packard Enterprise (HPE), recently showcased a successful demonstration of Open Virtual Radio Access Network (vRAN) and 5G Core Virtual User Plane Function (vUPF) technology in Tokyo.

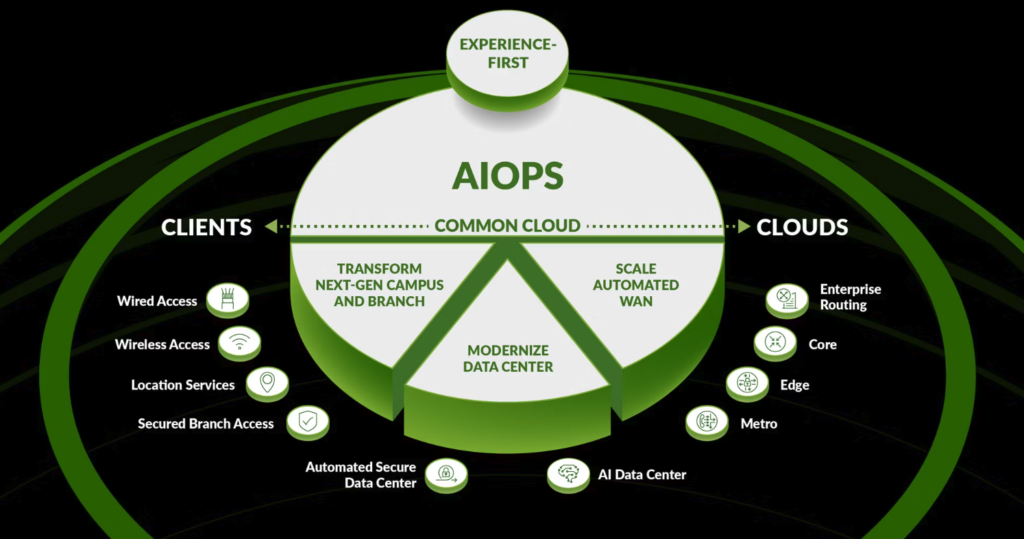

Quick Take: Juniper Networks’ AI-Native Networking Platform

Juniper Networks announced its AI-Native Networking Platform, designed to fully integrate AI into network operations to enhance experiences for users and operators. The platform, a first in the industry, is built to use AI to make network connections more reliable, secure, and measurable.

Quick Take: WEKA Brings Data Platform to NexGen Cloud

WekaIO is partnering with NexGen Cloud, the leading UK-based sustainable infrastructure-as-a-service provider, to establish a high-performance foundation for NexGen Cloud’s upcoming AI Supercloud. This Supercloud and NexGen Cloud’s Hyperstack GPU-as-a-Service platform will leverage WEKA’s technology.

Quick Take: Dynatrace Runecast Acquisition

Earlier this month, on the eve of its annual Perform customer conference, Dynatrace announced a definitive agreement to acquire Runecast, a provider of AI-powered security and compliance solutions, including Cloud Security Posture Management.

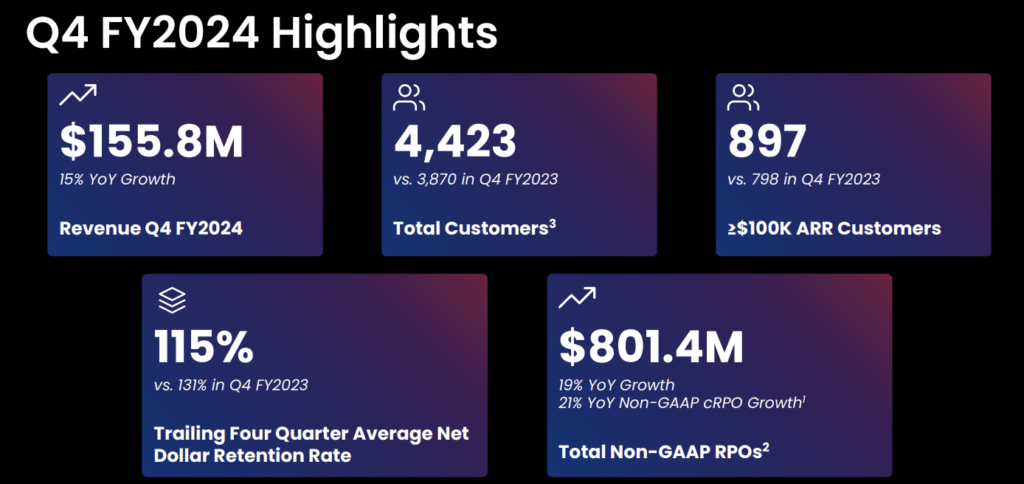

Research Note: Nutanix FQ2 2024 Earnings

Nutanix reported results for its fiscal Q2 2024, which beat consensus estimates for revenue and earnings. The company also provided healthy guidance for the coming quarters.

Research Note: Pure Storage FQ4 2024 Earnings

In its fiscal Q4 2024 earnings release, Pure Storage exceeded revenue and operating profit guidance, demonstrating strong financial performance and market demand for its products and services.

One of the most exciting aspects of Pure’s earnings is the growth of its subscription-based business, now accounting for more than 40% of its total revenue.

Quick Take: Venafi ‘Stop Unauthorized Code’ Solution

Venafi has launched its new “Stop Unauthorized Code Solution,” designed to address the rising complexities inherent in software development security, specifically targeting unauthorized code and software supply chain attacks.

Quick Take: OpenText Cloud Edition 24.1

OpenText recently announced its OpenText Cloud Edition 24.1, bringing new generative AI capabilities to its OpenText Content Aviator, while also bringing new features targeting supply chain efficiency, improved business intelligence, security and governance, and even DevOps. There’s a lot in this release; let’s take a look.

Quick Take: AI-RAN Alliance Launch at MWC Barcelona 2024

The AI-RAN Alliance is a collaborative initiative focused on the integration of artificial intelligence (AI) into cellular technologies to enhance radio access network (RAN) technology and mobile networks.

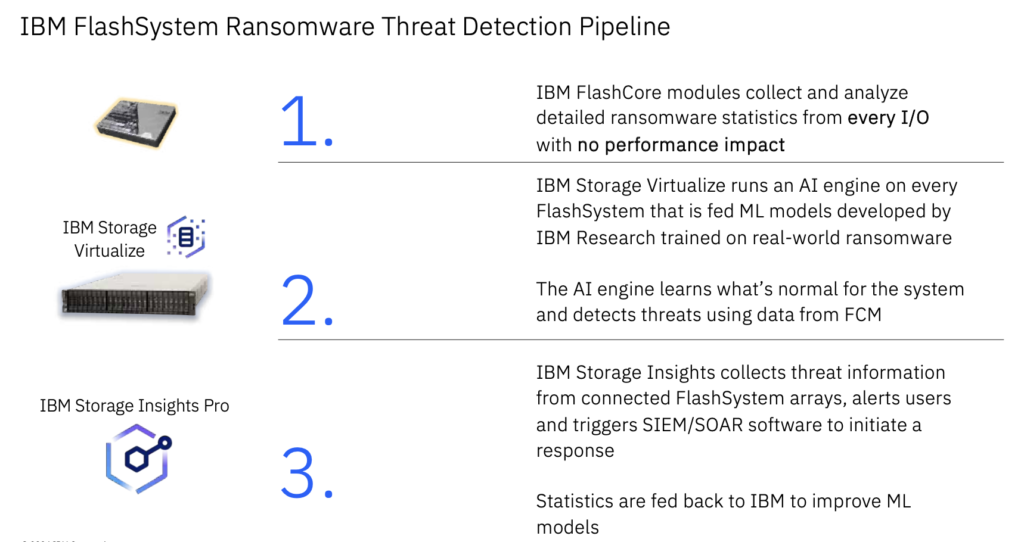

Research Note: IBM’s Enhanced FlashSystem Malware Detection

IBM’s new FlashSystem ransomware detection capabilities are driven by advanced AI technologies, new fourth-generation FlashCore Module (FCM) technology, and IBM Storage Defender software enhancements.

Quick Take: Clumio’s $75M Series D Round

Clumio, an emerging leader in the public cloud backup and recovery space, announced that it raised $75M in Series D funding, showcasing strong investor confidence in its innovative cloud data management solutions.

Research Note: Dell Telecom Infrastructure Blocks for Red Hat 2.0

Dell Technologies has updated its Dell Telecom Infrastructure Blocks for Red Hat, a strategic solution designed to help telecommunications operators deploy and scale out modern telecom networks, particularly for 5G. These blocks are critical components in simplifying the implementation of cloud-native network infrastructures.

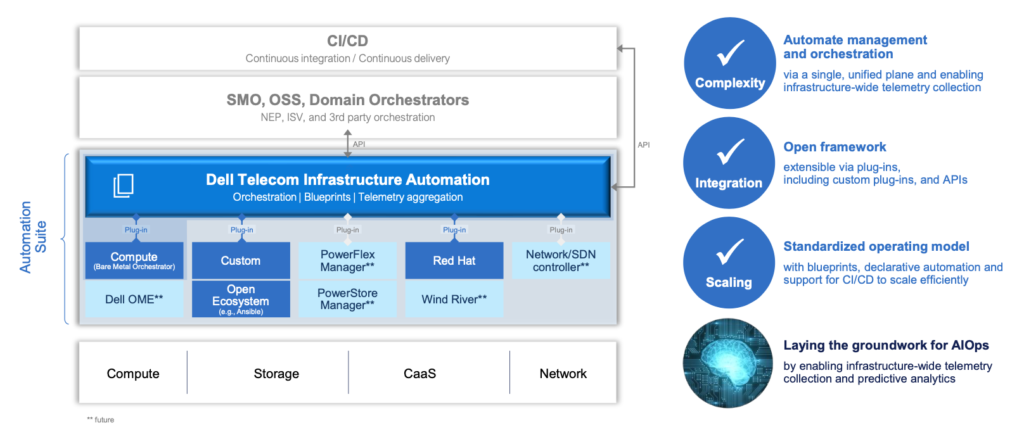

Research Note: Dell Telecom Infrastructure Automation Suite

Dell Technologies introduced its new Dell Telecom Infrastructure Automation Suite, a solution designed to help CSPs streamline the transition to modern, cloud-based telecom networks.

Research Note: Lenovo FQ3 2024 Earnings

This Research Note explores Lenovo Group’s financial and operational performance during its fiscal Q3 2024.

Amidst fluctuating global market conditions, Lenovo’s adaptability and innovative approach are scrutinized, offering valuable insights into how the tech giant continues to navigate challenges and capitalize on opportunities within the dynamic tech industry.

Quick Take: Oracle’s OCI Generative AI Service

Oracle announced the general availability of its OCI Generative AI Service, along with several substantial enhancements to its data science and cloud offerings. Let’s take a look at what Oracle announced.

Research Note: Hammerspace Hyperscale NAS

Hammerspace unveiled its new high-performance NAS architecture, Hyperscale NAS, to cater to the growing demands of enterprise AI, machine learning, deep learning initiatives, and the increasing use of GPU computing both on-premises and in the cloud.

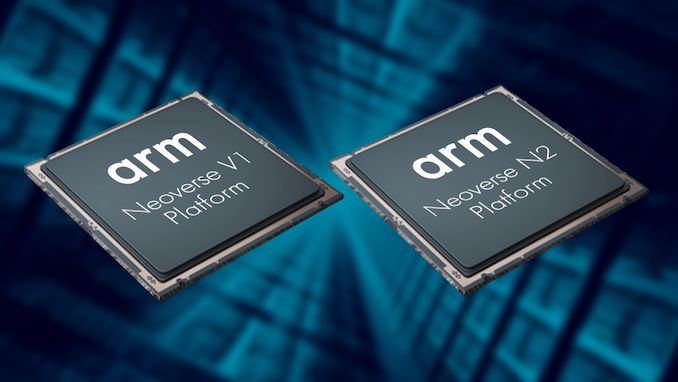

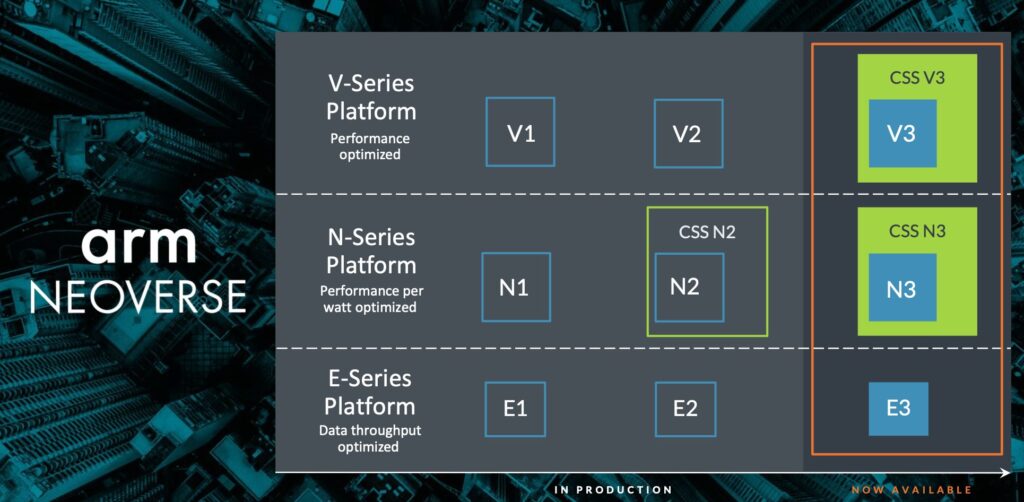

Research Brief: Inside Arm’s Neoverse CSS N3 & V3 Announcements

Arm brings two new Neoverse compute subsystems to market, each based on its third-generation Neoverse IP, extending its N-Series and V-Series product lines. These new platforms, Neoverse CSS N3 and V3, aim to improve performance-per-watt and support the implementation of new technologies like chiplets.

Research Note: Palo Alto Networks FQ2 2024 Earnings

Palo Alto Networks announced its fiscal Q2 2024 earnings with impressive figures that beat consensus for both revenue and EPS, showcasing its continued growth and market resilience. The company reported a 19% year-over-year increase in revenue, reaching $1.98 billion, along with a 16% rise in billings.

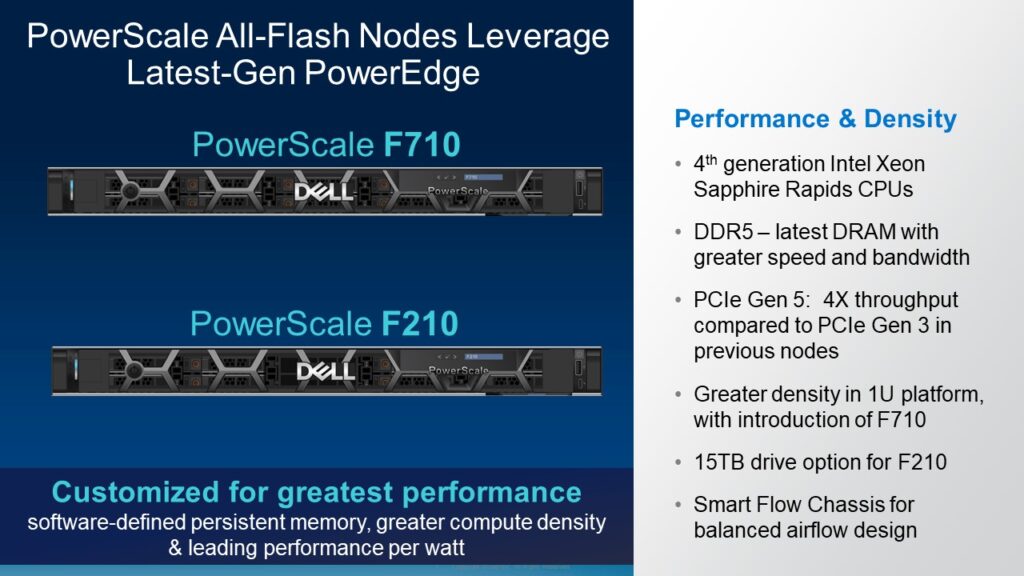

Quick Take: Dell’s PowerScale F210 and F710

Dell Technologies today announced the introduction of two new all-flash storage arrays, the PowerScale F210 and F710, as part of its commitment to delivering AI-optimized infrastructure. The PowerScale platform is designed to be AI-ready, offering exceptional performance, scalability, efficiency, federal-grade security, and multi-cloud agility.

Quick Take: IBM Acquisition of Advanced’s Application Modernization Division

IBM announced the pending acquisition of application modernization capabilities from Advanced, a strategic move by IBM to bolster its position in hybrid cloud and AI. The terms of the deal were not disclosed, but it is expected to close in the second quarter of 2024.

This acquisition will enhance IBM Consulting’s services in mainframe application and data modernization, marking a significant step in IBM’s ongoing commitment to supporting clients through their digital transformation journeys.

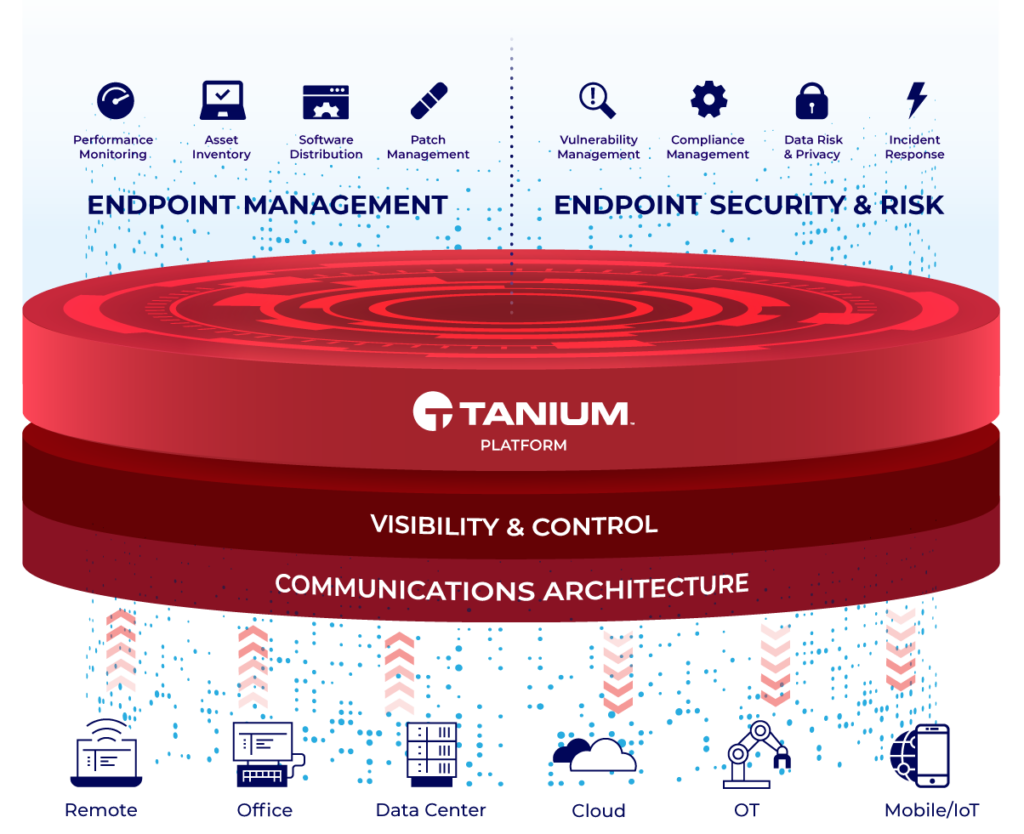

Quick Take: Tanium’s Autonomous Endpoint Management (AEM)

Tanium, a leader in converged endpoint management, is bringing AEM into the mainstream, highlighting the technology as the future direction of its XEM platform at its recent Converge event.

Let’s look at AEM and how it might impact the cybersecurity market.

Observability’s Now Strategic for IT

Observability allows IT organizations to monitor and understand the internal states of systems by examining their outputs. Observability has transcended its origins in monitoring, evolving into a sophisticated discipline that leverages AI to provide deep insights and predictive capabilities; it’s become a strategic need for enterprise IT.

Quick Take: Microsoft & Vodafone’s Strategic Engagement

Vodafone and Microsoft announced a significant 10-year strategic partnership aimed at driving digital transformation for businesses and consumers across Europe and Africa, leveraging their combined strengths in technology and connectivity.

The collaboration will focus on enhancing Vodafone’s customer experience through Microsoft’s AI, expanding Vodafone’s managed IoT connectivity platform, developing new digital and financial services for SMEs, and revamping Vodafone’s global data center strategy.

Research Note: Honeywell’s $300M Investment in Quantinuum

Honeywell continues its investments in quantum computing by announcing a $300 million equity fundraising for Quantinuum, the leading integrated quantum computing company, valuing it at $5 billion pre-money.

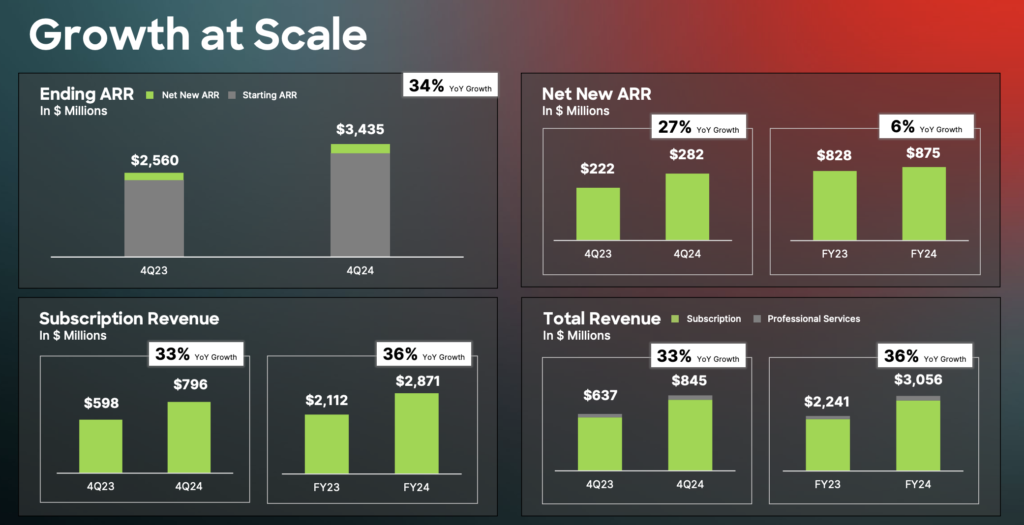

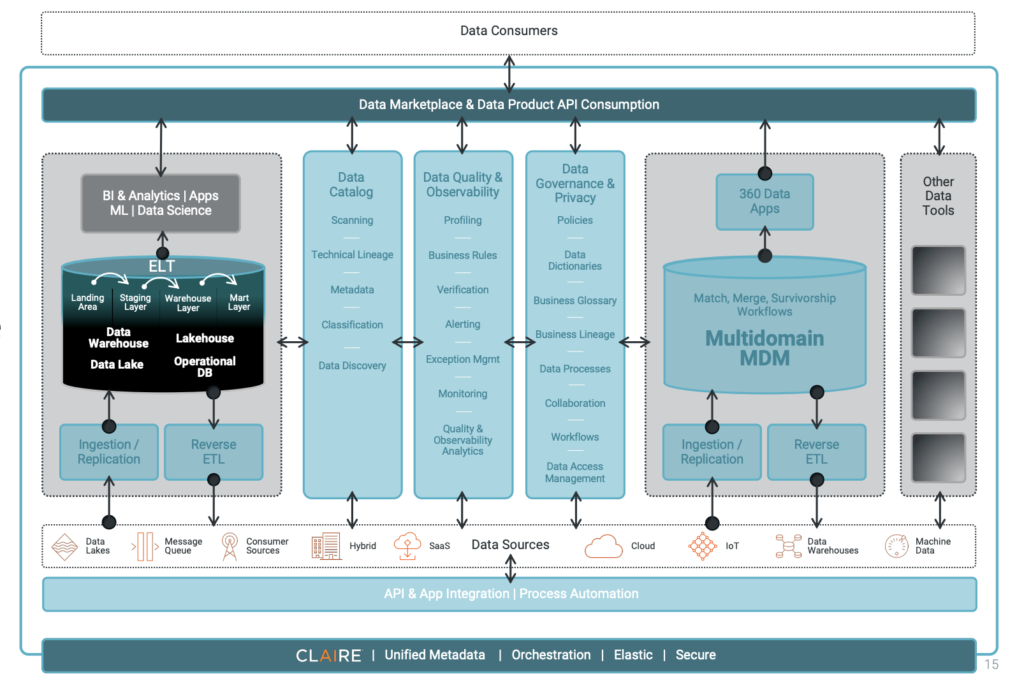

Research Note: Informatica Q4 2023 Earnings

Informatica, a prominent player in the enterprise cloud data management sector, this week announced its financial results for the fourth quarter, showcasing significant strides in its cloud-first strategy.

This Research Note delves into the key financial achievements and strategic milestones that Informatica has attained amidst a rapidly evolving digital landscape.

Research Note: Cisco FQ2 2024 Earnings

Cisco Systems, Inc., this week announced its financial results for the second quarter of its fiscal year 2024.