Explainer: What Does It Mean to “Support Apache Iceberg” in a Storage System

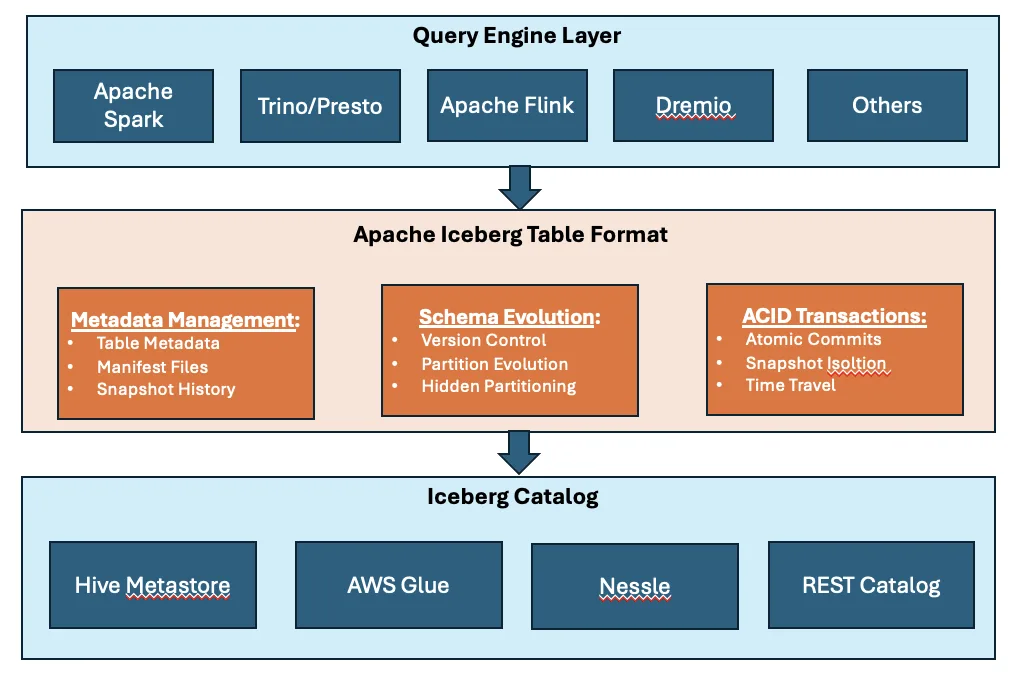

Over the past several years, “Apache Iceberg support” has quietly become table stakes in modern data infrastructure conversations. Storage vendors list it in press releases, lakehouse platforms lead with it, and architects assume it.

“Chips” is now the “C” in CES

While the focus of CES 2026 remained on consumer electronics, this year felt different: more expansive, with the semiconductor industry dominating the pre-show through overlapping announcements that reveal diverging strategies for AI workload acceleration, manufacturing sovereignty, and market expansion beyond traditional computing segments.

Research Note: VAST’s Novel Approach to NVIDIA’s New Inference Context Memory Storage Platform

VAST Data announced support for NVIDIA’s recently unveiled Inference Context Memory Storage (ICMS) Platform, targeting the NVIDIA Rubin GPU architecture. The announcement addresses the challenge of managing KV cache data that exceeds GPU and CPU memory capacity as context windows scale to millions of tokens across multi-turn, agentic AI workflows.

Research Note: Improving Inference with NVIDIA’s Inference Context Memory Storage Platform

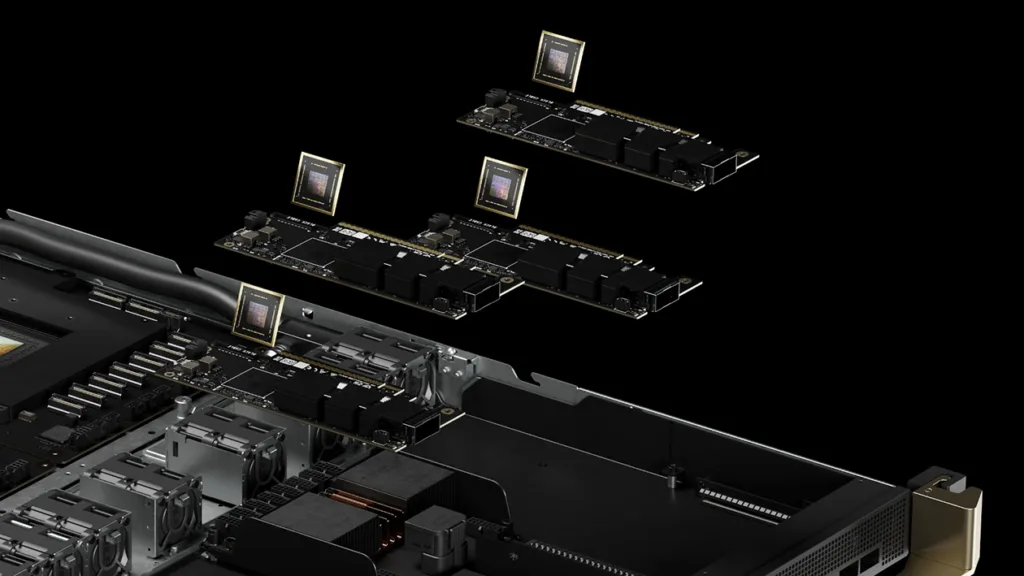

At NVIDIA Live at CES 2026, NVIDIA introduced its Inference Context Memory Storage (ICMS) platform as part of its Rubin AI infrastructure architecture. NVIDIA’s ICMS addresses KV cache scaling challenges in LLM inference workloads.

The technology targets a specific gap in existing memory hierarchies where GPU high-bandwidth memory proves too limited for growing context requirements while general-purpose network storage introduces latency and power consumption penalties that degrade inference efficiency.

NVIDIA at CES: When the Compute Stack Outgrew the Showroom

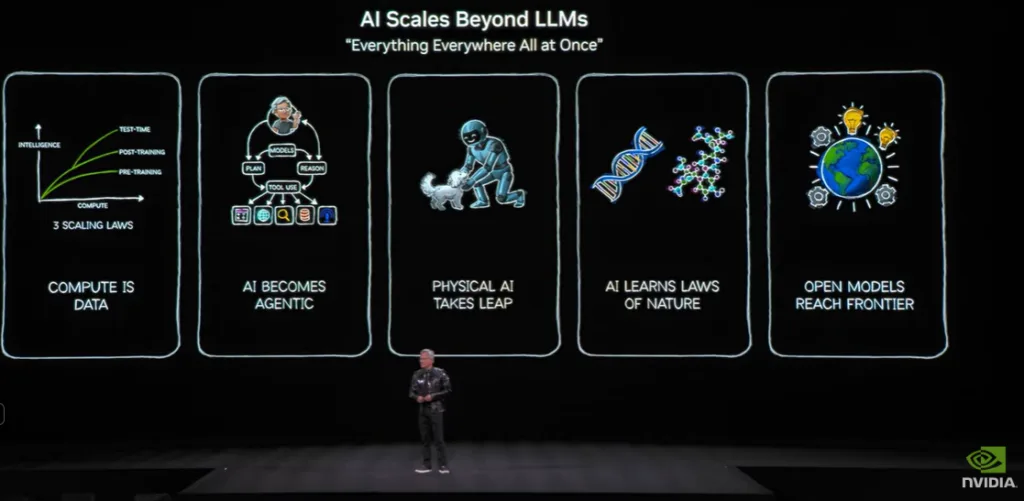

Jensen used the stage to argue that the world is shifting from CPU‑centric computing to AI‑driven, GPU‑first platforms, and the shift isn’t just for hyperscalers.

Research Note: Dynatrace & Google Cloud Collaborate on Observability for Agentic AI

Dynatrace and Google Cloud have expanded their collaboration to provide observability capabilities for agentic AI workloads through two primary integrations: a Gemini CLI extension for developer access to observability data within terminal environments, and an A2A protocol integration with Gemini Enterprise for real-time system monitoring.

The Ecosystem Takes Center Stage at AWS re:Invent 2025

AWS re:Invent 2025 has become an industry show, with this year’s event showcasing a partner ecosystem focused on agentic AI integration and unified observability. The conference featured major announcements from enterprise ISVs, security platforms, and enterprise software providers, all positioning their technologies to work seamlessly with AWS’s new AI capabilities.

Research Note: IBM to Acquire Confluent for Real-Time Event Streaming

IBM has entered a definitive agreement to acquire Confluent for $11 billion in cash ($31 per share), adding enterprise-grade Apache Kafka streaming infrastructure to its hybrid cloud and AI portfolio. Confluent brings 6,500 enterprise customers, proven streaming architecture handling real-time data flows across hybrid environments, and capabilities specifically relevant to emerging agentic AI requirements. The […]

Research Note: Marvell to Acquire Silicon Photonics Player Celestial AI

Marvell Technology recently announced a definitive agreement to acquire Celestial AI for $3.25 billion in upfront consideration ($1 billion cash plus $2.25 billion in stock), with potential earnout payments of up to an additional $2.25 billion based on revenue milestones through fiscal 2029.

Research Note: AWS S3 AI-Focused Enhancements

AWS announced several enhancements to its S3 storage platform at its recent re:Invent 2025, strengthening its object storage capabilities for adjacent markets, including vector databases, enterprise file systems, and enterprise data lakes.

The announcements include the general availability of S3 Vectors with substantially increased scale limits, new S3 integration with FSx for NetApp ONTAP file systems, cost-optimization features for S3 Tables, and expanded performance monitoring through S3 Storage Lens.

Research Note: AWS Releases Trainium3, Teases Trainium4

At its recent AWS re:Invent event, AWS moved its custom AI accelerator strategy into a new phase with the general availability of EC2 Trn3 UltraServers based on the Trainium3 chip and the public preview of its next-generation Trainium4.

Research Note: HPE Announcements at Discover Barcelona 2025

At HPE Discover Barcelona 2025, Hewlett Packard Enterprise centered its announcements on three core pillars of enterprise infrastructure: networking for AI workloads, hybrid cloud and virtualization enhancements, and AI infrastructure systems at scale.

Research Note: VAST Data Expands Cloud Presence with Microsoft Azure and Google Cloud Integrations

This month VAST Data announced integrations with both Microsoft Azure and Google Cloud, significantly extending its hyperconverged approach to AI storage, which it calls its “AI Operating System,” further into major public cloud environments.

Azure customers will gain access to VAST’s complete data services suite running on Azure infrastructure, while Google Cloud users receive the first fully managed VAST AI OS service. Both integrations emphasize eliminating data migration barriers and supporting agentic AI workloads.

SC25: Beyond Supercomputing

Supercomputing 2025 delivered a clear message to enterprise IT leaders: the infrastructure conversation has fundamentally changed. The announcements from SC25 were about architectural transformation.

From rack-scale designs to quantum integration to facility-level engineering, the building blocks of large-scale AI and HPC systems are being reimagined.

Research Note: VAST Data Integrates with Google Cloud & Microsoft Azure

Over the past month, VAST Data has announced partnerships with both Google Cloud and Microsoft Azure to deliver its hyperconverged approach to AI data management (which it calls an “AI Operating System”) as a managed service in public cloud environments.

Research Note: Dell Adds 20+ Features to its AI Factory

Dell Technologies announced more than 20 updates to its AI Factory portfolio ahead of next week’s SC25 event, spanning compute, storage, networking, and cooling infrastructure. The announcements center on three primary themes: expanded support for NVIDIA Blackwell GPUs across multiple server platforms, introduction of AMD MI355X-based systems, and deeper integration of automation tools across the infrastructure stack.

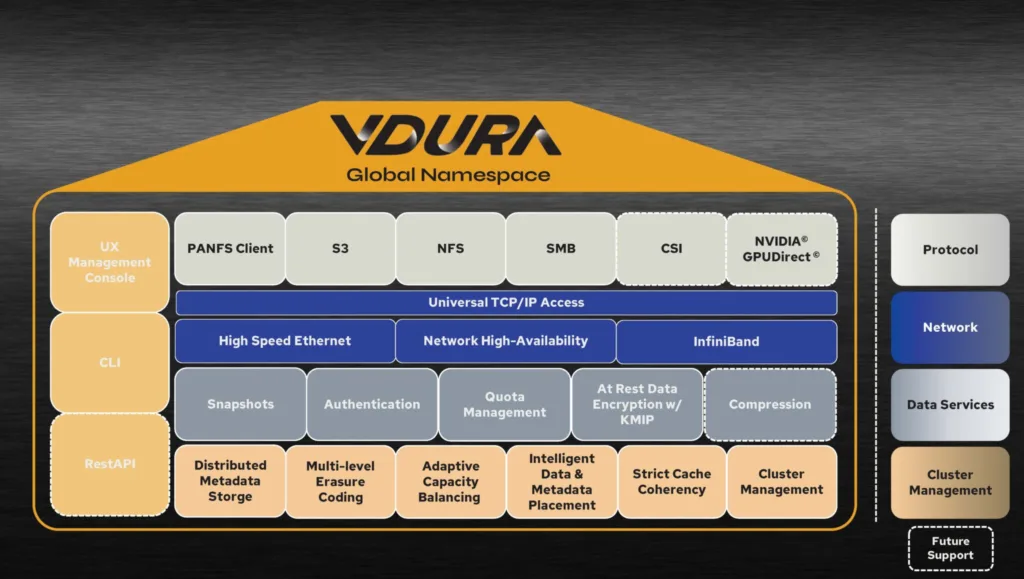

Research Note: VDURA Data Platform v12

VDURA recently announced Version 12 of its VDURA Data Platform (VDP), formerly known as PanFS, introducing three primary architectural enhancements to its parallel file system: an elastic Metadata Engine for distributed metadata processing, system-wide snapshot capabilities, and native support for SMR disk drives.

Quick Take: Qualcomm’s Diversification Strategy is Paying Off

Qualcomm’s fiscal Q4 2025 results tell a story that extends far beyond the smartphone chips that built the company. While the guidance-busting earnings numbers ($11.3 billion in revenue and $3.00 EPS) are impressive, they’re not the full story.

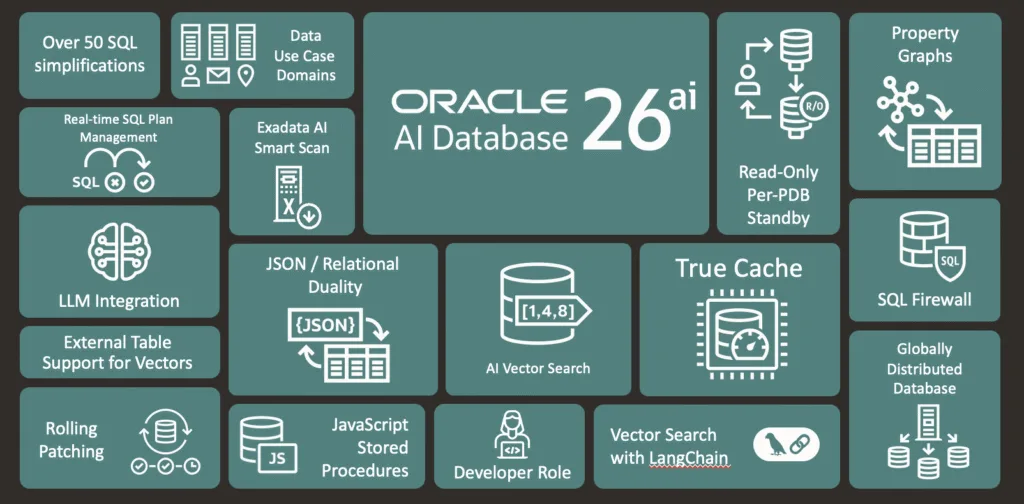

Research Brief: Oracle Database 26ai Delivers Fully Integrated AI

Oracle released Oracle Database 26ai at its recent AI World event in Las Vegas. The update turns the database into an integrated platform that embeds artificial intelligence capabilities across data management, development, and analytics operations.

The platform integrates vector search capabilities with traditional database functions, supports agentic AI workflows through in-database tools and MCP servers, and extends analytics capabilities through Iceberg table format support. Oracle also bundles in advanced AI features, including AI Vector Search, at no additional cost.

Research Brief: Cisco Partner Summit Infrastructure Announcements

At its 2025 Partner Summit in San Diego this week, Cisco announced three interconnected infrastructure initiatives to address the operational, connectivity, and edge-computing requirements of AI workloads.

The announcements span network management simplification through AgenticOps workflows, GPU-as-a-Service connectivity via SD-WAN integration with Megaport AI Exchange, and a new converged edge computing platform called Unified Edge.

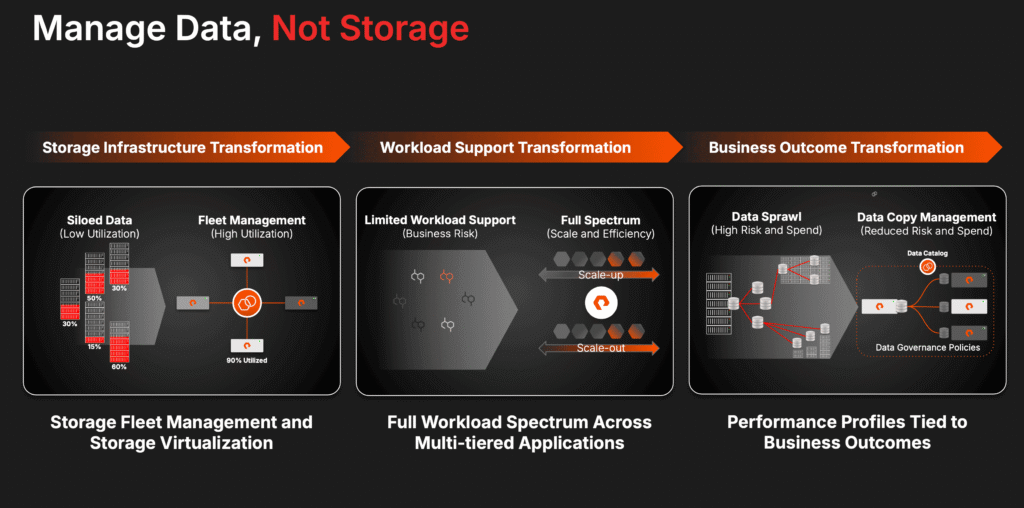

Research Report: Addressing Enterprise AI Data Challenges At Scale with the Dell AI Data Platform

This Research Report looks at how the Dell AI Data Platform addresses the challenges of AI-at-Scale with a purpose-built, modular approach that integrates file, block, and object storage into a unified system optimized for AI. Key components include data engines that enable aggregation, in-place querying, and searching across both structured and unstructured data sets, as well as storage engines that provide the necessary performance, scale, and security across on-premises, edge, and cloud environments.

Research Note: Qualcomm Introduces AI200 & AI250 for Data Center Inference

Qualcomm Technologies recently announced two data center inference accelerators, the AI200 and AI250, targeting commercial availability in 2026 and 2027, respectively. The products are Qualcomm’s first strategic push into rack-scale AI inference.

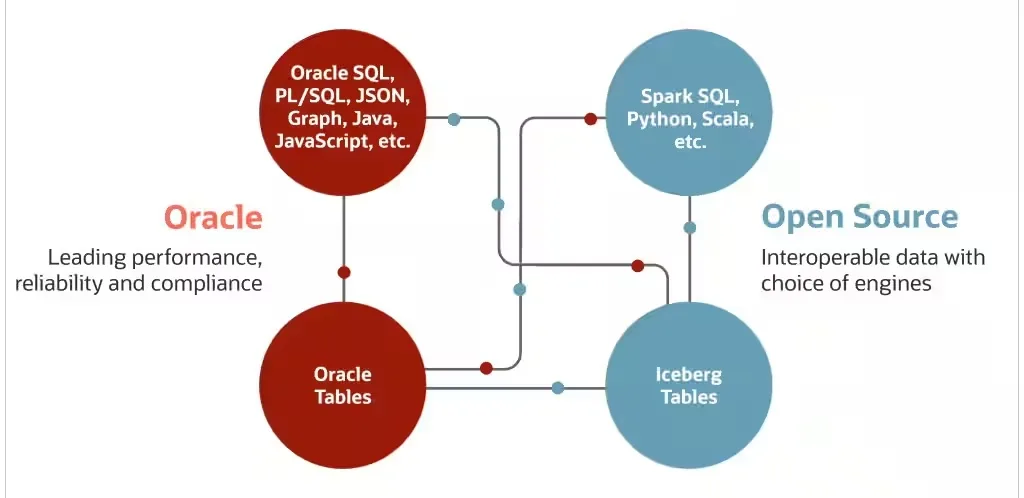

Research Brief: Oracle Autonomous AI Lakehouse

Oracle recently announced Oracle Autonomous AI Lakehouse at its Oracle AI World event in Las Vegas. The new offering combines Oracle’s Autonomous AI Database platform with native Apache Iceberg support. It addresses persistent enterprise challenges around data lock-in and platform interoperability by providing standardized access to data across multiple clouds and catalogs.

Research Note: Red Hat AI 3.0

Red Hat released version 3.0 of its AI platform, introducing production-ready features for distributed inference, expanded hardware support, and foundational components for agentic AI systems.

Key additions include the generally available llm-d project for Kubernetes-native distributed inference, support for IBM Spyre accelerators alongside existing NVIDIA and AMD GPU options, and developer preview features for Llama Stack and MCP integration.

IBM TechXchange 2025: Enterprise AI Comes of Age in Orlando

I’ve just returned from IBM TechXchange 2025 in Orlando, and if there was one overarching message from this year’s event, it was this: the era of AI experimentation is over, and IBM is ready to help enterprises bring it to production environments.

Research Note: IBM Releases Granite Model for Docling Document Processing

IBM recently released Granite-Docling-258M, a specialized vision-language model for document conversion that operates at 258 million parameters under an Apache 2.0 license. The new model is a production-ready iteration of the experimental SmolDocling-256M-preview released earlier this year and incorporates architectural improvements and stability enhancements.

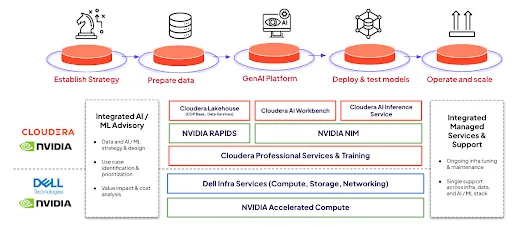

Research Note: Cloudera Adds Support for Dell ObjectScale

Cloudera announced the integration of Dell ObjectScale into its AI-in-a-Box offering, positioning the collaboration as a comprehensive Private AI platform for enterprise-scale deployments.

The new integration allows Cloudera’s compute engines to operate directly against Dell’s object storage infrastructure, creating what the vendors characterize as a validated, integrated data platform.

Research Note: MongoDB Application Modernization Platform (AMP)

At its recent MongoDB.local NYC event, MongoDB launched its Application Modernization Platform (AMP), marking the database vendor’s expansion into full-stack enterprise application transformation services.

The new platform combines AI-powered code transformation tools, proven migration frameworks, and professional services to modernize legacy applications for MongoDB’s Atlas cloud platform.

Research Note: CrowdStrike to Acquire AI Security Firm Pangea

CrowdStrike announced its intent to acquire Pangea Cyber for a reported $260 million, adding specialized AI security capabilities to its expanding agentic security platform.

Pangea targets a critical vulnerability in enterprise AI: protecting AI agents and LLMs from prompt injection attacks and other AI-specific threats.

Research Note: DXC Technology and 7AI Partner on Agentic Security Operations

DXC Technology announced earlier this month at Black Hat that it has partnered with cybersecurity startup 7AI to launch the DXC Agentic Security Operations Center (SOC) for delivering managed security services.

The collaboration integrates 7AI’s autonomous AI agents into DXC’s global security operations workflow, covering alert ingestion, investigation, and remediation processes.

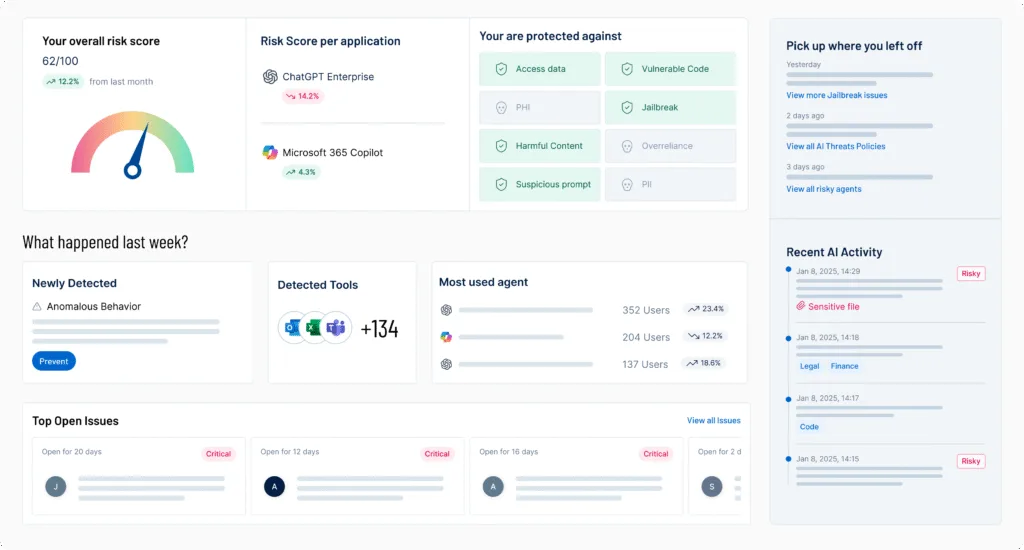

Research Note: Tenable Brings Exposure Management to Enterprise AI

Tenable recently expanded its Tenable One exposure management platform with AI Exposure, a comprehensive solution designed to address generative AI security risks in enterprise environments. Announced at Black Hat USA 2025, the platform addresses the growing visibility gap as organizations rapidly adopt AI tools, such as ChatGPT Enterprise and Microsoft Copilot.

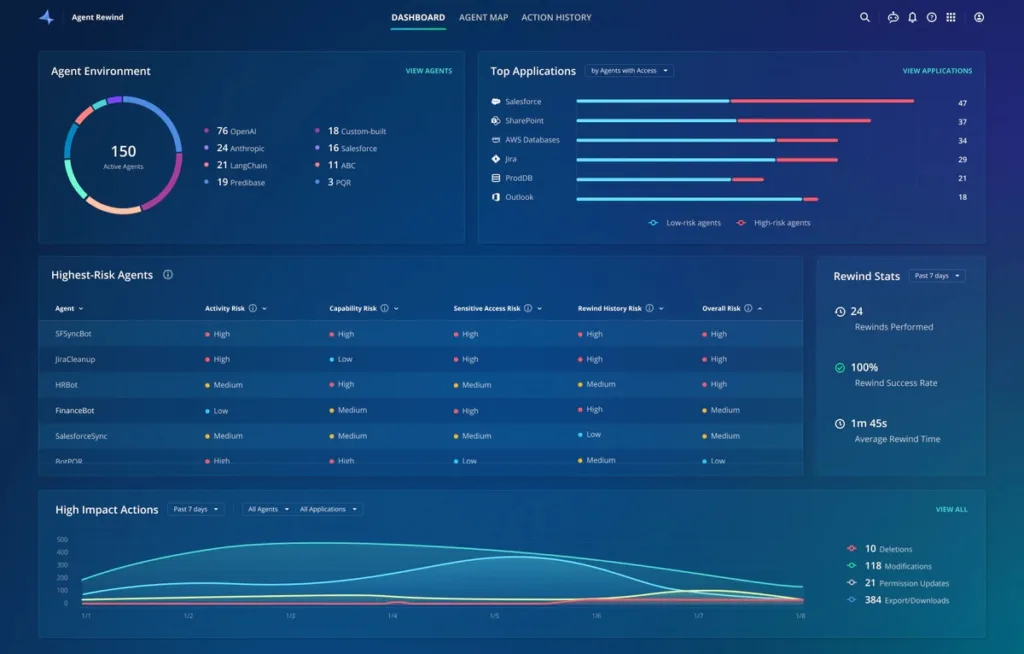

Research Note: Rubrik Safeguards Agentic Workflows with Agent Rewind

Rubrik recently introduced Agent Rewind, a solution targeting the emerging challenge of AI agent error recovery. The new offering, powered by technology from Rubrik’s acquisition of AI infrastructure startup Predibase earlier this year, provides visibility, audit trails, and rollback capabilities for actions taken by autonomous AI agents across enterprise systems.

Research Note: SentinelOne to Acquire Prompt Security

SentinelOne recently announced a definitive agreement to acquire Prompt Security for an estimated $250-300 million, more than 10x the startup’s total funding. The transaction, expected to close by November 2025, will allow SentinelOne to address enterprise AI governance risks through real-time monitoring and control of generative AI tool usage.

Research Note: AWS Open Sources MCP Server for Apache Spark History Server

AWS recently announced the open-source release of Spark History Server MCP, a specialized implementation of the Model Context Protocol (MCP). This server enables AI agents to directly access and analyze historical execution data from Apache Spark workloads through a standardized, structured interface.

Research Note: AGNTCY Moves to Linux Foundation

AGNTCY delivers foundational infrastructure for the “Internet of Agents”, enabling AI agents from different frameworks, vendors, and deployment environments to discover each other, establish identity, communicate securely, and share runtime observability data.

Broadcom’s Tomahawk Ultra: Challenging NVIDIA’s Dominance in AI Networking

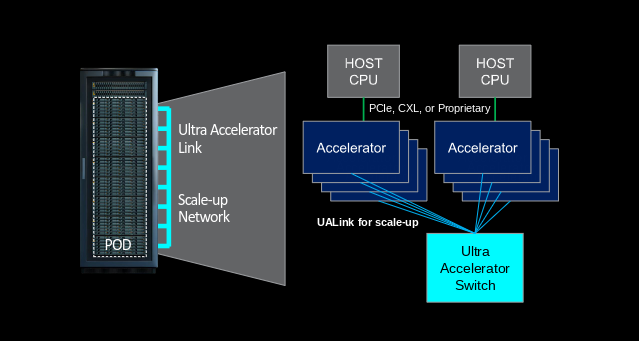

The significance of this battle lies in “scale-up” computing – the technique of ensuring closely located chips can communicate at lightning speed.

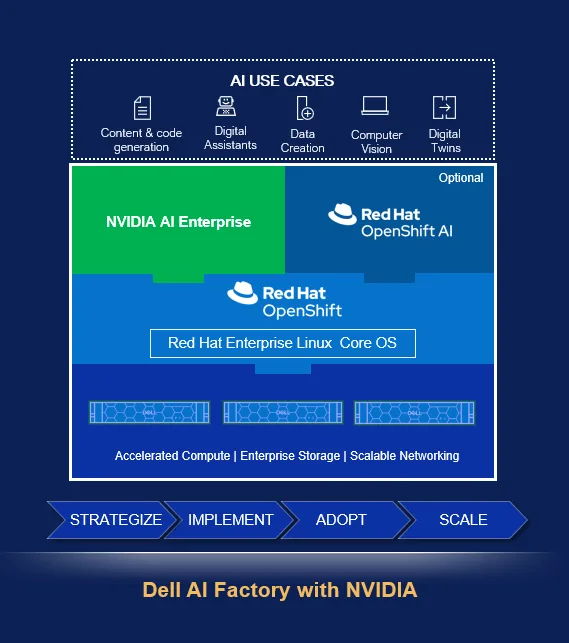

Research Note: Red Hat OpenShift on Dell AI Factory with NVIDIA

Dell Technologies recently announced the general availability of Red Hat OpenShift integrated with its Dell AI Factory with NVIDIA platform. The solution was previewed earlier this year at Dell Technologies World. The updated solution combines Dell PowerEdge infrastructure, NVIDIA GPU acceleration, Red Hat container orchestration, and NVIDIA AI Enterprise software into a validated stack.

Open Flash Platform Initiative Aims to Redefine Flash Storage for AI

Six founding members recently launched the Open Flash Platform (OFP) initiative: Hammerspace, the Linux Foundation, Los Alamos National Laboratory (LANL), ScaleFlux, SK hynix, and Xsight Labs.

The initiative purports to address the challenges presented by the next wave of AI-driven data storage, specifically the limitations of power, heat, and data center space.

Research Note: AWS Enhances S3 for Enterprise AI

At its recent AWS Summit in NYC, Amazon Web Services introduced two significant enhancements to its S3 object storage service: S3 Vectors for cost-effective vector data storage and expanded S3 Metadata capabilities for comprehensive object visibility.

Research Note: Enterprise AI Agents Take Center Stage at AWS Summit NYC 2025

AWS recently concluded its New York City Summit with a clear message: the future of enterprise software is agentic AI, and Amazon aims to own the infrastructure that enables it.

Research Note: Oracle Introduces MCP Server for Oracle Database

Oracle this week introduced its new MCP Server for Oracle Database, which uses Anthropic’s Model Context Protocol to let AI assistants interact directly with the database platform. The new capability transforms AI-database workflows (from code generation to execution), allowing large language models to connect, query, and analyze Oracle databases while maintaining existing security frameworks.

With MCP Server for Oracle Database, Oracle becomes the first major database vendor to implement MCP, continuing its aggressive approach to quickly bringing MCP capabilities to its database customers.

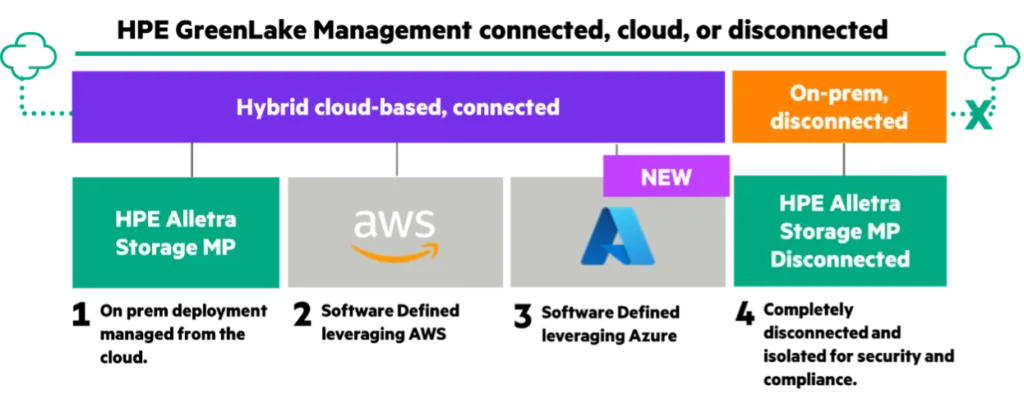

Research Note: HPE’s Updated AI Factory

At its recent Discover event, HPE announced an expansion of its NVIDIA-based AI Computing portfolio with three distinct AI factory configurations targeting enterprise, service provider, and sovereign deployment scenarios. The offerings center on the upgraded HPE Private Cloud AI platform, which integrates NVIDIA Blackwell GPUs with HPE ProLiant Gen12 servers, custom storage solutions, and orchestration software.

HPE Discover 2025: The AI Evolution Takes Center Stage (and Everywhere Else) Day 1 Wrap Up

Las Vegas, Nevada – Antonio Neri took the stage at HPE Discover 2025’s opening day keynote, captivating a massive audience at the iconic Sphere in Las Vegas. Thousands of excited attendees filled the unique venue, buzzing with anticipation to hear HPE’s vision and groundbreaking announcements for the future of AI, hybrid cloud, and networking. The […]

Research Note: Pure Storage’s New Enterprise Data Cloud

At its recent Pure //Accelerate event, Pure Storage introduced its new Enterprise Data Cloud (EDC) architecture, positioning the concept as a unified data management platform that consolidates storage operations across hybrid environments.

The EDC centers on Pure Fusion, the company’s storage orchestration layer, with new automation capabilities, including workload presets, workflow orchestration, and enhanced security integrations.

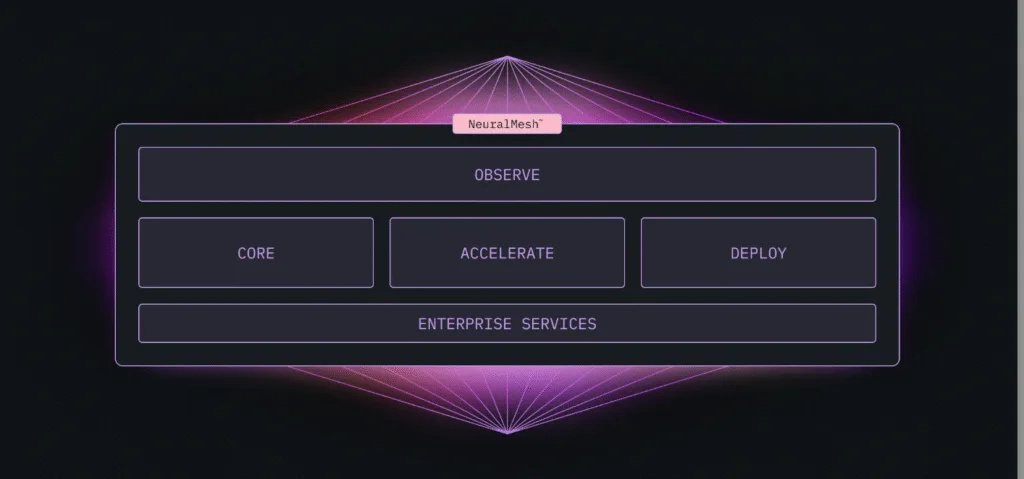

Research Brief: WEKA NeuralMesh, a Microservices-Based AI Data Architecture

WEKA recently announced NeuralMesh, its software-defined, microservices-based storage platform engineered for high-performance, distributed AI workloads. NeuralMesh introduces a dynamic mesh architecture that departs from traditional monolithic or appliance-bound storage systems.

It addresses the requirements of emerging AI applications, including agentic AI, large-scale inference, and token warehouse infrastructure, by delivering consistent microsecond-level latency, fault isolation, and scale-out performance across hybrid environments.

Research Note: AMD Raises its Game at its Advancing AI 2025 Event

AMD announced a comprehensive portfolio of AI infrastructure solutions at its recent Advancing AI 2025 event, positioning itself as a full-stack competitor to NVIDIA.

The announcements include the immediate availability of MI350 Series GPUs with 4x generational performance improvements, the ROCm 7.0 software platform achieving 3.5x gains in inference, and the AMD Developer Cloud for broader ecosystem access.

AMD also previewed its 2026 “Helios” rack solution, which integrates MI400 GPUs, EPYC “Venice” CPUs, and Pensando “Vulcano” NICs.

Research Note: Cisco Introduces AI Canvas

Earlier this week at Cisco Live San Diego 2025, Cisco unveiled AI Canvas, a groundbreaking Generative UI designed to redefine IT operations. This initiative is a core component of Cisco’s broader AgenticOps strategy, aiming to infuse AI agents directly into network management. AI Canvas targets the increasing complexity of modern IT environments, particularly with the […]

Cisco Live Day 1 Recap

San Diego: the sun’s shining, the breeze is cool, and Cisco Live! 2025 just kicked off, bringing all sorts of cool vibes and, as expected, a boatload of announcements. But here’s the real talk: Cisco has come to play with its AI-powered networking gear.

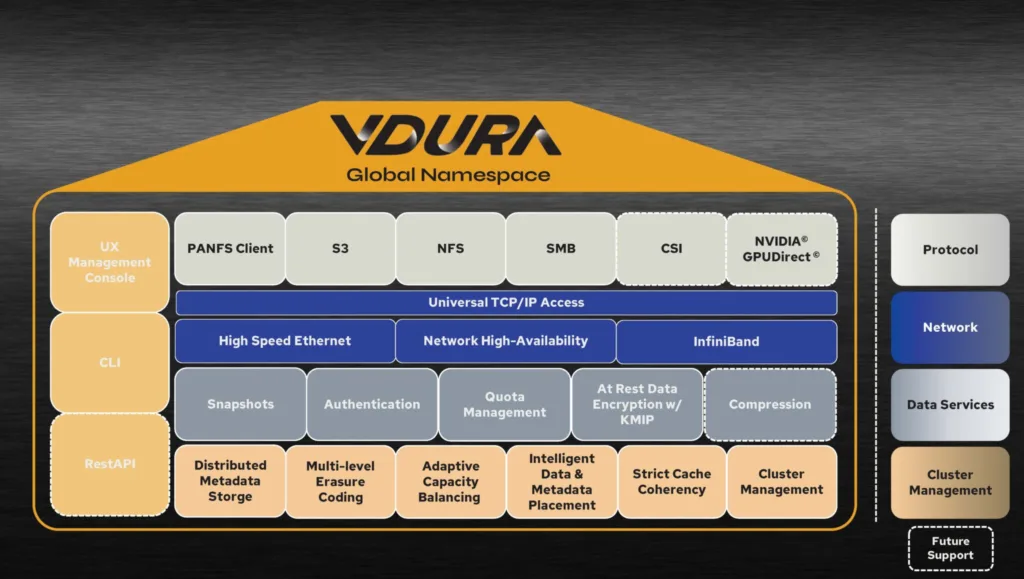

Research Note: VDURA Data Platform 11.2

Earlier this month, VDURA announced Version 11.2 of its Data Platform, featuring native Kubernetes CSI support, end-to-end encryption, and the VDURACare Premier support package.

The release also includes a technology preview of V-ScaleFlow, a data movement capability that VDURA claims will reduce flash requirements by over 50% through intelligent tiering between QLC flash and high-capacity HDDs.

Quick Take: Dell Tech World 2025

Last week I was back in Las Vegas for Dell Technologies World 2025. The company has a broad portfolio but was telling a single-minded story: Dell has a relentless focus on AI, emphasizing the Dell AI Factory and the shift towards on-premises enterprise AI solutions.

Research Note: Dell Updates its Dell AI Factory with NVIDIA

At its annual Dell Technologies World event in Las Vegas, Dell announced significant updates to its AI Factory with NVIDIA, expanding the platform’s hardware capabilities and introducing new managed services.

The updated platform targets enterprises transitioning from AI experimentation to full-scale implementation, particularly for agentic AI and multi-modal applications.

Key updates include new PowerEdge server configurations supporting up to 256 NVIDIA Blackwell Ultra GPUs per rack, enhanced data platform integrations, and comprehensive managed services.

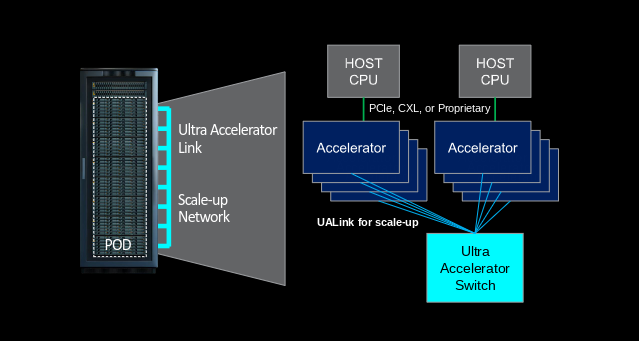

Quick Take: UALink & OCP Join Forces

The Open Compute Project (OCP) Foundation and the Ultra Accelerator Link (UALink) Consortium have announced a strategic collaboration to standardize and deploy high-performance, open scale-up interconnects for next-generation AI and HPC clusters.

Research Note: IBM Orchestrate for Enterprise Agentic AI

At IBM Think 2025 in Boston, IBM announced its new watsonx Orchestrate, reflecting a shift in enterprise AI beyond simple model deployment toward agent orchestration.

The platform enables organizations to build, deploy, and manage AI agents across enterprise environments with minimal technical expertise required.

Research Note: Palo Alto Networks Prisma AIRS for AI Protection

At RSAC 2025, Palo Alto Networks launched its new Prisma AIRS (AI Security), a comprehensive security platform targeting threats across enterprise AI ecosystems. Building upon its “Secure AI by Design” portfolio introduced last year, Prisma AIRS addresses emerging security challenges posed by the proliferation of AI applications, agents, and models.

Quick Take: Palo Alto Networks to Acquire Protect AI

Today, Palo Alto Networks announced that it has entered into a definitive agreement to acquire Protect AI, which secures AI and ML applications. The deal is part of Palo Alto Networks’ broader strategy to expand its cybersecurity portfolio into AI risk management.

Research Note: Veeam’s VeeamON Announcements

At its annual VeeamON 2025 event, Veeam Software announced three significant expansions to its data resilience platform. The updates focus on identity protection, AI integration, and security partnerships.

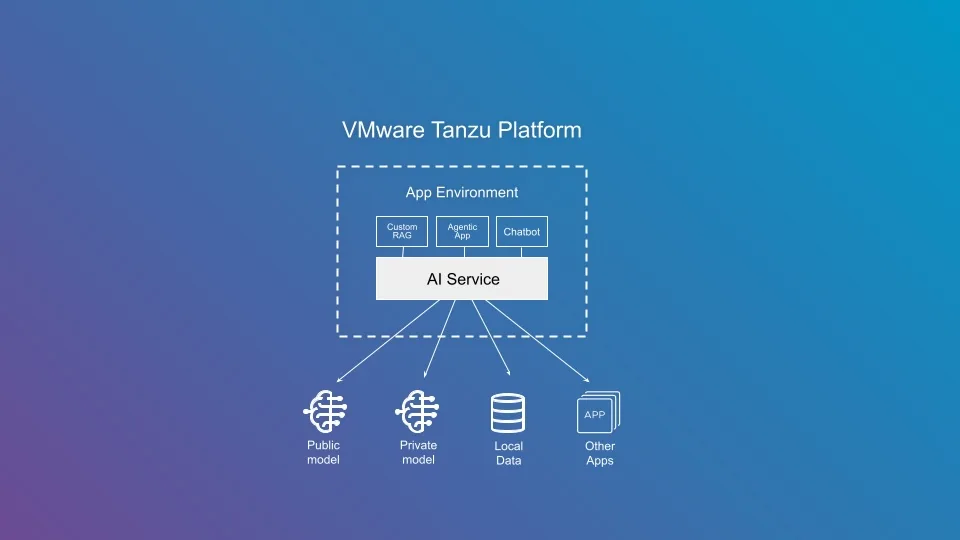

Research Note: VMware Tanzu’s AI Makeover

Broadcom’s VMware is repositioning Tanzu from a Kubernetes-centric application platform to a GenAI-first PaaS. The latest release introduces support for Anthropic’s Model Context Protocol (MCP) for agentic AI, deepens integration with the Claude LLM, and introduces a rearchitected platform focused on private cloud AI workloads.

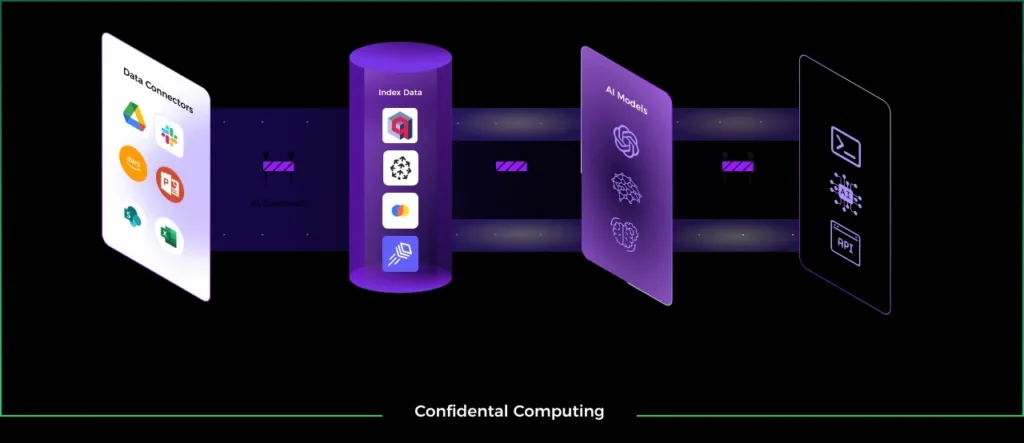

Research Note: Fortanix Armet AI Public Preview

Fortanix recently launched a public preview of Armet AI, a turnkey generative AI platform that integrates confidential computing and enterprise-grade data governance.

Targeted at enterprises managing sensitive or regulated data, Armet AI addresses the challenges of building secure and compliant generative AI systems by combining Intel’s trusted execution environments (SGX, TDX) with fine-grained access control, policy enforcement, and AI-specific security mechanisms.

Research Note: NetApp Updates Google Cloud NetApp Volumes

At the recent Google Cloud Next event, NetApp and Google Cloud announced enhancements to Google Cloud NetApp Volumes, their fully managed file storage service. The updates focus on increasing scalability, performance, and integration capabilities while reducing complexity for enterprise workloads.

Key improvements include throughput increases to 30 GiB/s for the Premium and Extreme service levels, independent scaling of capacity and performance for the Flex service level, integration with Google Cloud’s Vertex AI Platform, and support for Google Cloud Assured Workloads.

Research Note: UALink Consortium Releases UALink 1.0

The UALink Consortium recently released its Ultra Accelerator Link (UALink) 1.0 specification. This industry-backed standard challenges the dominance of NVIDIA’s proprietary NVLink/NVSwitch memory fabric with an open alternative for high-performance accelerator interconnect technology.

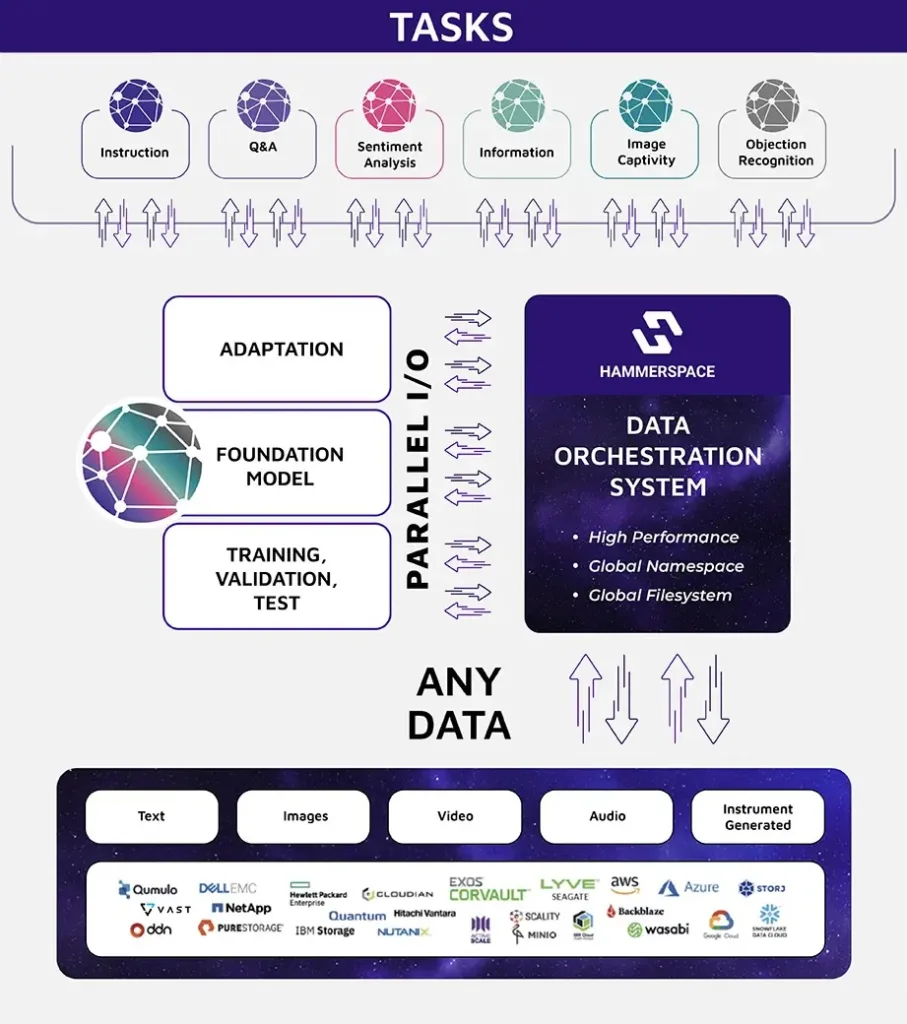

Research Note: Hammerspace $100M Series B to Accelerate AI Infrastructure Growth

Hammerspace, which provides a high-performance data orchestration solution for AI and hybrid cloud environments, announced it has raised $100 million in a Series B funding round.

The round, led by Altimeter Capital and including participation from ARK Invest and other strategic investors, values Hammerspace at more than $500 million.

OFC 2025: Optical Interconnects Take Center Stage in the AI-First Data Center

AI is reshaping the data center, bringing networking along for the ride. It’s clear that optical networking is rapidly moving from a back-end concern to a front-line enabler of next-generation infrastructure.

AI workloads, with their massive datasets, distributed training pipelines, and high-performance compute requirements, demand interconnect solutions that combine extreme bandwidth with low power consumption and low latency. At last month’s OFC 2025 event in San Francisco, this shift was unmistakable.

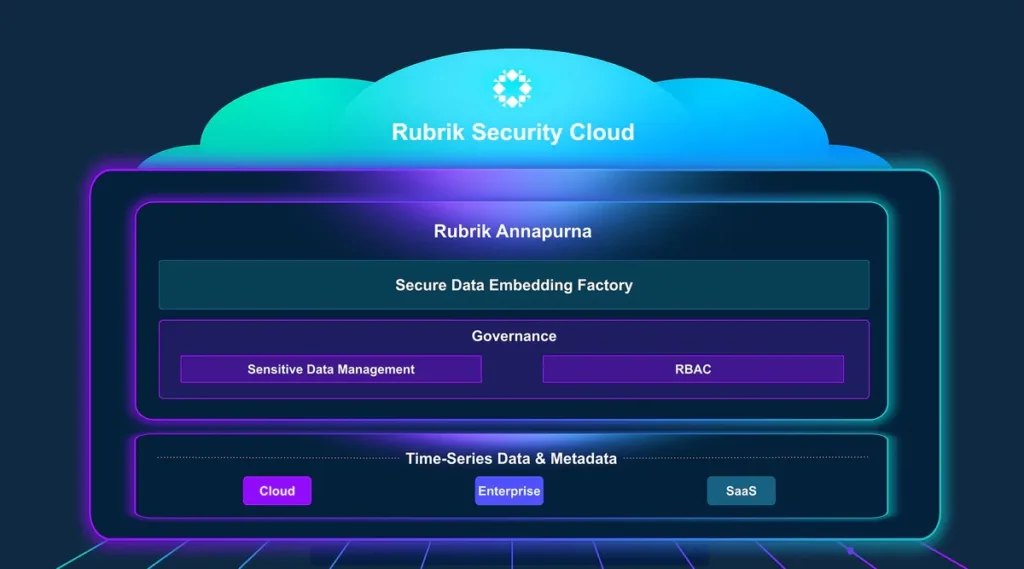

Research Note: Rubrik Expands Annapurna to GCP

At Google Cloud Next 2025, Rubrik announced the expansion of Rubrik Annapurna, its API-driven AI data security and governance platform, to Google Cloud. The announcement sees Rubrik delivering Annapurna as a secure data access layer for AI-driven application development within Google’s Agentspace framework.

Research Note: Anthropic/Databricks Partnership

Anthropic recently announced a new five-year strategic partnership with Databricks to integrate its Claude language models, including the newly released Claude 3.7 Sonnet, into the Databricks Data Intelligence Platform.

The deal, valued at approximately $100 million, will allow Databricks’ enterprise customers to build, deploy, and govern AI agents that operate directly on their proprietary enterprise data. Databricks will offer Claude models natively through its platform across AWS, Microsoft Azure, and Google Cloud.

Research Note: Dell’s Data Protection & Storage Updates

Dell Technologies this week announced a comprehensive set of updates spanning its data protection and storage platforms, including PowerProtect Data Domain, PowerProtect Data Manager, PowerScale, and PowerStore.

Quick Take: Qualcomm Acquires VinAI’s MovianAI Division

Qualcomm last week announced its acquisition of MovianAI, the generative AI division of VinAI, a leading Vietnamese AI research firm within the Vingroup ecosystem. The acquisition will enhance Qualcomm’s generative AI R&D capabilities by bringing VinAI’s deep expertise in AI, machine learning, computer vision, and natural language processing to its already-strong arsenal.

Research Note: Lenovo AI Announcements @ GTC 2025

At NVIDIA GTC 2025, Lenovo showed off its latest Hybrid AI Factory platforms in partnership with NVIDIA, focused on agentic AI.

The Lenovo Hybrid AI Advantage framework integrates a full-stack hardware and software solution, optimized for both private and public AI model deployments, and spans on-prem, edge, and cloud environments.

Research Note: NetApp AI Data Announcements @ GTC 2025

At the recent GTC 2025 event, NetApp announced, in collaboration with NVIDIA, a comprehensive set of product validations, certifications, and architectural enhancements to its intelligent data products.

The announcements include NetApp’s integration with the NVIDIA AI Data Platform, support for NVIDIA’s latest accelerated computing systems, and expanded availability of enterprise-grade AI infrastructure offerings, including NetApp AFF A90 and NetApp AIPod.

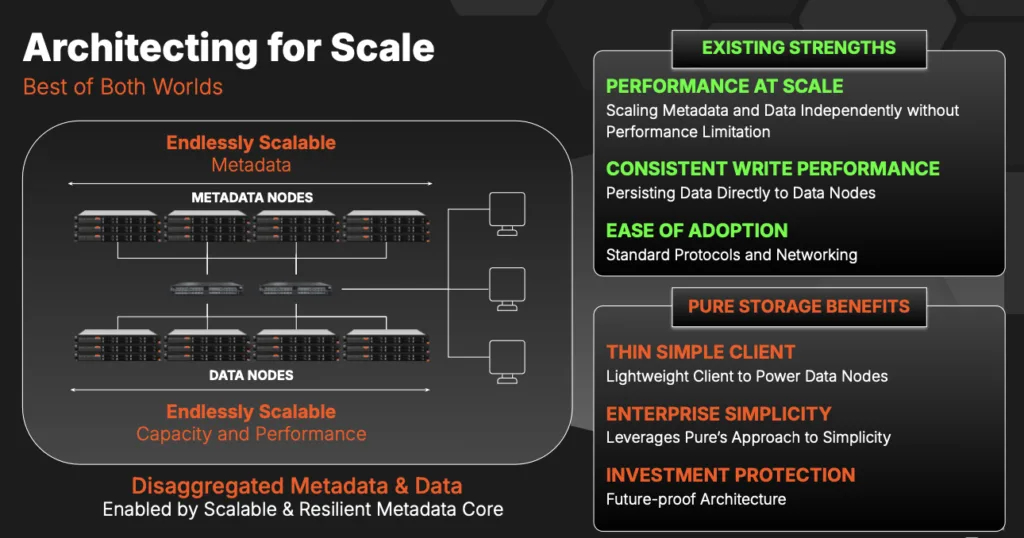

Metadata: The Silent Bottleneck in AI Infrastructure

One of the most impactful but underappreciated architectural changes affecting storage performance for AI is how these solutions manage metadata. Separating metadata processing from data storage unlocks significant gains in performance, scalability, and efficiency across AI workloads. Let’s look at why metadata processing matters for AI.

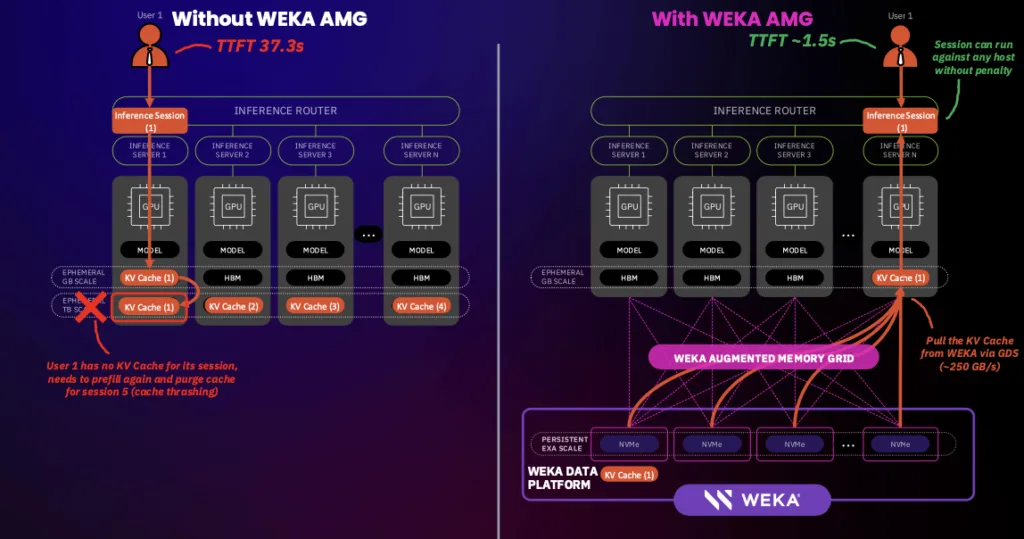

Research Note: WEKA Augmented Memory Grid

At the recent NVIDIA GTC conference, WEKA announced the general availability of its Augmented Memory Grid, a software-defined storage extension engineered to mitigate the limitations of GPU memory during large-scale AI inferencing.

The Augmented Memory Grid is a new approach that integrates with the WEKA Data Platform and leverages NVIDIA Magnum IO GPUDirect Storage (GDS) to bypass CPU bottlenecks and deliver data directly to GPU memory with microsecond latency.

Research Note: IBM Content-Aware Storage for RAG AI Workflows

At the recent NVIDIA GTC event, IBM announced new content-aware capabilities for its Storage Scale platform, expanding its AI infrastructure offerings to support more efficient, semantically rich data access for enterprise AI applications.

Research Note: HPE’s New Full-Stack Enterprise AI Infrastructure Offerings

At the recent NVIDIA GTC 2025, Hewlett Packard Enterprise (HPE) and NVIDIA jointly introduced NVIDIA AI Computing by HPE, a set of full-stack AI infrastructure offerings targeting enterprise deployment of generative, agentic, and physical AI workloads.

The solutions span private cloud AI platforms, observability and management software, reference blueprints, AI development environments, and new AI-optimized servers featuring NVIDIA’s Blackwell architecture.

Liquid Cooling is Front & Center at GTC 2025

One thing was clear at the just-wrapped NVIDIA GTC event: the race to cool the next generation of HPC and AI systems is intensifying.

Let’s take a quick look at some of our favorite announcements.

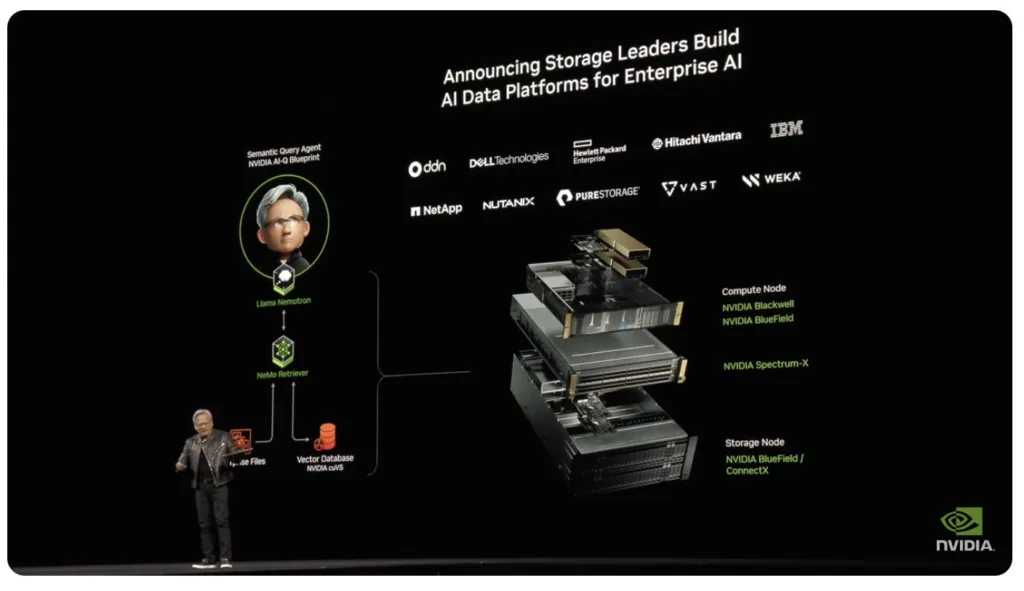

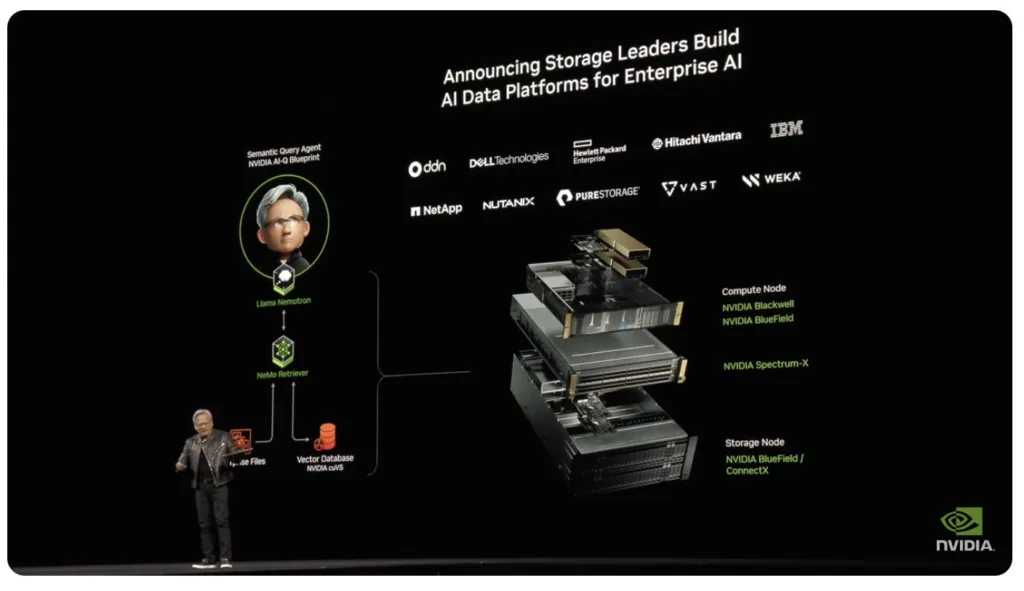

Research Note: NVIDIA AI Storage Certifications & AI Data Platform

At its annual GTC event in San Jose, NVIDIA announced an expansion of its NVIDIA-Certified Systems program to include enterprise storage certification, along with the new NVIDIA AI Data Platform reference design, to help streamline AI factory deployments.

Research Note: HPE Storage Enhancements for AI

At NVIDIA GTC 2025, Hewlett Packard Enterprise (HPE) announced a slew of new storage capabilities, including a new unified data layer. These capabilities are designed to accelerate AI adoption by integrating structured and unstructured data across multi-vendor and multi-cloud environments.

NVIDIA GTC 2025: The Super Bowl of AI

If you thought AI was already moving fast, buckle up: Jensen Huang just threw more fuel on the fire. NVIDIA’s GTC 2025 keynote wasn’t just about new GPUs; it was a full-scale vision of computing’s future, one where AI isn’t just a tool but the foundation of everything.

Let’s look at what Jensen talked about during his keynote, which ran more than two hours.

Research Note: VDURA V5000 All-Flash AI Storage Appliance

VDURA recently announced its new V5000 All-Flash Appliance, a high-performance storage solution engineered for AI and high-performance computing workloads. The system integrates with the VDURA V11 Data Platform for a combination of high throughput, low-latency access, and seamless scalability.

Research Note: Pure Storage FlashBlade//EXA

Pure Storage recently announced the launch of its new FlashBlade//EXA, a high-performance storage platform designed for AI and HPC workloads. FlashBlade//EXA extends the company’s Purity operating environment and DirectFlash technology to provide extreme performance, scalability, and metadata management that addresses the increasing demands of AI-driven applications.

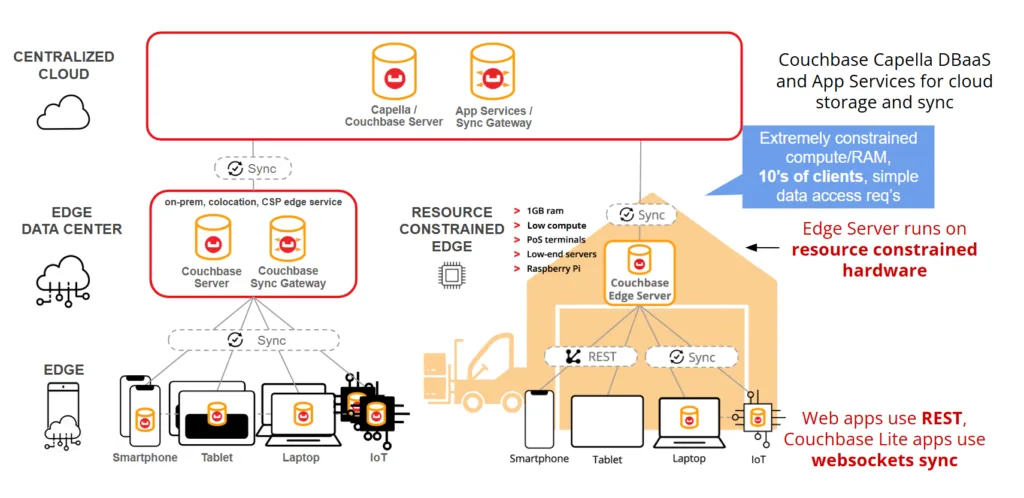

Research Note: Couchbase Edge Server for Offline-First Data Processing

Couchbase recently announced its new Couchbase Edge Server, a lightweight, offline-first database and sync solution designed for edge computing environments. The new solution extends Couchbase’s mobile data synchronization capabilities to resource-constrained deployments where full-scale database solutions are not feasible.

CoreWeave’s Wild Ride Towards IPO

CoreWeave, the AI-focused cloud provider that was early to catch and ride the generative AI boom, is officially gunning for the big leagues. The NVIDIA-backed company has filed for an IPO, looking to capitalize on the insatiable demand for AI compute.

That’s big news for the AI infrastructure world, where CoreWeave has rapidly positioned itself as a major player, taking on the likes of Amazon, Google, and Microsoft.

But before you start picturing ringing bells on Wall Street and champagne toasts, there’s more to the story. A lot more.

Research Note: ServiceNow to Acquire Moveworks

ServiceNow has agreed to acquire Moveworks, an enterprise AI assistant and search technology provider. The cash-and-stock transaction, expected to close in the second half of 2025, is ServiceNow’s largest acquisition.

The acquisition strengthens ServiceNow’s agentic AI strategy and expands its capabilities in AI-driven enterprise automation, employee experience, and search.

Research Note: NVIDIA & Cisco Partner on Spectrum-X

Cisco and NVIDIA announced an expanded partnership to unify AI data center networking by integrating Cisco Silicon One with NVIDIA Spectrum-X.

The companies will create a joint architecture that supports high-performance, low-latency AI workloads across enterprise and cloud environments.

Research Note: IBM to Acquire DataStax

IBM recently announced its intent to acquire DataStax, which specializes in NoSQL and vector database solutions built on Apache Cassandra.

The acquisition aligns with IBM’s broader strategy to enhance its watsonx enterprise AI stack by integrating advanced data management capabilities, particularly for handling unstructured and semi-structured data.

Research Note: IBM Granite 3.2 Models

IBM recently introduced Granite 3.2, adding enhanced reasoning, multimodal capabilities, improved forecasting, and more efficient safety models to its AI model lineup.

Research Note: WEKA & HPE Set SPECstorage Records

WEKA and HPE recently announced record-breaking results across all five benchmark workloads in the SPECstorage Solution 2020 suite. The results were achieved using the WEKA Data Platform on the HPE Alletra Storage Server 4110, powered by Intel Xeon processors.

The results show the system’s ability to handle data-intensive workloads, including AI, genomics, software development, and video analytics, with high efficiency and low latency.

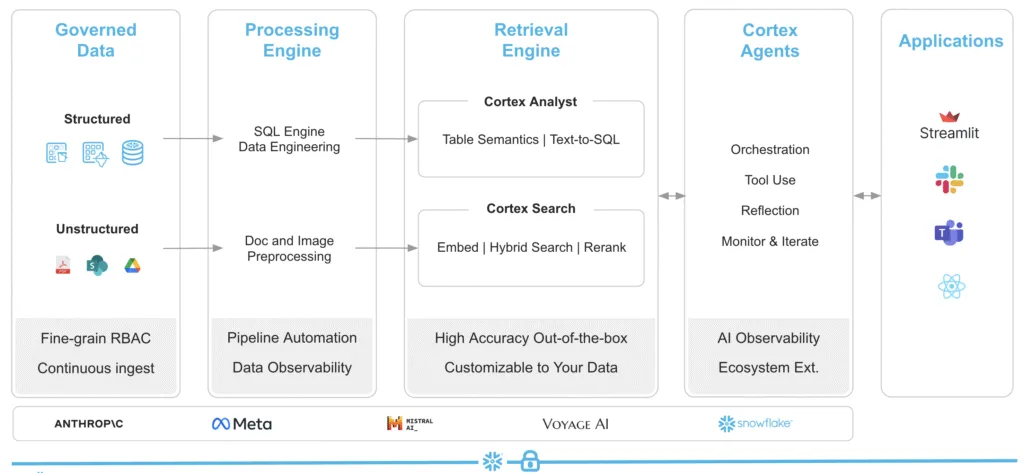

Research Note: Snowflake Deep Integration with Anthropic’s Claude

Snowflake announced that it has integrated Anthropic’s Claude models across its Cortex AI platform, enabling enterprises to query and analyze data using natural language instead of SQL. The partnership targets the fundamental challenge of data democratization, allowing business users to access insights without requiring technical expertise.

The integration spans three core products: Cortex Analyst converts natural language to SQL, Cortex Search retrieves unstructured data, and Cortex Agents orchestrates complex multi-step operations.

Infrastructure News Roundup: January 2025

January isn’t usually a big month for announcements related to enterprise infrastructure, but then this isn’t a normal January. Let’s look at what happened.

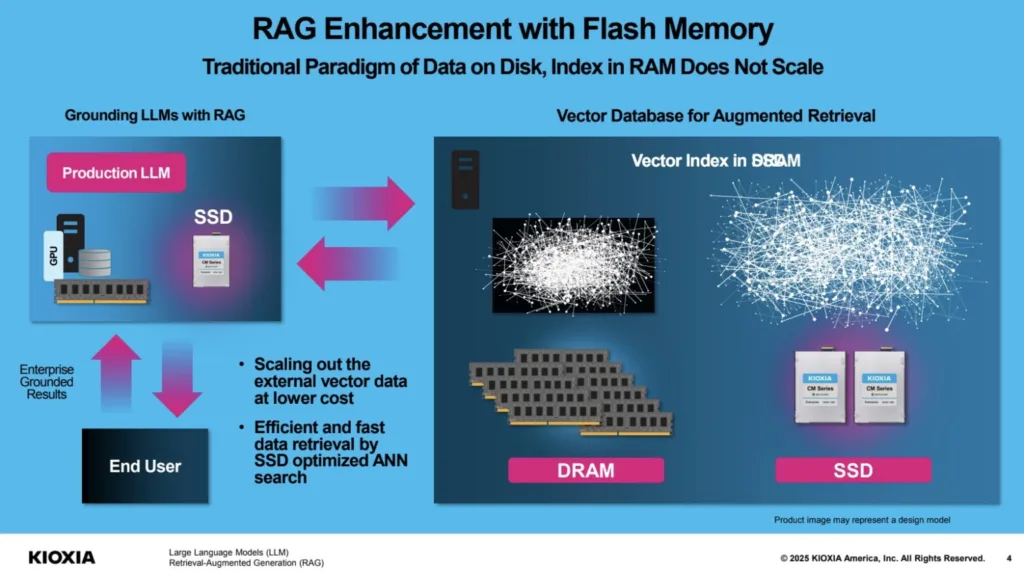

Research Note: Kioxia’s Open Source AiSAQ ANN Search

Today, Kioxia announced the open-source release of All-in-Storage ANNS with Product Quantization (AiSAQ), an approximate nearest neighbor search (ANNS) technology optimized for SSD-based storage. AiSAQ enables large-scale retrieval-augmented generation (RAG) workloads by offloading vector data from DRAM to SSDs, significantly reducing memory requirements.
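To see why product quantization (PQ) shrinks the memory needed for vector search, it helps to run the arithmetic. The sketch below is a generic PQ footprint calculation, not Kioxia’s AiSAQ implementation; the 768-dimension float32 vectors, 96 subspaces, and 256 centroids per subspace are illustrative assumptions. PQ splits each vector into subspaces and stores only a small centroid index per subspace, plus one shared codebook.

```python
import math


def raw_vector_bytes(dim: int, bytes_per_dim: int = 4) -> int:
    """Bytes for one uncompressed vector (float32 by default)."""
    return dim * bytes_per_dim


def pq_code_bytes(num_subspaces: int, centroids_per_subspace: int = 256) -> int:
    """Bytes for one PQ code: one centroid index per subspace."""
    bits_per_index = math.ceil(math.log2(centroids_per_subspace))
    return math.ceil(num_subspaces * bits_per_index / 8)


def pq_codebook_bytes(dim: int, num_subspaces: int,
                      centroids_per_subspace: int = 256,
                      bytes_per_dim: int = 4) -> int:
    """Bytes for the shared codebook (stored once, not per vector)."""
    if dim % num_subspaces != 0:
        raise ValueError("dim must be divisible by num_subspaces")
    sub_dim = dim // num_subspaces
    return num_subspaces * centroids_per_subspace * sub_dim * bytes_per_dim


if __name__ == "__main__":
    n_vectors = 1_000_000_000  # hypothetical corpus of one billion embeddings
    raw = raw_vector_bytes(768) * n_vectors
    codes = pq_code_bytes(96) * n_vectors
    print(f"raw vectors: {raw / 1e12:.3f} TB")
    print(f"PQ codes:    {codes / 1e9:.1f} GB")
    print(f"codebook:    {pq_codebook_bytes(768, 96) / 1e6:.2f} MB")
```

Under these assumptions each vector drops from 3,072 bytes to 96 bytes, a 32x reduction. Even compressed codes for a billion vectors still add up to tens of gigabytes, which is the kind of footprint that motivates moving vector data from DRAM onto SSDs.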

Research Note: DeepSeek’s Impact on IT Infrastructure Market

Chinese AI startup DeepSeek recently introduced an AI model, DeepSeek-R1, which the company claims matches or surpasses leading models from across the industry. The move created significant buzz in the AI industry. Though the claims remain unverified, the potential to democratize AI training and fundamentally alter industry dynamics is clear.

The AI Debate at Davos: Concerns and Controversies Surrounding Stargate

The annual World Economic Forum in Davos is known for sparking high-profile discussions on global challenges, and this year was no exception. Against the backdrop of the recently announced $500 billion Stargate Project (an ambitious AI infrastructure initiative led by OpenAI, SoftBank, and Oracle), prominent figures in artificial intelligence (AI) raised concerns about the future of the technology and its societal implications.

Meta’s AI Ambitions in the Wake of Stargate

As the tech world buzzes about Project Stargate, Meta has quietly, but assertively, announced its own transformative AI initiatives. While the $500 billion Stargate Project promises to build exclusive, centralized AI infrastructure for OpenAI, Meta is charting a different course. With a focus on scalability, accessibility, and open-source innovation, Meta’s AI moves reflect a strategic vision that balances ambition with practicality.

Research Note: The Stargate Project

The Stargate Project, announced at a political event on January 21, 2025, is a joint venture between OpenAI, SoftBank, Oracle, and MGX that will invest up to $500 billion by 2030 to develop AI infrastructure across the United States.

Quick Take: CoreWeave & IBM Partner on NVIDIA Grace Blackwell Supercomputing

IBM and CoreWeave, a leading specialty AI cloud provider, together announced a collaboration to deliver one of the first NVIDIA GB200 Grace Blackwell Superchip-enabled AI supercomputers to market.

Research Note: UALink Consortium Expands Board, Adds Apple, Alibaba Cloud & Synopsys

The Ultra Accelerator Link Consortium (UALink), an industry organization taking a collaborative approach to advance high-speed interconnect standards for next-generation AI workloads, announced an expansion to its Board of Directors, welcoming Alibaba Cloud, Apple, and Synopsys – joining existing member companies like AMD, AWS, Cisco, Google, HPE, Intel, Meta, and Microsoft.

CES 2025: Must-See Tech

Can you believe it? The Consumer Electronics Show, aka CES, is just days away. I know, the timing is hard for all of us considering there hasn’t been much time to recover from our New Year’s festivities. No rest for the weary as we head out to Vegas for the big event.

The show is always full of surprises, so stay tuned next week for lots of announcements to hit the wire. In the meantime, I have a few thoughts to share on what I will be looking for at the show.

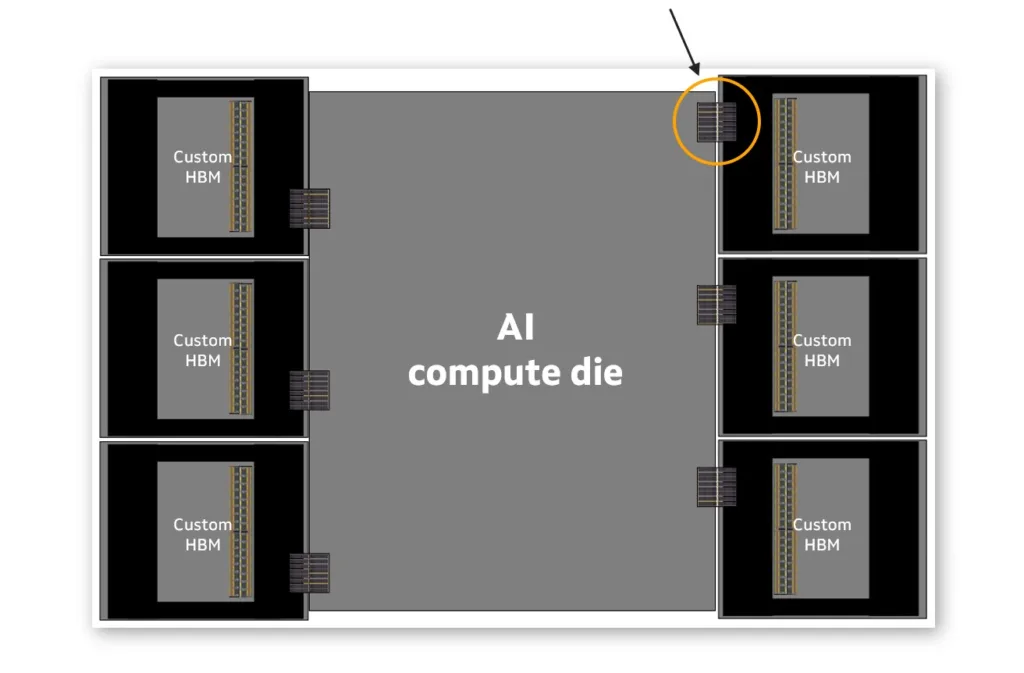

Research Note: Marvell Custom HBM for Cloud AI

Marvell recently announced a new custom high-bandwidth memory (HBM) compute architecture that addresses the scaling challenges of XPUs in AI workloads. The new architecture enables higher compute and memory density, reduced power consumption, and lower TCO for custom XPUs.

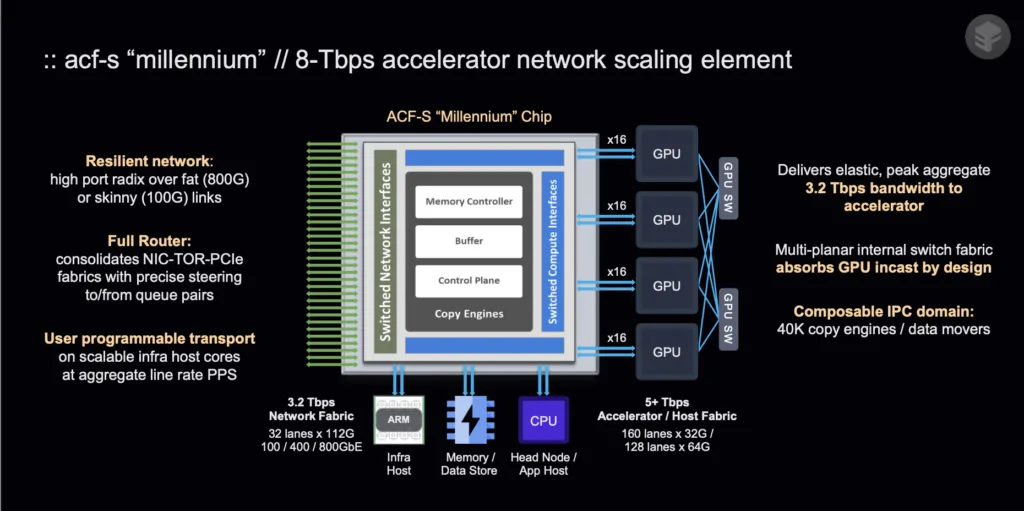

Research Note: Enfabrica ACF-S Millennium

First detailed at Hot Chips 2024, Enfabrica recently announced that its ACF-S “Millennium” chip, which addresses the limitations of traditional networking hardware for AI and accelerated computing workloads, will be available to customers in calendar Q1 2025.

Research Note: Dell AI Products & Services Updates

Dell Technologies has made significant additions to its AI portfolio with its recent announcements at SC24 and Microsoft Ignite 2024 in November. The announcements span infrastructure, ecosystem partnerships, and professional services, targeting accelerated AI adoption, operational efficiency, and sustainability in enterprise environments.

Understanding AI Data Types: The Foundation of Efficient AI Models

AI data types aren’t just a technical detail; they’re a critical factor that affects performance, accuracy, power efficiency, and even the feasibility of deploying AI models.

Understanding the data types used in AI isn’t just for hands-on practitioners: published benchmarks and other performance numbers are often broken out by data type (just look at an NVIDIA GPU data sheet). What does it all mean?
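One concrete way data types matter is memory footprint. The sketch below estimates how much memory a model’s weights alone occupy at common precisions. The 70-billion-parameter model is a hypothetical example, and the estimate deliberately ignores activations, KV cache, optimizer state, and quantization metadata such as scale factors.

```python
# Bytes needed to store a single value in common AI data types.
BYTES_PER_VALUE = {
    "FP32": 4.0,
    "FP16": 2.0,
    "BF16": 2.0,
    "FP8": 1.0,
    "INT8": 1.0,
    "INT4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for model weights only, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_VALUE[dtype] / 1e9


if __name__ == "__main__":
    params = 70e9  # hypothetical 70-billion-parameter model
    for dtype in BYTES_PER_VALUE:
        print(f"{dtype:>5}: {weight_memory_gb(params, dtype):7.1f} GB")
```

Halving the bytes per value halves the weight footprint. Moving such a model from FP32 (about 280 GB) to FP8 (about 70 GB) can be the difference between needing several accelerators and fitting on fewer. The trade-off is numeric range and precision, which is why accuracy has to be validated at each data type.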

Research Note: AWS Trainium2

Trainium is AWS’s machine learning accelerator, and this week at its re:Invent event in Las Vegas, AWS announced the second generation, the cleverly named Trainium2, purpose-built to enhance the training of large-scale AI models, including foundation models and large language models.