Broadcom’s Tomahawk Ultra: Challenging Nvidia’s Dominance in AI Networking

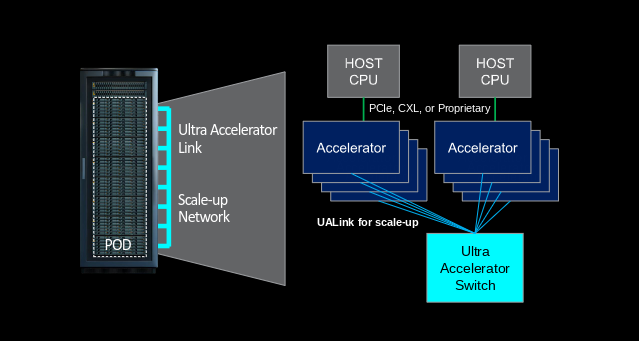

The significance of this battle lies in “scale-up” computing – the technique of ensuring closely located chips can communicate at lightning speed.

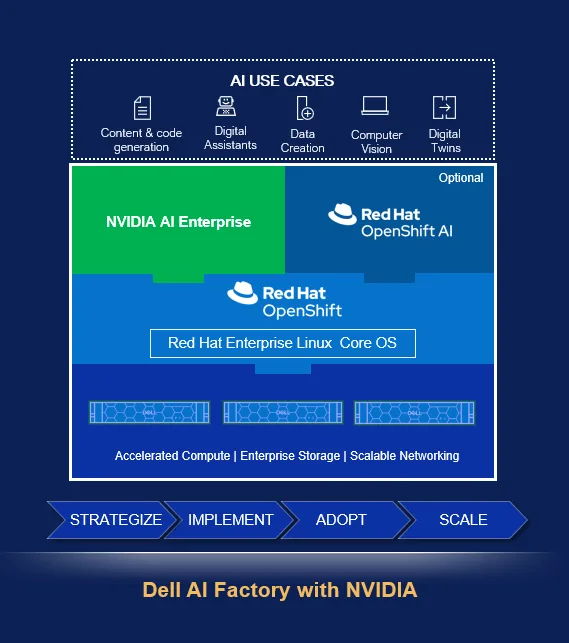

Research Note: Red Hat OpenShift on Dell AI Factory with NVIDIA

Dell Technologies recently announced the general availability of Red Hat OpenShift integrated with its Dell AI Factory with NVIDIA platform. The solution was previewed earlier this year at Dell Technologies World. The updated solution combines Dell PowerEdge infrastructure, NVIDIA GPU acceleration, Red Hat container orchestration, and NVIDIA AI Enterprise software into a validated stack.

Research Note: HPE’s Updated AI Factory

At its recent Discover event, HPE announced an expansion of its NVIDIA-based AI Computing portfolio with three distinct AI factory configurations targeting enterprise, service provider, and sovereign deployment scenarios. The offerings center on the upgraded HPE Private Cloud AI platform, which integrates NVIDIA Blackwell GPUs with HPE ProLiant Gen12 servers, custom storage solutions, and orchestration software.

Research Note: AMD Raises its Game at its Advancing AI 2025 Event

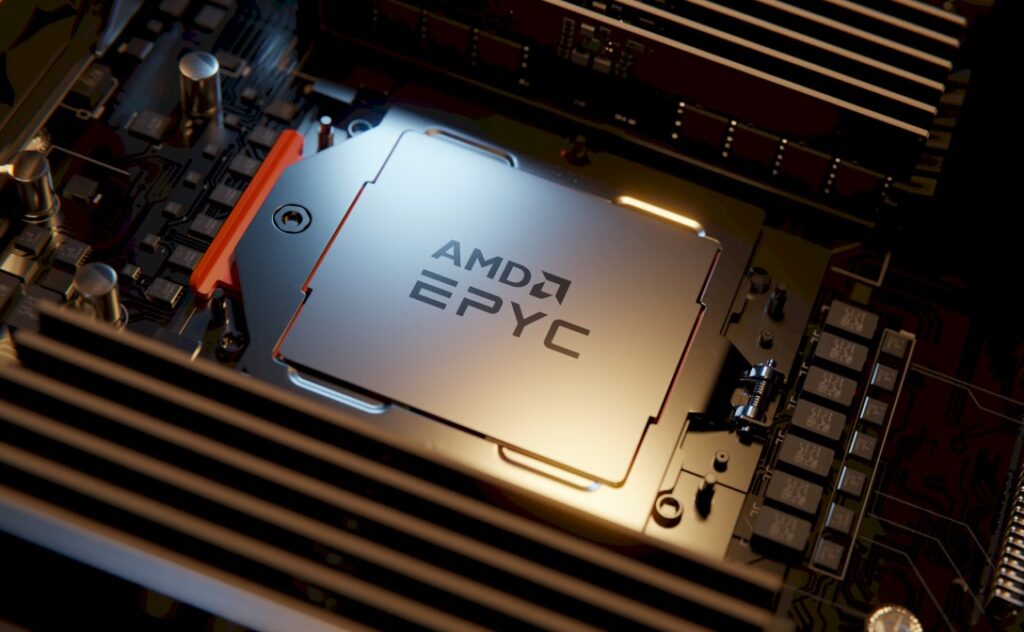

AMD announced a comprehensive portfolio of AI infrastructure solutions at its recent Advancing AI 2025 event, positioning itself as a full-stack competitor to NVIDIA.

The announcements include the immediate availability of MI350 Series GPUs with 4x generational performance improvements, the ROCm 7.0 software platform achieving 3.5x gains in inference, and the AMD Developer Cloud for broader ecosystem access.

AMD also previewed its 2026 “Helios” rack solution, which integrates MI400 GPUs, EPYC “Venice” CPUs, and Pensando “Vulcano” NICs.

Research Note: Dell Updates its Dell AI Factory with NVIDIA

At its annual Dell Technologies World event in Las Vegas, Dell announced significant updates to its AI Factory with NVIDIA, expanding the platform’s hardware capabilities and introducing new managed services.

The updated platform targets enterprises transitioning from AI experimentation to full-scale implementation, particularly for agentic AI and multi-modal applications.

Key updates include new PowerEdge server configurations supporting up to 256 NVIDIA Blackwell Ultra GPUs per rack, enhanced data platform integrations, and comprehensive managed services.

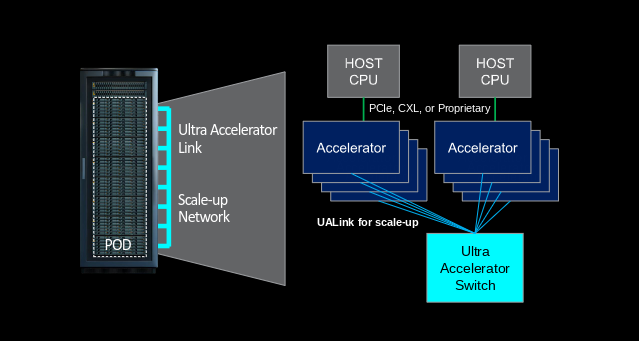

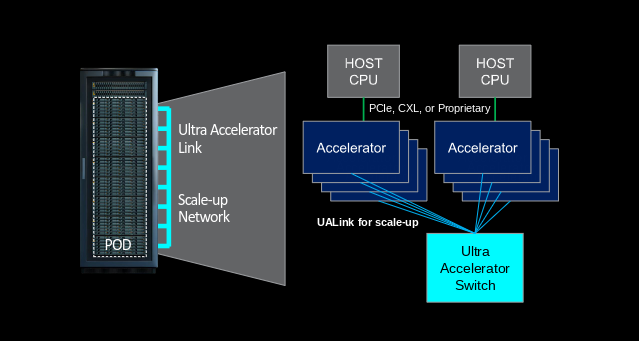

Quick Take: UALink & OCP Join Forces

The Open Compute Project (OCP) Foundation and the Ultra Accelerator Link (UALink) Consortium have announced a strategic collaboration to standardize and deploy high-performance, open scale-up interconnects for next-generation AI and HPC clusters.

NAND Insider Newsletter: Week of April 28, 2025

Each week NAND Research puts out a newsletter for our industry customers taking a look at what’s driving the week, and what happened last week that caught our attention. Below is an excerpt from this week’s edition, April 28, 2025.

NAND Insider Newsletter: April 21, 2025

Each week NAND Research puts out a newsletter for our industry customers taking a look at what’s driving the week, and what happened last week that caught our attention. Below is an excerpt from this week’s edition, April 21, 2025.

Research Note: UALink Consortium Releases UALink 1.0

The UALink Consortium recently released its Ultra Accelerator Link (UALink) 1.0 specification. This industry-backed standard challenges the dominance of NVIDIA’s proprietary NVLink/NVSwitch memory fabric with an open alternative for high-performance accelerator interconnect technology.

NAND Insider Newsletter: April 6, 2025

Each week NAND Research puts out a newsletter for our industry customers taking a look at what’s driving the week, and what happened last week that caught our attention. Below is an excerpt from this week’s edition, April 6, 2025.

NAND Insider Newsletter: March 30, 2025

Every week NAND Research puts out a newsletter for our industry customers taking a look at what’s driving the week, and what happened last week that caught our attention. Below is an excerpt from this week’s edition, March 30, 2025.

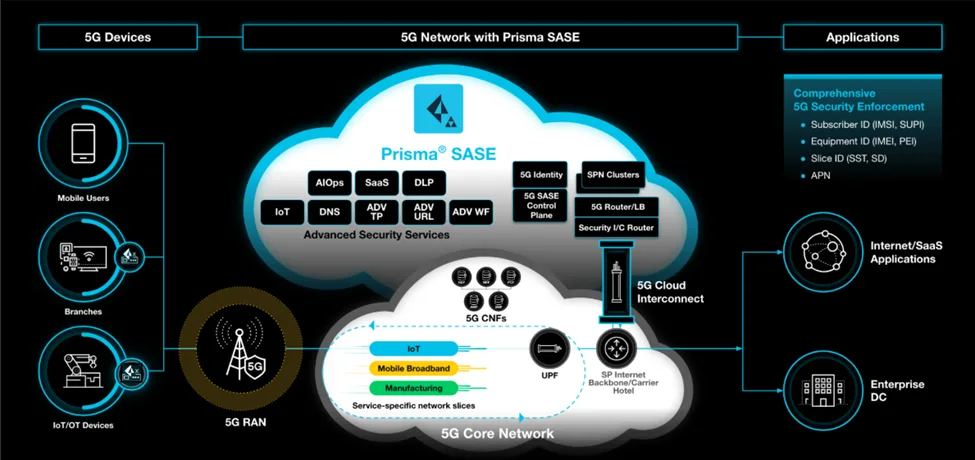

Research Note: Palo Alto Networks Prisma SASE for 5G

Earlier this month at MWC 2025, Palo Alto Networks announced the general availability of Prisma SASE 5G, its new cloud-delivered cybersecurity solution for enterprises leveraging 5G connectivity.

The offering expands the company’s SASE portfolio to provide integrated Zero Trust security for 5G-enabled infrastructure, including mobile users, IoT/OT devices, and SD-WAN endpoints.

Research Note: Lenovo AI Announcements @ GTC 2025

At NVIDIA GTC 2025, Lenovo showed off its latest Hybrid AI Factory platforms in partnership with NVIDIA, focused on agentic AI.

The Lenovo Hybrid AI Advantage framework integrates a full-stack hardware and software solution, optimized for both private and public AI model deployments, and spans on-prem, edge, and cloud environments.

Research Note: NetApp AI Data Announcements @ GTC 2025

At the recent GTC 2025 event, NetApp announced, in collaboration with NVIDIA, a comprehensive set of product validations, certifications, and architectural enhancements to its intelligent data products.

The announcements include NetApp’s integration with the NVIDIA AI Data Platform, support for NVIDIA’s latest accelerated computing systems, and expanded availability of enterprise-grade AI infrastructure offerings, including NetApp AFF A90 and NetApp AIPod.

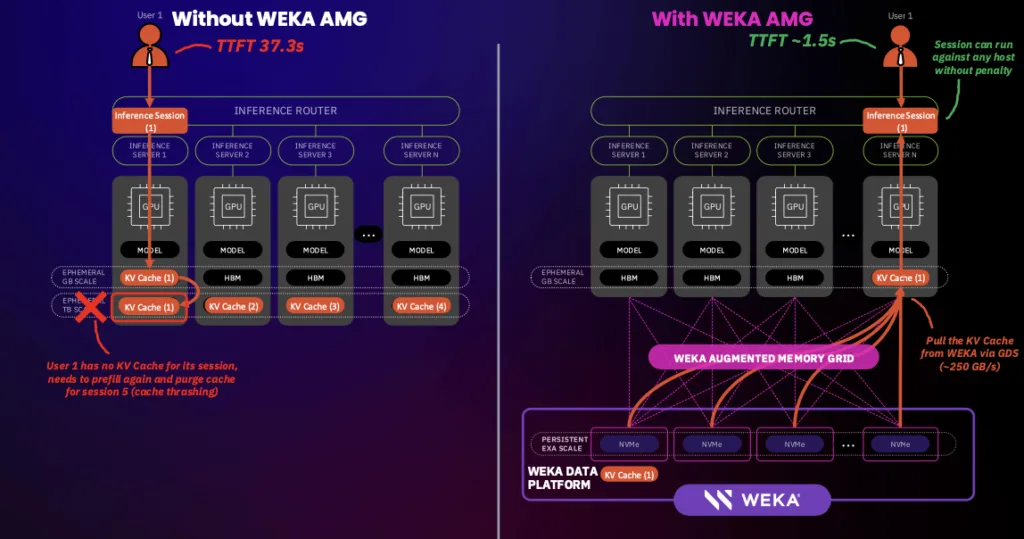

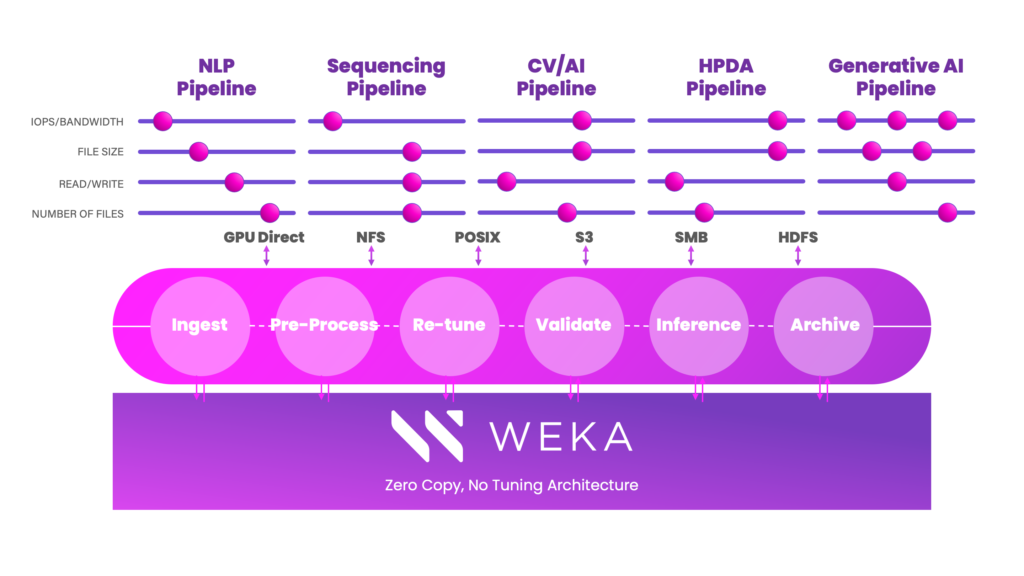

Research Note: WEKA Augmented Memory Grid

At the recent NVIDIA GTC conference, WEKA announced the general availability of its Augmented Memory Grid, a software-defined storage extension engineered to mitigate the limitations of GPU memory during large-scale AI inferencing.

The Augmented Memory Grid is a new approach that integrates with the WEKA Data Platform and leverages NVIDIA Magnum IO GPUDirect Storage (GDS) to bypass CPU bottlenecks and deliver data directly to GPU memory with microsecond latency.

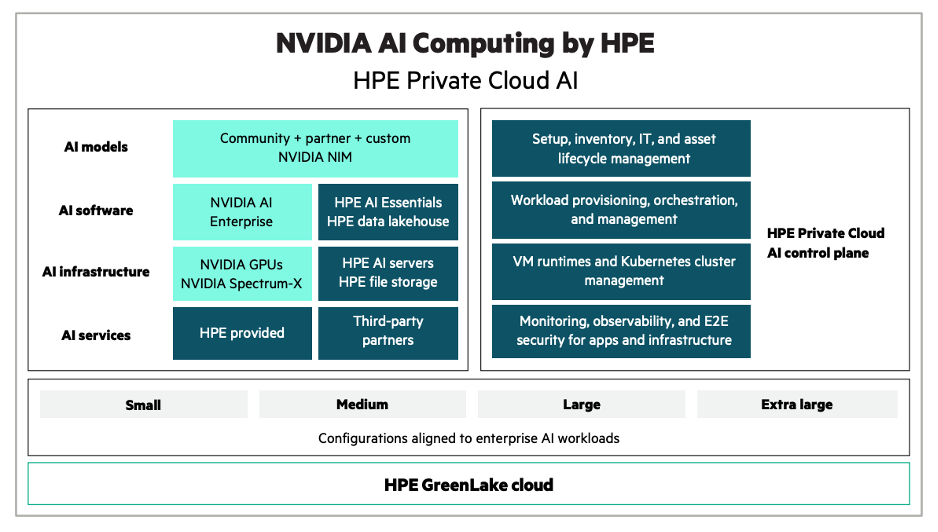

Research Note: HPE’s New Full-Stack Enterprise AI Infrastructure Offerings

At the recent NVIDIA GTC 2025, Hewlett Packard Enterprise (HPE) and NVIDIA jointly introduced NVIDIA AI Computing by HPE, full-stack AI infrastructure offerings targeting enterprise deployment of generative, agentic, and physical AI workloads.

The solutions span private cloud AI platforms, observability and management software, reference blueprints, AI development environments, and new AI-optimized servers featuring NVIDIA’s Blackwell architecture.

MWC 2025 Playbook: Enterprise IT’s Big AI & 5G Moment

If you blinked, you might have missed the tidal wave of AI, 5G, and cloud announcements at Mobile World Congress 2025. But don’t worry—we’ve got the cheat sheet.

Liquid Cooling is Front & Center at GTC 2025

One thing was clear at the just-wrapped NVIDIA GTC event: the race to cool the next generation of HPC and AI systems is intensifying.

Let’s take a quick look at some of our favorite announcements.

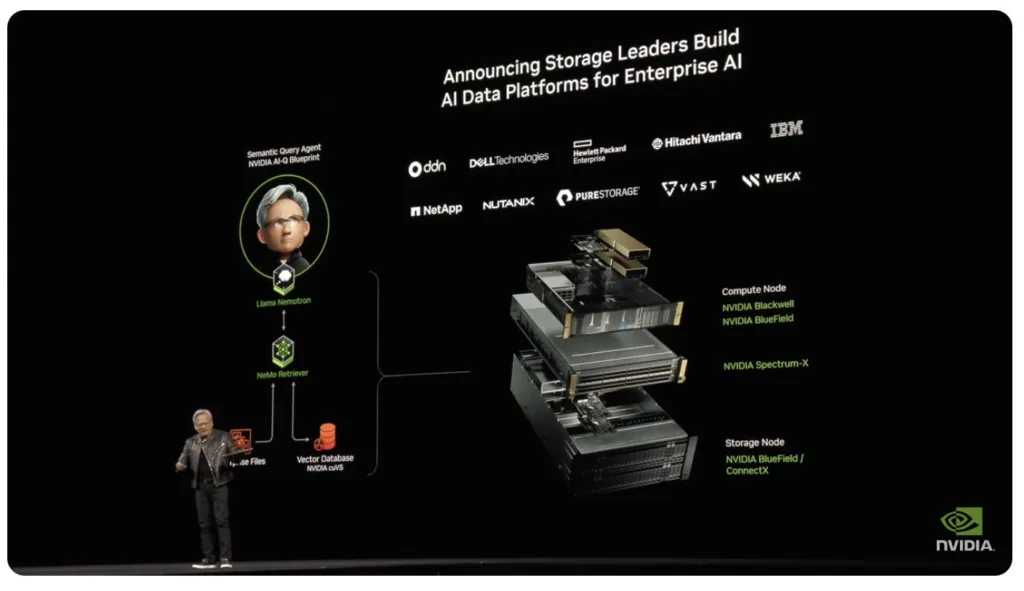

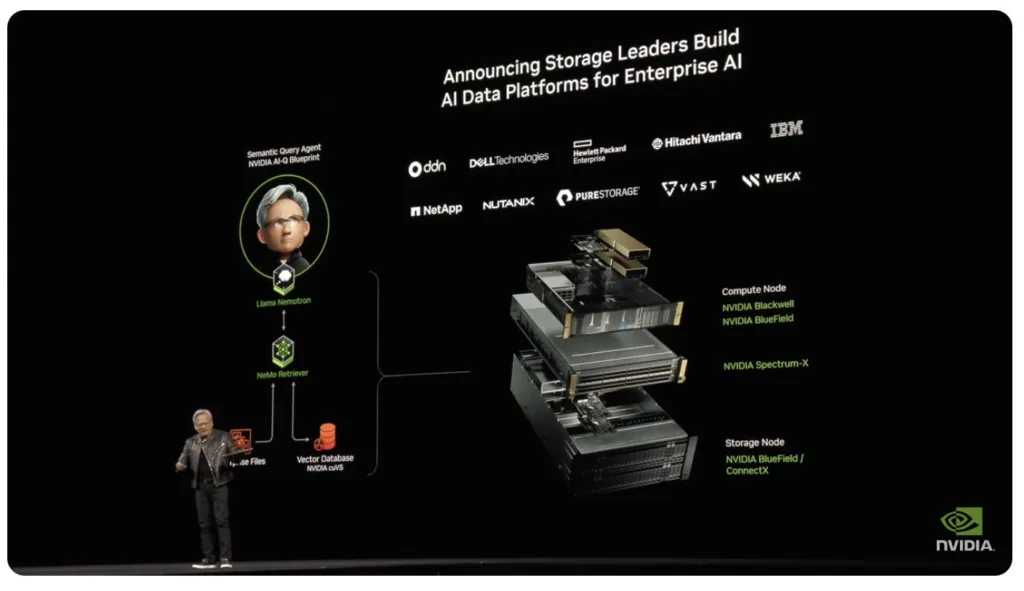

Research Note: NVIDIA AI Storage Certifications & AI Data Platform

At its annual GTC event in San Jose, NVIDIA announced the expansion of its NVIDIA-Certified Systems program to include enterprise storage certification, along with the new NVIDIA AI Data Platform reference design, to help streamline AI factory deployments.

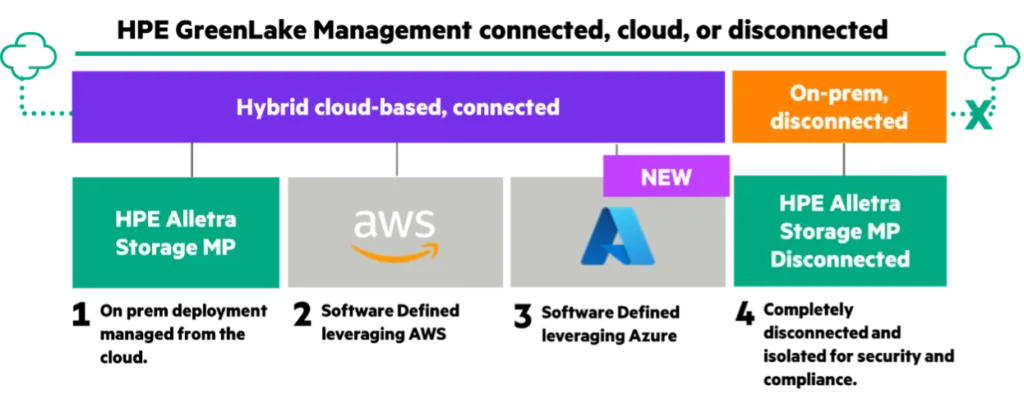

Research Note: HPE Storage Enhancements for AI

At NVIDIA GTC 2025, Hewlett Packard Enterprise (HPE) announced a slew of new storage capabilities, including a new unified data layer. These capabilities are designed to accelerate AI adoption by integrating structured and unstructured data across multi-vendor and multi-cloud environments.

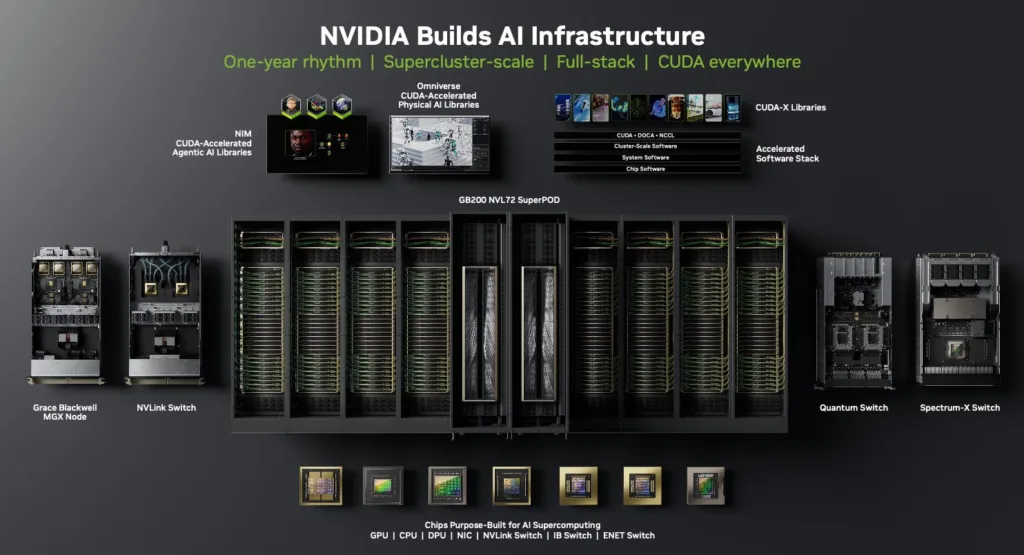

NVIDIA GTC 2025: The Super Bowl of AI

If you thought AI was already moving fast, buckle up: Jensen Huang just threw more fuel on the fire. NVIDIA’s GTC 2025 keynote wasn’t just about new GPUs; it was a full-scale vision of computing’s future, one where AI isn’t just a tool but the foundation of everything.

Let’s look at what Jensen talked about during his 2+ hour keynote.

CoreWeave’s Wild Ride Towards IPO

CoreWeave, the AI-focused cloud provider that was early in catching and riding the generative AI boom, is officially gunning for the big leagues. The NVIDIA-backed company has filed for an IPO, looking to capitalize on the insatiable demand for AI compute.

That’s big news for the AI infrastructure world, where CoreWeave has rapidly positioned itself as a major player, taking on the likes of Amazon, Google, and Microsoft.

But before you start picturing ringing bells on Wall Street and champagne toasts, there’s more to the story. A lot more.

Enterprise Infrastructure Tracker: March 2025

We’re barely two months into the new year and already the enterprise infrastructure market is heating up.

Here’s what caught our attention over the past month:

NAND Insider Newsletter: March 3, 2025

Every week NAND Research puts out a newsletter for our industry customers taking a look at what’s driving the week, and what happened last week that caught our attention. Below is an excerpt from this week’s edition, March 3, 2025.

Research Note: NVIDIA & Cisco Partner on Spectrum-X

Cisco and NVIDIA announced an expanded partnership to unify AI data center networking by integrating Cisco Silicon One with NVIDIA Spectrum-X.

The companies will create a joint architecture that supports high-performance, low-latency AI workloads across enterprise and cloud environments.

Infrastructure News Roundup: January 2025

January isn’t usually a big month for announcements related to enterprise infrastructure, but then this isn’t a normal January. Let’s look at what happened.

Research Note: DeepSeek’s Impact on IT Infrastructure Market

Chinese AI startup DeepSeek recently introduced an AI model, DeepSeek-R1, which the company claims matches or surpasses models from established industry leaders. The move created significant buzz in the AI industry. Though the claims remain unverified, the potential to democratize AI training and fundamentally alter industry dynamics is clear.

Research Note: The Stargate Project

The Stargate Project, announced at a political event on January 21, 2025, is a joint venture between OpenAI, SoftBank, Oracle, and MGX that will invest up to $500 billion by 2030 to develop AI infrastructure across the United States.

NAND Insider Newsletter: January 21, 2025

Every week NAND Research puts out a newsletter for our industry customers. Below is an excerpt from this week’s edition.

Quick Take: CoreWeave & IBM Partner on NVIDIA Grace Blackwell Supercomputing

IBM and CoreWeave, a leading specialty AI cloud provider, together announced a collaboration to deliver one of the first NVIDIA GB200 Grace Blackwell Superchip-enabled AI supercomputers to market.

Research Note: UALink Consortium Expands Board, adds Apple, Alibaba Cloud & Synopsys

The Ultra Accelerator Link Consortium (UALink), an industry organization taking a collaborative approach to advance high-speed interconnect standards for next-generation AI workloads, announced an expansion to its Board of Directors, welcoming Alibaba Cloud, Apple, and Synopsys – joining existing member companies like AMD, AWS, Cisco, Google, HPE, Intel, Meta, and Microsoft.

NAND Insider Newsletter: January 12, 2025

Every week NAND Research puts out a newsletter for our industry customers. Below is an excerpt from this week’s edition.

CES 2025: Enterprise Tech Was There Too

Oh my, CES 2025 has taken me on one heck of a ride through the tech universe. I am an enterprise IT guy, but I must admit the non-IT tech at CES had me fully distracted: automated lawnmowers, high-tech indoor garden planters, and my favorite, an ultra-realistic flight simulator. Wowzers, really neat stuff.

CES 2025: Must-See Tech

Can you believe it? The Consumer Electronics Show, aka CES, is just days away. I know, the timing is hard for all of us considering there hasn’t been much time to recover from our New Year’s festivities. No rest for the weary as we head out to Vegas for the big event.

The show is always full of surprises, so stay tuned next week for lots of announcements to hit the wire. In the meantime, I have a few thoughts to share on what I will be looking for at the show.

Understanding AI Data Types: The Foundation of Efficient AI Models

AI datatypes aren’t just a technical detail; they’re a critical factor that affects performance, accuracy, power efficiency, and even the feasibility of deploying AI models.

Understanding the datatypes used in AI isn’t just for hands-on practitioners: published benchmarks and other performance numbers are often broken out by datatype (just look at an NVIDIA GPU data sheet). What does it all mean?
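As a rough illustration of why datatype matters, the sketch below estimates the memory needed just to hold a model’s weights at different precisions. The 7-billion-parameter model size is a hypothetical example, and the estimate deliberately ignores activations, KV cache, optimizer state, and the scale factors that quantized formats typically carry, so treat the numbers as a lower bound rather than a sizing guide.

```python
# Storage width in bits for common AI datatypes.
BITS_PER_VALUE = {
    "FP32": 32,
    "FP16": 16,
    "BF16": 16,
    "FP8": 8,
    "INT8": 8,
    "INT4": 4,
}


def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Approximate memory (GiB) needed to store num_params weights in dtype.

    Lower-bound estimate: excludes activations, KV cache, optimizer state,
    and quantization metadata such as per-block scale factors.
    """
    bits = BITS_PER_VALUE[dtype]
    total_bytes = num_params * bits / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    params = 7_000_000_000  # hypothetical 7B-parameter model
    for dtype in BITS_PER_VALUE:
        print(f"{dtype:>5}: {weight_memory_gib(params, dtype):6.2f} GiB")
```

Halving the bits per value halves the weight footprint, which helps explain why lower-precision formats such as FP8 and INT4 figure so prominently in inference performance numbers.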

Research Note: AWS Trainium2

Trainium is AWS’s machine learning accelerator, and this week at its re:Invent event in Las Vegas, AWS announced the second generation, the cleverly named Trainium2, purpose-built to enhance the training of large-scale AI models, including foundation models and large language models.

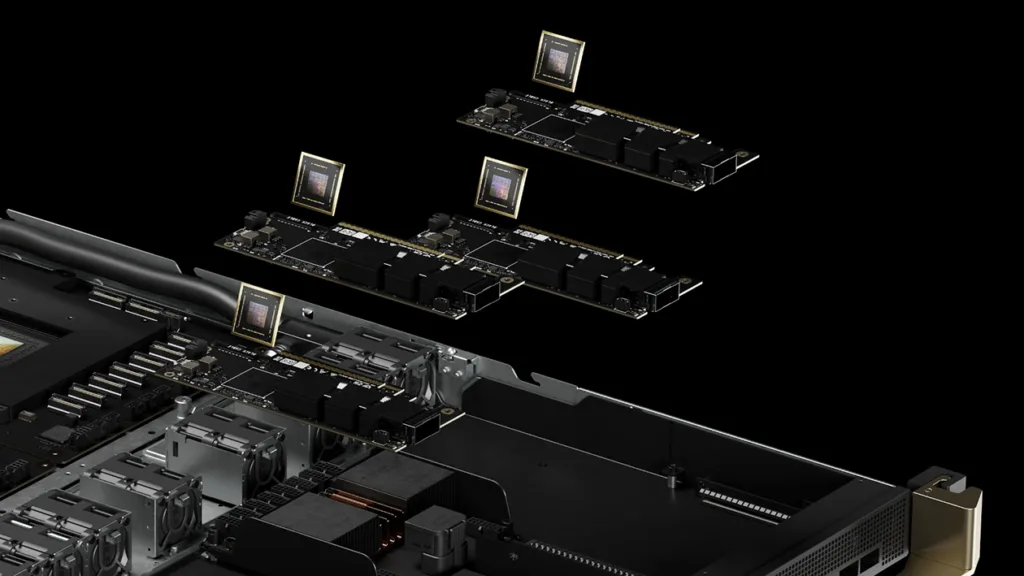

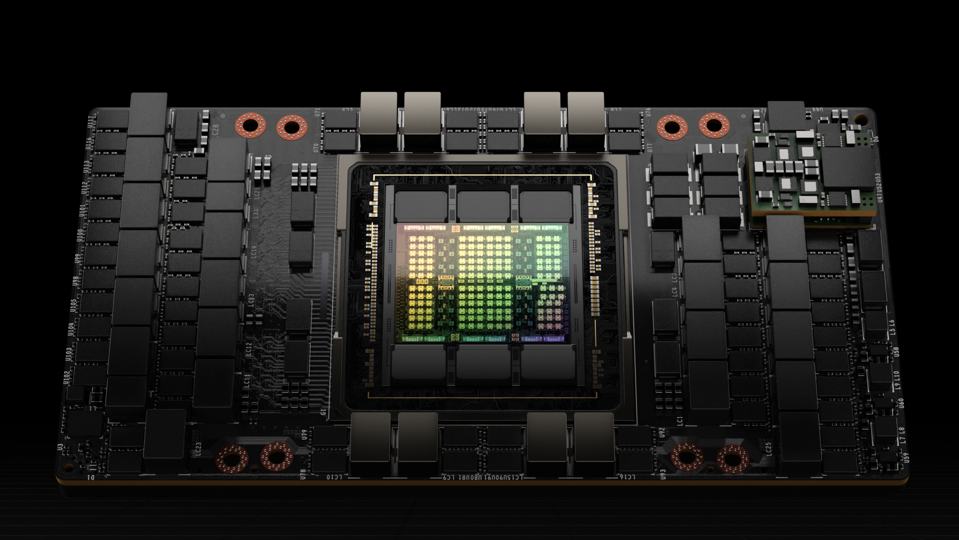

Research Note: NVIDIA SC24 Announcements

At the recent Supercomputing 2024 (SC24) conference in Atlanta, NVIDIA announced new hardware and software to advance AI and HPC workloads. This includes the new GB200 NVL4 Superchip, the general availability of its H200 NVL PCIe GPU, and several new software capabilities.

IT Infrastructure Round-Up: Emerging Trends in Infrastructure and Connectivity (Nov 2024)

November was a busy month of announcements in infrastructure and connectivity, revealing a convergence of innovation to meet the stringent demands of AI-driven workloads, scalability, and cost efficiency.

SC24: Shaping the Future of IT with High-Performance Computing and AI

Last week’s Supercomputing 2024 (SC24) conference in Atlanta brought together IT leaders, researchers, and industry innovators to unveil advancements in HPC and AI, with even a little quantum computing thrown in.

NAND Insider Newsletter: Week Ending Nov 17, 2024

Every week we send a newsletter to our list of tech industry insiders. Here’s an excerpt of what we shared for the week ending November 17.

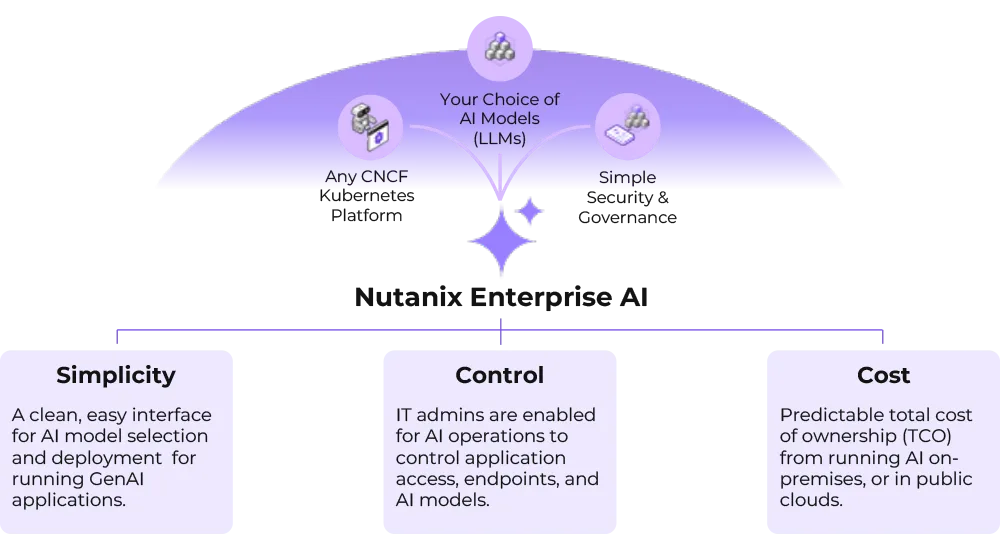

Research Note: Nutanix Enterprise AI

Nutanix recently introduced Nutanix Enterprise AI, its new cloud-native infrastructure platform that streamlines the deployment and operation of AI workloads across various environments, including edge locations, private data centers, and public cloud services like AWS, Azure, and Google Cloud.

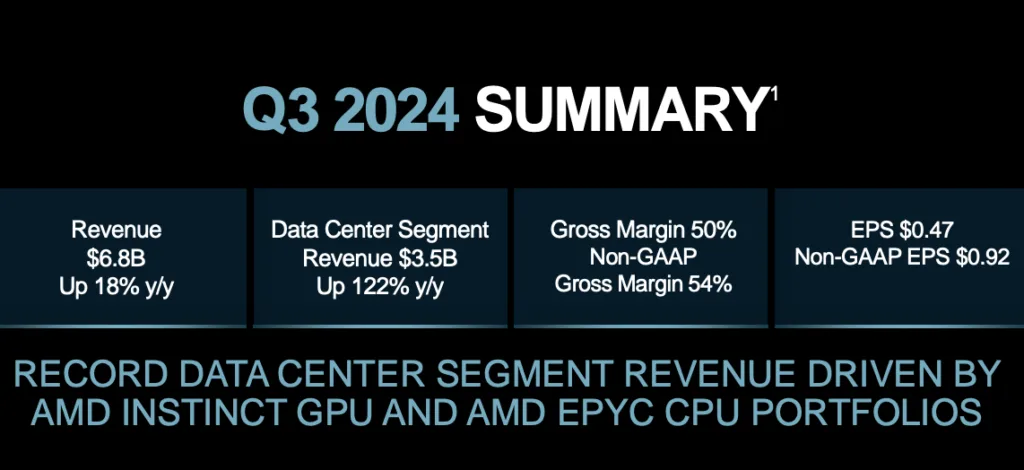

Quick Take: AMD Data Center Group Earnings – Q3 2024

AMD this week announced significant revenue and earnings growth in Q3 2024, driven primarily by exceptional performance in the Data Center segment. AMD’s Data Center revenue increased by 122% year-over-year, reaching a record $3.5 billion and marking over half of AMD’s total revenue this quarter. CEO Lisa Su, on the earnings call, attributed this growth to the success of the EPYC CPUs and MI300X GPUs, which experienced strong adoption across cloud, enterprise, and AI applications.

Research Note: WEKA’s New WEKApod Nitro & Prime

WEKA this week expanded its footprint in the AI data infrastructure space with the release of two new data platform appliances designed to meet diverse AI deployment needs.

The new products, WEKApod Nitro and WEKApod Prime, are WEKA’s latest offerings for high-performance data solutions that support accelerated AI model training, high-throughput workloads, and enterprise AI demands. The new solutions address the rapid growth of generative AI, LLMs, and RAG and fine-tuning pipelines across industries.

OCP Global Summit 2024: Key Announcements

At the recent OCP Global Summit 2024, the organization unveiled several major initiatives highlighting OCP’s commitment to driving innovation and fostering collaboration in the tech ecosystem. This includes expanding its AI Strategic Initiative with contributions from NVIDIA and Meta, new alliances for sustainability and standardization, and the launch of an open chiplet marketplace.

MWC Las Vegas 2024: 5G Needs the Enterprise

Compared with MWC Las Vegas 2023, where AI was the central theme, this year’s event made it clear that enterprise 5G has now taken center stage. The shift builds on the momentum created by AI’s integration into various technologies and underscores the importance of low-latency, highly managed private 5G networks for successful edge AI deployments.

Quick Take: Cisco’s Investment in CoreWeave and its AI Infrastructure Strategy

Cisco is reportedly on the verge of investing in CoreWeave, one of the hottest neo-cloud providers specializing in AI infrastructure, in a transaction valuing the GPU cloud provider at $23 billion. CoreWeave has rapidly scaled its AI capabilities by utilizing NVIDIA GPUs for data centers, becoming a key player in the AI infrastructure market.

Research Note: VAST Data’s New AI Updates & Partnerships

VAST made a wide-ranging set of AI-focused announcements that extend its already impressive feature set to directly address the needs of enterprise AI. Beyond the new features, VAST also highlighted new strategic relationships and a new user community that help bring VAST technology into the enterprise.

JFrog Introduces Comprehensive Runtime Security Solution & Nvidia Integration

Announced this week at its annual swampUp event, the new JFrog Runtime is a robust runtime security solution that offers end-to-end protection for applications throughout their lifecycle. Alongside this launch, JFrog also revealed a new product integration with NVIDIA, which will enable users to secure and manage AI models more effectively using NVIDIA’s AI infrastructure.

Research Note: NVIDIA NIM Agent Blueprints

NVIDIA launched its new NIM Agent Blueprints, a catalog of pre-trained, customizable AI workflows to help enterprise developers quickly build and deploy generative AI applications for critical use cases, such as customer service, drug discovery, and data extraction from PDFs.

Research Note: AMD Acquires ZT Systems

AMD announced its strategic acquisition of ZT Systems, a specialty provider of AI and general-purpose compute infrastructure for major hyperscale companies, in a deal valued at $4.9 billion. The acquisition aligns with AMD’s AI strategy to enhance its capabilities in AI training and inferencing solutions for data centers.

Research Note: NVIDIA AI Foundry & NIM Inference Microservices

NVIDIA announced its new NVIDIA AI Foundry, a service designed to supercharge generative AI capabilities for enterprises using Meta’s just-released Llama 3.1 models, along with its new NIM Inference microservices.

The new offerings significantly advance the ability to customize and deploy AI models for domain-specific applications.

Research Note: Mistral NeMo 12B Small Language Model

Mistral AI and NVIDIA launched Mistral NeMo 12B, a state-of-the-art language model for enterprise applications such as chatbots, multilingual tasks, coding, and summarization. The collaboration combines Mistral AI’s training data expertise with NVIDIA’s optimized hardware and software ecosystem, offering high performance across diverse applications.

Research Note: AMD Acquires Silo AI

AMD announced a definitive agreement to acquire Silo AI, Europe’s largest private AI lab, for approximately $665 million in an all-cash transaction. This acquisition aligns with AMD’s strategy to deliver end-to-end AI solutions based on open standards, enhancing its partnership with the global AI ecosystem.

Quick Take: VAST Data Platform Achieves NVIDIA Partner Network Certification

VAST Data announced that its VAST Data Platform has been certified as a high-performance storage solution for NVIDIA Partner Network cloud partners. The certification highlights VAST’s position as a leading data platform provider for AI cloud infrastructure and enhances its collaboration with NVIDIA in building next-generation AI factories.

HPE Updates Partner Programs for AI

At HPE Discover 2024, HPE announced an ambitious new AI enablement program in collaboration with NVIDIA to boost profitability and deliver new revenue streams for partners. This program includes enhanced competencies and resources across AI, compute, storage, networking, hybrid cloud, sustainability, and HPE GreenLake offerings.

Research Note: NVIDIA AI Computing by HPE

At its HPE Discover event in Las Vegas, Hewlett Packard Enterprise and NVIDIA announced a collaborative effort to accelerate the adoption of generative AI across enterprises.

The collaboration, branded as NVIDIA AI Computing by HPE, introduces a portfolio of co-developed AI solutions and joint go-to-market integrations to address the complexities and barriers of large-scale AI adoption.

Research Note: UALink Alliance & Accelerator Interconnect Specification

UALink is a new open standard designed to rival NVIDIA’s proprietary NVLink technology. It enables high-speed, direct GPU-to-GPU communication, which is crucial for scaling complex computational tasks across multiple graphics processing units (GPUs) or accelerators within servers or computing pods.

Research Brief: Dell AI Factory

At NVIDIA GTC earlier this year, Dell announced a collaboration with NVIDIA to deliver the Dell AI Factory with NVIDIA that was heavily based on NVIDIA technology. At this year’s Dell Tech World, Dell went further, introducing its own Dell AI Factory, while also updating the Dell AI Factory with NVIDIA.

Quick Take: Sygnia & NVIDIA’s Edge Security Play

Sygnia and NVIDIA announced a strategic collaboration to enhance cybersecurity in the energy and industrial sectors using AI-powered edge solutions. The aim is to directly improve data collection, threat detection, and response capabilities at the edge of industrial and critical infrastructure networks.

Nvidia And Dell Build An AI Factory Together

At last month’s Nvidia GTC conference, Dell Technologies unveiled the Dell AI Factory with Nvidia, a comprehensive set of enterprise AI solutions aimed at simplifying the adoption and integration of AI for businesses.

Dell also announced enhancements to its flagship PowerEdge XE9680 server, including options for liquid-cooling, which allows the server to deliver the full capabilities of Nvidia’s newly announced AI accelerators.

Research Note: NVIDIA H100 Confidential Computing

This week, NVIDIA made the confidential computing capabilities of its flagship Hopper H100 GPU, first previewed in August 2023, generally available. This makes NVIDIA’s H100 the first GPU with these capabilities, which are critical for protecting data while it is being processed.

This Research Note looks at confidential computing and how it works on the NVIDIA H100 GPU.

AWS And Nvidia Expand AI Relationship

At the Nvidia GTC event in San Jose, Nvidia and Amazon Web Services made a series of wide-ranging announcements that showed a broad and strategic collaboration to accelerate AI innovation and infrastructure capabilities globally.

The joint announcements included the introduction of Nvidia Grace Blackwell GPU-based Amazon EC2 instances, Nvidia DGX Cloud integration, and, most critically, a pivotal collaboration called Project Ceiba.

Research Note: Lenovo’s AMD-based AI Portfolio Update

Lenovo announced a comprehensive set of AMD-based updates to its AI infrastructure portfolio, which include GPU-rich and thermal-efficient systems designed for compute-intensive workloads in various industries, including financial services and healthcare.

The new offerings, designed in partnership with AMD, provide the flexibility and scalability required for AI deployments across these industries.

Research Note: NVIDIA Acquires Run:ai

NVIDIA announced an agreement to acquire Run:ai, a startup specializing in Kubernetes-based GPU workload management and orchestration software. The acquisition is part of CEO Jensen Huang’s strategy to diversify Nvidia’s revenue streams beyond chips and into software.

Quick Take: SAP’s New Business AI Capabilities

At the recent NVIDIA GTC event, SAP and NVIDIA announced an expanded partnership to enhance generative AI integration across SAP’s cloud solutions and applications. The collaboration focuses on developing SAP Business AI, which integrates scalable, business-specific generative AI capabilities within various SAP offerings, including SAP Datasphere and SAP BTP.

Quick Take: VAST Data’s Nvidia DPU-Based AI Cloud Architecture

VAST Data recently introduced a new AI cloud architecture based on Nvidia’s BlueField-3 DPU technology. The architecture is designed to improve performance, security, and efficiency for AI data services. The approach seeks to enhance data center operations and introduce a secure, zero-trust environment by integrating storage and database processing into AI servers.

Research Note: MLPerf Inference 4.0 Results

MLCommons released the results of its MLPerf Inference v4.0 benchmarks, which introduced two new workloads, Llama 2 and Stable Diffusion XL.

Since its inception in 2018, MLPerf has established itself as a crucial benchmark in the accelerator market. The benchmarks offer detailed comparisons across a variety of system configurations for specific use cases.

Is NVIDIA Lagging in Lucrative Automotive Segment?

Nvidia’s most recent earnings release is a tremendous achievement for the company, with reported revenue of $22.1 billion, up an incredible 265% year-on-year. Earnings grew an equally unbelievable 765% year-on-year.

Its automotive revenue was $281 million.

Research Note: Arm’s New Automotive Cores

Arm recently launched new safety-enabled AE processors incorporating Armv9 technology and server-class performance. These processors are tailored for AI-driven applications to enhance autonomous driving and advanced driver-assistance systems (ADAS).

Research Note: Dell AI Factory with NVIDIA

At the 2024 NVIDIA GTC conference, Dell Technologies unveiled the Dell AI Factory with Nvidia, a comprehensive set of enterprise AI solutions aimed at simplifying the adoption and integration of AI for businesses.

Dell also announced enhancements to its flagship PowerEdge XE9680 server, including introducing Dell’s first liquid-cooled server solution, which allows the server to deliver the full capabilities of NVIDIA’s newly announced AI accelerators.

Research Note: NVIDIA & AWS’s Broad AI-Focused Collaboration

At the Nvidia GTC event in San Jose, Nvidia and Amazon Web Services made a series of wide-ranging announcements that showed a broad and strategic collaboration to accelerate global AI innovation and infrastructure capabilities.

The joint announcements included the introduction of NVIDIA Grace Blackwell GPU-based Amazon EC2 instances, NVIDIA DGX Cloud integration, and, most critically, a pivotal collaboration called Project Ceiba.

Equinix Launches Fully Managed NVIDIA DGX AI

Equinix launched a fully managed private cloud service to facilitate enterprises’ acquisition and management of NVIDIA DGX AI supercomputing infrastructure. This service is aimed at helping businesses build and run custom generative AI models.

NVIDIA, Wind River & NTT DOCOMO Deliver First GPU-Accelerated 5G vRAN Solution

NVIDIA, along with Japanese telecom provider NTT DOCOMO, recently announced a pioneering GPU-accelerated commercial Open RAN 5G service built on NVIDIA, Fujitsu, and Wind River technology. This marks the world’s first deployment of a GPU-accelerated commercial 5G network by a telecom provider.

Dell Technologies & NVIDIA Collaborate On Full-Stack Generative AI Solutions

Dell & NVIDIA deliver new validated designs for inference systems based on NVIDIA accelerators and software, a professional services offering to help enterprises embrace generative AI, and a new Dell Precision workstation for AI development.

NVIDIA H100 Dominates New MLPerf v3.0 Benchmark Results

To know how a system performs across a range of AI workloads, you look at its MLPerf benchmark numbers. AI is rapidly evolving, with generative AI workloads becoming increasingly prominent, and MLPerf is evolving with the industry. Its new MLPerf Training v3.0 benchmark suite introduces new tests for recommendation engines and large language model (LLM) […]

The Innovative Cooling Approach Behind NVIDIA’s $5M COOLERCHIPS Grant

Background: Cooling a data center was a challenge even before the current AI-driven boom in accelerated computing heated up. Servers run hot, with processor thermal designs reaching 500 watts by 2025. Add GPUs to the mix, some of which approach 700W today, and the problems of power consumption and heat dissipation begin to expand exponentially. […]

NVIDIA Grows Momentum in Public Cloud

NVIDIA lives at the center of the AI revolution. Its GPUs are the most common, and most powerful. Beyond its hardware, NVIDIA is enabling the adoption of AI with software tools that span the gamut from edge inference to autonomous driving to medical imaging. The list truly is limitless.

NVIDIA Updates Data Center Platform Strategy at GTC 2023

It’s become clear that NVIDIA strives to own the entire platform for AI-infused analytics. The time is right, as accelerated AI is fueling a shift in how enterprises derive value from their data and how businesses operate and engage with their customers.

The 2023 Enterprise Storage Agenda

2023 is going to be an exciting year in enterprise storage. Principal Analyst Steve McDowell walks us through his predictions for the storage industry over the coming calendar year.